This week, I worked on making our taps and drags more responsive. Before working on double taps, we felt it was best to improve the performance of our single-tap interactions. Overall, things were fine for our MVP, but there were a few hiccups: taps were sometimes registered as small drags, and drags did not appear very smooth.

Matt K and I came up with a solution where we ignore additional position updates that occur within a small radius around the initial touch. This makes it less likely that we detect a small drag when the user is really trying to tap, and we found that it fixed our first problem. To make drags smoother, we averaged every few position updates before sending a request to the OS. This meant a slower refresh rate, but smoother interpolation of coordinates along a drag. We tinkered with how many position updates should be averaged and found that 5-7 gave the best results.

Overall, I feel I am on schedule, especially since our remaining tasks go beyond our MVP. Although I wanted to have a simple double-tap test done this week, I felt the changes we made were more important to the user experience. Next week, I will work on implementing double taps so that we can hopefully showcase them during our poster session.
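The two changes described above can be sketched roughly as follows. This is a minimal illustration and not our actual code: the class name `TouchFilter`, its methods, and the 10-pixel `tap_radius` are hypothetical, and only the averaging window of 5-7 updates comes from our testing.

```python
from dataclasses import dataclass, field
from math import hypot
from typing import List, Optional, Tuple

Point = Tuple[float, float]


@dataclass
class TouchFilter:
    """Hypothetical filter: tap dead zone plus averaging of drag updates."""

    tap_radius: float = 10.0  # assumed value in pixels, not a measured one
    window: int = 5           # number of updates averaged per OS request
    _origin: Optional[Point] = None
    _dragging: bool = False
    _buffer: List[Point] = field(default_factory=list)

    def touch_down(self, p: Point) -> None:
        """Record the initial touch and reset the drag state."""
        self._origin = p
        self._dragging = False
        self._buffer = []

    def update(self, p: Point) -> Optional[Point]:
        """Return an averaged position to send to the OS, or None to send nothing."""
        if self._origin is None:
            raise RuntimeError("update() called before touch_down()")
        if not self._dragging:
            dx = p[0] - self._origin[0]
            dy = p[1] - self._origin[1]
            # Inside the dead zone: treat the movement as part of a tap.
            if hypot(dx, dy) <= self.tap_radius:
                return None
            self._dragging = True
        self._buffer.append(p)
        if len(self._buffer) < self.window:
            return None
        # Average the buffered positions, then start a fresh window.
        n = len(self._buffer)
        avg = (sum(q[0] for q in self._buffer) / n,
               sum(q[1] for q in self._buffer) / n)
        self._buffer.clear()
        return avg
```

A larger `window` gives smoother drags at the cost of fewer updates per second, which is the trade-off we tuned by hand.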

Matthew Shen’s Status Report for 4/30

This week, we worked mainly on optimizing the software. On the hardware side, I worked on better aligning the LEDs, as misalignment was appearing intermittently. Next week, I plan to take an oscilloscope to each LED to ensure they each exceed the VIL of the muxes. I will also be working to finish our poster and demo video.

We are on schedule as we have completed MVP and are working towards our reach goals now.

Team Status Report for 4/30

This week, we spent most of our time obtaining testing values for our presentation. We felt that this went well, since all of our tests passed. Since we have achieved our MVP, we are not too concerned about obstacles that we may face. Our focus is now on getting multi-finger functionality working, beginning with two-finger zoom. Additionally, we may try to make a frame to improve the appearance of our project. All updates to our schedule were shown during the presentation.

Matthew Kuczynski’s Status Report for 4/30

Since our MVP was achieved last week, I spent the beginning of the week testing our use case requirements and preparing for the final presentation, where I was the speaker. Later in the week, I worked with Darwin on creating a distance threshold for drags and on averaging the position over consecutive data collections where the finger is down, so that fewer update commands are sent and actions like drawing are smoother. Overall, I feel that I am on schedule since our MVP is working and we have completed testing. Next week, I plan to work on the algorithms for two-finger gestures such as zoom.

Final Presentation Slides

Video – Touch TrackIR Preliminary Testing

Darwin Torres’ Status Report for 4/23

This week, I helped the team perform the initial tests of the completed integration of all subsystems. Initially, there wasn’t much I could do related to my subsystem, as I had to wait for the frame to be completed and tested. In the meantime, I worked on the final presentation and report. Once the frame was completed and Matt S and Matt K were able to confirm successful integration of their subsystems, I jumped in to test the integration of my subsystem. As always, a few bugs got in the way, but with Matt K’s help I was able to resolve them. Eventually, we were able to confirm the successful integration of all of our subsystems, allowing us to finally use Matt S’ laptop as if it were a touch screen device. However, for the time being, we are limited to single touch commands. The entire team made a good amount of progress, and I would consider myself on schedule with my tasks. Next week, I will work on adding support for double taps.

Matthew Shen’s Status Report for 4/23

This week, I finalized the hardware. In the picture below, the frame and the Arduino can be seen along with the laptop to be used for the demo. At the moment, we have simply taped the frame onto the screen.

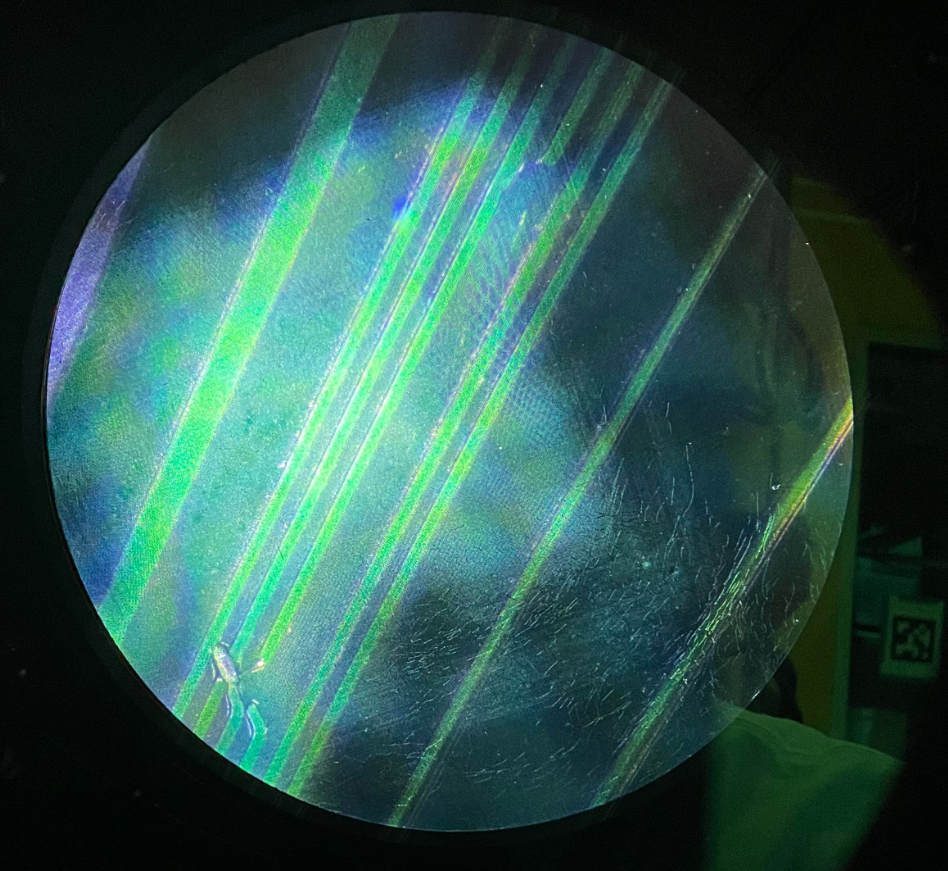

More specifically, I had to work on the long edges this week to complete the frame, as the short edges were tested last week. Unfortunately, after we had already soldered all of our components to the long LED frame, I discovered during testing that JLCPCB had misprinted our board (seen under the microscope below). Fortunately, although there appears to be overlap in the image, there was no short between the wires, so all that was needed was a grueling process of hacking jumper wires between the disconnected nodes. In total, 3 traces needed repair.

I am now officially ahead of schedule, as all of the hardware debugging has been completed. I can now dedicate my focus to helping on the software end. I will mainly work on optimization going forward, as there are several pieces of the code that I know could be accelerated using bit arithmetic. I also may figure out a better way of securing the frame to the laptop than with just tape.

Team Status Report for 4/23

This week, we connected all edges of the frame and spent most of our time debugging the hardware and software, as well as collecting data for measuring our performance against our use-case requirements. Beginning with the hardware, one issue we encountered was a misprint in the bottom PCBs. We were able to hack around this by soldering wires onto the board to fix the broken connection. Before we started testing our software with the frame, Matt S conducted some final debugging and checks to make sure there were no issues with the hardware.

Once the hardware was ready, we attached the frame to Matt S’ laptop and began testing Matt K’s updated Arduino code, which controls the LED/photodiode pairs and sends data about which pairs are activated to the Python backend via USB serial. We encountered some bugs, but after some squashing we were able to confirm that the control signals and output data were correct.

Afterwards, once we confirmed the Arduino subsystem was well integrated with the hardware, we began our first true test of the entire system through a Python script that used the data sent by the Arduino to calculate coordinates and send commands to Darwin’s updated Touch Control subsystem. At first, a few bugs came about due to a small miscommunication about the updated interface, but we were able to fix them quickly. Once we were past that, we finally got our first glimpse of Touch TrackIR in action! Physical finger taps, holds, and drags on the screen were successfully translated to the expected touch inputs in the OS. We were able to click on links, close tabs, move windows, and scroll through webpages. The smoothness of the experience is acceptable, but we still feel there is room for improvement. For example, we can reduce latency by increasing the baud rate, removing print statements, and simplifying the structure of the data used in our coordinate calculations to eliminate unnecessary loops.
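To give a rough idea of the coordinate calculation step, here is a minimal single-touch sketch. It assumes the Arduino data has already been decoded into one list of booleans per axis (True where a finger blocks an LED/photodiode pair); the function names, the data layout, and the screen resolution are hypothetical and do not reflect our actual interface.

```python
from typing import Optional, Sequence, Tuple


def blocked_center(beams: Sequence[bool]) -> Optional[float]:
    """Return the mean index of the blocked beams, or None if none are blocked."""
    blocked = [i for i, is_blocked in enumerate(beams) if is_blocked]
    if not blocked:
        return None
    return sum(blocked) / len(blocked)


def to_screen(x_beams: Sequence[bool], y_beams: Sequence[bool],
              width_px: int, height_px: int) -> Optional[Tuple[int, int]]:
    """Map the blocked beams on each axis to a single pixel coordinate."""
    cx = blocked_center(x_beams)
    cy = blocked_center(y_beams)
    if cx is None or cy is None:
        return None  # no finger detected on at least one axis
    # Adding 0.5 places beam i at the middle of its slice of the screen.
    x = int(round((cx + 0.5) / len(x_beams) * width_px))
    y = int(round((cy + 0.5) / len(y_beams) * height_px))
    return (x, y)


# Hypothetical frame with 10 beams per axis on a 1920x1080 screen.
x_beams = [False] * 10
y_beams = [False] * 10
x_beams[4] = x_beams[5] = True  # finger covers two adjacent vertical beams
y_beams[2] = True               # and one horizontal beam
touch = to_screen(x_beams, y_beams, 1920, 1080)
```

Averaging the blocked indices lets a finger that covers two adjacent beams resolve to a point between them, which gives slightly finer resolution than the beam spacing alone.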

Overall, thanks to the progress this week, we put ourselves perfectly on schedule. Next week, we plan on constructing a cover for our PCB frame and adding support for double taps to allow us to perform “right clicks”.

Matthew Kuczynski’s Status Report for 4/23

This week we worked on integrating our subsystems, so much of my work involved testing and debugging the Arduino and Python serial code that I had finished last week. Previously, I had not been able to test my code because the PCB wasn’t finished, so this week I was finally able to get it working properly. Then, I worked on getting the testing metrics for our requirements so that they could be reported in the final presentation. Since we have our MVP working, I am definitely on track. Next week, I will work on adding features to our project, such as multi-finger functionality, and on improving the speed of the single touch function.