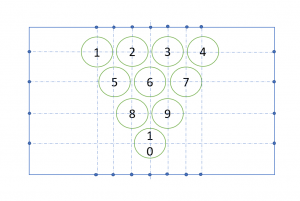

This week I finalized and rigorously tested the cup detection algorithm. With the camera about 3 feet high and angled down about 25 degrees, it can accurately detect the 10 cups in a pyramid formation in under 1 second. It then uses the data from the depth map to project each 2D detection into real-world 3D coordinates. I placed the cups in predefined positions so I could test the accuracy of the generated coordinates, and they were accurate to within a few millimeters. This was a major milestone for this part of the project. I then cleaned up the repository so it is ready for repeated testing and validation of cups in various formations.
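To illustrate the depth-to-3D step, here is a minimal sketch of how a pixel plus its depth value can be back-projected with a standard pinhole camera model, then rotated to undo the camera's downward tilt. This is not the project's actual code: the function names, the intrinsics (`fx`, `fy`, `cx`, `cy`), and the example pixel and depth are hypothetical placeholders, and the real values would come from the camera's own calibration.

```python
import math


def deproject_pixel(u, v, depth_m, fx, fy, cx, cy):
    """Back-project pixel (u, v) with depth in meters into camera-frame XYZ.

    Assumes an ideal pinhole model with no lens distortion.
    Camera frame: x right, y down, z forward along the optical axis.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def camera_to_level(point, tilt_deg):
    """Rotate a camera-frame point about the x-axis to undo a downward tilt.

    The result is in a frame whose z-axis is horizontal and whose y-axis
    points straight down.
    """
    x, y, z = point
    t = math.radians(tilt_deg)
    y_level = y * math.cos(t) + z * math.sin(t)
    z_level = -y * math.sin(t) + z * math.cos(t)
    return (x, y_level, z_level)


# Hypothetical intrinsics for a 640x480 image (placeholders, not measured).
FX, FY, CX, CY = 600.0, 600.0, 320.0, 240.0

# Example: a cup center detected at pixel (400, 300) with 1.2 m of depth.
cup_cam = deproject_pixel(400, 300, 1.2, FX, FY, CX, CY)
cup_level = camera_to_level(cup_cam, 25.0)
```

Comparing `cup_level` against the measured position of a cup placed at a known spot is one way to check accuracy like the test described above.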

Here is a picture of one of my early testing setups:

I also started to put together the first prototype of our housing with the camera on it. Here is a picture of the housing so far:

Creating the calibration map that links the 3D coordinates to specific cups (as seen in last week’s status report) took a little longer than expected. I prioritized getting the cup detection algorithm to our MVP so that testing and demoing would be smooth and robust. Having detection in place will also make the calibration much easier to test as I focus on that part of the project this coming week.