This week I made a lot of progress on the calibration settings for our robot. I decided to separate the calibration flow from the actual gameplay flow to make testing and setup more flexible. The calibration flow detects a rectangular playing mat and identifies the main cup locations by detecting black points within the mat. It then saves the calibration data (the pixel coordinates of each detected point) to a file, which the gameplay program loads at runtime. Here is an example of running the calibration flow on a playing mat image:

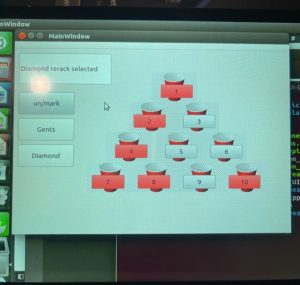

The calibration flow then saves the 2D pixel coordinates of each point, indexed from 0 to 9 for a 10-cup formation. The gameplay flow compares the detected cup pixel coordinates to these calibrated coordinates so that the UI can display the state of the cup formation to the user.
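As a rough illustration of the save, load, and compare steps described above, here is a minimal sketch. The JSON file layout, the function names (`save_calibration`, `load_calibration`, `cup_state`), and the `max_dist` pixel tolerance are all assumptions made for this example, not the actual implementation.

```python
import json
import math
from pathlib import Path


def save_calibration(points, path):
    """Write calibrated points to JSON as {"0": [x, y], "1": [x, y], ...}.

    The file format is an assumption for illustration only.
    """
    data = {str(i): [x, y] for i, (x, y) in enumerate(points)}
    Path(path).write_text(json.dumps(data, indent=2))


def load_calibration(path):
    """Read the calibrated points back as a list ordered by index."""
    data = json.loads(Path(path).read_text())
    return [tuple(data[str(i)]) for i in range(len(data))]


def cup_state(calibrated, detected, max_dist=25.0):
    """For each calibrated position, report whether a detected cup is nearby.

    A cup counts as present at position i if any detected cup center lies
    within max_dist pixels of calibrated point i. The tolerance value is a
    hypothetical placeholder and would need tuning on real images.
    """
    state = []
    for cx, cy in calibrated:
        present = any(
            math.hypot(cx - dx, cy - dy) <= max_dist for dx, dy in detected
        )
        state.append(present)
    return state
```

The calibration program would call `save_calibration` once, and the gameplay program would call `load_calibration` at startup and then `cup_state` on each frame's detections to decide which of the 10 positions to show as occupied in the UI.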

So far I have been using a test image I created for the initial testing of the calibration tool. This week I am getting a full-sized printout of the playing mat to test with.

By the end of this week, the calibration tool should be working end to end with a 10-cup formation. We should be able to calibrate, detect cups, select a cup, physically aim at a cup, and shoot a pong ball (though not necessarily hit the cup yet).