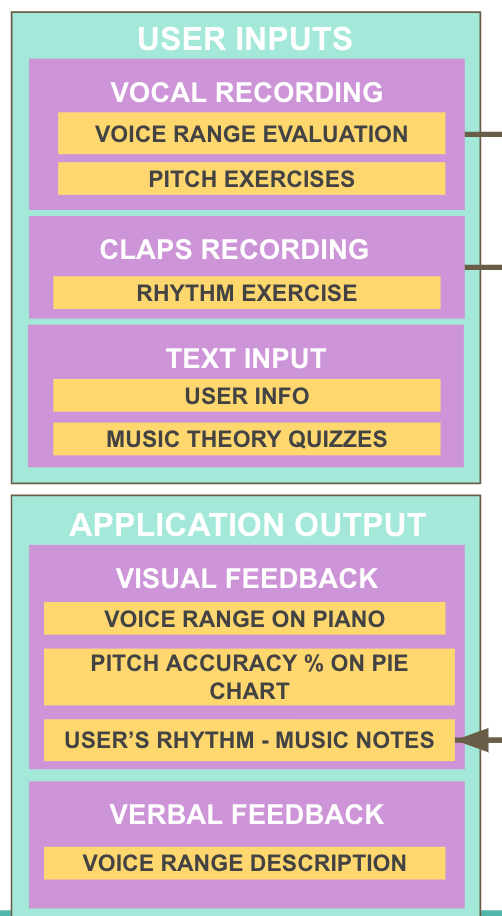

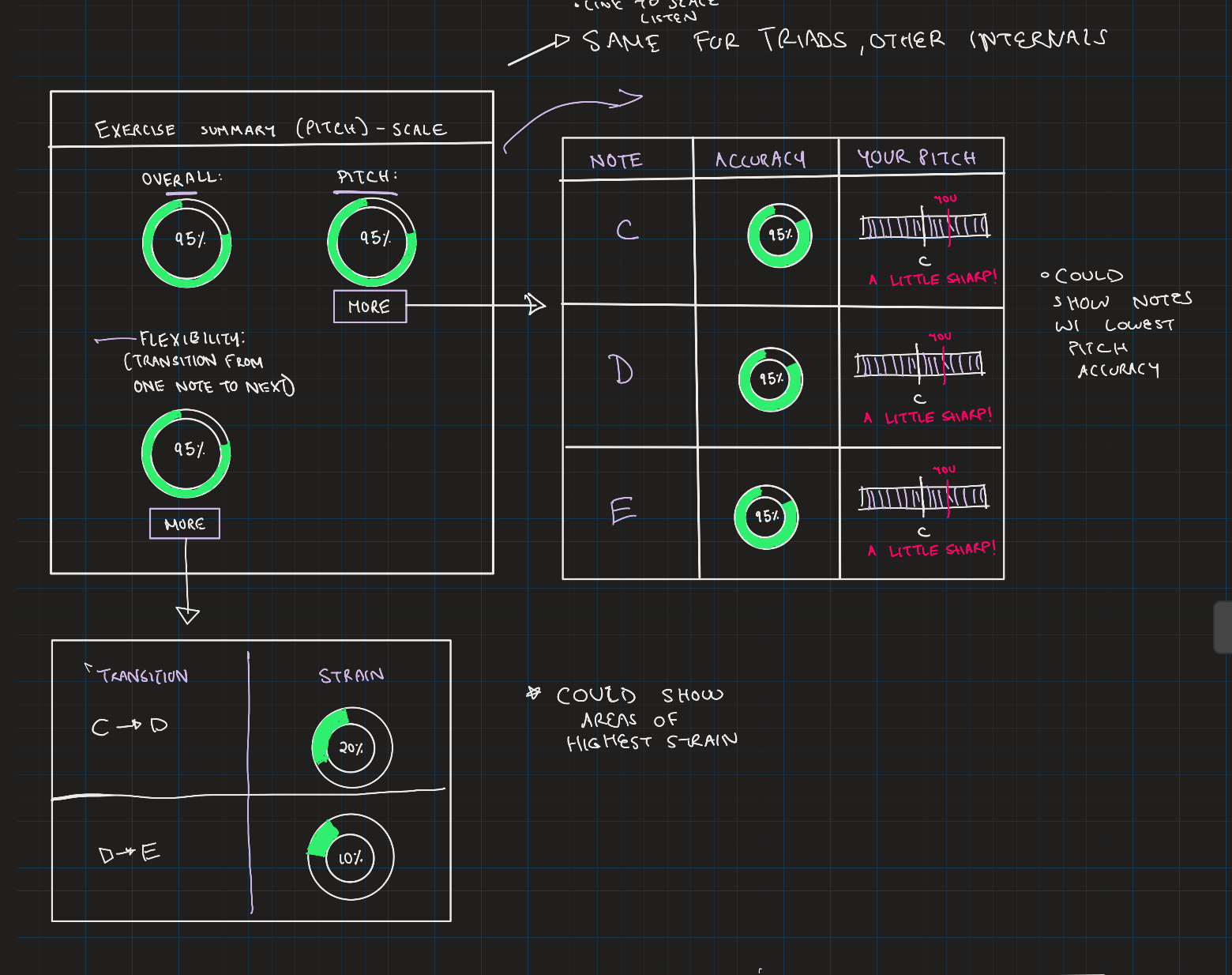

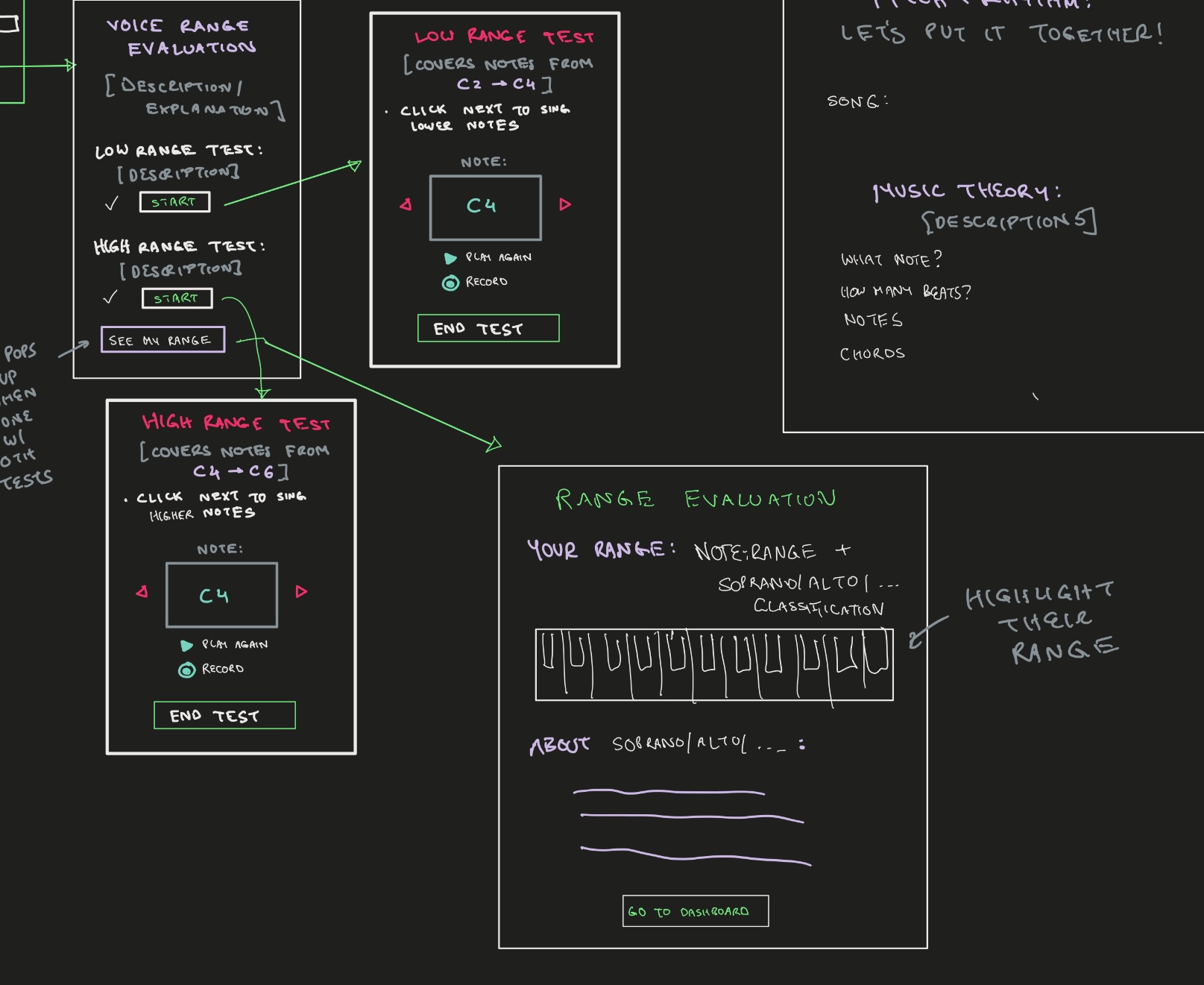

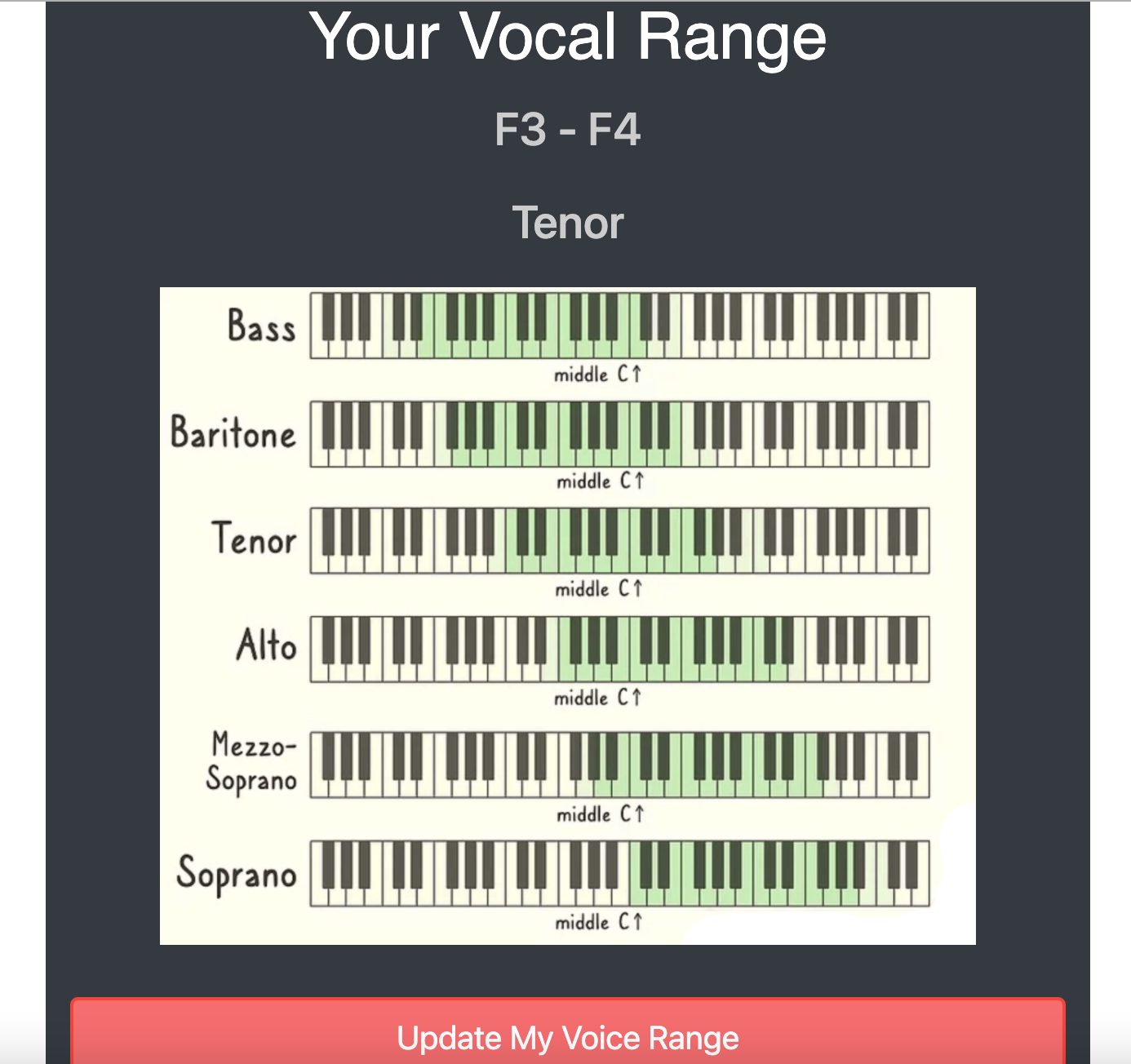

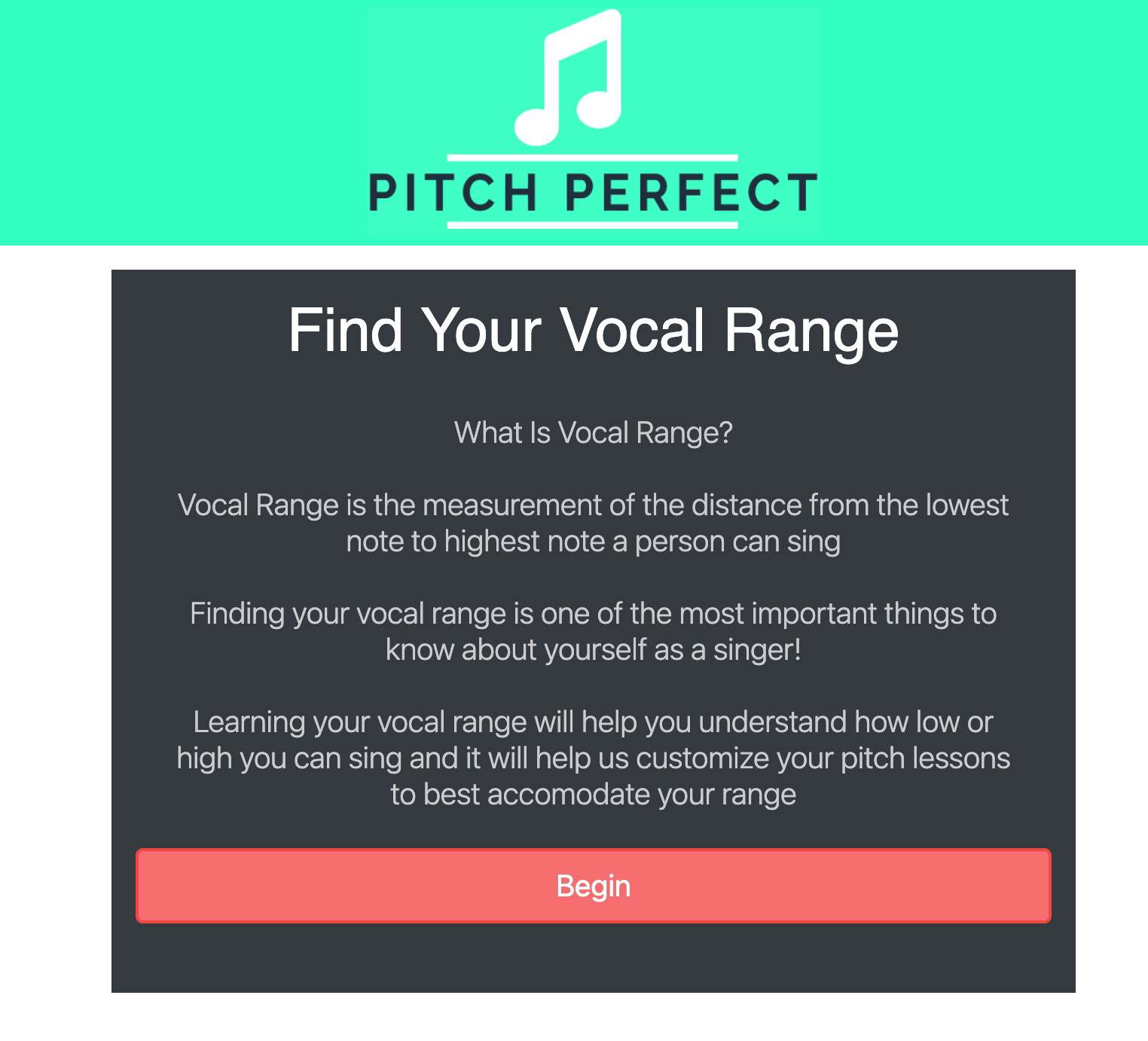

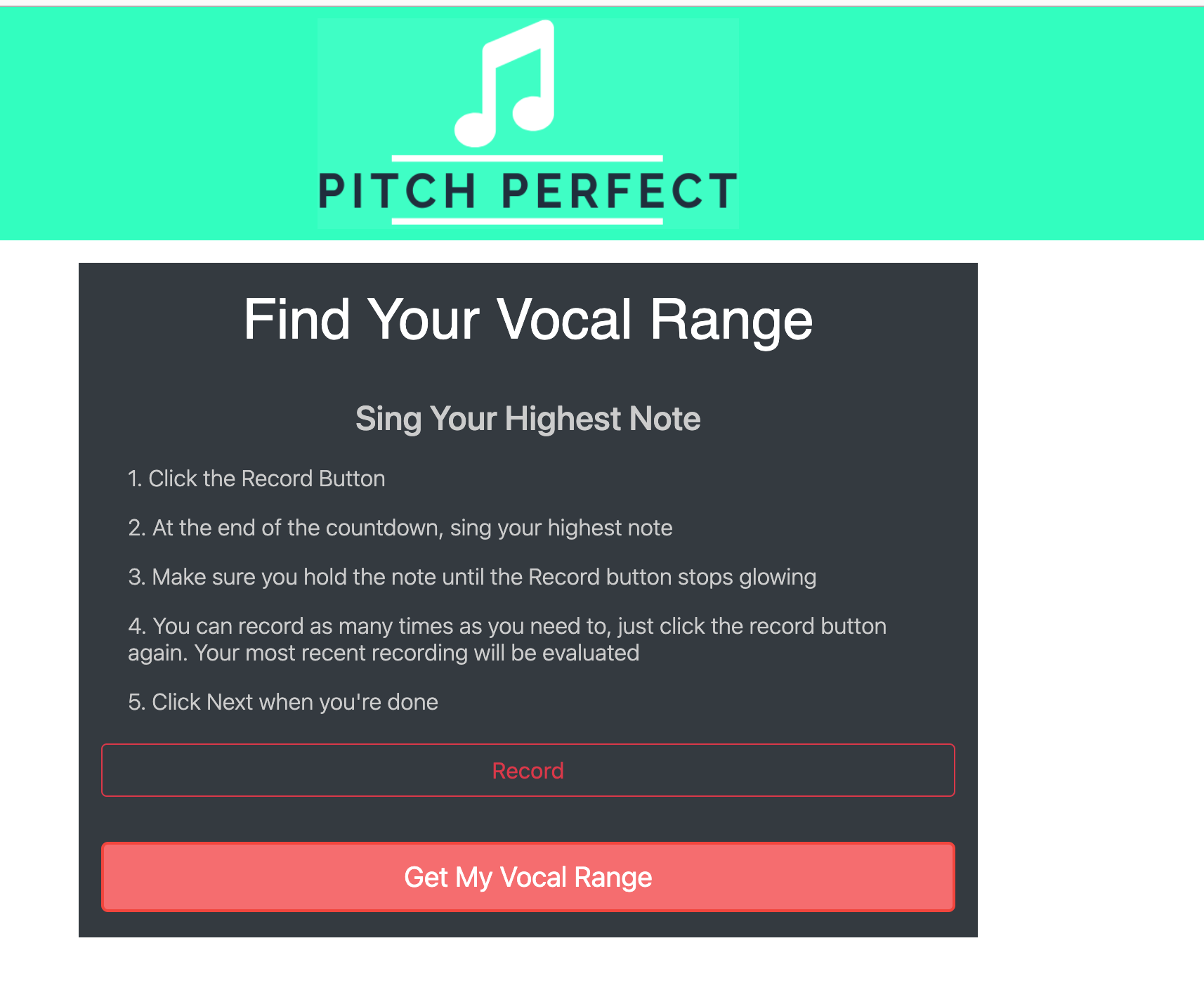

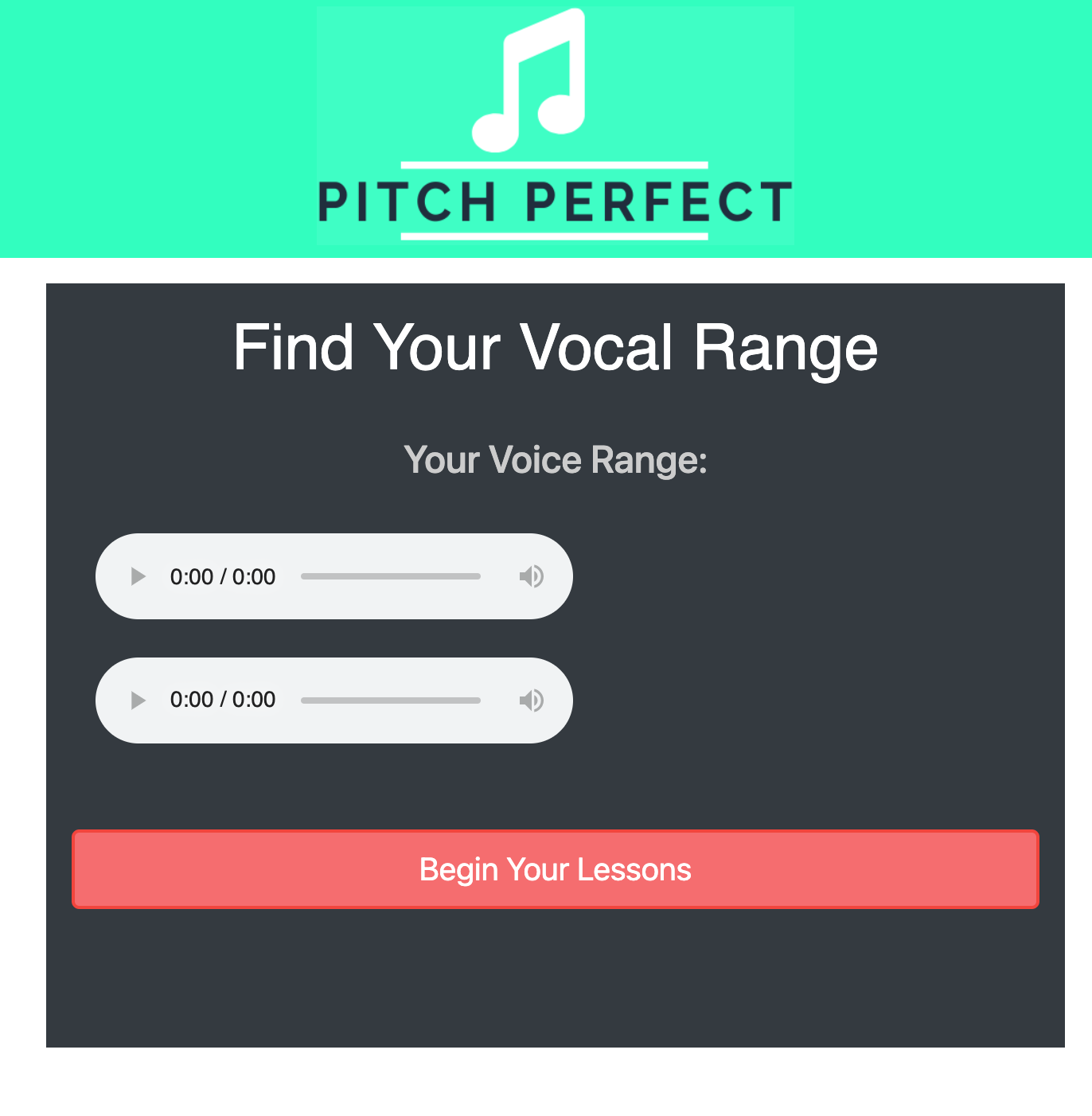

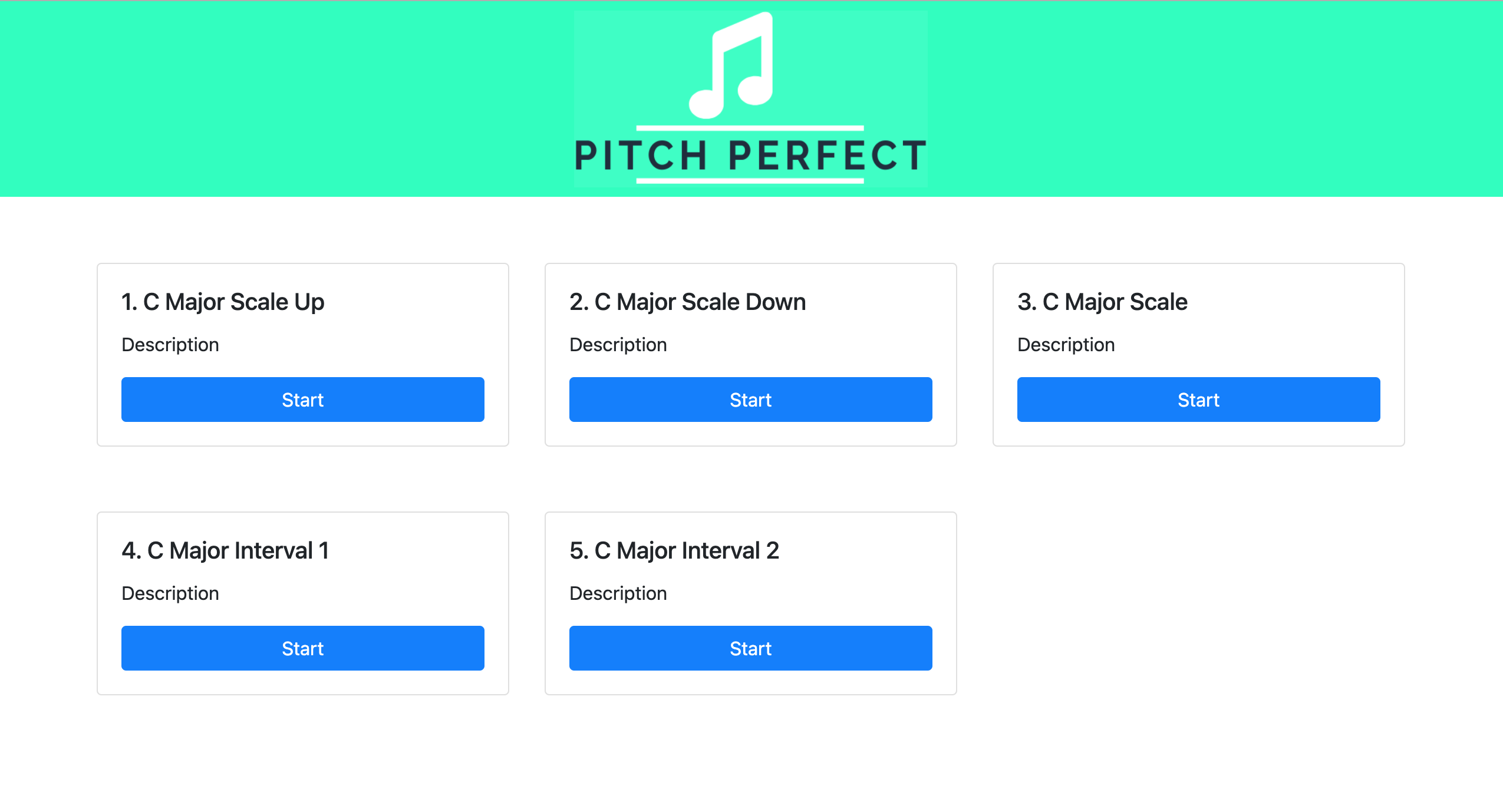

This past week, I integrated Carlos’ pitch detection and feedback algorithm with the user interface. I used the metrics from the algorithm to produce the voice range evaluation. The algorithm outputs a note range, and I wrote code to map that note range to a voice range category (“Soprano”, “Alto”, “Bass”, etc.) to show to the user. I also wrote code that uses this category to generate pitch lessons and to send expectancy metrics to the pitch feedback, so that each user gets a custom lesson and evaluation based on their voice range. I designed the web app so that users can update their voice range at any time. I used the metrics from the pitch detection/feedback algorithm to show how sharp or flat a user was and how well they were able to hold each note, using charts from the Chart.js library. Pictures of this feedback can be found in the team status report. I’ve attached a picture of the example voice range feedback I got for my own voice range.

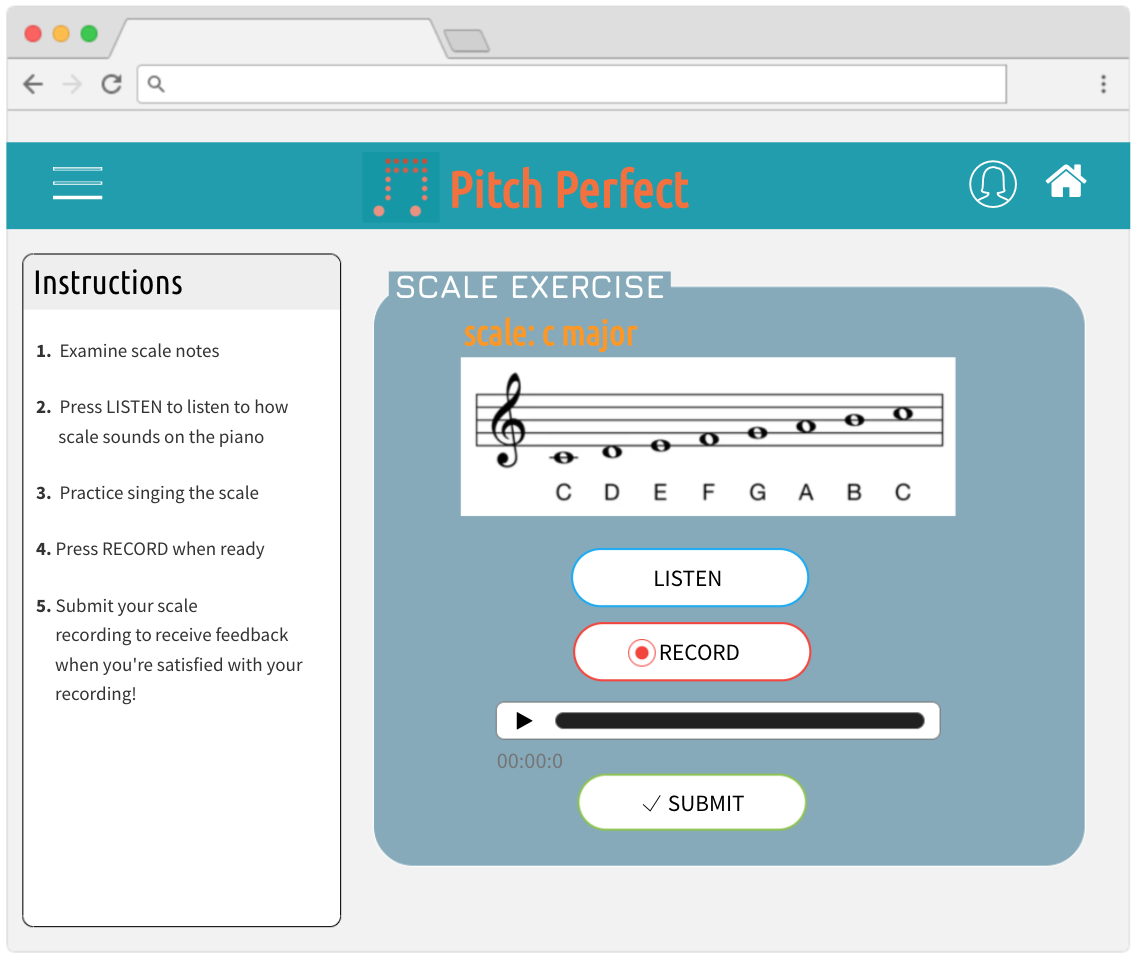

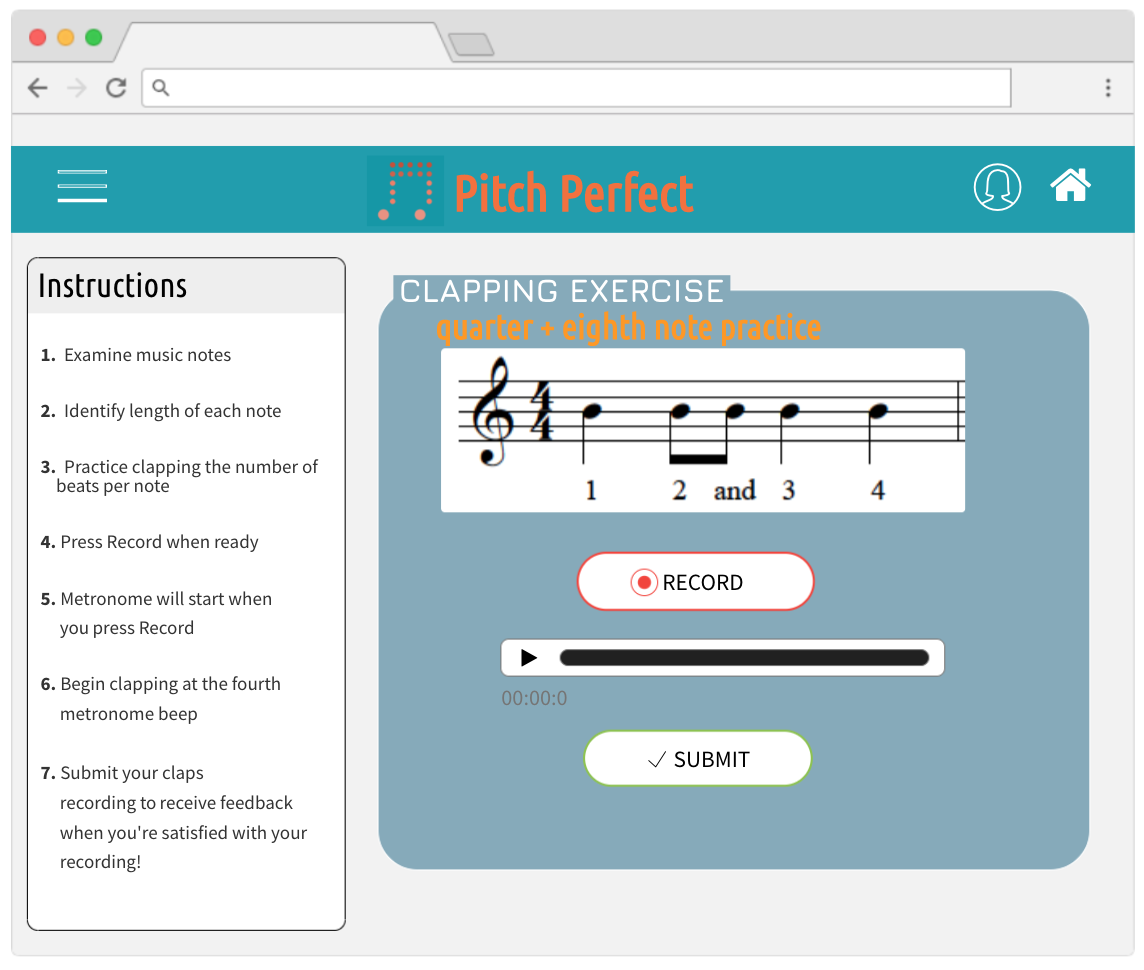

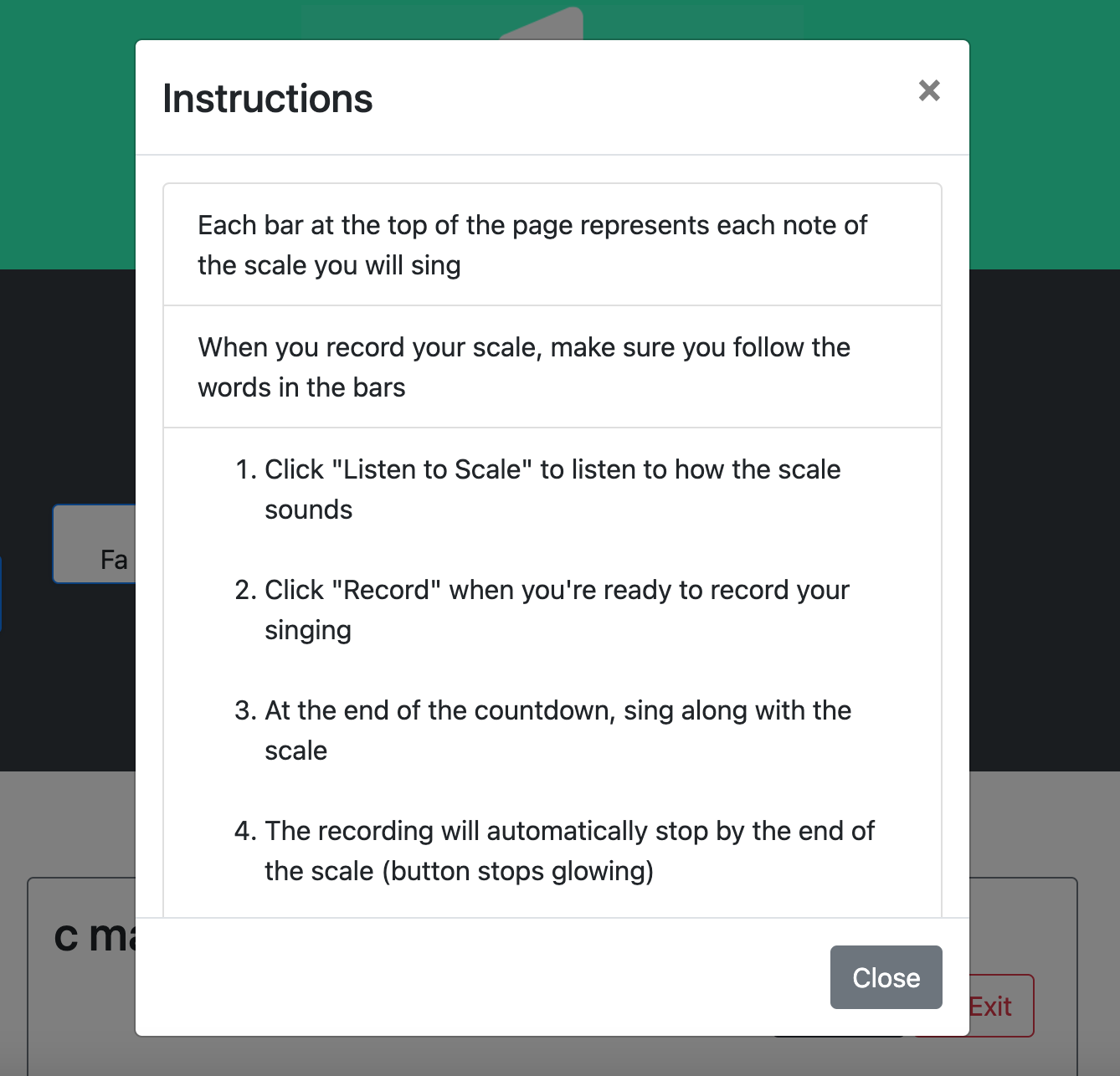

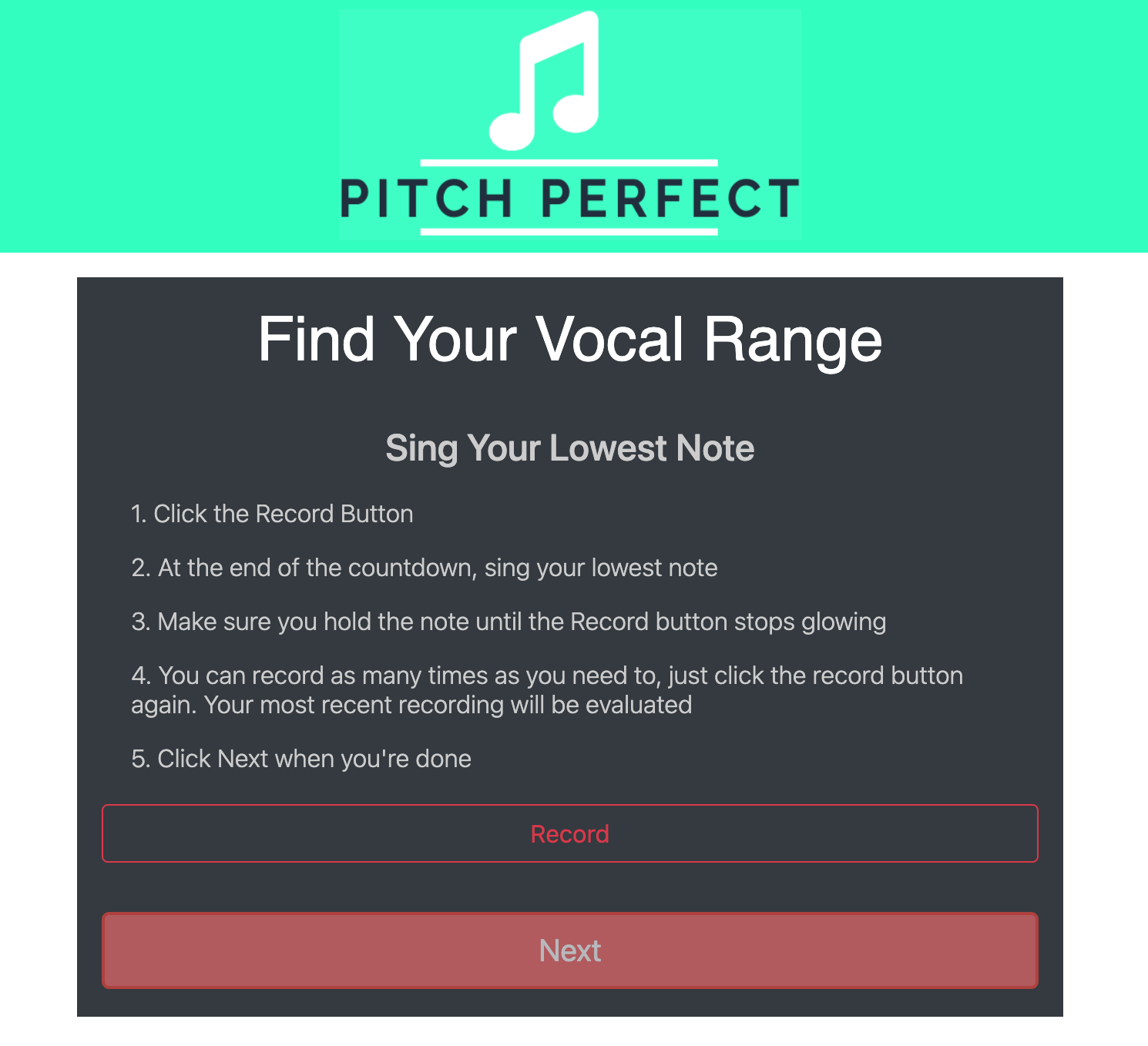

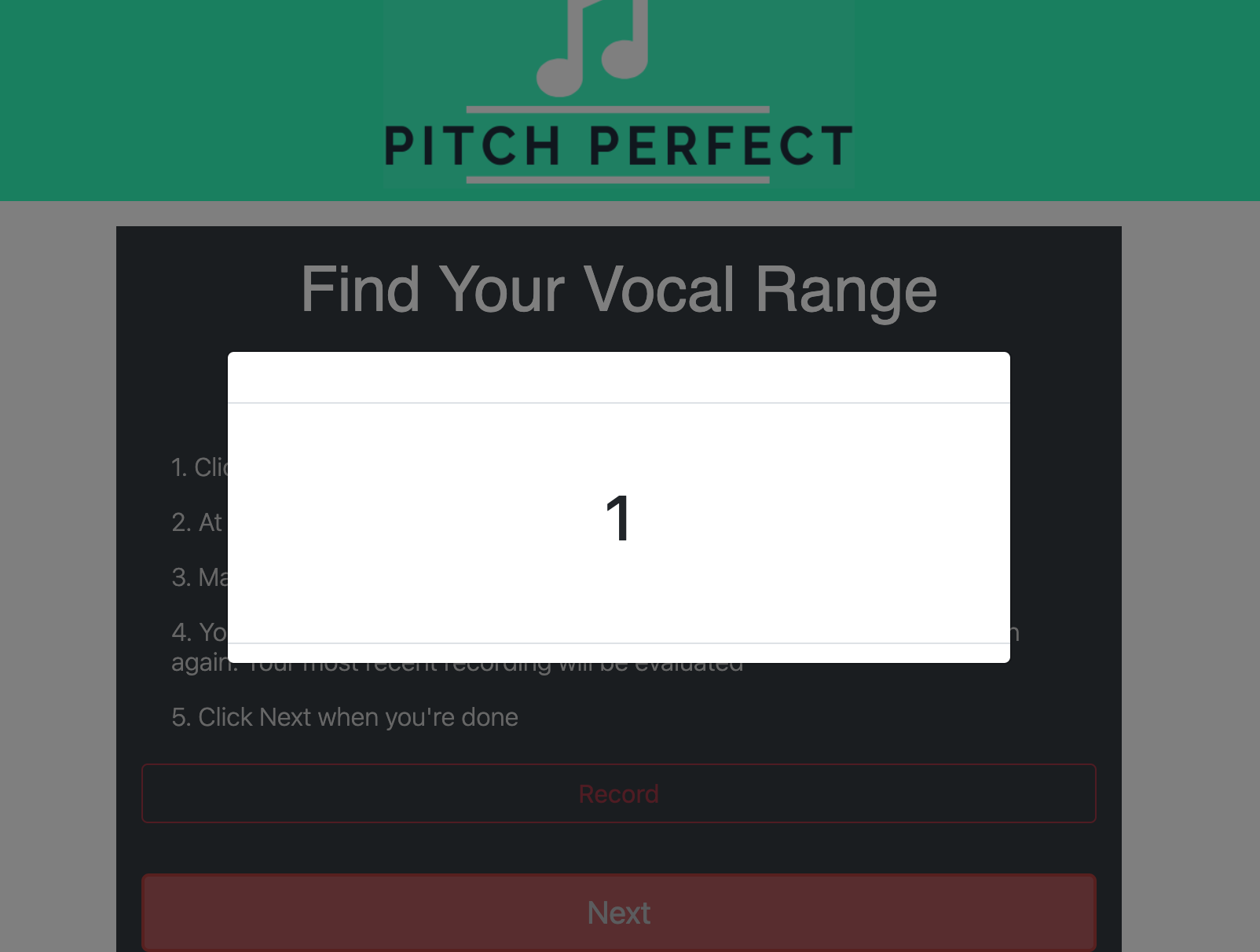

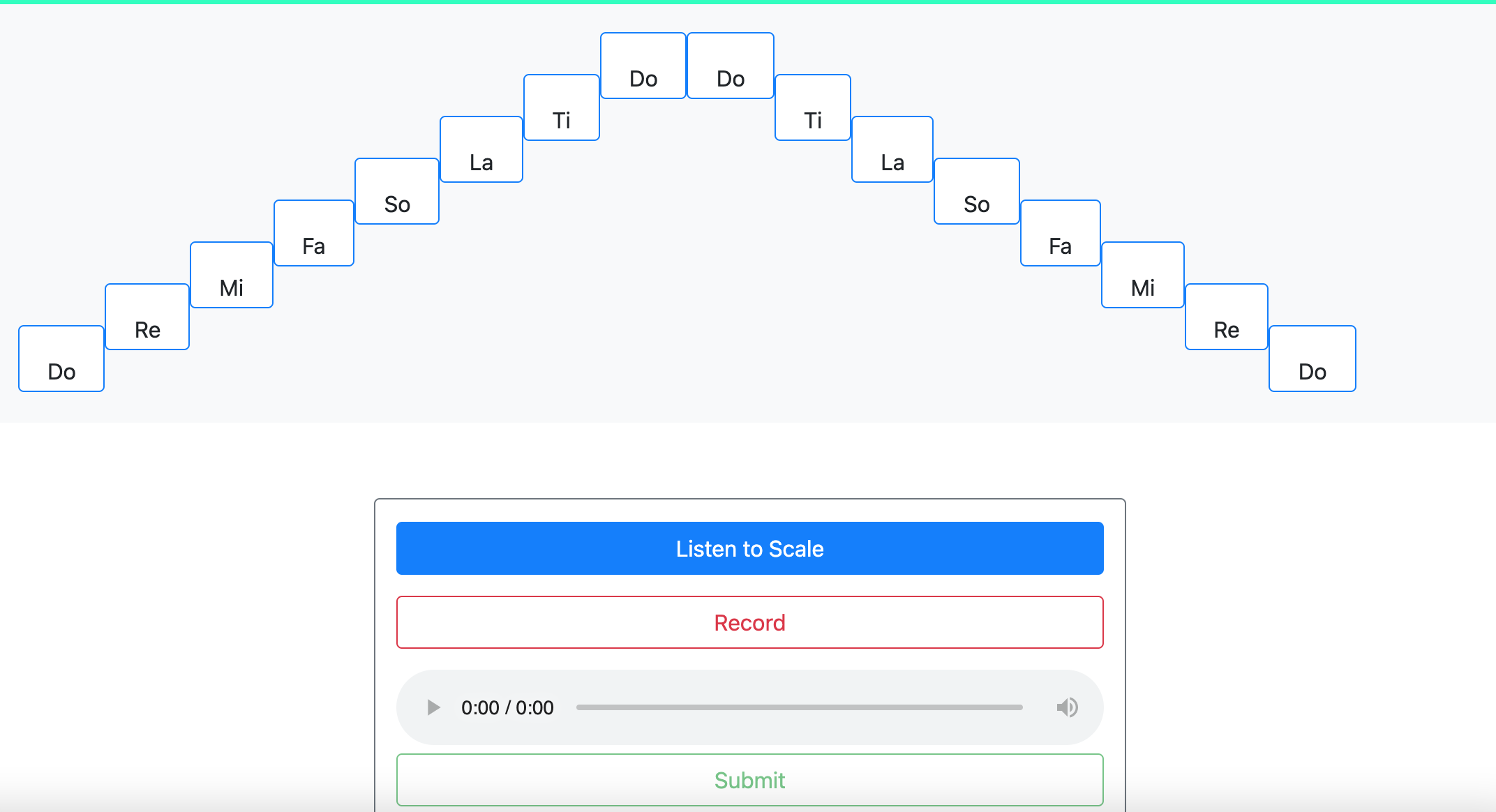
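The mapping from a detected note range to a voice range category, described above, could look roughly like the sketch below. This is not the project's actual code: the function names (`note_to_midi`, `classify_voice_range`), the note-name input format, and the category boundaries (approximate textbook ranges) are all assumptions made for illustration. It picks the category whose range overlaps most with the detected range.

```python
import re

# Semitone offsets of the natural notes within an octave.
NOTE_OFFSETS = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}

# Approximate textbook ranges, assumed for illustration only.
VOICE_RANGES = {
    "Soprano": ("C4", "C6"),
    "Alto": ("F3", "F5"),
    "Tenor": ("C3", "C5"),
    "Bass": ("E2", "E4"),
}


def note_to_midi(note):
    """Convert a note name such as 'A4' or 'C#3' to a MIDI note number."""
    match = re.fullmatch(r"([A-G])([#b]?)(-?\d+)", note)
    if match is None:
        raise ValueError(f"Unrecognized note name: {note!r}")
    letter, accidental, octave = match.groups()
    semitone = NOTE_OFFSETS[letter] + {"#": 1, "b": -1, "": 0}[accidental]
    # MIDI convention: C4 (middle C) is 60, so octave -1 starts at 0.
    return 12 * (int(octave) + 1) + semitone


def classify_voice_range(low_note, high_note):
    """Return the category whose range overlaps most with the detected range."""
    low, high = note_to_midi(low_note), note_to_midi(high_note)
    if low > high:
        raise ValueError("low_note must not be higher than high_note")

    def overlap(bounds):
        range_low, range_high = (note_to_midi(n) for n in bounds)
        # Overlap in semitones; negative when the ranges do not touch.
        return min(high, range_high) - max(low, range_low)

    return max(VOICE_RANGES, key=lambda name: overlap(VOICE_RANGES[name]))
```

For example, `classify_voice_range("E2", "C4")` would return `"Bass"` under these assumed boundaries. The returned category could then be used to choose which notes appear in a lesson.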

Using the feedback I got from the user experience surveys last week, I modified the pitch exercise so that it now has pop-up instructions and more navigation buttons (exit buttons, back buttons, etc.).

The pitch detection algorithm can return empty metrics if the recording is flawed, so I added handling for that case: when the algorithm detects a faulty recording, the app shows an error message and asks the user to try recording again. This applies to both the pitch exercises and the voice range evaluation. It was the best alternative to the page simply crashing.
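A minimal sketch of this kind of guard is shown below, assuming the metrics arrive as a dictionary (or `None`) and that the page template renders whatever the helper returns. The helper name `build_feedback_context`, the message text, and the dictionary keys are hypothetical, not taken from the project.

```python
# Hypothetical message shown when the recording could not be analyzed.
RETRY_MESSAGE = "We couldn't analyze that recording. Please try recording again."


def build_feedback_context(metrics):
    """Build the data handed to the feedback page.

    Returns an error entry instead of metrics when the detection
    algorithm produced nothing usable (None or an empty container).
    """
    if not metrics:
        return {"ok": False, "error": RETRY_MESSAGE}
    return {"ok": True, "metrics": metrics}
```

The same helper could be called from both the pitch exercise and the voice range evaluation, so the retry prompt stays consistent between them.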

I’ve been able to accomplish everything I wanted to put into the web application, and I am on schedule. Right now I am just hoping deployment works out.

For the upcoming week, I’ll keep cleaning up and refining the user interface, adding more instructions and navigation for the exercises, and working on the welcome page.

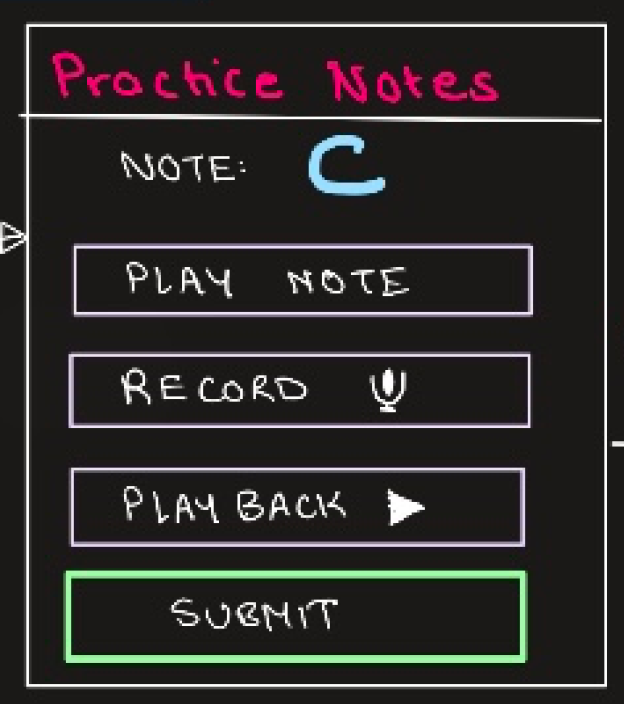

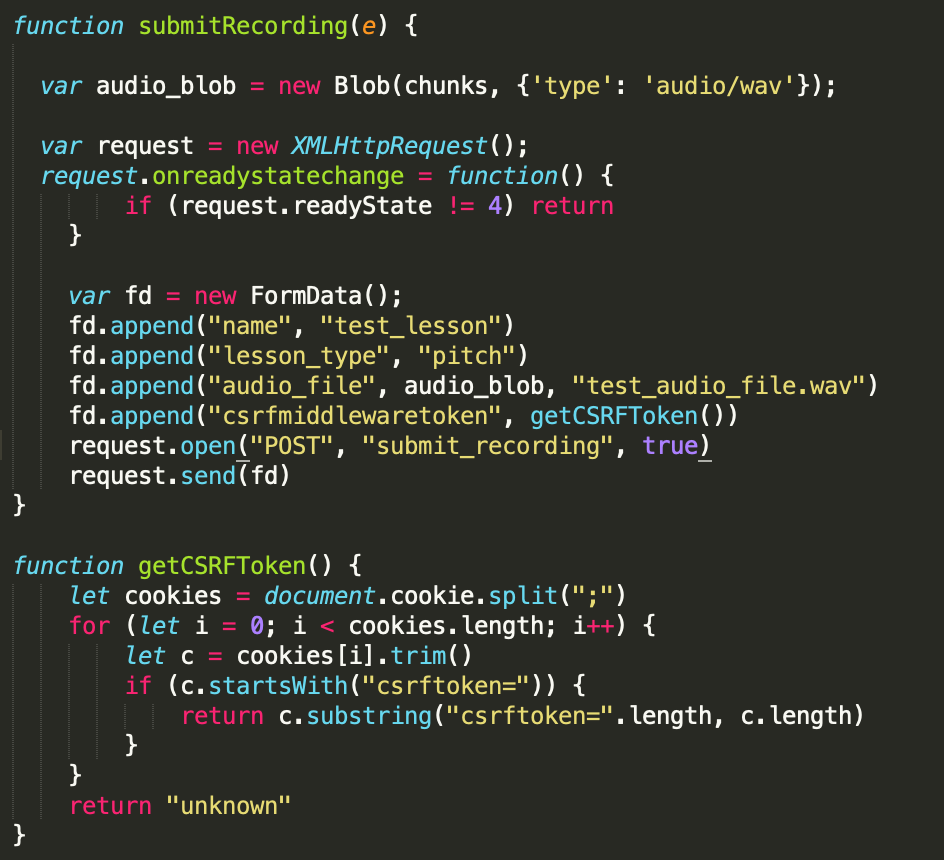

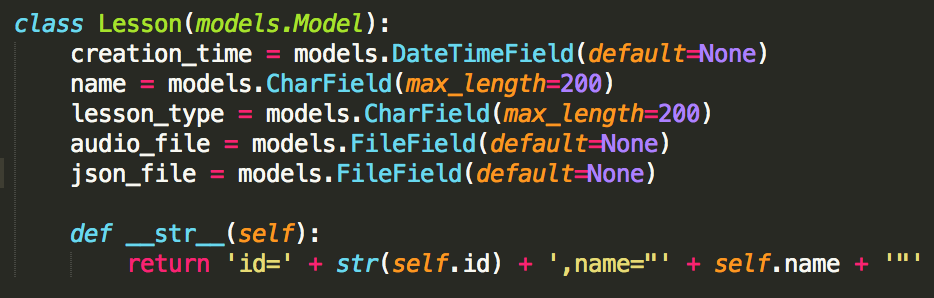

I plan to link this model to a form that will send the audio recording back to the server as a POST request. This still needs to be implemented in code and tested, and it is one of the tasks I plan to complete early this week.
