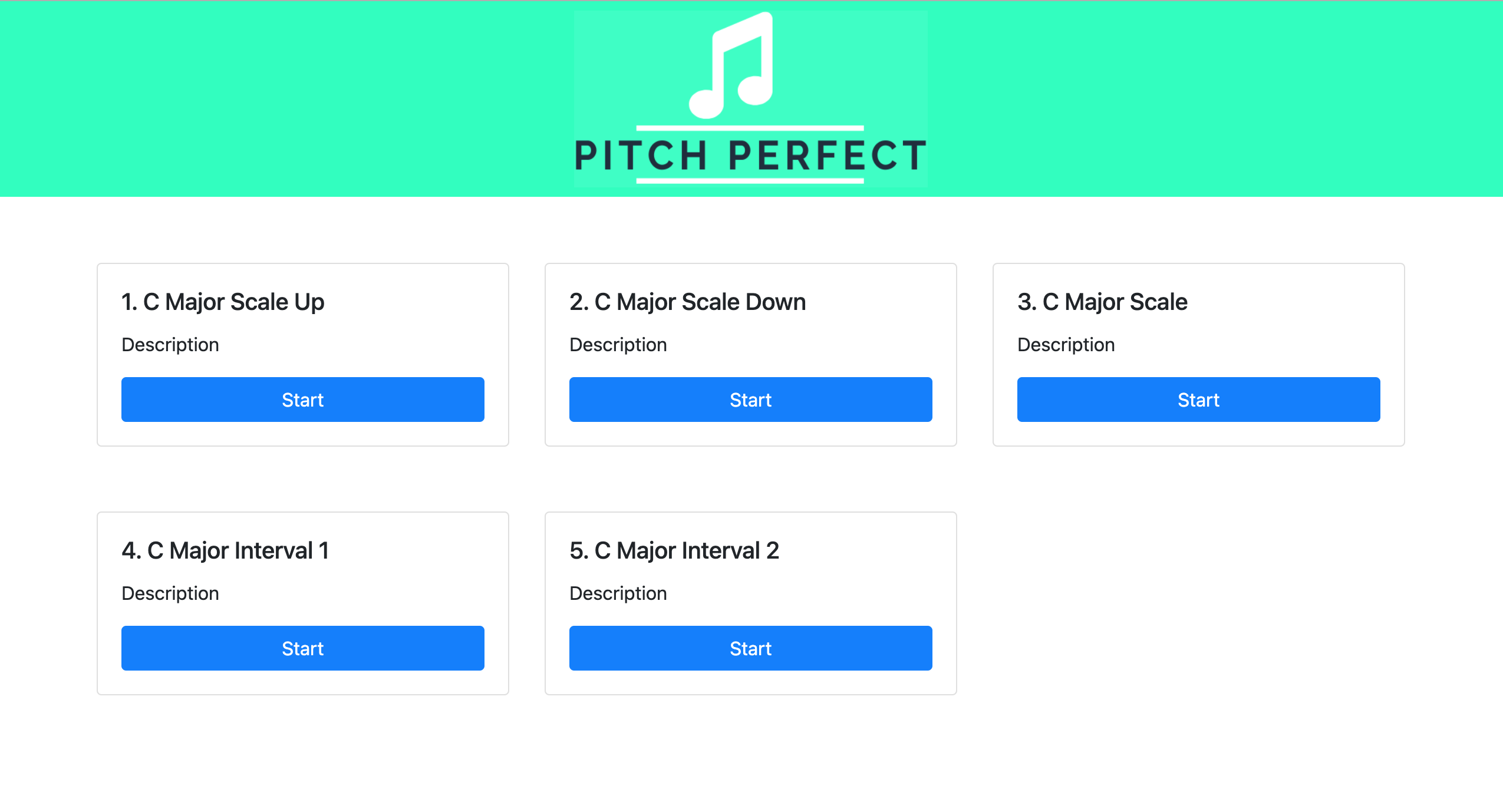

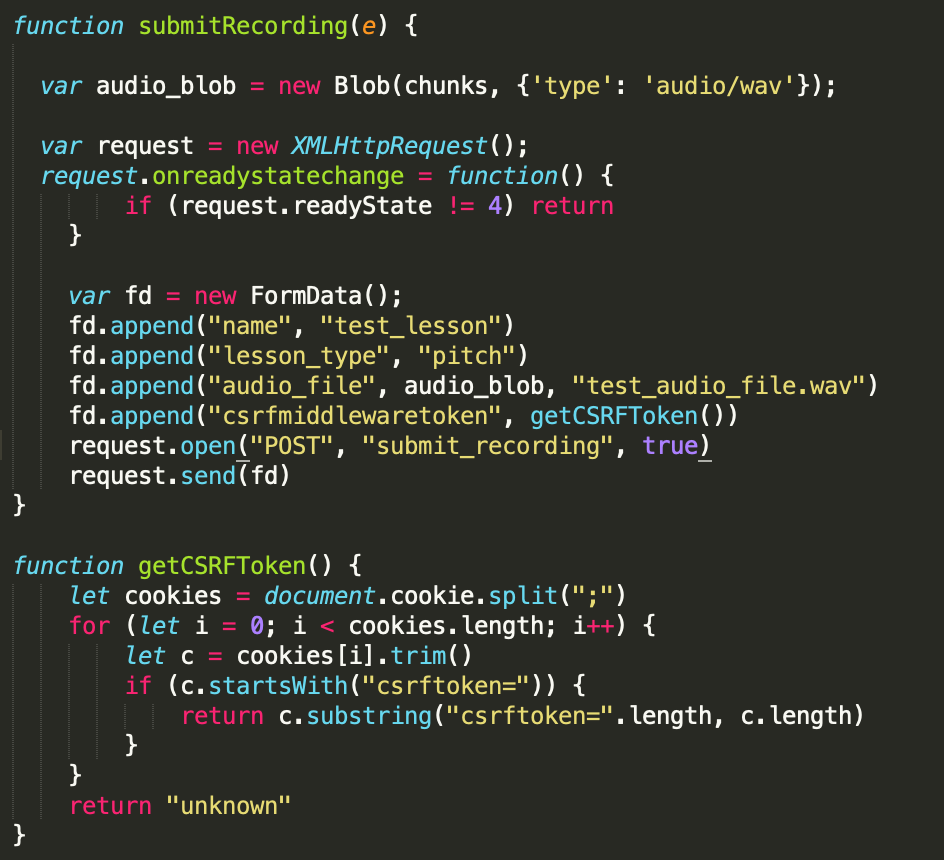

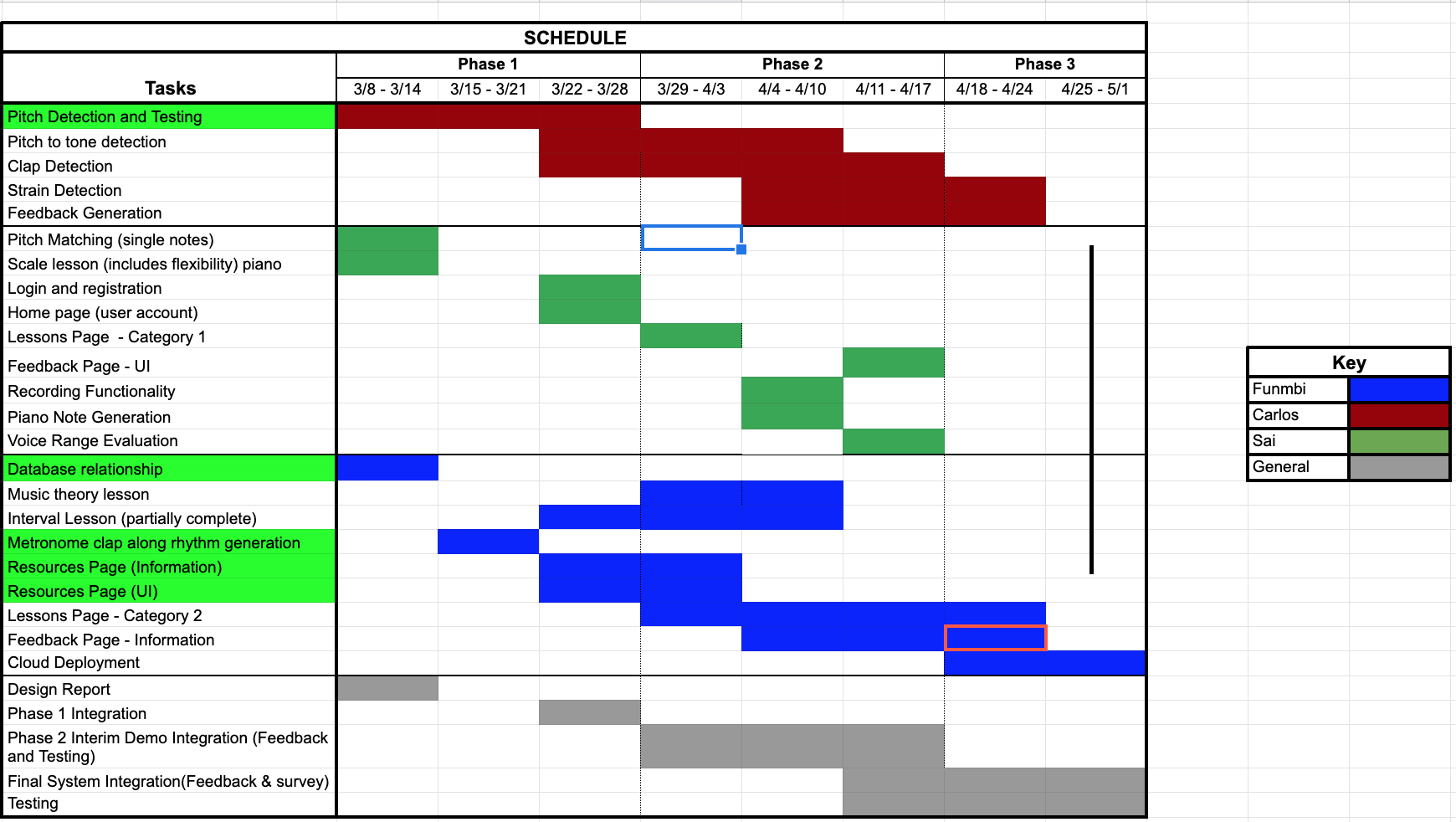

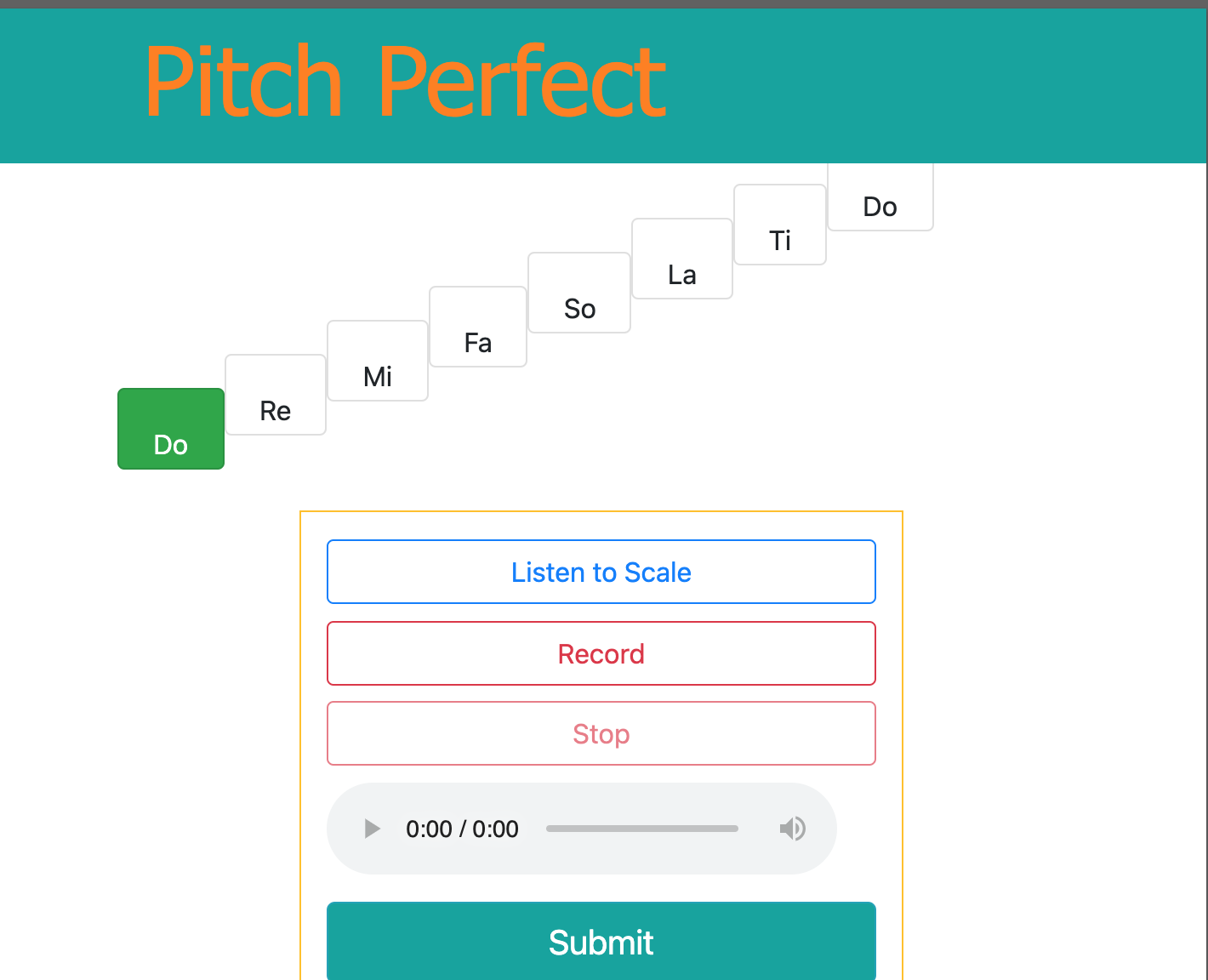

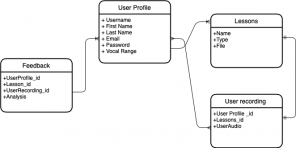

This week, I ran into file formatting issues with the recorded audio files submitted through the web application. The API I’m using to record a user’s audio input in the browser doesn’t produce clean .wav files that can be opened or processed on the backend, but it does produce clean .webm files. I found an ffmpeg package that I can call on the server to convert the .webm files to .wav files, and so far it has produced clean .wav files.

We also made some design changes to our pitch exercises. There is now a countdown when you press the record button, and we wanted to add breaks between notes when listening to how the scale/interval sounds via piano notes. To support this, I had to change the algorithm that produces the sequence of piano notes and displays/animates the solfege visuals. There is now a dashboard/list of some of the different pitch scale/interval exercises, and new exercises can be added easily by just adding a model with its sequence of notes, durations, and solfege. I also spent some time refining the solfege animation/display, since some edge cases came up as I added more pitch exercises. I want to refine the solfege display further this upcoming week using some Bootstrap features. Another minor edit was adding a logo for the web app and making the title bar brighter and more engaging.

I feel like I’m behind schedule, since I thought integration would happen this past week, but we will spend a lot more time working on this project together to make sure the integration gets done before next week.
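For reference, the .webm to .wav conversion step mentioned above could look roughly like the sketch below. It is only an illustration: it calls the `ffmpeg` command-line tool directly through `subprocess`, which may differ from the package actually used in the project, and the function names, the mono downmix, and the 44100 Hz sample rate are all assumptions.

```python
import subprocess
from pathlib import Path
from typing import List


def build_ffmpeg_command(src: Path, dst: Path, sample_rate: int = 44100) -> List[str]:
    """Build an ffmpeg command that converts src (.webm) into a mono .wav at dst."""
    return [
        "ffmpeg",
        "-y",                      # overwrite dst if it already exists
        "-i", str(src),            # input file; ffmpeg detects the container itself
        "-ac", "1",                # one audio channel (assumed: mono is enough here)
        "-ar", str(sample_rate),   # output sample rate in Hz (assumed value)
        str(dst),                  # output format is inferred from the .wav extension
    ]


def convert_webm_to_wav(src: Path) -> Path:
    """Run ffmpeg on the server and return the path of the new .wav file.

    Raises subprocess.CalledProcessError if ffmpeg exits with an error.
    """
    dst = src.with_suffix(".wav")
    subprocess.run(build_ffmpeg_command(src, dst), check=True, capture_output=True)
    return dst
```

Keeping the command construction separate from the call that runs it makes the conversion easy to check without having ffmpeg installed on the development machine.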
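The idea of describing an exercise by its notes, durations, and solfege, and then inserting breaks between the notes, can be sketched as follows. This is a simplified stand-in rather than the actual model or algorithm from the web app: the `Exercise` and `Event` classes, the `build_timeline` function, and the default rest length are hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Exercise:
    name: str
    notes: List[str]         # note names, e.g. "C4"
    durations: List[float]   # seconds each note is held
    solfege: List[str]       # syllable shown while each note plays


@dataclass
class Event:
    start: float             # seconds from the beginning of playback
    duration: float
    note: Optional[str]      # None marks a rest between notes
    solfege: Optional[str]


def build_timeline(exercise: Exercise, rest: float = 0.25) -> List[Event]:
    """Turn an exercise into timed events with a rest after every note but the last."""
    if not (len(exercise.notes) == len(exercise.durations) == len(exercise.solfege)):
        raise ValueError("notes, durations, and solfege must have the same length")

    events: List[Event] = []
    t = 0.0
    last = len(exercise.notes) - 1
    steps = zip(exercise.notes, exercise.durations, exercise.solfege)
    for i, (note, duration, syllable) in enumerate(steps):
        events.append(Event(t, duration, note, syllable))
        t += duration
        if i < last:
            events.append(Event(t, rest, None, None))  # silent gap before the next note
            t += rest
    return events
```

With a structure like this, a new exercise is just one more `Exercise` value, and both the piano playback and the solfege animation can read their timing from the same list of events.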

For the upcoming week, I’ll be working on creating the voice range evaluation that will be part of the registration process, integrating with Funmbi’s features, and working with her to create the web app’s main dashboard. I will also integrate my code with Carlos’ pitch detection algorithm to receive feedback on the audio recordings, and I will try to plan out what the user experience survey questions/prompts will look like.

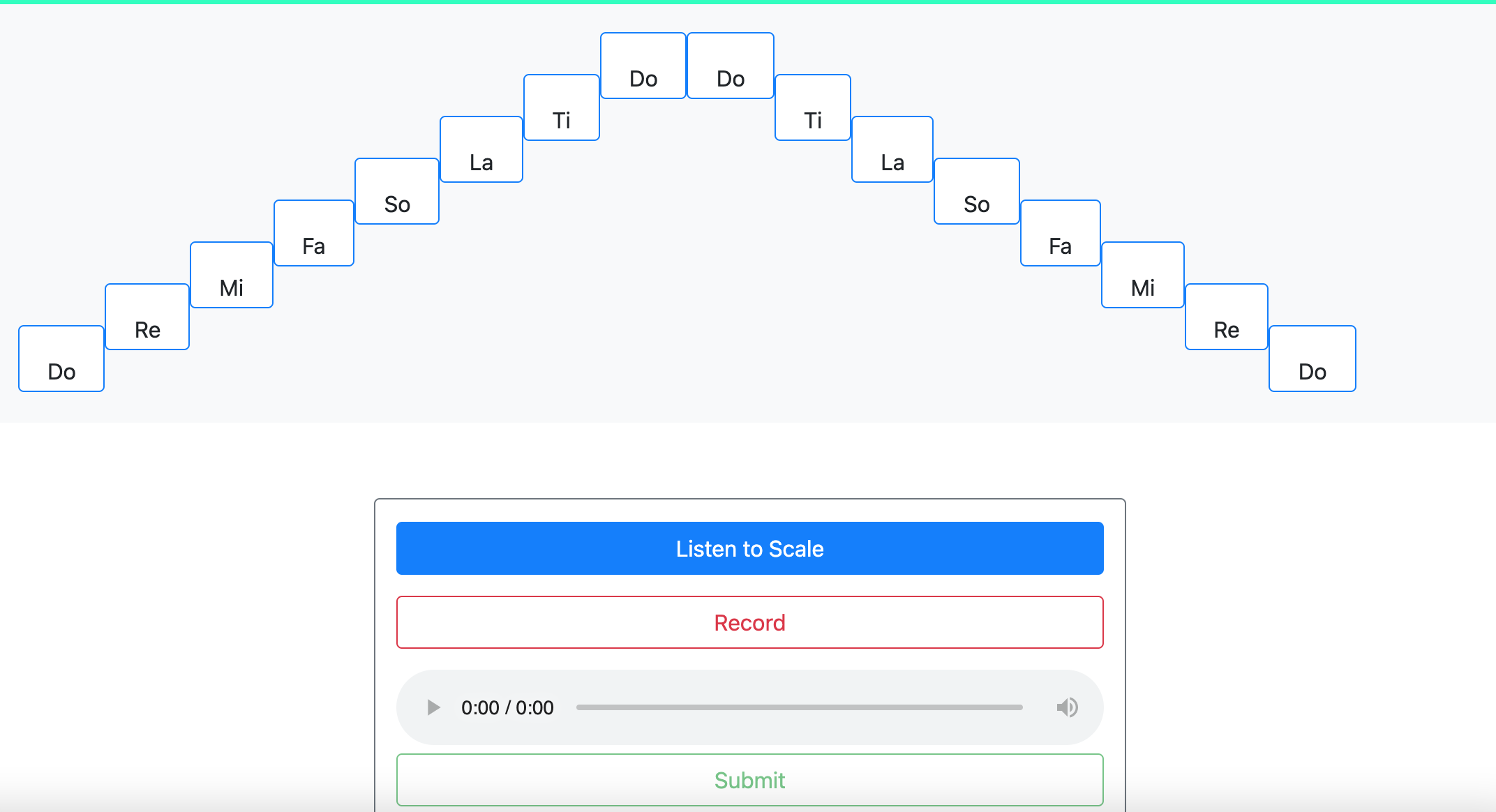

Below are some pictures of the new exercise format and the pitch exercise dashboard.
