We are writing our report and making our demo video!

Team Status Update for 04/25 (Week 11)

Progress

This week, we worked on the final demo, creating videos that make clear what we have accomplished so far. We are almost done with the engineering part of the project, and we are collecting performance metrics. We created the final presentation and planned out what we will use for the final demo video.

Deliverables next week

Next week, we will work on the final video and report.

Schedule

On schedule.

Team Status Update for 04/18 (Week 10)

Progress

Path-finding

The algorithm is complete and ready for testing. It uses A*, as mentioned before, and the robot is able to reach the grid cell that contains the goal point. It still needs testing to check its robustness.

Pointing

Recognizing where the user is pointing in the room is almost done. It works well on one side of the room using a multitask regression model. It needs additional data to cover more of the room and for hyperparameter tuning.

2D to 3D Mapping

Mapping now runs at 17 fps and data is formatted properly. The map needs to be integrated with point data.

Schedule

On schedule. Next week, we will put together a video for our demo.

Team Status Update for 04/11 (Week 9)

Progress

Point recognition

The multitask model works best for the small 3×3 point environment. Next week, I will work on collecting a dataset for the larger 5×5 point environment.

Path finding

The path-finding algorithm is in development. It will be a variation of the A* algorithm, using an 8-connectivity grid representation of the room. With a robust path-finding implementation, driving to the user/robot should be fairly easy. The implementation will be complete by next week.
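As a rough illustration of the idea, here is a minimal A* sketch on an 8-connected occupancy grid. The grid encoding (0 = free, 1 = obstacle), the octile-distance heuristic, the rule against clipping obstacle corners, and all names are assumptions made for this example; the final implementation may differ.

```python
import heapq
import math

# The 8 neighbor offsets (d_row, d_col) with their step costs:
# 1 for straight moves, sqrt(2) for diagonal moves.
NEIGHBORS = [(dr, dc, math.hypot(dr, dc))
             for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]


def octile(a, b):
    """Octile distance: an admissible heuristic for these step costs."""
    dr, dc = abs(a[0] - b[0]), abs(a[1] - b[1])
    return max(dr, dc) + (math.sqrt(2) - 1) * min(dr, dc)


def astar(grid, start, goal):
    """Return a list of (row, col) cells from start to goal, or None.

    grid[r][c] == 0 means free and 1 means obstacle (assumed encoding).
    """
    rows, cols = len(grid), len(grid[0])
    open_heap = [(octile(start, goal), 0.0, start)]  # entries are (f, g, cell)
    g_cost = {start: 0.0}
    parent = {start: None}
    closed = set()
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell in closed:
            continue  # stale heap entry
        if cell == goal:
            path = []
            while cell is not None:  # walk parents back to the start
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        closed.add(cell)
        r, c = cell
        for dr, dc, step in NEIGHBORS:
            nr, nc = r + dr, c + dc
            if not (0 <= nr < rows and 0 <= nc < cols) or grid[nr][nc]:
                continue
            # Skip diagonal moves that would clip the corner of an obstacle.
            if dr and dc and (grid[r + dr][c] or grid[r][c + dc]):
                continue
            new_g = g + step
            if new_g < g_cost.get((nr, nc), math.inf):
                g_cost[(nr, nc)] = new_g
                parent[(nr, nc)] = cell
                f = new_g + octile((nr, nc), goal)
                heapq.heappush(open_heap, (f, new_g, (nr, nc)))
    return None  # goal is unreachable
```

Calling `astar(grid, (0, 0), (2, 0))` on a small grid returns the sequence of cells to drive through, which could then be turned into motor commands.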

2D to 3D Mapping

The mapping has been optimized as far as possible, and the inaccuracies were cleaned up.

Deliverables next week

Next week, we will continue working on our individual systems in preparation for integration.

Schedule

On schedule.

Team Status Update for 04/04 (Week 8)

Progress

We made good progress on pointing, mapping, and 2D to 3D mapping for our demo this week.

Gestures

Pointing is coming along. It worked on a toy dataset of 2700 poses, but now we want to build more robust models and collect a larger dataset to do so.

Mapping

The 2D mapping is finally complete. It generates a grid map (and a txt representation of it) that can be used in other functionalities. This will be demonstrated at the interim-demo in the coming week.
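To make the idea of a text representation concrete, here is a minimal sketch of writing a grid map to text and reading it back. The character encoding (`#` for occupied, `.` for free) and the function names are hypothetical; the format our mapping code actually writes is not described here.

```python
def grid_to_text(grid):
    """Serialize a 2D occupancy grid (1 = occupied, 0 = free) to text."""
    return "\n".join("".join("#" if cell else "." for cell in row)
                     for row in grid)


def text_to_grid(text):
    """Parse the text form back into a list of rows of 0/1 cells."""
    return [[1 if ch == "#" else 0 for ch in line]
            for line in text.splitlines()]
```

A plain-text form like this is easy to inspect by eye and easy for other modules, such as path-finding, to load.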

Deliverables next week

We will show demo videos during the midpoint demo.

Schedule

On schedule.

Team Status Update for 03/28 (Week 7)

Progress

Gesture Recognition

We made good progress, making sure everything we had before still works with the remote setup. We transitioned into using videos instead of camera streams, optimized the output stream of gestures, and deployed SVM methods of gesture recognition.

In addition, we experimented with different ways to recognize the point.

Risk Management Plan

Most of the risk around parts not arriving has been resolved, since they arrived this week.

For gesture recognition, being able to work on videos removes the risk of not having enough time to run everything remotely. The major remaining risk for gesture recognition is not being able to detect the point, but that can potentially be resolved with additional hardware (another camera perspective) and by limiting the difficulty of the point problem through the size of the bins we choose.

————————–

Our risk management is pretty much the same; the only difference is that each of us will have to manage the risks for our own components individually.

(From the design proposal doc:)

The largest risk for our project is localization of the robot. Our tasks of going back to home, going to the user, and going to the pointed location all require the robot to know where it is on the map. We are trying to mitigate the risk by using multiple methods to localize the robot. We are using data from our motor encoders to know where the robot has traveled on the 2D map. Additionally, we are going to use the camera view and our camera 3D to 2D mapping in order to get a location of the robot in the room. By having two methods to localize the robot, we can maximize the chances of localization.
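The encoder-based half of this plan is standard differential-drive dead reckoning. A minimal sketch follows, assuming the encoders give per-wheel tick counts; the function names and parameters (ticks per revolution, wheel diameter, wheel base) are placeholders to be filled in from the robot's specification, not values taken from our system.

```python
import math


def ticks_to_distance(ticks, ticks_per_rev, wheel_diameter):
    """Convert encoder ticks to distance traveled by one wheel."""
    return ticks / ticks_per_rev * math.pi * wheel_diameter


def update_pose(x, y, theta, d_left, d_right, wheel_base):
    """Advance a differential-drive pose by one encoder reading.

    d_left and d_right are the distances each wheel traveled since the
    last update; theta is the heading in radians.
    """
    d_center = (d_left + d_right) / 2.0
    d_theta = (d_right - d_left) / wheel_base
    # Use the heading at the midpoint of the motion as an approximation.
    x += d_center * math.cos(theta + d_theta / 2.0)
    y += d_center * math.sin(theta + d_theta / 2.0)
    # Wrap the new heading into [-pi, pi).
    theta = (theta + d_theta + math.pi) % (2.0 * math.pi) - math.pi
    return x, y, theta
```

Errors in this estimate accumulate over time, which is why a second, camera-based position estimate is useful as a correction.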

Classifying the gestures incorrectly is also a risk. OpenPose can give us incorrect keypoints, which would cause an error in the classification process. To address this, we are using multiple cameras to capture the user from multiple angles in the room, so we have backup views of the user to classify gestures. Running OpenPose on more cameras decreases our total FPS, so our system can have at most 3 cameras. We chose 3 cameras to balance the performance of our system and the cost of the required hardware against the accuracy we can get in gesture classification. In addition, we will have backup heuristics to classify the gestures if our system cannot confidently recognize a gesture.

Schedule

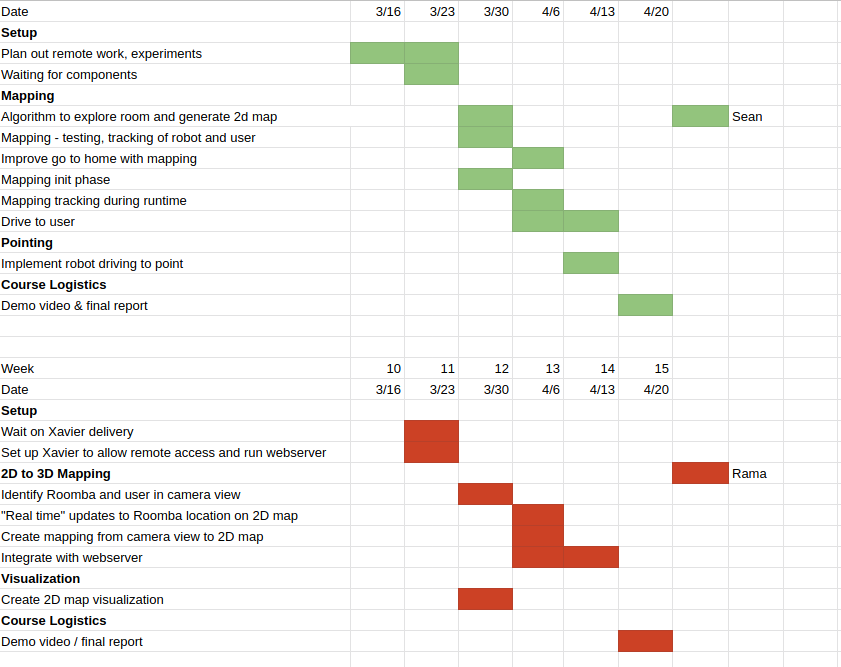

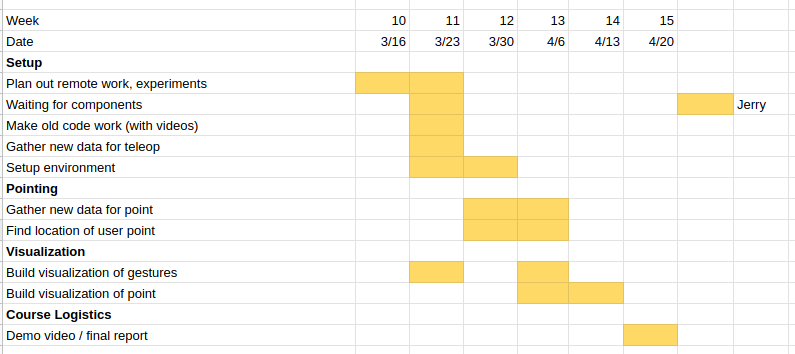

Gantt chart for the revised schedule:

Team Status Update for 03/21 (Week 5, 6)

Progress

This week, we lost access to our lab and our hardware, so we had to re-scope part of our project due to the recent situation. We wanted to transition to remote work while keeping the integrity of the project and leaving enough individual components for each of us to work on. We originally planned to do everything in simulation, but now we are shipping the hardware and building out each component individually. More information is attached in our statement of work.

Deliverables next week

Gesture recognition

We are working on ways to run the system locally. We lost remote access to our Xavier board, so we are looking into alternatives on AWS or having the board shipped to us. The top priority will be to get the gestures we already recognize working again on the new setup. We are also waiting for our webcams and equipment to ship, as the webcam quality from the laptop is much worse.

Robot manipulation

We don’t have access to the robot, so for the next couple of weeks, we will work on the robot through simulation. This will give us some idea of how the robot will behave with the algorithms. We hope to get a working 2D-mapping algorithm through the simulation.

Schedule

Behind schedule, but we have readjusted it for remote work.

Team Status Update for 03/07 (Week 4)

Progress

This week, we made good progress towards implementing mapping and gesture recognition using ML methods like SVM.

Robot Mapping

This week, we (partially) implemented the 2D mapping algorithm. There are two phases to the algorithm: edge-following and scanning the rest of the room. The edge-following works fine, but we are still experimenting with the second phase. We need to continue tuning and potentially come up with a new algorithm. Since mapping is a significant part of the project and we don’t want any bottlenecking, we must complete the algorithm by the beginning of the week after the break.

Gesture Recognition

We deployed our data-gathering pipelines to collect teleop and point data. We also trained both multiclass and one-versus-rest (OvR) SVMs for each task and tried them with our main camera script. Our best teleop model reached around 0.94 accuracy with SVM parameters C = 10, gamma = “scale”, and a polynomial kernel. It was interesting to see the cases where our models beat the heuristics, especially when the user is standing on the side of the screen or not directly facing the camera.

Deliverables next week

Next week, we will continue working on gesture recognition with more features and explore 2D point tracking with models. We will also explore the possibility of tracking with multiple cameras. We also will start exploring methods to locate the robot from the image in order to start working on our 2D to 3D mapping synchronization.

Schedule

On schedule.

Team Status Update for 02/29 (Week 3)

Progress

We finished the first version of our MVP! We integrated all our systems together for teleop control and docking to the charging point. It was great to see all the components come together. The gesture recognition is still shaky and the home docking system needs to use our mapping system, but we will work on that next.

Software

We experimented with different cameras and are working on pipelines for data collection to train ML models to recognize gestures from keypoints. In addition, the sockets we use for our webserver were unstable, so we worked on greatly reducing crashes.

Hardware

It was good to see the hardware components coming together. We were able to control the Roomba via a headless RPi, which was our MVP. Additionally, we began building the mapping algorithm for the robot. It will require a decent amount of testing and fixing, but we hope to finish it by spring break. 2D mapping is essential for the robot’s additional tasks, so we have to make sure the algorithm works correctly before we start using the generated map.

Deliverables next week

We still have a few fixes to make to finish our MVP, and we want to start using a 2D mapping system from the Roomba.

Team Status Update for 02/22 (Week 2)

We all put in a ton of work this week (~60 hrs) towards our MVP. Most of the individual components are completed, and we will integrate our system next week.

OpenPose and Xavier board

We were able to successfully run OpenPose on the Xavier board at 30 FPS after a few optimizations. Additionally, we were able to set up the Xavier board on the CMU network, so we can ssh into our board to work on it anytime.

Gesture Recognition

We were able to start classifying our 3 easiest gestures (left hand up, right hand up, and both hands up) with heuristics, using image and video examples. We set up the camera we purchased, and we are able to classify gestures live at 30 FPS. We also developed heuristics for tele-op commands, but we are going to try model-based approaches next week.
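A heuristic of this kind can be sketched as a comparison between wrist and shoulder heights. The sketch below assumes keypoints arrive as a list of `(x, y, confidence)` tuples ordered as in OpenPose's BODY_25/COCO output, with image y growing downward; the specific rule, the confidence threshold, and the label strings are illustrative assumptions, not the exact heuristics we used.

```python
# Keypoint indices in OpenPose's BODY_25 / COCO ordering.
R_SHOULDER, R_WRIST = 2, 4
L_SHOULDER, L_WRIST = 5, 7


def classify_gesture(keypoints, min_conf=0.3):
    """Label a pose as left/right/both hands up, or None.

    keypoints: sequence of (x, y, confidence) tuples in pixel coordinates.
    min_conf: hypothetical threshold below which a keypoint is ignored.
    """
    def raised(wrist, shoulder):
        _, wrist_y, wrist_c = keypoints[wrist]
        _, shoulder_y, shoulder_c = keypoints[shoulder]
        # Smaller y means higher in the image.
        return (wrist_c >= min_conf and shoulder_c >= min_conf
                and wrist_y < shoulder_y)

    left = raised(L_WRIST, L_SHOULDER)
    right = raised(R_WRIST, R_SHOULDER)
    if left and right:
        return "both_hands_up"
    if left:
        return "left_hand_up"
    if right:
        return "right_hand_up"
    return None
```

Rules like this are cheap enough to run on every frame, but they are brittle when the user is not facing the camera, which is one motivation for trying model-based approaches.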

Webserver

After our tests with OpenPose on the board showed that it used less than 20% of the CPU, we decided to host our webserver on the Xavier board. This lets us avoid the latency of communicating with AWS. We also tested Python Flask and Node.js Express servers, and we decided on Node.js because it is asynchronous and handles concurrent requests better. We also tested the latency to the server, and it took around 70 ms, so we are still on track for being under our 1.9 s response time requirement.

Raspberry Pi

We successfully set up the Raspberry Pi for the robot module. Raspbian was installed, which might be helpful for programming the robot in the early stage before we move on to the headless protocol. Rama also set up a web server for the RPi so we can control it without a physical connection.

Roomba

We set up the Roomba and are able to communicate with it through pyserial. Sean was able to experiment with the Roomba Open Communication Interface to send motor commands and read sensor values.
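For readers unfamiliar with the interface, a motor command is just a short byte packet written to the serial port. The sketch below builds such packets using opcode values as we understand them from iRobot's published Open Interface specification (128 Start, 131 Safe, 145 Drive Direct); treat the values, the ±500 mm/s limit, and the helper names as assumptions to verify against the spec for your Roomba model.

```python
import struct

# Opcode values as we understand them from iRobot's Open Interface spec;
# verify them against the document for your specific model.
OP_START = 128         # open the interface
OP_SAFE = 131          # switch to Safe mode so drive commands are accepted
OP_DRIVE_DIRECT = 145  # set each wheel's velocity independently
MAX_SPEED_MM_S = 500   # assumed velocity limit per wheel


def start_packet():
    """Bytes that open the interface and enter Safe mode."""
    return bytes([OP_START, OP_SAFE])


def drive_direct_packet(left_mm_s, right_mm_s):
    """Build a Drive Direct packet from wheel velocities in mm/s."""
    def clamp(v):
        return max(-MAX_SPEED_MM_S, min(MAX_SPEED_MM_S, int(v)))
    # The right wheel comes first; each velocity is a signed 16-bit
    # big-endian integer.
    return struct.pack(">Bhh", OP_DRIVE_DIRECT,
                       clamp(right_mm_s), clamp(left_mm_s))


# Sending the packets with pyserial would look roughly like this
# (the port name and baud rate are placeholders):
#   import serial
#   port = serial.Serial("/dev/ttyUSB0", 115200)
#   port.write(start_packet())
#   port.write(drive_direct_packet(200, 200))
```

Keeping packet construction separate from the serial port makes the command logic testable without the robot attached.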

Design Review Presentation

We all worked on the design review presentation for next week. The presentation made us discuss our solution approaches to different problems. Now that we have tested many of our individual components, we have a much better perspective on how to tackle many of our problems.

Drive-to-Point

It is still early to think about this functionality since it is beyond our MVP, but we spent some time this week discussing potential approaches to it. We will try out both methods and determine which is the right way to proceed.

- Method 1: Using multiple images from different perspectives to draw a line from the user’s arm to the ground.

  - Using trig with angles from core to arm, arm to shoulder, and feet position to determine ground.

- Method 2: Using neural network classifier to predict the position in the room using keypoints as input

  - Collect training data of keypoints and proper bin

  - Treat every 1ft x 1ft square in the room as a bin in a grid

  - Regression model to determine x and y coordinate in the grid
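The geometry behind Method 1, together with the 1 ft bins from Method 2, can be sketched as follows. This assumes the shoulder and wrist positions have already been recovered as 3D points in feet with z pointing up from the floor, which is the hard part and is not shown; the function names and room dimensions are hypothetical.

```python
def point_on_floor(shoulder, wrist):
    """Extend the shoulder-to-wrist ray until it hits the floor (z = 0).

    shoulder, wrist: (x, y, z) positions in feet, with z up.
    Returns the (x, y) floor point, or None if the arm is level or
    pointing upward and so never reaches the floor.
    """
    sx, sy, sz = shoulder
    wx, wy, wz = wrist
    dz = wz - sz
    if dz >= 0:
        return None
    t = -sz / dz  # ray parameter at which z reaches 0
    return (sx + t * (wx - sx), sy + t * (wy - sy))


def floor_bin(x, y, room_width, room_depth, bin_size=1.0):
    """Map a floor point to its (column, row) bin, or None if outside."""
    if not (0 <= x < room_width and 0 <= y < room_depth):
        return None
    return (int(x // bin_size), int(y // bin_size))
```

For example, a shoulder at `(0, 0, 5)` and a wrist at `(1, 0, 4)` drop one foot per foot traveled, so the ray lands five feet along the x axis. The same bin indices could serve as labels for the classifier in Method 2.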

Deliverables next week

We are on track to complete our MVP and we hope to finish our tele-op next week!