Progress

Gesture Recognition

We made good progress and confirmed that everything we had built before still works with the remote setup. We switched from live camera streams to recorded videos, optimized the output stream of gestures, and deployed SVM-based gesture recognition.
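The report does not describe our SVM features or training setup. The following is a minimal sketch, assuming 2D OpenPose keypoints in BODY_25 order (index 1 = Neck, index 8 = MidHip) and a single binary linear SVM per gesture. The names `normalize_keypoints`, `train_linear_svm` and `predict` are hypothetical, and the trainer is a plain-Python Pegasos solver (stochastic subgradient descent on the regularized hinge loss) used here only so the example has no dependencies.

```python
import math
import random
from typing import List, Sequence, Tuple

def normalize_keypoints(keypoints: Sequence[Tuple[float, float]],
                        neck_idx: int = 1, mid_hip_idx: int = 8) -> List[float]:
    """Center 2D keypoints on the neck and divide by torso length.

    Assumes OpenPose BODY_25 ordering (1 = Neck, 8 = MidHip), so the same
    gesture gives similar features wherever the user stands in the frame
    and however far they are from the camera.
    """
    nx, ny = keypoints[neck_idx]
    hx, hy = keypoints[mid_hip_idx]
    torso = math.hypot(hx - nx, hy - ny) or 1.0  # guard against a zero-length torso
    features: List[float] = []
    for x, y in keypoints:
        features.extend([(x - nx) / torso, (y - ny) / torso])
    return features

def train_linear_svm(X: List[List[float]], y: List[int],
                     lam: float = 0.01, epochs: int = 200, seed: int = 0) -> List[float]:
    """Pegasos: stochastic subgradient descent on the L2-regularized hinge loss.

    Labels must be +1 (target gesture) or -1 (anything else). A constant 1.0
    feature is appended so the last weight acts as a (regularized) bias.
    """
    rng = random.Random(seed)
    data = [(xi + [1.0], yi) for xi, yi in zip(X, y)]
    w = [0.0] * len(data[0][0])
    t = 0
    for _ in range(epochs):
        rng.shuffle(data)
        for xi, yi in data:
            t += 1
            eta = 1.0 / (lam * t)                       # Pegasos step size
            margin = yi * sum(wj * xj for wj, xj in zip(w, xi))
            w = [(1.0 - eta * lam) * wj for wj in w]    # regularization shrink
            if margin < 1.0:                            # hinge loss is active
                w = [wj + eta * yi * xj for wj, xj in zip(w, xi)]
    return w

def predict(w: List[float], features: List[float]) -> int:
    """Return +1 if the sample falls on the target-gesture side of the hyperplane."""
    score = sum(wj * xj for wj, xj in zip(w, features + [1.0]))
    return 1 if score >= 0.0 else -1
```

With several gestures, one such classifier per gesture (one-vs-rest) can be trained and the highest-scoring one taken. In practice a library implementation such as scikit-learn's `SVC` would normally replace the hand-written trainer; the neck-centered, torso-scaled features are the part that carries over.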

In addition, we experimented with different ways to recognize the pointing gesture.

Risk Management Plan

Most of the risk from parts not arriving has been resolved, since the parts arrived this week.

For gesture recognition, being able to work on videos removes the risk of not having enough time to run everything remotely. The main remaining risk for gesture recognition is failing to detect the pointing gesture, but that can potentially be resolved with additional hardware (another camera perspective) and by choosing bin sizes large enough to absorb small pointing errors.
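To show how bin size limits pointing errors, here is a minimal sketch that turns a pointing arm into a floor bin. It assumes 3D elbow and wrist positions in meters in a room frame with the floor at z = 0 (for example, triangulated from two camera views), and uses the elbow-to-wrist ray; that ray choice, the frame and the function names `pointing_target` and `to_bin` are all hypothetical, and a shoulder-to-wrist ray would be an equally plausible choice.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]
Point2 = Tuple[float, float]

def pointing_target(elbow: Vec3, wrist: Vec3) -> Optional[Point2]:
    """Extend the elbow->wrist ray until it hits the floor plane z = 0.

    Returns the (x, y) floor point, or None if the forearm is level or
    points upward (the ray never reaches the floor).
    """
    dx, dy, dz = (w - e for w, e in zip(wrist, elbow))
    if dz >= 0.0:
        return None
    t = -wrist[2] / dz  # scale factor applied to the elbow->wrist vector
    return (wrist[0] + t * dx, wrist[1] + t * dy)

def to_bin(point: Point2, bin_size: float) -> Tuple[int, int]:
    """Map a floor point to the (column, row) index of a square bin."""
    return (math.floor(point[0] / bin_size), math.floor(point[1] / bin_size))
```

For example, an elbow at (0, 0, 1.5) and a wrist at (0.5, 0.5, 1.0) give the floor point (1.5, 1.5), which falls in bin (3, 3) with 0.5 m bins and bin (1, 1) with 1 m bins. A small angular error in the arm moves the floor point further the farther away the target is, so larger bins tolerate more error at the cost of coarser target locations.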

————————–

Our risk management plan is largely unchanged; the only difference is that each of us will have to work on our risk mitigations individually.

(From the design proposal document:)

The largest risk for our project is localization of the robot. Our tasks of returning home, going to the user, and going to the pointed location all require the robot to know where it is on the map. We are trying to mitigate this risk by using multiple methods to localize the robot. We use data from our motor encoders to track where the robot has traveled on the 2D map. Additionally, we are going to use the camera views and our 3D-to-2D camera mapping to get a location of the robot in the room. Having two independent ways to localize the robot improves the chance that we can still localize it when one of them fails.
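The two localization methods above can be combined in many ways; the proposal does not specify one. A minimal sketch follows, assuming a differential-drive robot (not stated in the source) and treating `ticks_per_rev`, `wheel_diameter`, `wheel_base` and the variances as hypothetical parameters. Encoder ticks are dead-reckoned into a pose, and when the cameras also see the robot, the two (x, y) estimates are blended by inverse-variance weighting; otherwise odometry is used alone.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple

@dataclass
class Pose:
    x: float = 0.0      # meters
    y: float = 0.0      # meters
    theta: float = 0.0  # heading, radians

def update_odometry(pose: Pose, left_ticks: int, right_ticks: int,
                    ticks_per_rev: int, wheel_diameter: float, wheel_base: float) -> Pose:
    """Dead-reckon a differential-drive robot from encoder tick deltas."""
    per_tick = math.pi * wheel_diameter / ticks_per_rev  # meters traveled per tick
    dl, dr = left_ticks * per_tick, right_ticks * per_tick
    dc = (dl + dr) / 2.0              # distance moved by the robot's center
    dtheta = (dr - dl) / wheel_base   # change in heading
    mid = pose.theta + dtheta / 2.0   # midpoint heading approximates the arc
    return Pose(pose.x + dc * math.cos(mid),
                pose.y + dc * math.sin(mid),
                pose.theta + dtheta)

def fuse_position(odom: Tuple[float, float], odom_var: float,
                  camera: Optional[Tuple[float, float]], camera_var: float) -> Tuple[float, float]:
    """Inverse-variance weighted blend of two (x, y) estimates.

    Falls back to odometry alone when the cameras cannot see the robot.
    """
    if camera is None:
        return odom
    w_o, w_c = 1.0 / odom_var, 1.0 / camera_var
    return tuple((w_o * o + w_c * c) / (w_o + w_c) for o, c in zip(odom, camera))
```

Inverse-variance weighting trusts whichever source is currently less noisy; because encoder drift grows with distance traveled, `odom_var` would typically be increased over time and reset whenever a camera fix is accepted.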

Classifying the gestures incorrectly is also a risk. OpenPose can give us incorrect keypoints, which would cause errors in the classification process. To address this, we are using multiple cameras to capture the user from multiple angles in the room, which gives us backup views of the user for classifying gestures. Running OpenPose on more cameras lowers our total FPS, so our system can support at most 3 cameras. We chose 3 cameras to balance system performance and hardware cost against the accuracy we can get in gesture classification. In addition, we will have backup heuristics to classify gestures when our system cannot confidently recognize a gesture.
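The proposal does not say how the per-camera results are combined. One possible scheme, sketched below under stated assumptions, is a confidence-weighted vote across views: cameras where OpenPose lost the user are skipped, and the backup heuristic is used when no view is usable or the winning label's average support falls below a threshold. The function `combine_views`, the 0.6 threshold, the labels in the example, and the assumption that each classifier reports a confidence in [0, 1] are all hypothetical.

```python
from collections import defaultdict
from typing import Callable, Dict, List, Optional, Tuple

# Per-camera result: (label, confidence in [0, 1]), or None if OpenPose
# found no usable keypoints for the user in that view.
ViewResult = Optional[Tuple[str, float]]

def combine_views(results: List[ViewResult],
                  fallback: Callable[[], str],
                  min_confidence: float = 0.6) -> str:
    """Confidence-weighted vote across camera views, with a heuristic fallback."""
    scores: Dict[str, float] = defaultdict(float)
    usable = 0
    for r in results:
        if r is None:
            continue  # this camera lost the user; rely on the other views
        label, conf = r
        scores[label] += conf
        usable += 1
    if usable == 0:
        return fallback()
    best_label = max(scores, key=scores.get)
    # Average support for the winner over all views that saw the user;
    # disagreeing views pull this down and can trigger the fallback.
    if scores[best_label] / usable < min_confidence:
        return fallback()
    return best_label
```

For example, views reporting `("point", 0.9)`, `None` and `("point", 0.8)` yield `"point"`, while two weak, disagreeing views such as `("wave", 0.3)` and `("point", 0.4)` hand the decision to the fallback heuristic.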

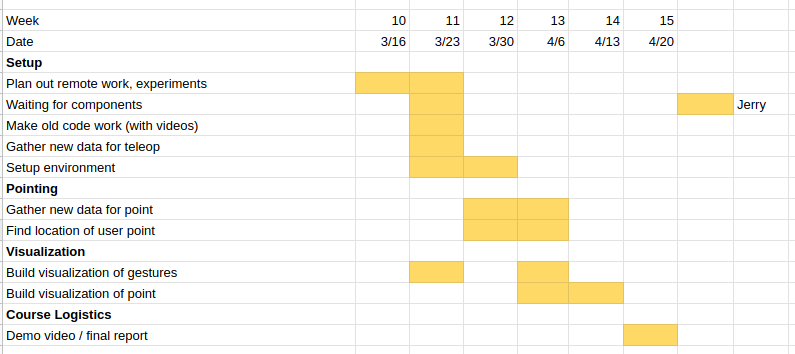

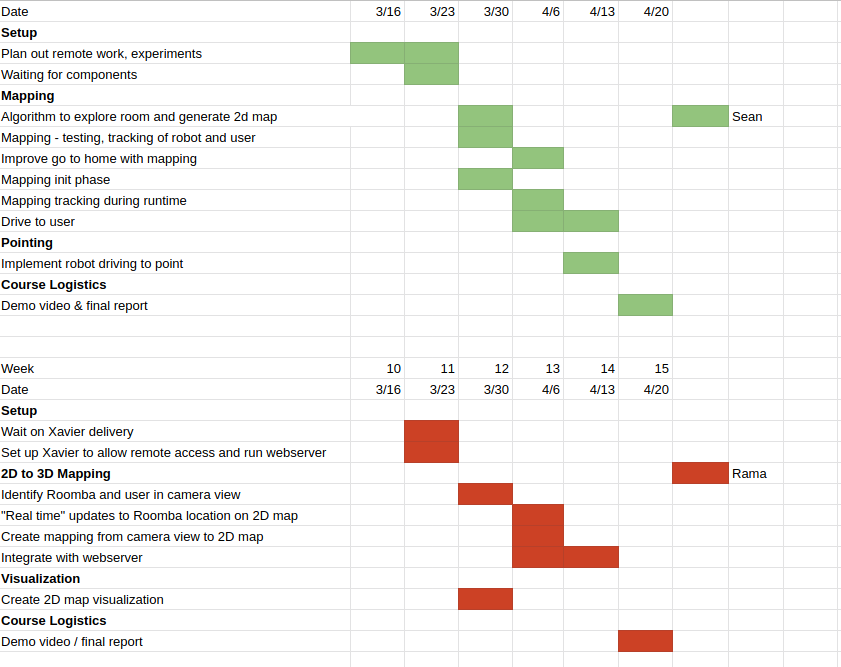

Schedule

Gantt chart for the revised schedule: