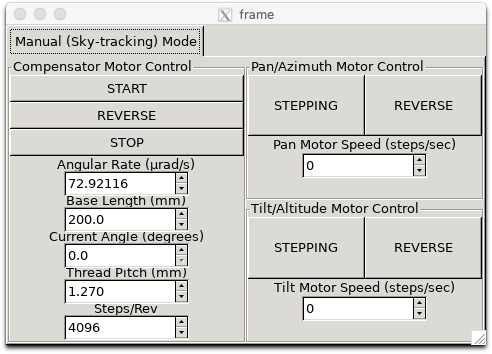

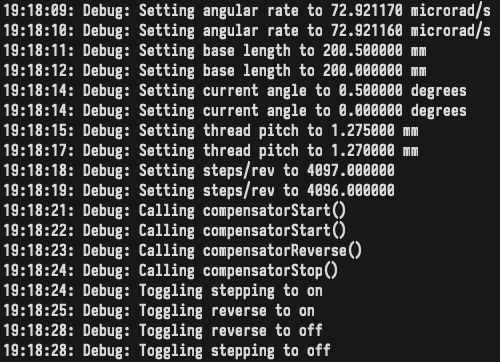

I verified that the CV code compiles on the Pi and works with the motor controllers and the libgphoto2 camera input. I also wrote a GUI app for the motor controllers (screenshot attached) and fixed some bugs that my teammates pointed out. I haven't integrated the CV code into the GUI app yet, so I am behind on that. Our final presentation is next week and the final demo is the week after, so next week I will work with my teammates on preparing for both, integrate the CV code into the GUI, and make any last-minute software improvements.