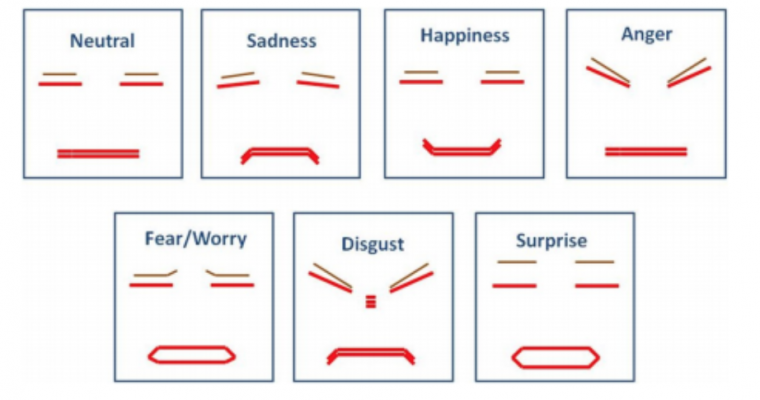

On Sunday, I cut out the cardboard pieces for the head and one of the arms. I also found a mini servo motor at home, so I attached it to the arm. I soldered the motor shield that arrived last week and attached it to the Raspberry Pi. I ran the servo motor code I wrote on Friday on the mini servo and debugged a few errors in it. I also programmed four emotions on the eyes display: happiness, sadness, anger, and worry. Finally, I made a video showcasing the progress I had made so far for the interim demo on Monday.
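The servo code itself isn't shown in this post. As a rough, hedged sketch of the arithmetic that servo code on a PWM motor shield usually involves, the snippet below converts an angle into a pulse width and then into a 12-bit PWM tick count. It assumes a standard hobby servo driven at 50 Hz with a 1000 to 2000 microsecond pulse range and a 12-bit PWM driver (such as the PCA9685 found on many Raspberry Pi servo shields); these values and all the function names are illustrative assumptions, not taken from the project, and real servos often need their pulse range calibrated.

```python
# Hypothetical sketch: map a servo angle to a PWM tick value.
# Assumptions (not from the original project):
#   - a standard hobby servo driven at 50 Hz (20 ms period)
#   - 1000 us pulse = 0 degrees, 2000 us pulse = 180 degrees
#   - a 12-bit PWM driver (0-4095 ticks per period), e.g. a PCA9685-based shield

PWM_FREQ_HZ = 50
PERIOD_US = 1_000_000 / PWM_FREQ_HZ  # 20,000 us per PWM cycle
MIN_PULSE_US = 1000                  # assumed pulse width at 0 degrees
MAX_PULSE_US = 2000                  # assumed pulse width at 180 degrees
RESOLUTION = 4096                    # 12-bit driver


def angle_to_pulse_us(angle: float) -> float:
    """Linearly interpolate the pulse width (in us) for an angle in [0, 180]."""
    if not 0 <= angle <= 180:
        raise ValueError("angle must be between 0 and 180 degrees")
    return MIN_PULSE_US + (MAX_PULSE_US - MIN_PULSE_US) * angle / 180


def pulse_us_to_ticks(pulse_us: float) -> int:
    """Convert a pulse width into the number of 'on' ticks within one PWM period."""
    return round(pulse_us / PERIOD_US * RESOLUTION)


def angle_to_ticks(angle: float) -> int:
    """Angle in degrees -> 12-bit PWM tick count for the assumed servo."""
    return pulse_us_to_ticks(angle_to_pulse_us(angle))


if __name__ == "__main__":
    for a in (0, 90, 180):
        print(a, angle_to_ticks(a))  # prints "0 205", "90 307", "180 410"
```

Keeping the conversion as a pure function separate from the hardware call makes it easy to test on a laptop and to recalibrate when swapping the mini servo for a regular one.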

On Monday, I assembled the pieces for the arm. I connected the mini servo to it and was able to rotate the arm using the motor code I wrote. After the interim demo, I tested the arm further and realized that, contrary to what I had hoped, the mini servo did not have enough torque to move the arm. The regular servos do have enough torque, but I don't have servo horns for them, so to work around this I hot-glued a piece of cardboard to the servo gear. I can attach the servo to the arm via this cardboard piece, which will let me move the arm with a regular servo motor.

On Wednesday, I downloaded the libraries needed for the ML and audio parts on my laptop, which took much longer than I anticipated, since the libraries were large and I ran into many issues throughout the process. After I set up my laptop with the appropriate libraries and environment, I was able to get Ashika’s ML code and Jade’s audio code working on my laptop. This is essential since the final demo will run on my laptop and hardware.

On Friday, I downloaded the libraries needed for the audio part on the Raspberry Pi, which also took longer than anticipated. After setting up the Raspberry Pi, I attached the audio hardware to it and got the audio code running through the hardware and Pi on my end, which matters for the same reason: the final demo will run on my hardware. I also tried to get the text display code working with the Pi and the text display hardware. However, we ran into a few issues with the libraries and environment setup, and Ashika and I were not able to finish debugging it.

In the upcoming week, I would like to finish debugging and updating the text display code with Ashika, write code to get the face display working now that it has arrived (it came in on Friday), and assemble the head and arms and attach them to the body.