Abha’s Status Report for April 4

On Sunday, I tried to debug the issues with showing an image from an SD card on the eye display. Initially, my plan was to put an image of a cat eye on the SD card and have the eye display read it. Most of the time was spent trying to get sample code I found online working with the files it came with. After working through a few issues, I was able to get an image of flowers (supplied by Adafruit) displayed on the screen. My next goal was to get my own image displaying on the screen. However, even after converting my image to the appropriate file type, the display couldn’t read it. After some internet digging, I found that the header of my image was not in the correct format. To fix it, I would need to convert the image to hex and convert the first 40 bytes of hex to a string to read the header (and I did not want to do this lol).
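For anyone who does want to look at the header, here is a rough Python sketch of how it could be inspected without hand-converting hex. It assumes the file is a BMP (the post above doesn't name the format), where a 14-byte file header is followed by a 40-byte info header. The function names `read_bmp_header` and `looks_displayable` are made up for this example, and the 24-bit uncompressed check is only a guess at what the sample code expects.

```python
import struct
import sys


def read_bmp_header(data: bytes) -> dict:
    """Parse the 14-byte BMP file header and the start of the 40-byte info header."""
    if len(data) < 54:
        raise ValueError("need at least 54 bytes (14-byte file header + 40-byte info header)")
    # File header: signature, file size, two reserved fields, offset to pixel data.
    signature, file_size, _res1, _res2, pixel_offset = struct.unpack_from("<2sIHHI", data, 0)
    # Info header (first 20 of its 40 bytes): size, width, height, planes, depth, compression.
    header_size, width, height, planes, bpp, compression = struct.unpack_from("<IiiHHI", data, 14)
    return {
        "signature": signature,
        "file_size": file_size,
        "pixel_offset": pixel_offset,
        "header_size": header_size,
        "width": width,
        "height": height,
        "planes": planes,
        "bits_per_pixel": bpp,
        "compression": compression,
    }


def looks_displayable(info: dict) -> bool:
    """Assumed requirement (not confirmed by the post): 24-bit, uncompressed, 40-byte info header."""
    return (
        info["signature"] == b"BM"
        and info["header_size"] == 40
        and info["bits_per_pixel"] == 24
        and info["compression"] == 0
    )


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python check_bmp.py my_image.bmp
    with open(sys.argv[1], "rb") as f:
        info = read_bmp_header(f.read(54))
    print(info)
    print("looks displayable:", looks_displayable(info))
```

Running this on both the working flowers image and the failing image and comparing the two printouts would show which header field differs.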

On Monday, I took a step back and tried other ways to convert my image to the correct file type. Eventually, one of the websites that I used was able to convert it with the appropriate header. I (finally) got the image onto the display. However, there were two issues with the image displayed (shocking). The first one was that the colors were messed up. The second was that the image would scroll down the display every time it was refreshed (the origin wasn’t constant for some reason). This meant that if I changed the eye image to show a different sentiment, the eye wouldn’t be in the same location.

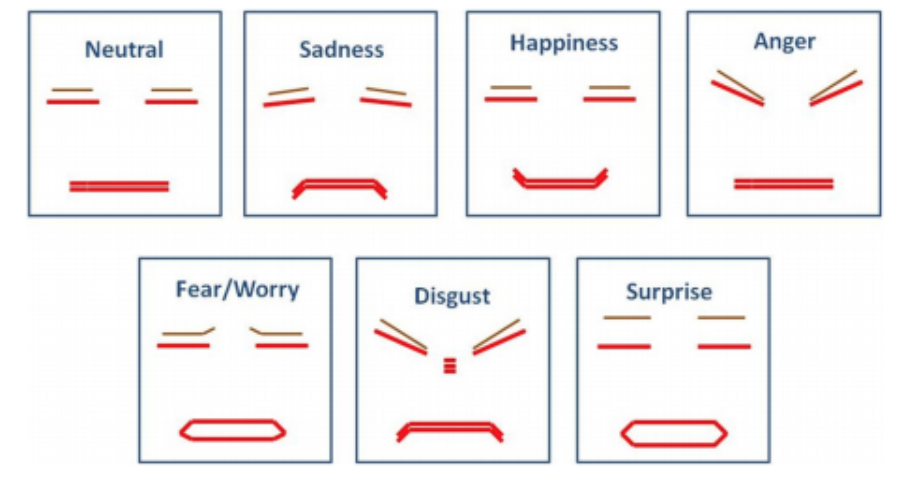

On Wednesday, I tried to debug the scrolling image situation from Monday. However, I was not able to figure out the issue. I also played with drawing the eyes out of lines and shapes instead of using images. For example, I was able to do a few of these eyes that also show emotion well.
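To give a feel for the shapes idea, here is a small Python sketch that draws an eye as a filled ellipse and cuts the top off with a straight "eyelid" line, so that changing the lid height and slant changes the expression. It is only an illustration printed as text, not the code running on the display, and `render_eye`, `lid`, and `slope` are names made up for this example.

```python
def render_eye(width=16, height=8, lid=0.0, slope=0.0):
    """Return text rows for an elliptical eye; pixels above the eyelid line are blank.

    lid   -- fraction of the height covered by the eyelid at the eye's center
    slope -- slant of the eyelid line (rows per column); 0 keeps it flat
    """
    cx, cy = (width - 1) / 2, (height - 1) / 2
    rx, ry = width / 2, height / 2
    rows = []
    for y in range(height):
        row = ""
        for x in range(width):
            inside = ((x - cx) / rx) ** 2 + ((y - cy) / ry) ** 2 <= 1.0
            # The eyelid is a straight line; anything above it is hidden.
            y_lid = lid * height + slope * (x - cx)
            row += "#" if inside and y >= y_lid else "."
        rows.append(row)
    return rows


if __name__ == "__main__":
    for name, kwargs in [
        ("neutral", {}),
        ("sleepy", {"lid": 0.5}),
        ("slanted", {"lid": 0.3, "slope": 0.2}),
    ]:
        print(name)
        print("\n".join(render_eye(**kwargs)))
        print()
```

Mirroring the sign of `slope` for the other eye gives a matched pair, and because every shape is drawn from the same center, the eye stays in one place when the expression changes.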

However, we realized that the eyes alone are not enough to show sentiment. The shape of the mouth is also important. Therefore, we decided to buy one screen for the entire face, which would display eyes, nose, and mouth so that we could alter the shape of the eyes and mouth to show sentiment. I ordered the display and it should arrive next week.

On Friday, I switched gears to writing code for moving the motors on the Raspberry Pi. Unfortunately, it took some time to set up an environment where I could work with the Raspberry Pi since I didn’t have a mouse at home. I wrote some basic code to move the motors.

Next week, I will test the motor code, integrate Ashika’s code with the text display, and work on the head display when it comes in. I am waiting to get the head display before I cut out the cardboard pieces for the head so that I have the correct dimensions for the display cutout first.