Design review slides: Team_D2_-_Mon_11_15

Design review document: Design_Review_Project_Report

Carnegie Mellon ECE Capstone, Spring 2019 – Olivia Xu, Haohan Shi, Yanying Zhu

Our USB microphone, speaker, and RPi camera arrived this week. I mainly worked on the following tasks:

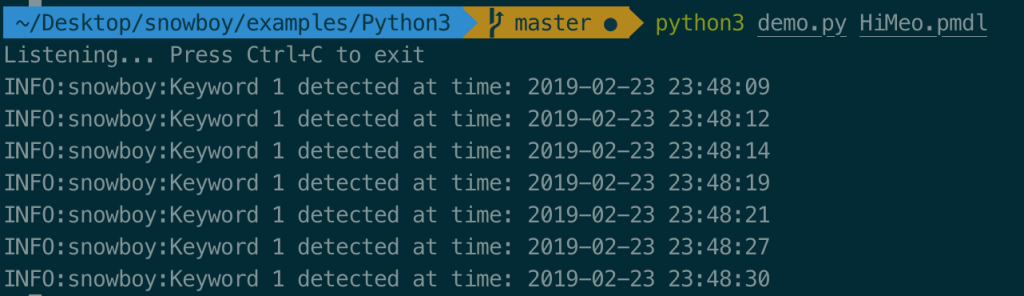

My goal for next week is to start adding command recognition to our system and to ask for more input samples for our hotword detection model.

We are currently on schedule: camera input and speaker and microphone input/output are correctly configured, and our movement control is in progress.

We encountered a dead Raspberry Pi this week. Luckily we still have a backup RPi, but we may consider ordering one more, which is something we need to take care of in our project management.

Our Pololu robot kit and Raspberry Pi arrived this Tuesday, so my major accomplishment this week is:

My goal for next week is to implement hotword detection on the RPi once the microphone and speaker arrive, and to start designing the block diagram of our complete system for the design review. The diagram will cover what the RPi and the Pololu should each do for different tasks, when and how communication between the two occurs, and the requirements for each part of the system.

We are currently on schedule: this week we started working on the actual implementation of movement control, such as edge and obstacle detection, and hotword detection, the basic functionality of our control system, is ready to test on the RPi.

Our main concern this week is the computation power of the RPi, especially for graphics computation. After some research on facial detection and video recording examples on the RPi, we noticed that the video framerate can be as low as 7-8 FPS when OpenCV with facial recognition is running. Because the RPi also has many other functionalities and components to take care of, we need to pay attention to the CPU usage of each component.
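One way to keep an eye on this is to time each per-frame processing step on the RPi itself. The snippet below is only a minimal sketch of such a measurement, not our actual code: `profile_pipeline` and its arguments are hypothetical names, and `process` stands in for whatever per-frame work is being measured (for example, an OpenCV face detection call on a camera frame).

```python
import time
from typing import Callable, Dict, Iterable, TypeVar

Frame = TypeVar("Frame")


def profile_pipeline(
    frames: Iterable[Frame],
    process: Callable[[Frame], object],
) -> Dict[str, float]:
    """Run `process` on every frame and report throughput and CPU load.

    Hypothetical helper: `frames` can be any iterable of frames and
    `process` any per-frame function we want to measure.
    """
    count = 0
    wall_start = time.perf_counter()  # wall-clock time
    cpu_start = time.process_time()   # CPU time used by this process

    for frame in frames:
        process(frame)
        count += 1

    wall_elapsed = time.perf_counter() - wall_start
    cpu_elapsed = time.process_time() - cpu_start

    if wall_elapsed <= 0:
        return {"fps": 0.0, "cpu_fraction": 0.0}

    return {
        # Average frames processed per second of wall-clock time.
        "fps": count / wall_elapsed,
        # CPU time divided by wall time; can exceed 1.0 if the
        # process keeps more than one core busy.
        "cpu_fraction": cpu_elapsed / wall_elapsed,
    }


if __name__ == "__main__":
    # Stand-in workload: pretend each frame takes about 10 ms to process.
    stats = profile_pipeline(range(20), lambda _frame: time.sleep(0.01))
    print(f"{stats['fps']:.1f} FPS, CPU fraction {stats['cpu_fraction']:.2f}")
```

Running the same helper once per component (camera capture, face detection, hotword detection) would give a rough per-component picture of where the RPi's CPU time goes.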