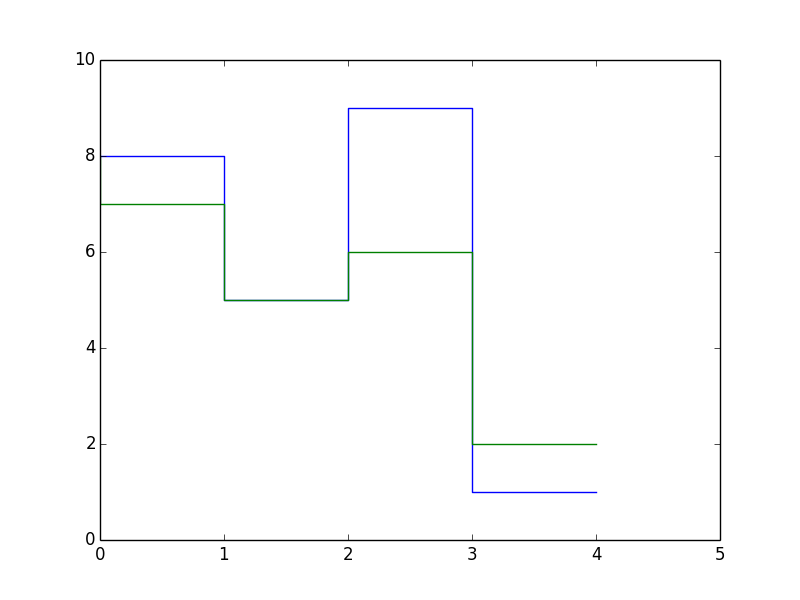

This week I implemented a version of the algorithm using dynamic time warping (DTW). The code takes in vocal input and ranks pairs of recordings by how similar they are. To test it out, I recorded myself singing the same song twice and a second, totally different song once. In the ranked output, the two takes of the same song were the most similar pairing out of all pairings of the 3 recordings. That is:

1: song 1

2: song 1

3: song 2

The pair 1-2 was deemed more similar than the pairs 1-3 and 2-3.

This was great to see because it is a simple working version of the app. Imagining 2 and 3 to be the library and 1 to be the vocal input, we were able to see 1 matched to the right song in the library.
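The test above can be sketched roughly as follows. This is a minimal illustration, not the project's actual code: it assumes each recording has already been reduced to a one-dimensional pitch contour, and the function names `dtw_distance` and `rank_library` as well as the note values are made up for the example.

```python
import math


def dtw_distance(a, b):
    """Textbook DTW distance between two 1-D sequences (absolute-difference cost)."""
    n, m = len(a), len(b)
    # cost[i][j] = minimal accumulated cost of aligning a[:i] with b[:j]
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of the three allowed predecessor alignments.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def rank_library(query, library):
    """Return (name, distance) pairs sorted from most to least similar."""
    scored = [(name, dtw_distance(query, seq)) for name, seq in library.items()]
    return sorted(scored, key=lambda item: item[1])


# Hypothetical pitch contours (MIDI note numbers), invented for illustration.
take_1 = [60, 60, 62, 64, 64, 62, 60]   # recording 1: song 1
take_2 = [60, 62, 62, 64, 62, 62, 60]   # recording 2: song 1, different timing
other = [67, 65, 64, 60, 59, 57, 55]    # recording 3: song 2

# Treat recording 1 as the vocal input and recordings 2 and 3 as the library.
ranking = rank_library(take_1, {"song 1 (take 2)": take_2, "song 2": other})
for name, distance in ranking:
    print(name, distance)
```

Because DTW is allowed to stretch and compress time, the two takes of the same melody align closely even though the notes are held for different lengths, while the different song accumulates a much larger cost.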

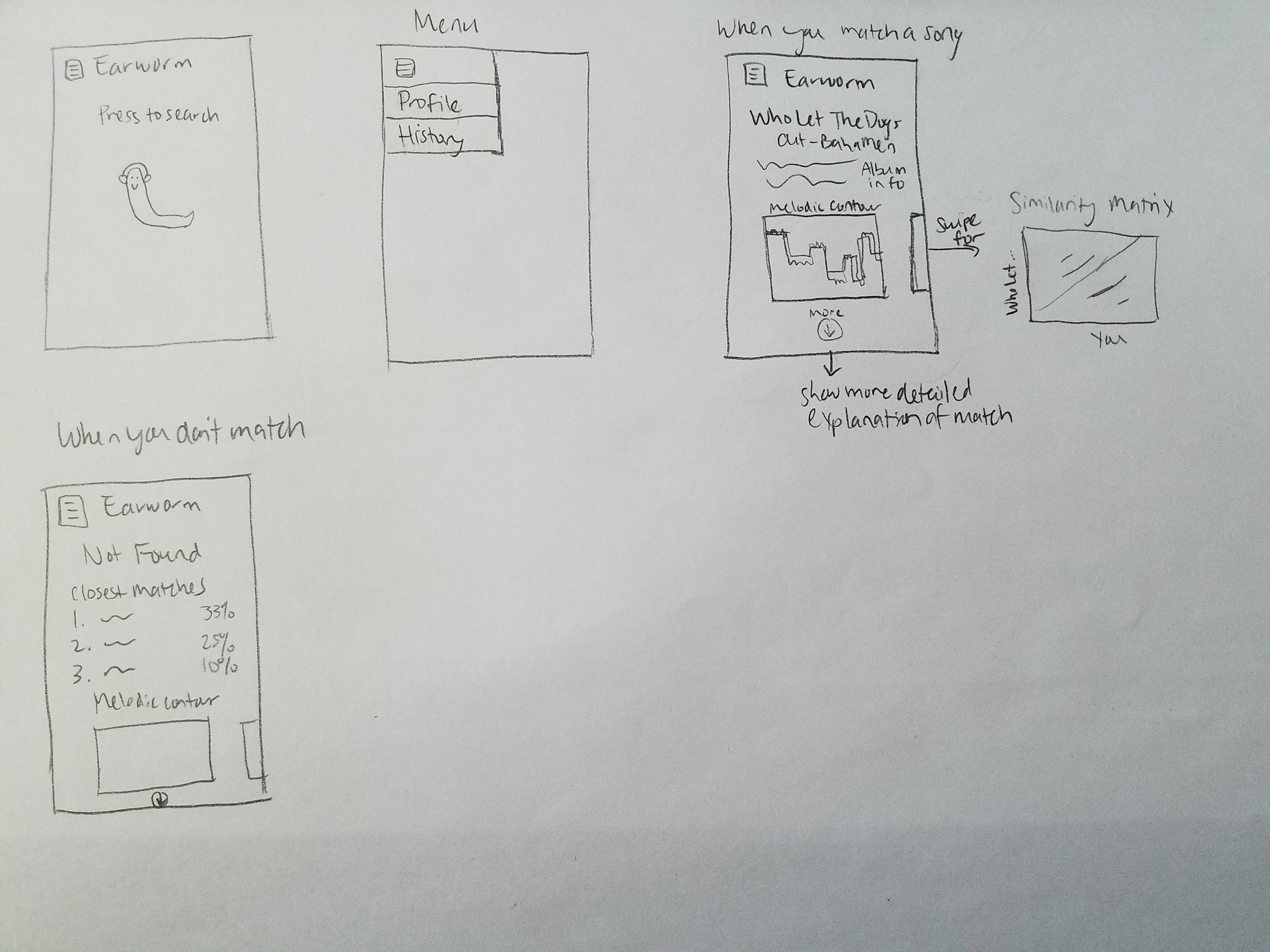

The next steps include:

- Converting the library into processed MIDIs in the same format as the vocal input I am extracting now

- Seeing how well the ranking system works when the library is expanded and the number of comparisons grows into the hundreds. We might need to process the model at that point.

I believe that if next week I can begin the debugging step as described above, we should be in great shape.
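On the scaling concern in the next steps: full DTW costs time proportional to the product of the two sequence lengths for every comparison. One common way to cut that down, which we have not tried yet, is to restrict the warping path to a band around the diagonal (a Sakoe-Chiba band). The sketch below is an assumption about how that could look; `dtw_banded` and its `window` parameter are hypothetical names, and this version still allocates the full cost table, so it saves time but not memory.

```python
import math


def dtw_banded(a, b, window):
    """DTW distance where cell (i, j) is only visited if |i - j| <= window."""
    n, m = len(a), len(b)
    # The band must be at least as wide as the length difference,
    # otherwise the final cell (n, m) can never be reached.
    window = max(window, abs(n - m))
    cost = [[math.inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        lo = max(1, i - window)
        hi = min(m, i + window)
        for j in range(lo, hi + 1):
            d = abs(a[i - 1] - b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


# Made-up sequences: the same shape, with the repeated note in a different place.
a = [1, 1, 2, 3]
b = [1, 2, 3, 3]
print(dtw_banded(a, b, 0))    # no warping allowed: plain point-by-point comparison
print(dtw_banded(a, b, 10))   # band wider than the sequences: same as full DTW
```

The trade-off is that a narrow band is faster but can overestimate the distance when the singer's timing drifts far from the reference, so the band width would need to be tuned against real recordings.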

TEAM UPDATE:

- What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

- The biggest risk is that the system is too slow and not very accurate. Some naive contingency plans include keeping the library fairly small and not attempting a weighted average of the similarity matrices.

- Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

- So far, no changes have been made to the design; the proof-of-concept code we have seems to be working correctly. Next week, as we begin to debug situations that resemble the actual production environment, we might need to make changes.

- Provide an updated schedule if changes have occurred.

- No changes.