Final Presentation Slides

Status Report: Nolan

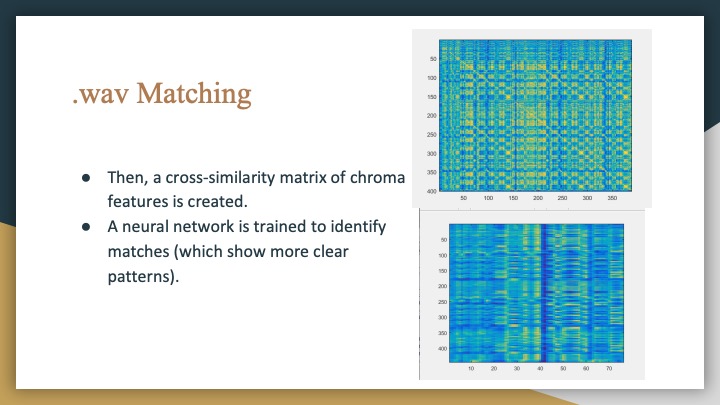

The neural network is trained on our database of 63 songs (these were chosen fairly arbitrarily; I went on a youtubeToWav converter downloading spree).

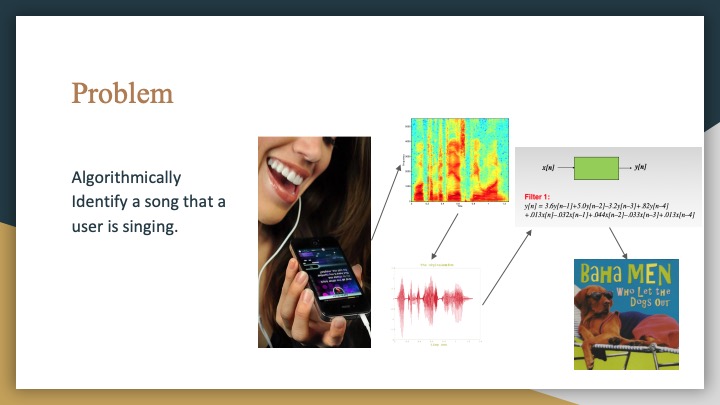

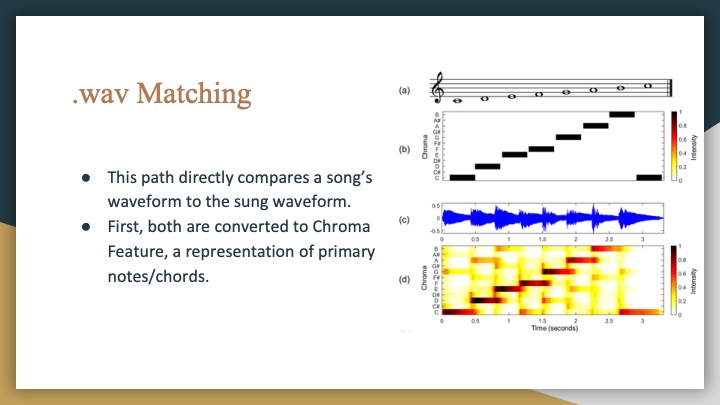

To reiterate, the model is as follows: a song is recorded in .wav format, then converted into a chroma feature (actually CENS, which adds some more normalization and smoothing). This CENS is used to produce a cross-similarity matrix against the CENS of every song in the model’s database. Each matrix is classified (the classifier outputs the probability that a matrix represents a match), and the songs are ranked by match probability, highest first. Currently, the network’s mean squared error is about 1.72%.
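As a rough illustration of the matching step, here is a minimal sketch in plain Python. It assumes each song is already a list of chroma-like frames, uses cosine similarity as the frame comparison (the post does not say which measure is used), and substitutes a toy scoring function, `mean_best_match`, for the trained classifier. All function names are hypothetical.

```python
import math

def cosine_similarity(u, v):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # treat an all-zero (silent) frame as matching nothing
    return dot / (norm_u * norm_v)

def cross_similarity(query_frames, song_frames):
    """Matrix S where S[i][j] compares query frame i with song frame j."""
    return [[cosine_similarity(q, s) for s in song_frames] for q in query_frames]

def mean_best_match(matrix):
    """Toy stand-in for the classifier (assumption): average of each row's best score."""
    return sum(max(row) for row in matrix) / len(matrix)

def rank_songs(query_frames, database, match_probability):
    """Score every song and return (title, score) pairs, best match first.

    database: dict mapping song title -> list of feature frames.
    match_probability: callable mapping a matrix to a score in [0, 1].
    """
    scored = [
        (title, match_probability(cross_similarity(query_frames, frames)))
        for title, frames in database.items()
    ]
    return sorted(scored, key=lambda item: item[1], reverse=True)
```

Calling `rank_songs(query, database, mean_best_match)` returns the titles ordered from most to least likely match; in the real system the last argument would be the trained network's prediction function.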

Before the demo, I’m cleaning up the integration and making sure that everything connects smoothly with the visualization webapp and with Anja’s dynamic time warping. Since my neural network is in Python/Keras and my preprocessing is in MATLAB, I’m using the MATLAB Engine API for Python to integrate the two.
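The Python-to-MATLAB bridge might look roughly like the sketch below. The MATLAB-side function name `compute_cens` is hypothetical, as is the assumption that it takes a file path and returns a matrix. The engine object is passed in so the wrapper stays independent of how the session is started.

```python
def cens_from_wav(engine, wav_path):
    """Call a MATLAB preprocessing function and return plain Python lists.

    engine: a session object, e.g. from matlab.engine.start_matlab().
    compute_cens: hypothetical MATLAB function name (an assumption, not
        taken from the project), expected to return a 2-D numeric array.
    """
    result = engine.compute_cens(wav_path, nargout=1)
    # The returned array is iterated row by row; copy it into nested lists
    # so downstream Python code does not depend on MATLAB types.
    return [list(row) for row in result]

# Typical use, assuming MATLAB and its Python engine package are installed:
#   import matlab.engine
#   eng = matlab.engine.start_matlab()
#   cens = cens_from_wav(eng, "query.wav")
#   eng.quit()
```

Starting a MATLAB session is slow, so a server would likely start one engine at launch and reuse it across requests rather than starting one per query.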

Anja Status Report #11 Last one

The pitch contours are looking fairly good. I cleaned them further by removing spurious troughs in the contour: I took a max-series and then removed the spurious peaks.
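One possible reading of this cleanup, sketched under assumptions: a rolling maximum followed by a rolling minimum fills troughs narrower than the window, and the reverse order then removes narrow peaks. This is a standard closing/opening filter pair swapped in for illustration, not necessarily the exact procedure used here; the `radius` parameter and function names are hypothetical.

```python
def rolling(values, radius, fn):
    """Apply fn (e.g. max or min) over a centered window, truncated at the edges."""
    return [
        fn(values[max(0, i - radius): i + radius + 1])
        for i in range(len(values))
    ]

def fill_troughs(contour, radius):
    """Rolling max then rolling min: fills short dips, keeps peaks."""
    return rolling(rolling(contour, radius, max), radius, min)

def remove_peaks(contour, radius):
    """Rolling min then rolling max: removes short spikes, keeps dips."""
    return rolling(rolling(contour, radius, min), radius, max)

def clean_contour(contour, radius):
    """Fill spurious troughs first, then strip spurious peaks."""
    return remove_peaks(fill_troughs(contour, radius), radius)
```

With `radius=1`, a single-sample dip or spike in an otherwise flat contour is flattened, while features wider than the window survive.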

To improve the results, I have been fine-tuning the analysis. I have considered options other than just DTW:

For every alignment that looks really good, the autocorrelation would be completely symmetric, since the stretched function would look just like the one it is matched against. So if I could instead get a measure of the symmetry, or rather the skewness, of the autocorrelation, then however much stretching was needed (even if it were a lot), a very close alignment would still show up as the optimal match.
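A minimal sketch of such a symmetry measure, assuming the correlation in question is taken between the stretched query and the database contour (so that a perfect alignment reduces it to a true autocorrelation, which is mirror-symmetric about lag 0). The normalization and the names `cross_correlation` and `asymmetry` are illustrative choices, not taken from the project.

```python
def cross_correlation(x, y, max_lag):
    """Return {lag: sum over t of x[t] * y[t + lag]} for lags in [-max_lag, max_lag]."""
    result = {}
    for lag in range(-max_lag, max_lag + 1):
        total = 0.0
        for t, xv in enumerate(x):
            j = t + lag
            if 0 <= j < len(y):
                total += xv * y[j]
        result[lag] = total
    return result

def asymmetry(x, y, max_lag):
    """0.0 when the correlation is mirror-symmetric about lag 0; larger means more skewed."""
    r = cross_correlation(x, y, max_lag)
    diff = sum(abs(r[k] - r[-k]) for k in range(1, max_lag + 1))
    scale = sum(abs(v) for v in r.values())
    return diff / scale if scale else 0.0
```

Comparing a contour with itself gives an asymmetry of exactly zero, while comparing it with a shifted or poorly aligned copy gives a positive value, so candidate alignments could be ranked by this score.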

Besides the analysis, I have considered the complexity as well. Currently a match takes about 3 minutes against a five-song database, so this will need to be sped up considerably. I am going to play around with the hop size for the DTW windowing. I have already bucketized the database contours and the query contours by about 0.1%, which seems to be the largest bucket size that still preserves information.
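To make the speed trade-off concrete, here is a minimal sketch of windowed DTW with a hop size, plus a simple bucketizing step. It assumes contours are lists of numeric pitch values and uses absolute difference as the local cost; the function names and the fixed window length are illustrative assumptions.

```python
def quantize(contour, step):
    """Bucketize: snap each value to the nearest multiple of step."""
    return [round(v / step) * step for v in contour]

def dtw_distance(a, b):
    """Textbook dynamic time warping cost, O(len(a) * len(b)) time."""
    inf = float("inf")
    prev = [0.0] + [inf] * len(b)  # row 0 of the cost table
    for x in a:
        curr = [inf] * (len(b) + 1)
        for j, y in enumerate(b, start=1):
            cost = abs(x - y)
            # extend the cheapest of: insertion, deletion, match
            curr[j] = cost + min(prev[j], curr[j - 1], prev[j - 1])
        prev = curr
    return prev[len(b)]

def best_window(query, song, window, hop):
    """Slide a window over the song in steps of hop; return (start, cost) of the best one."""
    best = (None, float("inf"))
    for start in range(0, max(1, len(song) - window + 1), hop):
        cost = dtw_distance(query, song[start:start + window])
        if cost < best[1]:
            best = (start, cost)
    return best
```

The number of DTW evaluations per song is roughly the song length divided by the hop, so doubling the hop halves the work, at the risk of stepping over the best-aligned segment.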

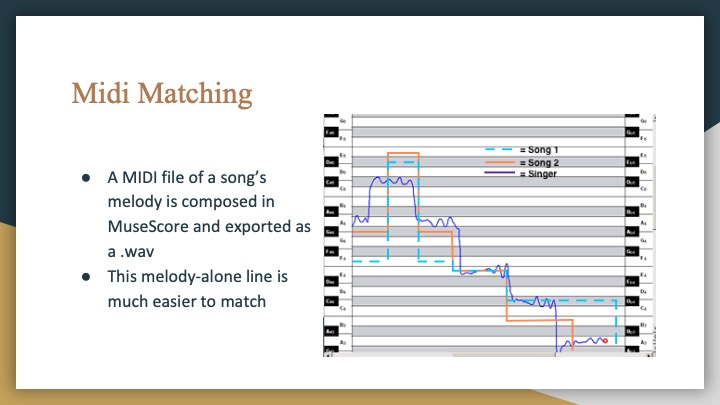

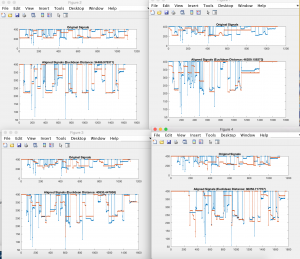

Pictured above are some alignment plots. The blue curve is the sung query, and the other curve is the contour of a song in the database, over the segment of the song that seemed closest to what was sung.

Overall, I’m still going to be improving accuracy and speed until the demo.

Wenting: The Final Status Report

I’ve made a ton of progress since last week. As mentioned last week, I was looking for a library to capture audio, and it took a lot of digging to find one that saves .wav files, which is the audio format used for the backend processing. I had never worked with React before this, so it has been a big learning experience.

I’ve gotten almost all the features for the web app implemented. The user can record a clip that is then posted to a Flask server and saved. The file is then passed to MATLAB for processing, and the results (melodic contours, matched song titles) are returned to the frontend for rendering. My last task is to get the results to display at the correct time. I have put together the design for the display and just need it to trigger at the right time (i.e., when the processing is complete).

Public demo is in 2 days so all I can say is it’ll be done by then. 🙂