This week I began testing our training data against the data we are getting from our microphone. I computed the cosine similarity between the frequency magnitude spectra of the two sound files and found that the spectra are closely matched, with a cosine similarity of 0.87. I also began designing our testing and validation setup, which aims to mimic a human heart. I found that we would not need a gel and that playing the sounds through a speaker would be sufficient.
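The spectrum comparison described above can be sketched in NumPy. This is an illustration under stated assumptions, not the exact script used for the 0.87 result: it assumes both recordings share one sample rate, zero-pads the shorter signal so the FFT magnitude vectors line up bin for bin, and uses synthetic tones instead of the real files.

```python
import numpy as np


def spectrum_cosine_similarity(a, b, n_fft=None):
    """Cosine similarity between the FFT magnitude spectra of two signals.

    Both signals are zero-padded (or truncated) to n_fft samples so the spectra
    line up bin for bin; n_fft defaults to the longer signal's length.
    Assumes both signals were recorded at the same sample rate.
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    n = n_fft or max(len(a), len(b))
    mag_a = np.abs(np.fft.rfft(a, n=n))
    mag_b = np.abs(np.fft.rfft(b, n=n))
    return float(mag_a @ mag_b / (np.linalg.norm(mag_a) * np.linalg.norm(mag_b)))


# Synthetic check (not the project's recordings): 1 s of audio at 4 kHz.
fs = 4000
t = np.arange(fs) / fs
tone = np.sin(2 * np.pi * 60 * t)
tone_delayed = np.sin(2 * np.pi * 60 * t + 1.0)  # same frequency, different phase
other = np.sin(2 * np.pi * 300 * t)

sim_same = spectrum_cosine_similarity(tone, tone_delayed)  # close to 1
sim_diff = spectrum_cosine_similarity(tone, other)         # close to 0
print(sim_same, sim_diff)
```

Because only magnitudes are compared, the score ignores phase, so the two recordings do not need to be time-aligned. It does depend on matching sample rates: at different rates the same physical frequency lands in different FFT bins.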

Team – Week 3 Status Report

Ryan – Week 3 Status Report

Ari – Week 3 Status Report

This week, I transferred the signal from our stethoscope to a computer, visualized it using an oscilloscope, and made recordings of the sounds the microphone produced.

My goal for next week is to apply the algorithm that Eri and Ryan are creating and see how our microphone recordings compare to our testing data. I also want to think more about our testing process and how we can improve it.

Journal Entry Week 3: Eri

- What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

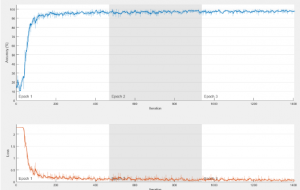

- This week, I finished removing noise from the signal. I built on what I did last week, but instead of working only with the amplitude, I found the peaks of the Shannon energy, since it emphasizes medium-intensity signals more effectively while also attenuating high- and low-energy signals.

- I was also able to classify each peak as S1 or S2, since S1 heart sounds have lower frequency content than S2 heart sounds.

- One problem I ran into with the Shannon energy was that it sometimes completely removed low-intensity signals that were not actually noise.

- Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

Progress is slightly behind schedule, since I was hoping to have clean, segmented, and filtered data to work with by the end of this week. So far my algorithm can identify where S1 and S2 are, but it does not yet segment five full beats. I believe this next step will not be difficult, since the algorithm just needs to start collecting data at the first S1 and stop at the fifth S2. I will finish it by the end of tomorrow, and our team will hopefully be back on schedule.

- What deliverables do you hope to complete in the next week?

- Realistically, since I have several midterms next week, I do not think I will have a working algorithm that classifies heart sounds as normal or abnormal yet. This week, however, I will research SVM algorithms further and start experimenting with SVM classification on the segmented and filtered clean data.
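The Shannon energy, S1/S2 labeling, and five-beat segmentation steps in this entry can be sketched as one small pipeline. This is a minimal NumPy sketch, not Eri's actual code: the envelope uses the standard Shannon energy E = -x² log x² on a normalized signal, smoothed by a moving average, but the 20 ms smoothing window, the greedy peak picker with a 200 ms minimum spacing, and the spectral-centroid rule for telling S1 (lower frequency) from S2 (higher frequency) are all hypothetical choices. The demo signal is synthetic.

```python
import numpy as np


def shannon_energy_envelope(x, fs, win_s=0.02):
    """Smoothed Shannon energy envelope, E = -x^2 * log(x^2)."""
    x = np.asarray(x, dtype=float)
    x = x / (np.max(np.abs(x)) + 1e-12)  # normalize to [-1, 1]
    x2 = x ** 2
    energy = -x2 * np.log(x2 + 1e-12)  # small for both near-silent and full-scale samples
    win = max(1, int(win_s * fs))
    return np.convolve(energy, np.ones(win) / win, mode="same")  # moving-average smoothing


def pick_peaks(env, fs, min_gap_s=0.2, rel_height=0.3):
    """Local maxima above rel_height * max(env), kept tallest-first at least min_gap_s apart."""
    is_max = (env[1:-1] > env[:-2]) & (env[1:-1] >= env[2:]) & (env[1:-1] > rel_height * env.max())
    candidates = np.flatnonzero(is_max) + 1
    min_gap = int(min_gap_s * fs)
    kept = []
    for i in candidates[np.argsort(env[candidates])[::-1]]:
        if all(abs(i - k) >= min_gap for k in kept):
            kept.append(int(i))
    return np.array(sorted(kept), dtype=int)


def spectral_centroid(seg, fs):
    """Magnitude-weighted mean frequency of a Hann-windowed segment."""
    mag = np.abs(np.fft.rfft(seg * np.hanning(len(seg))))
    freqs = np.fft.rfftfreq(len(seg), d=1.0 / fs)
    return float(np.sum(freqs * mag) / (np.sum(mag) + 1e-12))


def label_s1_s2(x, peaks, fs, half_win_s=0.04):
    """Hypothetical rule: lower-centroid peaks are S1, higher-centroid peaks are S2."""
    if len(peaks) == 0:
        return []
    half = int(half_win_s * fs)
    cents = np.array([spectral_centroid(x[max(0, p - half):p + half], fs) for p in peaks])
    cutoff = (cents.min() + cents.max()) / 2  # midpoint between lowest and highest centroid
    return ["S1" if c < cutoff else "S2" for c in cents]


def first_five_beats(x, peaks, labels, fs, pad_s=0.05):
    """Cut x from the first S1 to the fifth S2 after it (None if there are too few beats)."""
    s1 = [p for p, lab in zip(peaks, labels) if lab == "S1"]
    if not s1:
        return None
    s2 = [p for p, lab in zip(peaks, labels) if lab == "S2" and p > s1[0]]
    if len(s2) < 5:
        return None
    pad = int(pad_s * fs)
    return x[max(0, s1[0] - pad):s2[4] + pad]


# Synthetic demo: six beats at 75 bpm; S1 = 50 Hz burst, S2 = 150 Hz burst (made-up values).
fs = 2000
x = np.zeros(5 * fs)
n = int(0.06 * fs)
burst = np.hanning(n)
for k in range(6):
    for onset_s, f0 in ((0.2 + 0.8 * k, 50.0), (0.5 + 0.8 * k, 150.0)):
        i = int(round(onset_s * fs))
        x[i:i + n] += burst * np.sin(2 * np.pi * f0 * np.arange(n) / fs)

env = shannon_energy_envelope(x, fs)
peaks = pick_peaks(env, fs)
labels = label_s1_s2(x, peaks, fs)
segment = first_five_beats(x, peaks, labels, fs)
print(len(peaks), labels[:4], len(segment) / fs)
```

On real recordings the fixed thresholds would likely need tuning. In particular, quiet heart sounds that the Shannon energy suppresses may fall below `rel_height` and be missed, which is the same failure mode noted in this entry.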

Team – Week 2 Status Report

Ryan – Week 2 Status Report

At the beginning of the week, Eri and I once again worked together on reducing noise in the heart sound test data. We began by applying a band-pass filter to remove the high- and low-frequency noise outside the range of human hearing (roughly 20 Hz to 20 kHz). I’ve attached the original heart sound here:

Then here is the audio file after filtering out those frequencies:

As you can hear, the background noise was reduced, so the S1 and S2 beats are more audible and easier to analyze. We still had an issue with sounds caused by scuffling the mic, which fall within the same frequency range; we will have to either do further noise reduction or eliminate those portions of the audio before analyzing whether an abnormality exists.

I then decided to help design the actual ML algorithm to classify the data, because I’ve worked with Convolutional Neural Networks (CNNs) in my class, and using one would eliminate the need to segment the data: convolution layers detect a pattern wherever it occurs in time, and pooling makes the output largely invariant to time shifts. The structure of my network is this:

I was able to achieve 99.5% accuracy classifying images of handwritten digits, so next I need to test this structure's accuracy on the audio files. I plan to test this first thing tomorrow and will have numerical results in the next weekly update. Of course, the structure will have to change to optimize the results for this dataset.
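The time-shift argument for using a CNN can be illustrated with a toy NumPy example. This is not the network described in this report (its structure is not reproduced here); it uses four random, untrained filters and a synthetic "event" standing in for a heart sound. Strictly, a convolution layer is shift-equivariant (its output moves with the input), and it is a pooling step, here global max pooling, that makes the final features shift-invariant.

```python
import numpy as np


def conv1d_valid(x, kernel):
    """'Valid' 1-D cross-correlation, the operation a CNN conv layer computes."""
    k = len(kernel)
    return np.array([np.dot(x[i:i + k], kernel) for i in range(len(x) - k + 1)])


def conv_relu_global_max(x, kernels):
    """Toy CNN front end: conv -> ReLU -> global max pool, one feature per kernel."""
    return np.array([np.maximum(conv1d_valid(x, w), 0.0).max() for w in kernels])


rng = np.random.default_rng(0)
kernels = rng.standard_normal((4, 9))  # 4 random (untrained) filters of width 9
event = rng.standard_normal(9)         # stand-in for a heart-sound pattern

early = np.zeros(200)
early[30:39] = event                   # event near the start of the clip
late = np.zeros(200)
late[120:129] = event                  # same event, 90 samples later

# The conv output shifts with the input (equivariance) ...
conv_early = conv1d_valid(early, kernels[0])
conv_late = conv1d_valid(late, kernels[0])
# ... while global max pooling discards the position (invariance).
f_early = conv_relu_global_max(early, kernels)
f_late = conv_relu_global_max(late, kernels)
print(f_early, f_late)
```

With strided pooling, or events that are cut off at the edges of a clip, the invariance is only approximate, so a trained network may still show some sensitivity to where a beat falls.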

We are now on schedule because we were able to remove noise from the sound file and eliminate the need to segment the heart sounds. In the future, we still want to test segmentation followed by Support Vector Machine classification, to compare its accuracy with these results.

Ari – Week 2 Status Report

Status Report #2

Arihant Jain

Team A4 (Smart Stethoscope)

My task for the week was to work on the prototype of the physical stethoscope device: wiring it together with the parts we received and testing the sound on a speaker.

- What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

- This week, I received the parts I had ordered last week, started connecting them, and tested the audio signal with an oscilloscope. I then set up the wiring to route the signal to a 3.5 mm output so that we could listen to the microphone stethoscope system on a speaker.

- Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

- Progress on track!

- What deliverables do you hope to complete in the next week?

- We have a working prototype; now we need to see how closely we can match its sound quality to our testing data.

Eri: Journal Entry Week 2

This week I worked on removing noise from the heart sound data. I did this by first finding the average height of all the peaks and then removing unusually high or low amplitudes. Below is the graph of the original heart sound, along with the heart sound after the loud and quiet noises are removed.
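One possible reading of the "average of the peaks, then remove extreme amplitudes" step is sketched below. It is a hypothetical interpretation, not the code behind the graph: "peaks" are taken to be local maxima of |x|, and the `high` and `low` multipliers of the mean peak height are made-up defaults. Samples outside that band are set to zero.

```python
import numpy as np


def remove_amplitude_outliers(x, high=2.0, low=0.1):
    """Zero samples whose |x| is far above or below the mean peak height.

    "Peaks" are local maxima of |x|; `high` and `low` are hypothetical
    multipliers, not values from the report. Returns (cleaned, mean_peak).
    """
    x = np.asarray(x, dtype=float)
    mag = np.abs(x)
    is_peak = (mag[1:-1] > mag[:-2]) & (mag[1:-1] >= mag[2:])
    if not is_peak.any():
        return x.copy(), 0.0
    mean_peak = float(mag[1:-1][is_peak].mean())
    # keep only samples whose magnitude lies within [low, high] * mean peak height
    keep = (mag >= low * mean_peak) & (mag <= high * mean_peak)
    return np.where(keep, x, 0.0), mean_peak


# Demo: five cycles of a unit sine with one loud click (e.g. a bump against the mic).
k = np.arange(400)
x = np.sin(2 * np.pi * 5 * k / 400)
x[150] = 10.0
cleaned, mean_peak = remove_amplitude_outliers(x)
print(mean_peak, cleaned[150])
```

Two caveats of this simple version: a large spike inflates the mean (a median would be more robust), and the sample-level low threshold also zeroes quiet samples near zero crossings inside real beats, so a frame-based variant may distort the heart sounds less.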

Next, I used a Fourier transform to represent the heart sound in the frequency domain. I then removed all frequencies outside the range of 20 Hz to 150 Hz, the range of human heart sounds, using a low-pass and a high-pass filter. I also experimented with other algorithms, such as a moving average; however, it was very slow to compute and did not remove the noise as well as desired.

I am currently on schedule, since my goal was to finish the denoising algorithm. Although it does not yet remove all of the noise, it is close. This coming week I will work on segmenting the heart sound into five full lub-dub beats using deep learning. I researched which algorithms work best this week, but I have not decided which one to move forward with yet.
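The Fourier-domain filtering step in this entry (keeping only 20 Hz to 150 Hz) can be sketched as a single FFT mask, which acts as the high-pass and the low-pass at once. This is a minimal NumPy sketch under the assumption of a simple "brick-wall" mask; the function name and defaults are illustrative, and the test signal is synthetic.

```python
import numpy as np


def fft_bandpass(x, fs, low_hz=20.0, high_hz=150.0):
    """Zero every FFT bin outside [low_hz, high_hz] and transform back.

    Equivalent to a high-pass at low_hz plus a low-pass at high_hz.
    Assumption: an ideal "brick-wall" mask, which can ring near sharp transients.
    """
    x = np.asarray(x, dtype=float)
    spectrum = np.fft.rfft(x)
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    spectrum[(freqs < low_hz) | (freqs > high_hz)] = 0.0
    return np.fft.irfft(spectrum, n=len(x))


# Synthetic check: 5 Hz drift + 60 Hz "heart" tone + 400 Hz hiss, 1 s at 1 kHz.
fs = 1000
t = np.arange(fs) / fs
x = np.sin(2 * np.pi * 5 * t) + np.sin(2 * np.pi * 60 * t) + np.sin(2 * np.pi * 400 * t)
y = fft_bandpass(x, fs)  # only the 60 Hz component should survive
print(np.max(np.abs(y - np.sin(2 * np.pi * 60 * t))))
```

A brick-wall mask is simple and fast (one FFT and one inverse FFT), but its sharp cutoff can add ringing around abrupt sounds; a smoother filter such as a Butterworth band-pass is a common alternative if that becomes a problem.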