Since last week, we have all been working on integrating the ML portion with the Raspberry Pi. Although the code ran well on Ari’s local computer, there were a lot of module import issues on the Pi. Therefore, this week I worked on fixing the ALSA audio issues on the Pi so that we can record directly from the stethoscope.

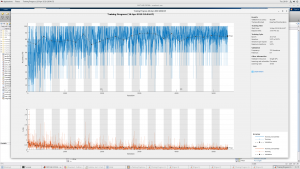

Aside from fixing the import issues on the Pi, I also used a confusion matrix to check the sensitivity and specificity of the ML classifier on our test sounds. Eri and I initially found that only 65% of our abnormal test sounds were being classified as abnormal, so we decided to add more abnormal heart sounds to the training data, because the dataset previously contained far more normal heart sounds than abnormal ones. After we fixed this, we saw roughly an 85% true positive rate and an 85% true negative rate, which is what our specifications originally aimed for. The overall validation accuracy was 89%.
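For reference, here is a minimal sketch of how sensitivity (true positive rate) and specificity (true negative rate) fall out of a confusion matrix. It is not our actual evaluation code: the function names, the `"abnormal"`/`"normal"` label strings, and the tiny label lists are all made up for illustration.

```python
def confusion_counts(y_true, y_pred, positive="abnormal"):
    """Count TP, FP, TN, FN, treating `positive` as the positive class."""
    tp = fp = tn = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if truth == positive and pred == positive:
            tp += 1  # abnormal sound correctly flagged
        elif truth == positive:
            fn += 1  # abnormal sound missed
        elif pred == positive:
            fp += 1  # normal sound wrongly flagged
        else:
            tn += 1  # normal sound correctly passed
    return tp, fp, tn, fn


def sensitivity_specificity(y_true, y_pred, positive="abnormal"):
    """Return (sensitivity, specificity) as fractions between 0 and 1."""
    tp, fp, tn, fn = confusion_counts(y_true, y_pred, positive)
    sensitivity = tp / (tp + fn) if (tp + fn) else float("nan")
    specificity = tn / (tn + fp) if (tn + fp) else float("nan")
    return sensitivity, specificity


if __name__ == "__main__":
    # Hypothetical labels, NOT our project data.
    y_true = ["abnormal"] * 4 + ["normal"] * 4
    y_pred = ["abnormal", "abnormal", "abnormal", "normal",
              "normal", "normal", "normal", "abnormal"]
    sens, spec = sensitivity_specificity(y_true, y_pred)
    print(f"sensitivity={sens:.2f}, specificity={spec:.2f}")
```

Looking at the two rates separately is what exposed the class imbalance: overall accuracy can look fine while most of the errors are concentrated in the smaller (abnormal) class.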

For next week, we as a team have to finish the integration portion and ensure that it also works with the speaker, using a double-blind experiment.