This week, our team's focus has been on establishing the requirements for our upcoming demo. We hope that by setting up our project for this demo, we will also build a platform for testing our project's parameters and evaluating the results.

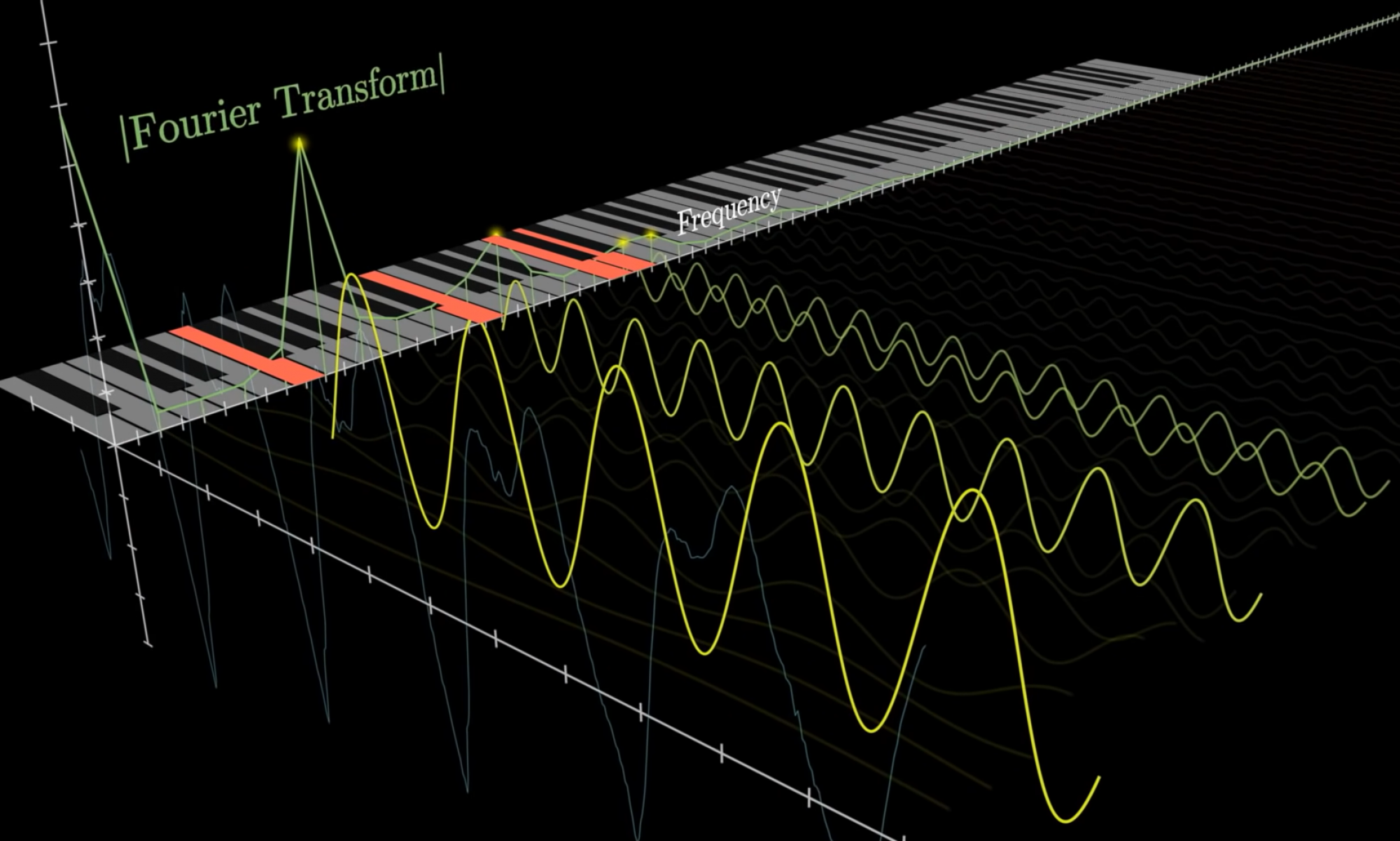

For our demo, we hope to have a web app that lets users record audio and send it to the audio processing module. The audio processing applies the Fourier transform to each short snippet of the recording to find which frequencies make it up. We then keep only the frequencies that correspond to the keys of the piano and discard the rest, so that the only remaining frequencies are those the piano can produce. This filtered set of frequencies represents which piano keys can be pressed to reproduce that snippet of audio. The filtered snippets are then sent to the note scheduler, which takes in the notes to play and outputs a schedule of when to play each key on the piano. The note scheduling module is important because it identifies whether a particular note’s frequency is present in consecutive samples, and so handles whether we need to keep a note pressed across several samples, re-press a note to reach a new, higher volume, or release a note so that its frequency dies out. Lastly, the filtered audio samples, now containing only piano frequencies, are stitched together and played back through the web app. The audio processing module also creates graphical representations of the original recording (time vs. amplitude) and the processed recording (time vs. filtered amplitude, representing the piano keys) and displays them to the user.
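The filtering step can be illustrated with a minimal sketch. It is not our actual implementation: to stay dependency-free it evaluates the DFT sum directly at each piano key's frequency instead of running a full FFT and averaging nearby bins. The sample rate, the function names `key_frequency` and `key_amplitudes`, and the standard 88-key equal-temperament tuning (key 49 = A4 = 440 Hz) are all assumptions made for illustration.

```python
import cmath
import math

SAMPLE_RATE = 8000  # Hz; assumed value for this sketch only


def key_frequency(key: int) -> float:
    """Frequency in Hz of piano key 1..88, assuming equal temperament
    with key 49 tuned to A4 = 440 Hz."""
    return 440.0 * 2 ** ((key - 49) / 12)


def key_amplitudes(samples, sample_rate=SAMPLE_RATE):
    """Return {key: amplitude} for one window of audio samples.

    Instead of a full FFT, this evaluates the DFT sum only at the
    frequencies the piano can produce, which discards everything else.
    """
    n = len(samples)
    amplitudes = {}
    for key in range(1, 89):
        freq = key_frequency(key)
        if freq >= sample_rate / 2:
            break  # above the Nyquist limit; cannot be measured
        acc = sum(
            s * cmath.exp(-2j * math.pi * freq * i / sample_rate)
            for i, s in enumerate(samples)
        )
        amplitudes[key] = 2 * abs(acc) / n
    return amplitudes


# Usage: a 0.1 s window of a pure 440 Hz tone should peak at key 49 (A4).
window = [math.sin(2 * math.pi * 440 * i / SAMPLE_RATE) for i in range(800)]
amps = key_amplitudes(window)
loudest_key = max(amps, key=amps.get)
```

Each window yields one dictionary of per-key amplitudes, which is the kind of input a note scheduler could consume frame by frame.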

Our goal with this demo is not only to show off a snippet of our project pipeline, but also to provide a framework for testing parameters and evaluating the results. Our project has many parameters, such as the time window for the FFT, the window of frequencies averaged for each note, and the amplitude difference for a frequency between consecutive samples that determines whether we re-press a note or keep it pressed down, to name a few. We hope that with this demo, we can fine-tune these parameters and listen to the output audio to judge whether each one is well tuned. The output playback from our demo is the sound we would hear if the piano could perfectly replicate the recording with its available frequencies, so using the demo to find a well-tuned set of parameters should help the piano recreate the sound as closely as possible.
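To make the role of these parameters concrete, here is a rough sketch of how the press, re-press, hold, and release decision could depend on them. The `Params` fields, their default values, and the `schedule` function are hypothetical names and numbers chosen for illustration, not tuned values or our final design.

```python
from dataclasses import dataclass


@dataclass
class Params:
    # All defaults below are placeholder assumptions, not tuned values.
    fft_window_s: float = 0.1       # length of each FFT window, in seconds
    averaging_band_hz: float = 5.0  # band averaged around each note
    press_threshold: float = 0.05   # minimum amplitude to count a note as present
    repress_delta: float = 0.2      # amplitude rise that triggers a re-press


def schedule(frames, params):
    """Turn per-window {key: amplitude} dicts into (frame, key, action) events.

    A note that stays present without a large amplitude rise is held,
    which produces no event.
    """
    events = []
    previous = {}
    for index, frame in enumerate(frames):
        current = {k: a for k, a in frame.items() if a >= params.press_threshold}
        for key, amp in sorted(current.items()):
            if key not in previous:
                events.append((index, key, "press"))
            elif amp - previous[key] >= params.repress_delta:
                events.append((index, key, "re-press"))
        for key in sorted(previous):
            if key not in current:
                events.append((index, key, "release"))
        previous = current
    return events


# Usage: key 49 is pressed, held, re-pressed louder, then released.
frames = [{49: 0.5}, {49: 0.55}, {49: 0.9}, {}]
events = schedule(frames, Params())
```

Changing `repress_delta` or `press_threshold` changes the event list, and listening to the resulting playback is how we expect to judge whether a given setting is reasonable.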

We have also placed an order through AliExpress for a batch of solenoids, which is set to arrive by the 11th of November. We will evaluate the effectiveness and quality of the solenoids from this order, and if they are good, we will order the rest of the solenoids we need.

In the coming weeks, we plan to define tests we can run with our demo program to tune our parameters. We will also plan how to test the incoming batch of solenoids and decide what counts as a successful test.