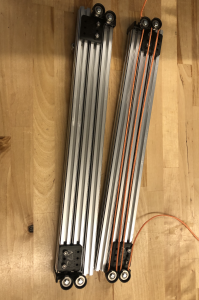

This week, I worked on mounting and motorizing the linear slides on the robot. Both slides were mounted mirroring one another on the chassis. The pulleys were strung with a string attached to the motor, which winds the string to pull the slides up. I found that the motor alone was insufficient for lowering the slides, so I attached surgical tubing between adjacent slides to add tension that pulls the slides down as the string unwinds.

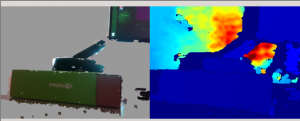

Overall, the slides were able to successfully extend and retract with a bar mounted between them, as shown in the following clip:

There are some slight issues with retracting fully, which I think are related to minor tension problems. Without the bar, each slide was able to retract on its own.

At one point in the week, one set of slides was completely stuck and required significant disassembly to free. To fix this and help prevent it from recurring, I applied lubricant grease to the slides.

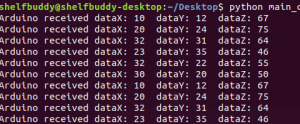

Finally, I also tested the claws this week and found one to have significantly better grip range and torque than the other. Using the servo code written a few weeks ago, I was able to get the claw to grab a small, light box. The claw was also mounted onto the end of the slides.
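For reference, here is a minimal sketch of the kind of mapping servo code like this typically relies on: converting a claw angle into a servo pulse width. This is not the actual code from a few weeks ago. The function names, the 500 to 2500 microsecond pulse range, the 180 degree travel, and the open/closed angles are all assumptions for illustration and would need to be matched to the real servo and claw.

```python
# Hypothetical sketch: map a claw angle to a servo pulse width.
# All constants below are assumed values, not measurements from the robot.

def angle_to_pulse_us(angle_deg, min_us=500.0, max_us=2500.0, max_angle=180.0):
    """Linearly map an angle in degrees to a pulse width in microseconds.

    Angles outside [0, max_angle] are clamped so the servo is never
    commanded past its assumed travel limits.
    """
    clamped = max(0.0, min(max_angle, angle_deg))
    return min_us + (max_us - min_us) * clamped / max_angle


# Assumed claw positions; the real values depend on how the claw is mounted.
CLAW_OPEN_DEG = 20.0
CLAW_CLOSED_DEG = 110.0


def claw_pulse_us(closed):
    """Return the pulse width for the open or closed claw position."""
    target = CLAW_CLOSED_DEG if closed else CLAW_OPEN_DEG
    return angle_to_pulse_us(target)
```

Under these assumptions, grabbing the box would mean sending the pulse from `claw_pulse_us(True)` to the servo, and releasing it would use `claw_pulse_us(False)`. Narrowing the gap between the two angles is one way to limit how hard the claw squeezes a light object.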