This week I continued to work with my team to improve the robot’s end-to-end process, tweaking some values to make it more consistent and improving its navigation. We primarily focused on the robot’s navigation back to the basket after it retrieves the object, and on depositing the item in the basket. The team’s progress is described in this week’s team status report. I also worked on the final presentation, which Ludi presented earlier this week.

Team Status Report for 11/20

This week our team worked on the navigation and retrieval process of the robot. After speaking with Tamal and Tao we reconsidered our robot’s sequence of steps, and worked on the code required for the robot’s navigation and retrieval. This week, we accomplished the following:

- We wrote code for our robot to rotate 360 degrees in search of the April Tag on the basket. Once the robot detects the tag on the basket, it rotates to be perpendicular to the basket and drives up to the basket, stopping about 1 foot in front of it.

- Next we wrote code for our robot to rotate and look for the April Tag on the shelf. Once the tag on the shelf is detected, the robot rotates so it is facing perpendicular to the shelf.

- Then, the robot moves around the basket, and drives up to the shelf, stopping about 1 foot from the shelf.

- Then the linear slides extend until they reach the maximum height, about three feet.

- Once the slides are extended, the robot searches for the laser pointer.
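The sequence above can be sketched as a small state machine. This is only an illustration of the ordering of the steps, not our actual robot code; the names `Step`, `next_step`, and `run` are hypothetical, and each step is assumed to report a simple success or failure flag.

```python
from enum import Enum, auto


class Step(Enum):
    """Steps of the sequence, in the order listed above (names are hypothetical)."""
    FIND_BASKET_TAG = auto()
    DRIVE_TO_BASKET = auto()
    FIND_SHELF_TAG = auto()
    DRIVE_TO_SHELF = auto()
    EXTEND_SLIDES = auto()
    SEARCH_FOR_LASER = auto()
    DONE = auto()


# Enum members iterate in definition order, which matches the list above.
SEQUENCE = list(Step)


def next_step(current: Step, succeeded: bool) -> Step:
    """Advance only when the current step succeeded; otherwise retry it."""
    if current is Step.DONE or not succeeded:
        return current
    return SEQUENCE[SEQUENCE.index(current) + 1]


def run(step_results):
    """Feed a series of success/failure flags and return the steps visited."""
    current = Step.FIND_BASKET_TAG
    visited = [current]
    for ok in step_results:
        current = next_step(current, ok)
        visited.append(current)
    return visited
```

Retrying a failed step in place (for example, rotating again when no tag is found) is one simple policy; a real controller would likely also need timeouts.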

We accomplished the above steps this week, and a video can be seen below:

Currently the robot is sometimes able to center itself on the item marked by the laser and drive towards it, but this step does not work every time. Next week we plan to improve the item detection step and work on grabbing the object from the shelf.

Bhumika Kapur’s Status Report for 11/20

This week I worked with my teammates on the navigation and retrieval process of the robot. We all worked together on those tasks, and our progress is detailed in the team status report. I also worked on improving the CV component of the project, as some errors occasionally occur in different lighting conditions, but I am hoping those will be resolved with more testing soon. Next week I will continue to work with my team on the robot and our final presentation.

Bhumika Kapur’s Status Report for 11/13

This week I worked on both the edge detection and April tag code.

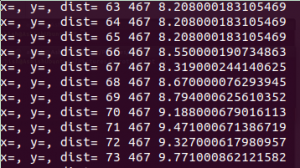

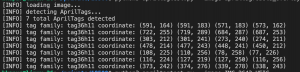

Firstly, I improved the April tag detection so the algorithm is able to detect an April tag from the camera’s stream, and return the center and coordinates of the tag along with the pose matrix, which allows us to calculate the distance to the tag and the angle. The results of this are shown below:

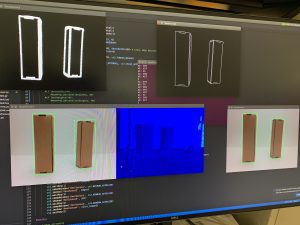
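As a rough illustration of how the distance and angle can be computed from the pose, here is a minimal sketch. It assumes the pose is a 4x4 homogeneous transform of the tag in the camera frame, with the translation in the last column and the camera's x axis pointing right and z axis pointing forward; the function name `distance_and_bearing` is hypothetical and the exact layout depends on the library used.

```python
import math


def distance_and_bearing(pose):
    """Return (distance, bearing in degrees) to the tag from a 4x4 pose matrix.

    Assumes pose[0][3], pose[1][3], pose[2][3] hold the tag's x, y, z position
    in the camera frame (x right, y down, z forward).
    """
    tx, ty, tz = pose[0][3], pose[1][3], pose[2][3]
    # Straight-line distance from the camera to the tag center.
    distance = math.sqrt(tx * tx + ty * ty + tz * tz)
    # Horizontal angle the robot would need to turn to face the tag;
    # positive means the tag is to the right of the camera's forward axis.
    bearing_deg = math.degrees(math.atan2(tx, tz))
    return distance, bearing_deg


# Example: a tag 1 m to the right and 1 m ahead, with no rotation.
example_pose = [
    [1.0, 0.0, 0.0, 1.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 0.0, 1.0],
]
distance, bearing = distance_and_bearing(example_pose)
```

The distance comes out in whatever unit the tag size was given in when the pose was estimated.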

Second, I worked on improving the edge detection code, to get a bounding box around the different boxes visible in the camera’s stream. The bounding box also allows us to get the exact location of the box, which we will later use to actually retrieve the object. The results of this are shown below:
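To illustrate the idea of going from an edge image to bounding boxes, here is a dependency-free sketch. It is not the code I used: it stands in for a library contour finder by grouping touching edge pixels with a flood fill and taking the extent of each group. The function name `bounding_boxes` and the input format (rows of 0/1 values) are assumptions for the example.

```python
from collections import deque


def bounding_boxes(edges):
    """Return (x_min, y_min, x_max, y_max) for each 8-connected group of edge pixels.

    `edges` is a binary edge image given as a list of rows of 0/1 values.
    """
    height = len(edges)
    width = len(edges[0]) if height else 0
    seen = [[False] * width for _ in range(height)]
    boxes = []
    for y in range(height):
        for x in range(width):
            if not edges[y][x] or seen[y][x]:
                continue
            # Breadth-first flood fill over this group of connected edge pixels.
            queue = deque([(x, y)])
            seen[y][x] = True
            x_min = x_max = x
            y_min = y_max = y
            while queue:
                cx, cy = queue.popleft()
                x_min, x_max = min(x_min, cx), max(x_max, cx)
                y_min, y_max = min(y_min, cy), max(y_max, cy)
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        nx, ny = cx + dx, cy + dy
                        inside = 0 <= nx < width and 0 <= ny < height
                        if inside and edges[ny][nx] and not seen[ny][nx]:
                            seen[ny][nx] = True
                            queue.append((nx, ny))
            boxes.append((x_min, y_min, x_max, y_max))
    return boxes
```

The center of a box, `((x_min + x_max) / 2, (y_min + y_max) / 2)`, gives a pixel location that can be combined with depth data to locate the item.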

Finally, I worked with my team on the navigation of the robot. By combining our individual components our robot can now travel to the exact location of the April tag which marks the shelf. The robot is also able to drive up to the exact location of the item which has the laser point on it, and center itself to the object. Over the next week I plan to continue working with my team to finish up the final steps of our implementation.

Bhumika Kapur’s Status Report for 11/6

This week I worked on all three components of my part of the project: the laser detection algorithm, the April tag detection, and the edge detection.

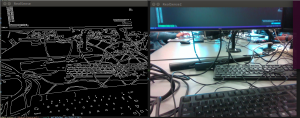

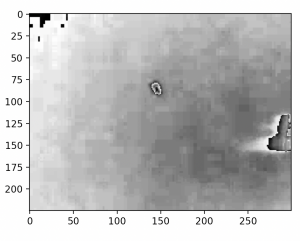

For the edge detection, I was able to implement edge detection directly from the Intel RealSense’s stream, with very low latency. The edge detection also appears to be fairly accurate and is able to recognize most of the edges I tested it on. The results are shown below:

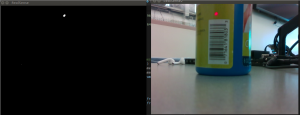

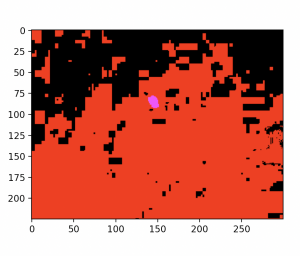

Next I worked on the laser detection. I improved my algorithm so that it would result in an image with a white dot at the location of the laser if it is in the frame, and black everywhere else. I did this by thresholding for pixels that are red and above a certain brightness. Currently this algorithm works decently well but is not 100% accurate in some lighting conditions. The algorithm is also fairly fast and I was able to apply it to each frame from my stream. The results are shown below:
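The thresholding step described above can be sketched roughly as follows. This is a simplified, dependency-free illustration rather than my actual code: the function names and the threshold values `red_margin` and `min_brightness` are made up for the example and would need tuning for real lighting conditions.

```python
def laser_mask(image, red_margin=60, min_brightness=200):
    """Return a mask that is 255 where a pixel looks like the laser, else 0.

    `image` is a list of rows of (r, g, b) tuples with values in 0-255.
    The thresholds are illustrative assumptions, not tuned values.
    """
    mask = []
    for row in image:
        mask_row = []
        for r, g, b in row:
            is_red = r - max(g, b) >= red_margin   # red clearly dominates
            is_bright = r >= min_brightness        # and is above a brightness floor
            mask_row.append(255 if is_red and is_bright else 0)
        mask.append(mask_row)
    return mask


def laser_center(mask):
    """Return the (x, y) centroid of the white pixels, or None if there are none."""
    points = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not points:
        return None
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)
```

Returning `None` when no pixel passes the threshold lets the caller distinguish "laser not in frame" from a detection at the image origin.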

Finally, I worked on the April Tag detection code. I decided to use a different April Tag Python library than the one I was previously using, as this new library returns more specific information such as the homography matrix. I am a bit unsure how I can use this information to calculate the exact 3D position of the tag, but I plan to look into this more in the next few days. The results are below:

During the upcoming week I plan to improve the April Tag detection so I can get the exact 3D location of the tag, and also work on integrating these algorithms with the navigation of the robot.

Bhumika Kapur’s Status Report for 10/30

This week I was able to start working on the camera and Jetson Xavier setup. We got the Xavier set up earlier in the week, and I worked on getting the Intel RealSense Viewer set up on the Xavier. Once that was set up, I worked on downloading the Python libraries needed to use the Intel RealSense data on the Xavier. That took me some time, as I ran into many errors with the download, but the library is now set up.

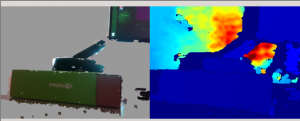

Once the viewer and the library were set up, I began working on actually using the camera’s stream. I ran many of the example scripts given on the Intel RealSense website. Two of the more useful scripts that I was able to run were a script for detecting the depth of an object in the stream, and a script for using the depth image to remove the background of an image. The results are shown below:

The first image shows the result of running the depth script, which returns the depth of a pixel in meters. The x, y, and depth information is printed out. The second image shows the result of using depth information to remove the background and focus on a specific object.
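The background-removal idea can be illustrated with a small sketch. This is not the Intel example script itself, just a dependency-free version of the same idea: keep a color pixel only when its depth is valid and within a cutoff. The function name, the 1 meter cutoff, and the grey fill color are assumptions for the example, as is treating a depth of 0 as "no reading".

```python
def remove_background(color, depth_m, max_distance_m=1.0, fill=(153, 153, 153)):
    """Replace pixels farther than `max_distance_m` (or with no depth) by `fill`.

    `color` is a list of rows of (r, g, b) tuples and `depth_m` is a list of
    rows of depths in meters, aligned pixel-for-pixel with `color`.
    """
    result = []
    for color_row, depth_row in zip(color, depth_m):
        out_row = []
        for pixel, depth in zip(color_row, depth_row):
            # A depth of 0 is assumed to mean the sensor had no valid reading.
            keep = 0 < depth <= max_distance_m
            out_row.append(pixel if keep else fill)
        result.append(out_row)
    return result
```

This only works if the depth and color images are aligned to the same viewpoint, so that each depth value refers to the same pixel in the color image.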

Next week I plan to use these scripts in conjunction with the edge and laser detection algorithms I have written, so that the robot can use that information to navigate.

Team Status Report for 10/30

This week we received all of the parts that we ordered, and began building our robot. We set up the Jetson Xavier, and Bhumika has been using it to work on the computer vision aspect of the project. Ludi was able to assemble most of the chassis. Esther built the linear slides, and both Ludi and Esther are working on getting the motor shields to work. Next week we will continue to work on assembling the robot and integrating the different components, such as the chassis and the linear slides. Overall we are satisfied with the progress we have made so far.

Bhumika Kapur’s Status Report for 10/23

This week I worked on two things. First, I worked on getting our camera, the Intel RealSense, set up. We were able to obtain a new camera with its cable and get it somewhat working on one of our team members’ laptops, and it also worked well on our TA Tao’s laptop. The next step is to connect the camera to the Jetson Xavier and get it set up there.

I also worked on the laser detection algorithm. I tried a few different methods that I found online with varying results. The first algorithm that I tried only used the RGB values of the image, and manipulated these values to calculate the degree of red compared with the other pixel values. The results are shown below, where the laser is the circular spot:

The next algorithm I tried used the HSV values of the image: it thresholds each channel, then ANDs the resulting masks to find the laser in the image. This method seemed to work, but it needs more testing to determine the best threshold values. The results are shown below, with the laser in pink:

Overall both algorithms were able to detect the laser in a simple example, but I will need to perform more testing to determine which algorithm is preferred when shining the laser on an object. Next week, I plan to work on setting up the camera and improving on this algorithm.
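For reference, the HSV approach described above can be sketched as follows. This is a simplified illustration using only the Python standard library (`colorsys`), not the code I tested; the function name and the threshold values are assumptions, and they are exactly the values that would need tuning.

```python
import colorsys


def hsv_laser_mask(image, hue_window=0.05, min_saturation=0.5, min_value=0.8):
    """Return a boolean mask that is True where a pixel passes all HSV thresholds.

    `image` is a list of rows of (r, g, b) tuples with values in 0-255.
    colorsys works on floats in [0, 1], so hue is also in [0, 1] here.
    """
    mask = []
    for row in image:
        mask_row = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            # Red sits at both ends of the hue circle, so accept either end.
            hue_ok = h <= hue_window or h >= 1 - hue_window
            sat_ok = s >= min_saturation
            val_ok = v >= min_value
            # AND the three per-channel thresholds together.
            mask_row.append(hue_ok and sat_ok and val_ok)
        mask.append(mask_row)
    return mask
```

Compared with thresholding raw RGB values, separating hue from brightness should make it easier to adjust for lighting by changing only `min_value`, although that still needs to be confirmed by testing.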

Bhumika Kapur’s Status Report for 10/9

This week I worked on a few tasks. First, I worked with my teammates to complete the design presentation. We had to reevaluate some of our requirements and solution approaches for the presentation. We also discussed our solution approach in detail and researched how each component would connect to other components.

I also received the Intel RealSense camera from Tao this week, and spent some time trying to set it up. I was able to connect it to my laptop and access it through a VM. The next step is to set up the camera in the Intel RealSense Viewer. I have been attempting to do this, but the viewer crashes whenever I open it, so I will need to debug that this week.

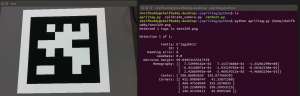

I also worked on the April tag detection code. As I mentioned last week, the April tag code is originally written in Java/C, so I followed some online tutorials in Python, which I am most familiar with, to get the software set up. Currently I am able to draw a bounding box around the April tag, detect the specific family the tag is from, and output the exact coordinates of the tag in the image. My next step is to combine this with the data from the camera to output the exact location of the April tag so the robot can navigate to it. I hope to do that next week.

Example image/output:

Bhumika Kapur’s Status Report for 10/2

This week I continued my research into the computer vision component of our project. After speaking with Tao this week, our team decided to use the Jetson Xavier NX rather than the Jetson Nano, because the Xavier NX has 8GB of memory compared to the 2GB of memory in the Jetson Nano. So, I researched cameras that would be compatible with this new component. I looked into the camera in the ECE inventory list that is made specifically for the Xavier NX, but it has a very low FOV (only 75 degrees). So, I did more research into the SainSmart IMX219, which seems to be compatible with the Xavier NX as well and has a higher FOV (160 degrees), so we will most likely go ahead with this camera.

I also talked about depth sensing with Tao, and he suggested that we look into stereo cameras if we wanted a camera with depth sensing capabilities. Unfortunately, many of the stereo cameras compatible with the Xavier NX are quite expensive or sold out. So, I looked into the idea of using a distance sensor, such as an ultrasonic sensor from Adafruit, along with a camera to properly navigate the robot.

This week I also looked into the April tag detection software, which is originally written in C and Java, but I found a Python tutorial that I followed. I don’t have the software fully operating yet, but I hope that it will be functional by next week. I also worked on the design presentation with my team, and we discussed our progress and project updates.

Next week I plan to continue working on the April tag detection code, and try to set up the camera from the ECE inventory with the Jetson Xavier NX.