This week we finished the mechanical assembly of the robot and are preparing for the interim demo next week. Bhumika has mainly worked on computer vision, and edge detection, laser detection, and AprilTag detection are all working reasonably well. The linear slide system is motorized and can extend and retract successfully. The claw has been fully tested and can grab objects within its supported dimensions. The robot's navigation code is still being implemented; currently, the four motors can receive commands from the Jetson Xavier and spin in the commanded direction. We will continue to fine-tune our individual areas of focus and start working on integration later in the week.

Bhumika Kapur’s Status Report for 11/6

This week I worked on all three components of my part of the project: the laser detection algorithm, the AprilTag detection, and the edge detection.

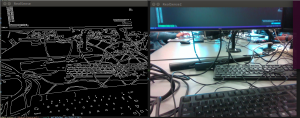

For the edge detection, I implemented the algorithm directly on the Intel RealSense’s stream, with very low latency. It also appears to be fairly accurate and recognized most of the edges I tested it on. The results are shown below:

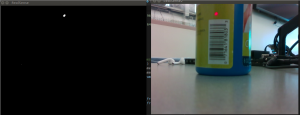
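As a rough illustration of per-frame edge detection, here is a minimal sketch using a Sobel gradient threshold in NumPy. This is an assumption for illustration only: the post does not say which edge detector was used, and the function name `sobel_edges` and the `threshold` value are hypothetical. Grabbing frames from the RealSense is omitted; the function simply takes a grayscale frame as an array.

```python
import numpy as np

def sobel_edges(gray, threshold=100.0):
    """Return a binary edge map (255 = edge) for a 2D grayscale frame.

    Hypothetical helper: a plain Sobel gradient-magnitude threshold,
    standing in for whichever edge detector is actually used.
    """
    g = gray.astype(np.float64)
    p = np.pad(g, 1, mode="edge")  # replicate borders so output keeps the input shape

    # Horizontal gradient: right column minus left column (weights 1, 2, 1)
    gx = (p[:-2, 2:] + 2 * p[1:-1, 2:] + p[2:, 2:]) \
       - (p[:-2, :-2] + 2 * p[1:-1, :-2] + p[2:, :-2])
    # Vertical gradient: bottom row minus top row (weights 1, 2, 1)
    gy = (p[2:, :-2] + 2 * p[2:, 1:-1] + p[2:, 2:]) \
       - (p[:-2, :-2] + 2 * p[:-2, 1:-1] + p[:-2, 2:])

    magnitude = np.hypot(gx, gy)
    return np.where(magnitude >= threshold, 255, 0).astype(np.uint8)

# Synthetic test frame: dark left half, bright right half
frame = np.zeros((8, 8), dtype=np.uint8)
frame[:, 4:] = 255
edges = sobel_edges(frame)
```

Because the function is a handful of array operations per frame, it is cheap enough to run on every frame of a stream, although the threshold would need tuning on real images.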

Next, I worked on the laser detection. I improved my algorithm so that it outputs an image with a white dot at the location of the laser, if it is in the frame, and black everywhere else. I did this by thresholding for pixels that are red and above a certain brightness. Currently the algorithm works reasonably well, but it is not fully reliable in some lighting conditions. It is also fairly fast, and I was able to apply it to every frame from my stream. The results are shown below:

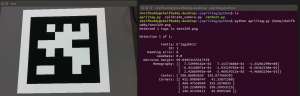
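The thresholding described above could look roughly like the following sketch. The function names and the threshold values (`min_red`, `red_margin`) are assumptions chosen for illustration, not the values used in the project, and the frame is assumed to be an RGB `uint8` array.

```python
import numpy as np

def laser_mask(frame_rgb, min_red=200, red_margin=60):
    """Return a mask that is 255 where a pixel is bright and red, else 0.

    Hypothetical helper: min_red is the brightness cutoff on the red
    channel, red_margin is how much red must exceed green and blue.
    """
    rgb = frame_rgb.astype(np.int16)  # avoid uint8 wrap-around when subtracting
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    is_bright = r >= min_red
    is_red = (r - g >= red_margin) & (r - b >= red_margin)
    return np.where(is_bright & is_red, 255, 0).astype(np.uint8)

def laser_centroid(mask):
    """Return the (x, y) centre of the white pixels, or None if there are none."""
    ys, xs = np.nonzero(mask)
    if xs.size == 0:
        return None
    return float(xs.mean()), float(ys.mean())

# Synthetic frame: black background, one white patch, one red "laser" patch
frame = np.zeros((48, 64, 3), dtype=np.uint8)
frame[30:32, 40:42] = (255, 255, 255)  # bright but not red, should be ignored
frame[10:12, 20:22] = (255, 30, 30)    # bright and red
mask = laser_mask(frame)
centre = laser_centroid(mask)
```

A fixed threshold like this is sensitive to ambient light and camera exposure, which may be one reason detection is less reliable in some lighting conditions.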

Finally, I worked on the AprilTag detection code. I decided to switch to a different AprilTag Python library from the one I was previously using, because the new library returns more specific information, such as the homography matrix. I am still unsure how to use this information to calculate the exact 3D position of the tag, but I plan to look into this over the next few days. The results are below:

During the upcoming week, I plan to improve the AprilTag detection so I can get the exact 3D location of the tag, and also work on integrating these algorithms with the robot's navigation.
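One possible way to get from the homography matrix to a 3D position is the standard planar decomposition H ∝ K [r1 r2 t], sketched below. It rests on several assumptions that would need checking against the library actually used: that the homography maps tag-plane points with the tag corners at (±1, ±1) to pixel coordinates, that the camera intrinsic matrix `K` is known (for example from the RealSense's reported focal lengths and principal point), and that the physical tag size is known. The function name `pose_from_homography` is hypothetical.

```python
import numpy as np

def pose_from_homography(H, K, tag_size):
    """Estimate the tag's rotation R and translation t in the camera frame.

    Assumptions (not confirmed by the post): H maps tag-plane points
    (x, y, 1), with corners at (+-1, +-1), to pixels; K is the 3x3
    intrinsic matrix; tag_size is the tag's side length in metres.
    """
    M = np.linalg.inv(K) @ H
    # Columns of M are proportional to r1, r2, t; remove the unknown scale
    # by forcing the first two columns to (on average) unit length.
    scale = 2.0 / (np.linalg.norm(M[:, 0]) + np.linalg.norm(M[:, 1]))
    M = M * scale
    if M[2, 2] < 0:  # the tag must lie in front of the camera (t_z > 0)
        M = -M
    r1, r2, t = M[:, 0], M[:, 1], M[:, 2]

    # Complete the rotation and project it onto the nearest orthonormal matrix
    R_approx = np.column_stack((r1, r2, np.cross(r1, r2)))
    U, _, Vt = np.linalg.svd(R_approx)
    R = U @ Vt

    # One tag-plane unit equals half the tag's side length
    return R, t * (tag_size / 2.0)

# Synthetic check: build H from a known pose, then recover that pose
K = np.array([[600.0, 0.0, 320.0],
              [0.0, 600.0, 240.0],
              [0.0, 0.0, 1.0]])
angle = 0.3  # rotation about the camera's y axis, in radians
R_true = np.array([[np.cos(angle), 0.0, np.sin(angle)],
                   [0.0, 1.0, 0.0],
                   [-np.sin(angle), 0.0, np.cos(angle)]])
t_true = np.array([0.1, -0.05, 1.0])  # metres
half = 0.16 / 2.0
H = -2.5 * K @ np.column_stack((half * R_true[:, 0], half * R_true[:, 1], t_true))
R_est, t_est = pose_from_homography(H, K, tag_size=0.16)
```

With noisy detections the recovered pose will only be approximate. Some AprilTag bindings can also estimate the pose directly when given the intrinsics and tag size, so it is worth checking whether the chosen library offers that.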