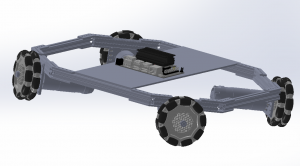

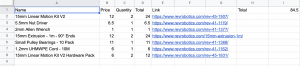

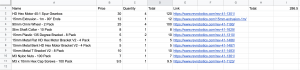

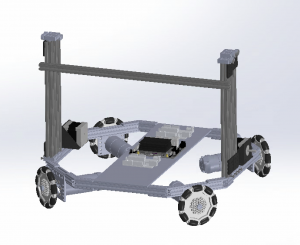

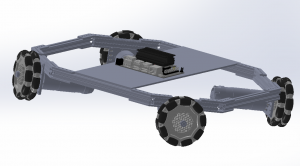

At the beginning of the week, I had an extensive discussion with my team to finalize the specific hardware we need to order and the associated bill of materials. Since budget is a concern, I slightly modified the base of the robot chassis to make it smaller and more compact.

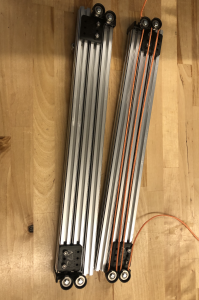

The base is now roughly 35 cm × 35 cm: the straight extrusions on the sides are 20 cm long, and the diagonal extrusions connecting to the wheels are 12 cm long. Reverting to a smaller size saves a lot of material cost and also makes the linear shaft system steadier. Since our robot is nearly 1 m tall and our claw system stretches forward to reach objects weighing up to 1.5 lbs, I was initially concerned that the robot might tip forward when reaching for a heavy object. However, applying the lever rule to the relative distances and weights of the components, the moment (mass times distance) on the robot side is roughly 6 times the moment on the object side. Therefore, the counterweight from my initial prototype is no longer needed.
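The lever-rule check above can be sketched in a few lines of Python. Only the 1.5 lb payload comes from our design; the robot mass, the distance from the center of mass to the front tipping axis, and the claw reach are placeholder values I made up for illustration, not our measured numbers. The function name `moment_ratio` is also just a name chosen for this sketch.

```python
LB_TO_KG = 0.45359237  # exact pound-to-kilogram conversion


def moment_ratio(robot_mass_kg, robot_com_to_pivot_m,
                 payload_mass_kg, payload_to_pivot_m):
    """Ratio of the restoring moment to the tipping moment about the front axis.

    Gravity appears in both moments, so it cancels out of the ratio.
    A ratio above 1 means the robot stays upright in this static model.
    """
    restoring = robot_mass_kg * robot_com_to_pivot_m
    tipping = payload_mass_kg * payload_to_pivot_m
    return restoring / tipping


# Payload from the design: 1.5 lb at most.
payload_kg = 1.5 * LB_TO_KG

# ASSUMED placeholder values, for illustration only:
robot_mass_kg = 8.0          # total robot mass
robot_com_to_pivot_m = 0.15  # center of mass behind the front wheel contact line
payload_to_pivot_m = 0.30    # object held ahead of the front wheel contact line

ratio = moment_ratio(robot_mass_kg, robot_com_to_pivot_m,
                     payload_kg, payload_to_pivot_m)
print(f"restoring / tipping moment ratio: {ratio:.2f}")
```

This is a static check only; it ignores acceleration and braking, which would shift the effective load while the robot is moving.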

I also ran some other calculations to confirm that our system will meet our technical requirements. We aim to have the robot move at a speed of 0.5 m/s when not performing other computations. Based on our motor specs (150 rpm) and a wheel diameter of 90 mm, the equations ω = 2πf and v = rω give an ideal speed of about 0.707 m/s. However, our robot has considerable weight, so after a rough 20% deduction it can still travel at about 0.565 m/s. Hence, I expect our robot to just meet the requirement, and it will be interesting to verify this experimentally. I also calculated the power our system will consume and found that our battery can last around 3.5 hours per charge with the robot operating at full capacity. This confirms that our robot is efficient enough to be used multiple times on a single charge.
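The speed estimate can be reproduced with a short Python sketch. The 150 rpm, 90 mm, 20%, and 0.5 m/s figures are from our specs and requirements; the function names are just ones I picked for this example, and the 20% loss is a rough guess rather than a measured value.

```python
import math


def ideal_speed_m_per_s(rpm, wheel_diameter_m):
    """No-load linear speed from v = r * omega, with omega = 2 * pi * f."""
    rev_per_s = rpm / 60.0               # f, in revolutions per second
    omega = 2.0 * math.pi * rev_per_s    # angular speed in rad/s
    return (wheel_diameter_m / 2.0) * omega


def derated_speed_m_per_s(ideal, loss_fraction):
    """Apply a rough fractional loss to account for the robot's weight."""
    return ideal * (1.0 - loss_fraction)


ideal = ideal_speed_m_per_s(rpm=150, wheel_diameter_m=0.090)
loaded = derated_speed_m_per_s(ideal, loss_fraction=0.20)
target = 0.5  # required speed in m/s

print(f"ideal speed:   {ideal:.3f} m/s")
print(f"derated speed: {loaded:.3f} m/s")
print(f"meets {target} m/s target: {loaded >= target}")
```

The margin over the target is small, so the real loss under load is the number to measure once the drivetrain is assembled.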

I am on schedule, although I didn’t find much time to explore the software side of the drivetrain, and we are a bit behind on ordering the materials. Next week our team will focus on the design report, and we will send the materials order next Tuesday, hopefully with some parts delivered soon after.