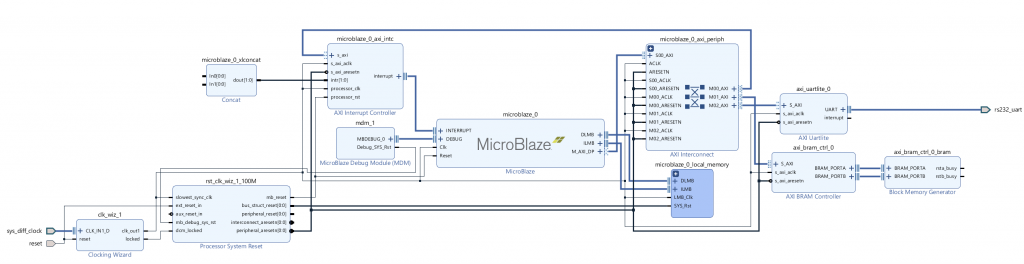

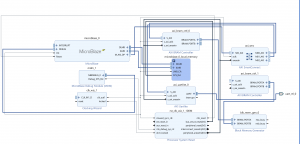

I worked on several different things this week. Between the last status report and the demo, I worked with Vishal on the interface and the communication between the FPGA and the computer, and we had it completely integrated in time to present it at our demo.

Last week, I mentioned that the arrival time of the various pixel locations was higher than our target requirement of 1.5 s. I raised this issue with the other team members, and we determined that we could still provide appropriate feedback to a user for a particular exercise if we passed an additional byte at the start that indicates the type of exercise being performed. This byte is located at the bottom of the stack, after the erosion output depicted in last week’s status report.

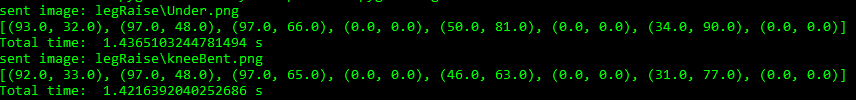

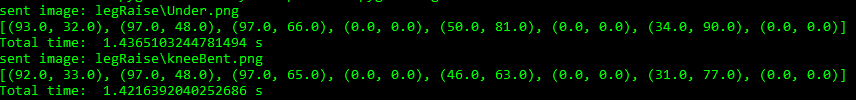

This allows the FPGA to process only the locations of the joints used in the selected exercise, skip the processing of the other joints, and simply output 0s for them, as in the example below.
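To make the idea concrete, here is a minimal host-side sketch in Python of the exercise byte and the joint skipping described above. It is only a software model, not our FPGA logic: the joint names, the exercise codes, and the helpers `build_frame` and `mask_joints` are all hypothetical and chosen purely for illustration.

```python
from enum import IntEnum

# Hypothetical joint names; the real project may track a different set.
JOINTS = ["shoulder", "elbow", "wrist", "hip", "knee", "ankle"]


class Exercise(IntEnum):
    # Hypothetical one-byte exercise codes (assumed values).
    LEG_RAISE = 0
    LUNGE = 1
    PUSH_UP = 2


# Assumed mapping from exercise to the joints its feedback needs.
REQUIRED_JOINTS = {
    Exercise.LEG_RAISE: {"hip", "knee", "ankle"},
    Exercise.LUNGE: {"hip", "knee", "ankle"},
    Exercise.PUSH_UP: {"shoulder", "elbow", "wrist"},
}


def build_frame(exercise, image_bytes):
    """Prepend the one-byte exercise code to the image payload."""
    return bytes([int(exercise)]) + image_bytes


def mask_joints(exercise, locations):
    """Model of the skipping step: keep required joints, output 0s for the rest."""
    needed = REQUIRED_JOINTS[exercise]
    return {j: (locations[j] if j in needed else (0, 0)) for j in JOINTS}
```

With these assumed codes, `build_frame(Exercise.PUSH_UP, payload)` puts `0x02` ahead of the payload, and `mask_joints` returns `(0, 0)` for every joint the exercise does not use.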

By doing this, we reduced the arrival time to less than 1.5 s, so the feedback after taking an image now arrives within our target requirement.

Note: The timer begins before opening the image and ends after it receives the appropriate feedback from the posture analysis functions.
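The measurement described in the note can be sketched roughly as follows. This assumes the timing is done on the computer side in Python; `open_image` and `get_feedback` are hypothetical stand-ins for our image loading and posture analysis functions, and `budget_s` is just the 1.5 s target expressed as a parameter.

```python
import time


def timed_feedback(open_image, get_feedback, path, budget_s=1.5):
    """Time the span from just before opening the image to receiving feedback.

    open_image and get_feedback are placeholders (assumptions), not the
    project's actual function names.
    """
    start = time.perf_counter()      # timer begins before opening the image
    image = open_image(path)
    feedback = get_feedback(image)   # timer ends once feedback is received
    elapsed = time.perf_counter() - start
    return feedback, elapsed, elapsed <= budget_s
```

Using `time.perf_counter()` rather than wall-clock time keeps the measurement monotonic, which matters when the interval being checked is on the order of a second.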

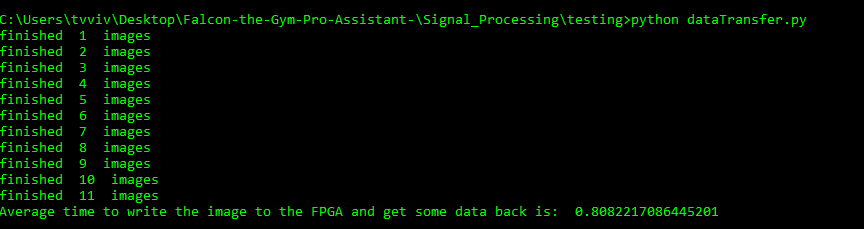

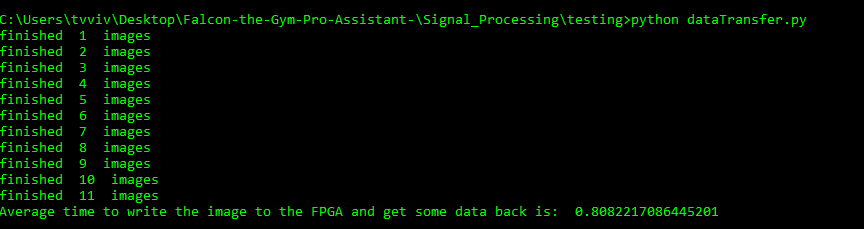

I also began testing the various portions of the code. I verified that the time it takes to send the image via UART and receive some information back is less than our target of 1 s.

In terms of the schedule, I am on track. Since I do not have any further optimizations to make to the code and the other portions of the project are not fully refined, I plan on spending a large portion of the remaining time on data collection, serving as the model and ensuring that the various portions have enough data to be fully refined.