What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress.

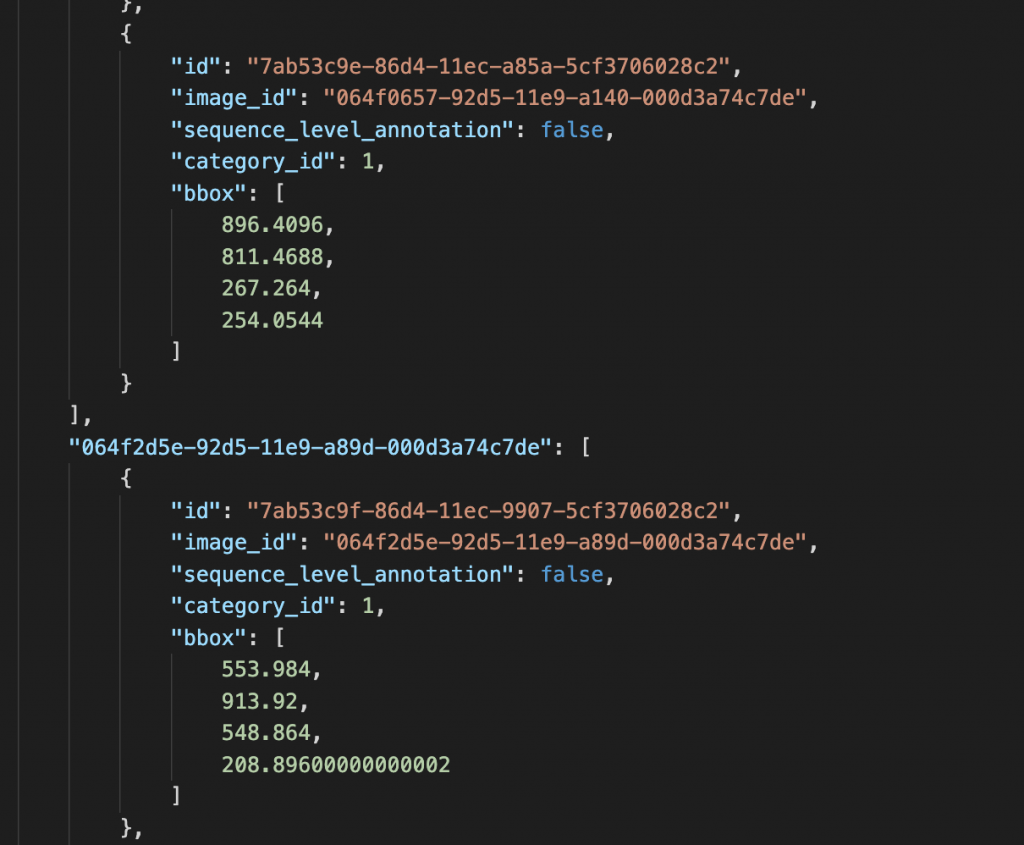

I made major changes to the detection algorithm this week. I now understand the error with the bounding boxes, which was a major step in the right direction.

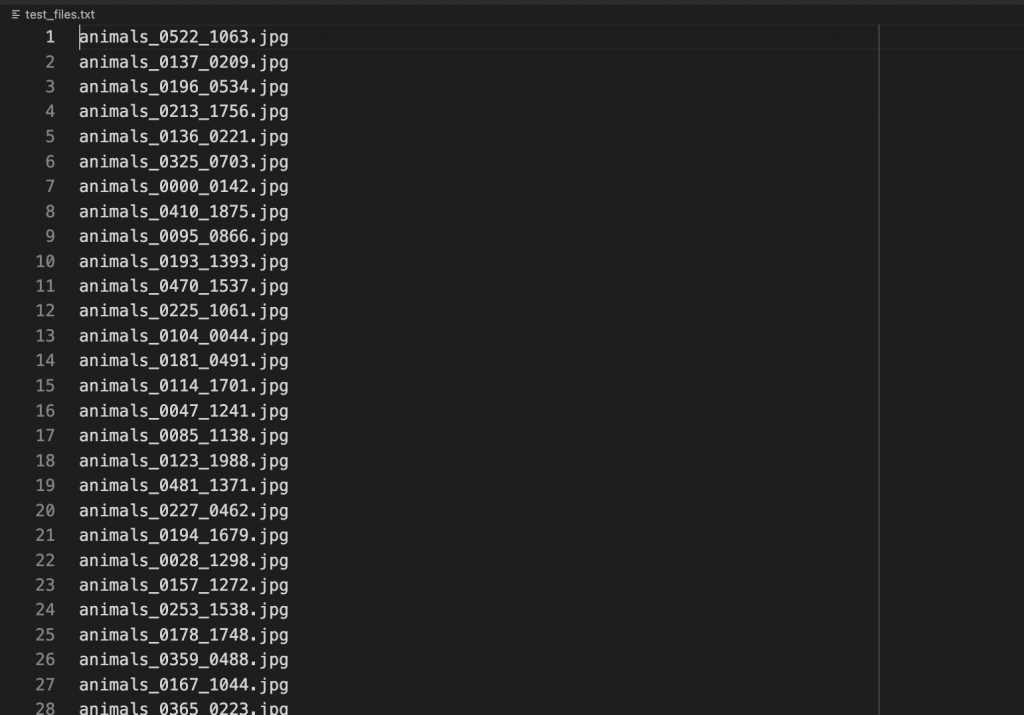

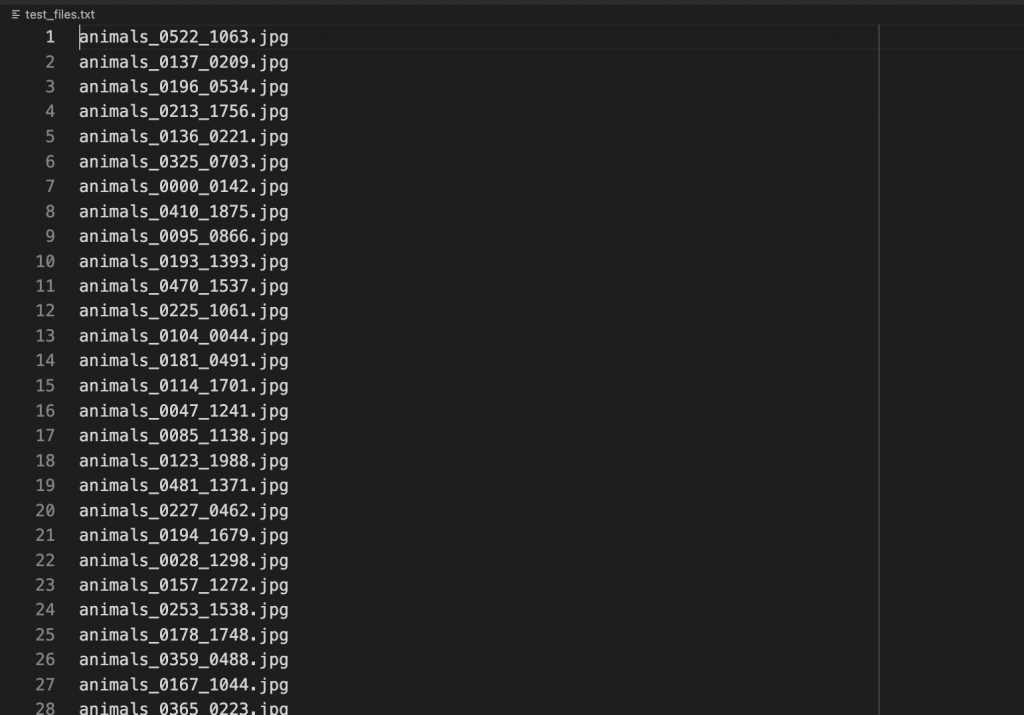

While fixing this, I ran into problems with file naming caused by differences between Linux and macOS. Fixing the names should have been trivial but did not work as expected. I sought help with the issue, then decided to write a script that renames the downloaded dataset files to avoid such errors in the future.
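A minimal sketch of such a renaming script is shown here. It assumes the mismatches come from letter case, whitespace, and Unicode normalization, which are common Linux/macOS differences; the actual cause is not stated above. The function names `normalize_name` and `rename_dataset` are hypothetical.

```python
import os
import re
import unicodedata


def normalize_name(name: str) -> str:
    """Return a filename that should behave the same on Linux and macOS."""
    stem, ext = os.path.splitext(name)
    # Assumption: macOS may hand back decomposed Unicode (NFD); compose it.
    stem = unicodedata.normalize("NFC", stem)
    # Replace whitespace and other unusual characters with underscores.
    stem = re.sub(r"[^\w.-]+", "_", stem).strip("_")
    # Lowercase everything so case-sensitive and case-insensitive
    # filesystems agree on the name.
    return stem.lower() + ext.lower()


def rename_dataset(root: str) -> int:
    """Rename every file under root in place; return how many were renamed."""
    renamed = 0
    for folder, _dirs, files in os.walk(root):
        existing = set(files)
        for old in files:
            new = normalize_name(old)
            if new == old:
                continue
            if new in existing:
                # Two different files would collapse to the same name.
                raise FileExistsError(f"{os.path.join(folder, new)} already exists")
            os.rename(os.path.join(folder, old), os.path.join(folder, new))
            existing.discard(old)
            existing.add(new)
            renamed += 1
    return renamed
```

Running `rename_dataset` once on the downloaded dataset folder, before any annotation files are generated, keeps image names and label names in sync.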

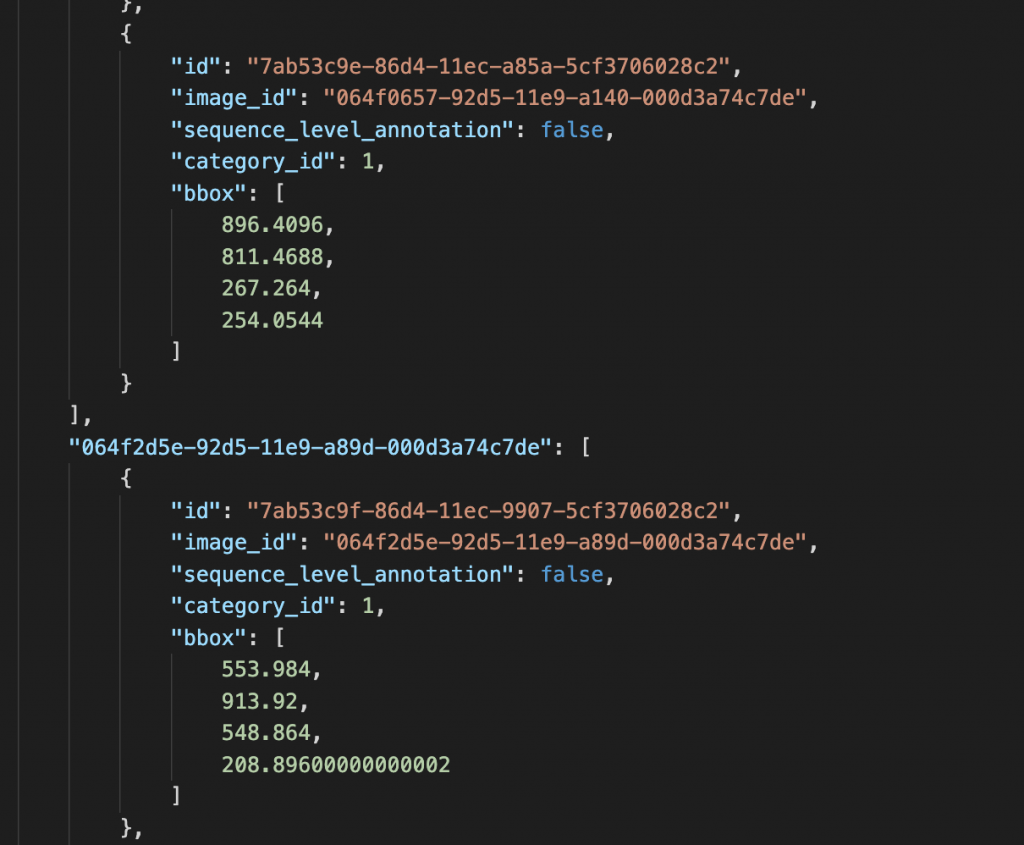

I re-partitioned the dataset correctly and edited the representation of JSON files and the annotations for the dataset.
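As an illustration of the re-partitioning step, a reproducible train/validation/test split can be sketched as follows. The 80/10/10 ratio, the fixed seed, and the function name `split_dataset` are assumptions made for the example, not the values used in the project.

```python
import random


def split_dataset(names, val_frac=0.1, test_frac=0.1, seed=0):
    """Split file names into disjoint train/val/test lists."""
    # Sort first so the result does not depend on directory listing order,
    # which can differ between operating systems.
    names = sorted(names)
    random.Random(seed).shuffle(names)
    n_val = int(len(names) * val_frac)
    n_test = int(len(names) * test_frac)
    return {
        "val": names[:n_val],
        "test": names[n_val:n_val + n_test],
        "train": names[n_val + n_test:],
    }
```

Fixing the seed means the same images land in the same partition on every machine, so annotations generated on one system stay valid on another.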

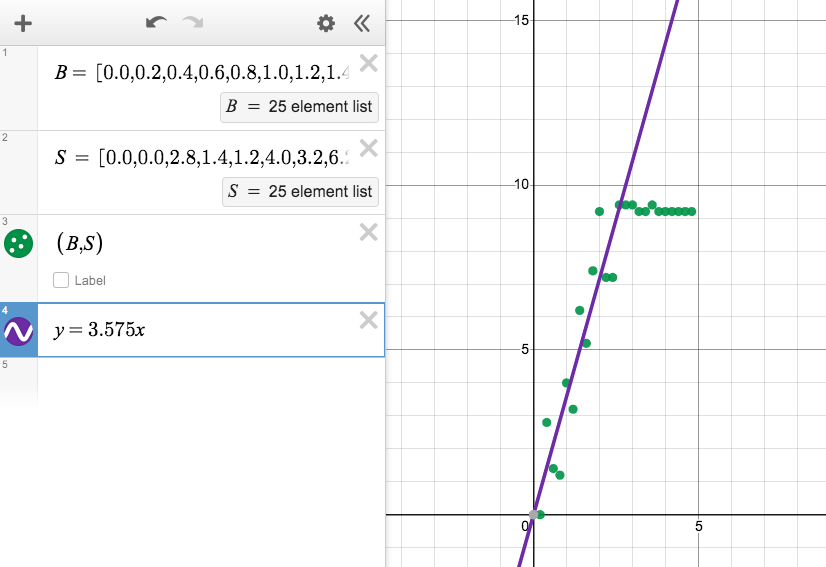

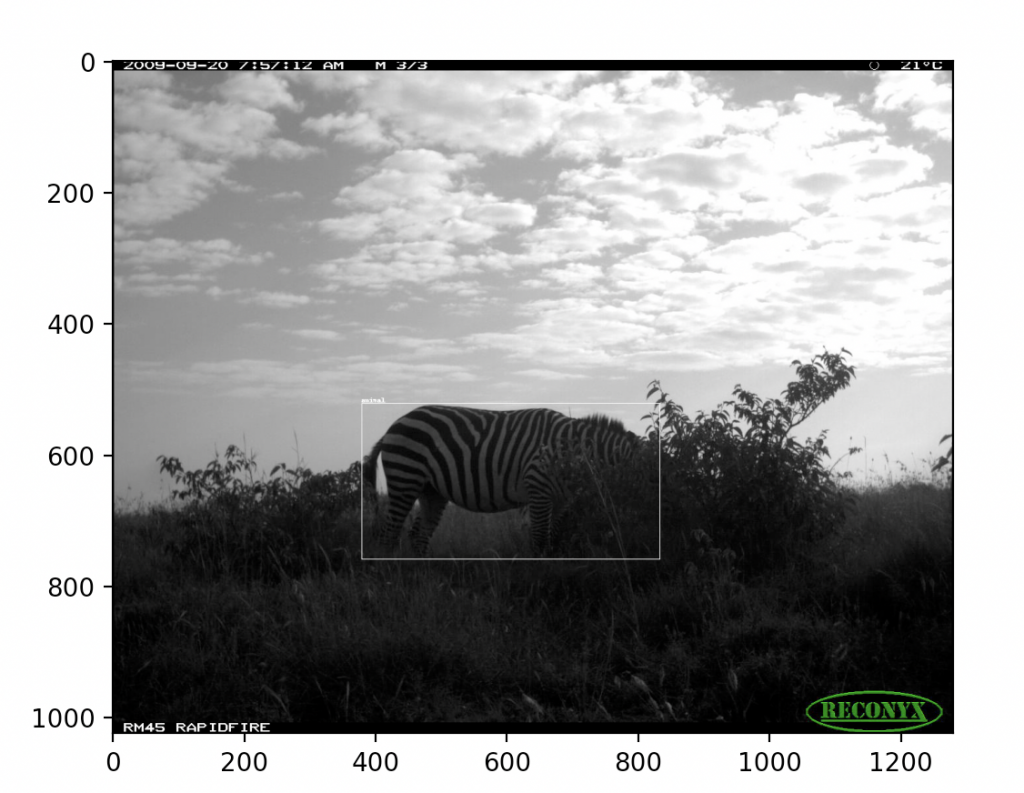

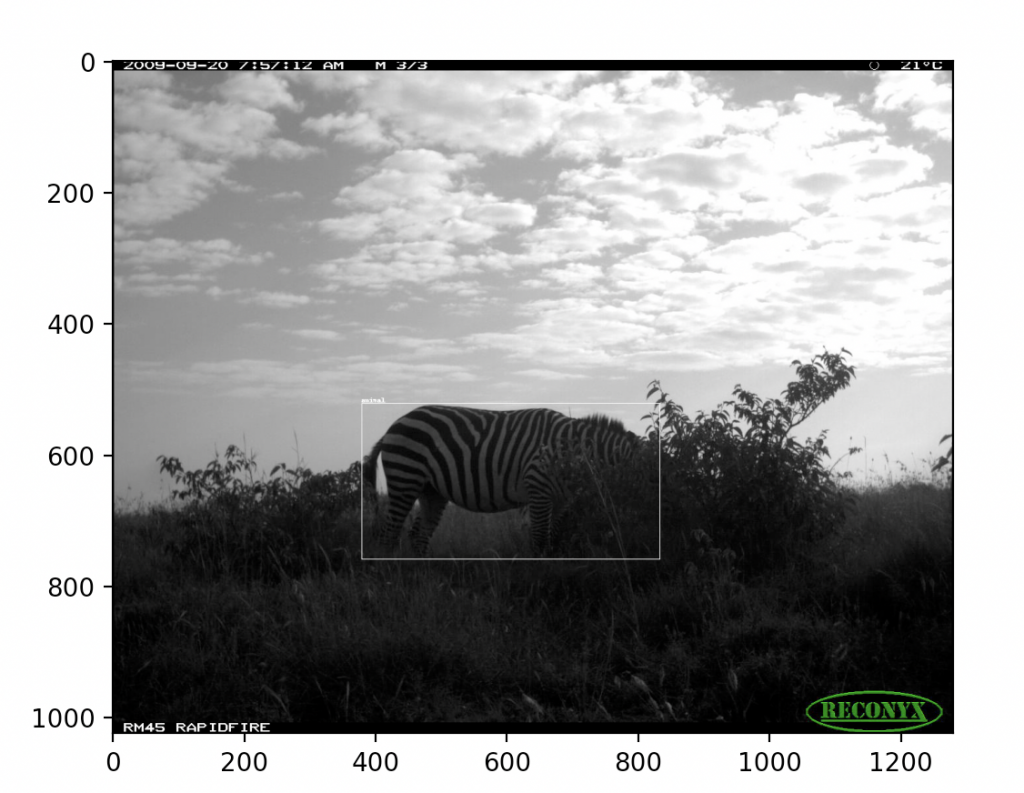

Following this, I was able to test the edited bounding boxes. Tests on random sets of files from the train, validation, and test data gave good results, and after some scaling I was satisfied with how the boxes were represented in the YOLOv5 format.
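For reference, a YOLOv5 label line holds a class index followed by the box center, width, and height, each normalized by the image dimensions. The sketch below shows that scaling, assuming the source annotations store pixel corner coordinates (x_min, y_min, x_max, y_max); the original annotation layout is not stated above, and the helper names `to_yolo` and `yolo_line` are hypothetical.

```python
def to_yolo(box, img_w, img_h):
    """Convert a pixel corner box to normalized (x_center, y_center, w, h)."""
    x_min, y_min, x_max, y_max = box
    x_center = (x_min + x_max) / 2 / img_w
    y_center = (y_min + y_max) / 2 / img_h
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h
    return x_center, y_center, width, height


def yolo_line(class_id, box, img_w, img_h):
    """Format one object as a line of a YOLOv5 label .txt file."""
    values = to_yolo(box, img_w, img_h)
    return f"{class_id} " + " ".join(f"{v:.6f}" for v in values)
```

A quick sanity check is that all four values fall between 0 and 1; values outside that range usually mean the width and height were swapped or the box was left in pixel units.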

After testing, I was able to attempt re-training the model. I specified the correct destinations and checked that all hyperparameters defined in the hyp.scratch.yaml and train.py files seemed correct. Since training is a long procedure, I consulted my team for their approval and advice before running the training script for the neural net.

The issue I came across was that training on my laptop was projected to take a very long time (~300 hours), as shown below.

I asked Professor Savvides for his advice and decided to move training to Google Colab since this would be much quicker and wouldn’t occupy my local resources.

All datasets and YOLOv5 are being uploaded to my Google Drive (since it links to Colab directly) after which I will finish training the detection neural net.

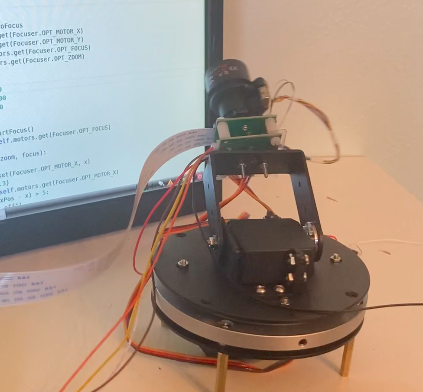

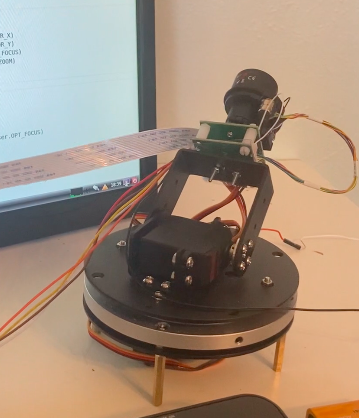

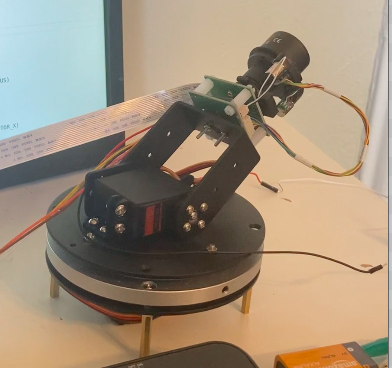

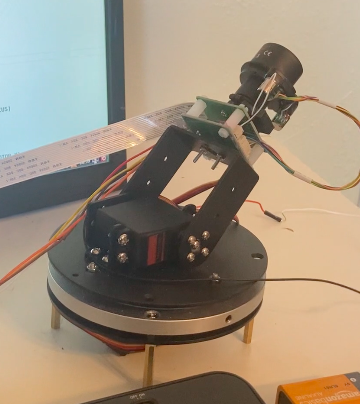

In the meantime, I am refining the procedures outlined for testing and setting a timeline to ensure adequate testing by the end of the weekend. While doing so, we came across some problems with relaying the video of the physical setup through the Jetson Nano. After some research, I am now focusing on implementing one camera until we can get some questions answered about using both in real time.

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

We fell behind a little bit due to roadblocks related to the setup, real-time video feed, and training the neural net correctly.

By the end of this week, I should be back on schedule if I am able to finish training and test the algorithm to some extent. We still need to get the physical setup to communicate with the Jetson exactly as we want. As soon as this is done, it should be a simple procedure to integrate it with the tested detection algorithm.

What deliverables do you hope to complete in the next week?

By the end of next week, I will have the detection algorithm finished and tested as described above. Further, I expect to be able to take a scan of a room using the setup as I would to detect animals, and following this, I hope to integrate and test the features of the project.

This would mean we can focus on polishing and refining the robot as needed, as well as fine-tuning the different elements of the project so they work well together.