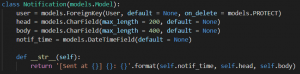

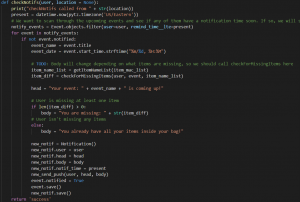

This week, I completed the removal functionality for items and events, as well as web push notifications that are sent once an event's notification deadline arrives. I used the Django webpush package to send the notifications, which required registering a service worker. Additionally, I added a new attribute to the Event model, “notified,” so that our checkNotifs function doesn’t send a notification for the same event more than once, and I created a Notification model for debugging and logging. This feature can be viewed here, and the logic for the checkNotifs function can be seen below:

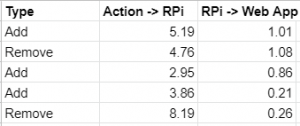
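For readers who can't view the screenshot, here is a minimal sketch of the idea behind checkNotifs, not our actual code. It uses a plain dataclass in place of the Django Event model, and the names `notify_at`, `check_notifs`, and `send` are assumptions made for illustration; only the “notified” flag comes from our model.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Callable, Iterable, List


@dataclass
class Event:
    """Stand-in for the Django Event model (field names other than `notified` are assumed)."""
    name: str
    notify_at: datetime      # the event's notification deadline
    notified: bool = False   # set once a push has gone out


def check_notifs(events: Iterable[Event],
                 send: Callable[[Event], None],
                 now: datetime) -> List[Event]:
    """Send one push per event whose deadline has arrived; return the events notified."""
    sent = []
    for event in events:
        # Skip events already handled or whose deadline is still in the future.
        if event.notified or event.notify_at > now:
            continue
        send(event)
        event.notified = True  # prevents a repeat on the next check
        sent.append(event)
    return sent
```

In a Django project, `send` could be a small wrapper around the webpush package's `send_user_notification(user=..., payload=..., ttl=...)`, and the flag would be saved with `event.save()`. Passing `send` and `now` in as arguments is just a choice that makes the check easy to test without a browser.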

I also worked with Aaron to test two delays: the time between an action (adding an item to or removing an item from the backpack) and the RPi receiving the item, and the time between the RPi receiving the item and the web app interface updating. We found that the first delay fluctuates quite a bit, but once the RPi receives an item, the interface typically updates in under a second.

We have had to postpone integrating Joon’s image recognition module with the web app until Joon finishes migrating the model to AWS, so this aspect of our progress is behind schedule. We are also a little behind on the schedule learning feature and Bluetooth persistence, though we had planned the bulk of these tasks for the upcoming week rather than this past week. To catch up to our project schedule, we’ll likely focus solely on these two tasks for the upcoming week. Thankfully, we’ve also built a little slack time into our final planned week of work (week of 4/25), so we can use that time to wrap up any leftover tasks. At this point, all my individual tasks for our project have been completed, and the remaining tasks all involve some form of integration or collaboration.

In the next week, I hope to complete usability testing of our web app, the schedule learning algorithm, and Bluetooth persistence with Aaron. I also need to integrate Joon’s image recognition module into our web app’s registration process.