Weekly Report #12 – 5/4

Jerry:

I’ve been giving another go at training the neural network. This time I didn’t use the Google Open Images dataset, due to the poor quality of its bounding boxes. I reverted to the COCO + Caltech Pedestrian dataset combo, and also added negative training examples from the NYU Depth v2 dataset, which has many images of relatively cluttered rooms with few people in view. I manually removed all the images there that had people in them, and trained the network longer than before. This time we had success; the system has far fewer false positives and is better at drawing bounding boxes for people who are partially out of view.

We also tweaked the anchoring algorithm to give a grace period of around a second after a bounding box is lost, changed the smoothing algorithm to predict the motion of bounding boxes (to a certain maximum number of frames in the future), and tweaked the numbers for a smoother tracking experience. Now the system can track people who are moving relatively fast, and is pretty good at zooming in while keeping a person’s face in view.
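To make the grace period and motion prediction concrete, here is a minimal sketch of that kind of tracker. It is not our actual code: the class name, the exponential smoothing, and the default values (30 frames of grace, 10 frames of prediction, a smoothing factor of 0.5) are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Box = Tuple[float, float, float, float]  # (center x, center y, width, height)


@dataclass
class BoxTracker:
    """Smooths one bounding box and coasts through short detection gaps."""
    grace_frames: int = 30   # assumed: about one second at 30 fps
    max_predict: int = 10    # assumed cap on how many frames we extrapolate
    alpha: float = 0.5       # assumed smoothing factor (1.0 = no smoothing)
    box: Optional[Box] = None
    velocity: Box = (0.0, 0.0, 0.0, 0.0)
    missed: int = 0

    def update(self, detection: Optional[Box]) -> Optional[Box]:
        """Feed one frame's detection (or None) and get the box to follow."""
        if detection is not None:
            if self.box is None:
                self.box = detection
                self.velocity = (0.0, 0.0, 0.0, 0.0)
            else:
                smoothed = tuple(p + self.alpha * (d - p)
                                 for p, d in zip(self.box, detection))
                # Per-frame change of the smoothed box, used for prediction.
                self.velocity = tuple(s - p for s, p in zip(smoothed, self.box))
                self.box = smoothed
            self.missed = 0
            return self.box

        if self.box is None:
            return None
        self.missed += 1
        if self.missed > self.grace_frames:
            # Grace period expired: give up on this target.
            self.box = None
            return None
        if self.missed <= self.max_predict:
            # Extrapolate along the last known velocity.
            self.box = tuple(p + v for p, v in zip(self.box, self.velocity))
        # Past max_predict but still within the grace period: hold position.
        return self.box
```

Capping the prediction separately from the grace period keeps a fast-moving box from drifting far off-screen while we wait for the detector to find the person again.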

We also made the person tracking code run automatically on boot. The last step is to build the enclosure, collect some demo footage, and prepare for the final demo.

Nathan:

This week I’ve been spending most of my time preparing the enclosure for the final presentation. I’m not quite done sculpting the box in Solidworks, but I plan to have it complete by tomorrow (Sunday) morning. I’ll post an update then. For now, however, I’ll describe some of the specifics. It will be 9″x7″x4″ (dimensions subject to review), made out of plywood procured from the Makerspace, and laser cut to fit. It’s taking slightly longer than I expected because I’m making the joints interlock, both for the better aesthetic and for the superior mechanical properties. It also makes it easier to test-fit before we glue it all together. There will be holes in the back for three things: a power button, a 12V DC jack for power, and a combined HDMI and USB port. The USB portion will be vital for attaching a storage device, while the HDMI will be for optional display functionality. I’ll edit in some good pictures once it’s done, but I plan to have the cutting done by 10am or so, and the final assembly and such done by noon. That will leave time to do it all over if something goes wrong, just in case, but I’m confident no such situation will come to pass. This will be my last significant contribution to the project before the final report.

Karthik Natarajan:

This week we didn’t have too much to do as most of our project was done for the final presentation. So, overall, throughout this week, after Jerry finished training an updated neural network, I helped him with modifying the code and tweaking some of the parameters to make our motion smoother with the new neural network. On top of that, I have been helping Jerry work with the motion sensor to incorporate the shutdown/turn-on feature. Outside of that, I helped Nathan plan the measurements out for the enclosure so we can use the laser cutter tomorrow morning. At this point, I think most of the risk factors are gone and we are pretty much on schedule, so we should be ready for the public demo. 🙂

Team:

It’s the home stretch, but we’re in good shape for the final demo on Monday. The only unfinished items are the final tuning of the tracking, which is more of an indefinite polishing step than a well-defined to-do item, and the integration with the enclosure, which should be completed by mid-day tomorrow. There are no remaining risks to the project, nor should there be any at this late stage, and barring some sort of catastrophe, we should be all set for a successful Monday demo. There are no other changes to report.

Weekly Report #11 – 4/27

Jerry:

So as mentioned on the team report, we worked hard to port all our code to run with the Arduino library. In that version of the code, the focus and zoom controls moved independently of one another, so focus would be lost while the zoom was being modified. In addition, the zoom controls were quite slow. I realized that the slow zoom was caused by a bottleneck in the I2C bus, and that the clock speed of the I2C bus was configurable to be as much as 10 times higher than the default value. Over the week, I modified the Arduino code to simultaneously modify both zoom and focus so that the camera never loses focus while zooming in, and enabled the faster I2C clock option so that zooming takes much less time.
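The idea of moving zoom and focus together can be sketched as follows. This is Python for readability rather than the Arduino C we actually run, and `interleaved_moves` and `focus_for_zoom` are hypothetical names: the latter stands in for whatever mapping gives the in-focus step count at a given zoom position.

```python
from typing import Callable, Iterator, Tuple


def interleaved_moves(zoom_from: int, zoom_to: int,
                      focus_for_zoom: Callable[[int], int]
                      ) -> Iterator[Tuple[str, int]]:
    """Yield ("zoom", +/-1) and ("focus", n) commands so focus follows zoom.

    After every single zoom step, the focus motor is moved by however many
    steps are needed to stay on the focus curve, so the image never drifts
    far out of focus in the middle of a zoom.
    """
    direction = 1 if zoom_to >= zoom_from else -1
    zoom = zoom_from
    focus = focus_for_zoom(zoom)
    while zoom != zoom_to:
        zoom += direction
        yield ("zoom", direction)
        target = focus_for_zoom(zoom)
        if target != focus:
            yield ("focus", target - focus)
            focus = target
```

Each yielded command would become one motor move over the I2C bus, which is why the faster bus clock matters: interleaving roughly doubles the number of transactions per zoom move.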

When that was done, I tried to improve the Yolo-v3 neural network by getting a larger data set with clearer images, and performing data augmentation on the data set to improve the network’s ability to handle bounding boxes that extend outside the image. However, when I trained the network with the new data set it looked like the new network performed more poorly. It turned out that the data set I used (Google’s Open Images data set) had many missing and inaccurate bounding boxes, making it much lower quality than COCO and the Caltech Pedestrian dataset even with a larger size and higher quality images. I think I will still try to re-train with just data augmentation.
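One common way to do this kind of augmentation is to crop the training image so that some people are cut off by the edge, then clip their boxes to the crop. The sketch below shows only the box bookkeeping; the function name, the corner-coordinate box format, and the `min_visible` threshold of 0.3 are assumptions for illustration, not the settings I trained with.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def crop_boxes(boxes: List[Box], crop: Box,
               min_visible: float = 0.3) -> List[Box]:
    """Clip boxes to a crop window and shift them into crop coordinates.

    Boxes with less than `min_visible` of their area inside the crop are
    dropped, so the network is not asked to label slivers of a person.
    """
    cx1, cy1, cx2, cy2 = crop
    kept = []
    for x1, y1, x2, y2 in boxes:
        # Intersection of the box with the crop window.
        ix1, iy1 = max(x1, cx1), max(y1, cy1)
        ix2, iy2 = min(x2, cx2), min(y2, cy2)
        if ix2 <= ix1 or iy2 <= iy1:
            continue  # no overlap (also skips zero-area boxes)
        visible = (ix2 - ix1) * (iy2 - iy1) / ((x2 - x1) * (y2 - y1))
        if visible >= min_visible:
            kept.append((ix1 - cx1, iy1 - cy1, ix2 - cx1, iy2 - cy1))
    return kept
```

In a full pipeline the crop window would be chosen at random per training sample and the image pixels cropped to match.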

I have also implemented a mechanism to switch between multiple targets in the Ultra96 code, locking onto one person for certain periods of time before switching to another. This should make up one of the last components left on the checklist of features to implement.
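A minimal sketch of this kind of switching logic is below. It assumes each visible person already has a stable integer ID from the tracker, and the `TargetSwitcher` name and the 150-frame dwell time are made up for the example; the real Ultra96 code may choose the next target differently.

```python
from typing import List, Optional


class TargetSwitcher:
    """Locks onto one person for a dwell period, then rotates to the next."""

    def __init__(self, dwell_frames: int = 150):  # assumed: ~5 s at 30 fps
        self.dwell_frames = dwell_frames
        self.current: Optional[int] = None
        self.held = 0  # frames spent on the current target

    def select(self, visible_ids: List[int]) -> Optional[int]:
        """Return the ID to follow this frame, or None if nobody is visible."""
        if not visible_ids:
            self.current, self.held = None, 0
            return None
        if self.current not in visible_ids:
            # Current target left the frame (or we had none): take the first.
            self.current, self.held = visible_ids[0], 0
        elif self.held >= self.dwell_frames and len(visible_ids) > 1:
            # Dwell time is up and someone else is available: rotate.
            idx = visible_ids.index(self.current)
            self.current = visible_ids[(idx + 1) % len(visible_ids)]
            self.held = 0
        self.held += 1
        return self.current
```

With a single person in view the switcher simply stays locked on, so the dwell timer only matters when there is a choice to make.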

Karthik Natarajan:

This week I have been working on saving the video stream to the SD card on the board so we can look at the footage when necessary. I initially tried to use GStreamer so we could take the compressed stream directly from the camera. However, this approach resulted in a lot of compilation errors due to “omxh264dec”, “h264parse”, or some other package not being installed. To fix that, I even tried installing all of the plugin packages available for GStreamer, but to no avail :'(. After toiling with this for a fairly long time, I moved over to OpenCV, which, after some changes to the Makefile, started to save uncompressed video on the board. However, if we try to compress the frames with OpenCV, our code runs slowly and drops frames. Therefore, as of right now, I am looking into both GStreamer and OpenCV to figure out which option is better able to fix this problem. Also, earlier in the week I helped port the CircuitPython code to Arduino C, as mentioned in the team report.

Nathan:

After the Monday demo (see Team section for details), I spent my time gathering measurement data and preparing the presentation for this upcoming Monday or Wednesday. The main topic of the presentation will be the evaluation of our system, including power and identification accuracy measurements, so I’ve been focusing on measuring the power draw of the system and making organized comparisons to our original requirements. To that end, I’ve also been testing different DPU configurations so that we can choose the optimal one for our final product, based primarily on framerate data from the execution of our neural net, though I’m also experimenting with some Deephi profiling tools. I’m also working on updating past diagrams and such for the presentation, including making note of all the additional customizations we’ve made since the design review. Once this presentation is done, I will move on to working on the poster and wrapping up the construction of the enclosure, for which I picked up the internal components earlier this week.

Team:

It’s crunch time for the project, but while there are still a number of tasks to accomplish before the end, we feel generally positive going into the final stretch. All of the major components are functional, and the remaining tasks (integration of the motion detection, tuning of power, tuning of tracking, and enclosure) are either simple or just polishing existing aspects of the system.

We had our last in-lab demo earlier in the week, and for that we finalized the integration of the pan, tilt, and zoom systems. We unfortunately had to convert our code from CircuitPython to Arduino C to fit within the Feather M0’s RAM limit (32KB), but that only temporarily slowed us down. We had a few bugs regarding the zoom behavior, but those have since been fixed, and we’ve sped up the motion system’s performance dramatically by tuning the I2C bus used for communication between the Featherboard and Featherwings.

The outstanding risks are the integration/completion of the remaining components (listed above), but there is no longer anything that fundamentally threatens the integrity of the project. By the end of this coming week, the remaining tasks should be complete, and we should be essentially ready for the final demo. Accordingly, the design of the system and Gantt chart remain unchanged from last week.

Weekly Report #10 – 4/20

Jerry:

This time, I’ve gotten the DPU properly instantiated and working on our custom boot image. It turns out that debugging these pieces of firmware can be pretty tricky. The first few times I re-compiled the Petalinux image, I excluded the DNNDK files, DPU driver, and other libraries like OpenCV, and added them in after compilation. After shuffling about to find where to put the files, I managed to make everything except the DPU driver work. The DPU driver, on the other hand, seemed eerily silent. It had a debug print in the first line of its initialization, but I never saw that line. I thought it was because the debug prints weren’t enabled in the kernel, but I tried several kernel configurations and still saw no prints. I then thought it was because the driver was compiled in release mode, removing all printk’s, so I recompiled the DPU driver (this time including it and all the other libraries directly in the Petalinux boot image at compilation), but it still didn’t make a noise. Finally, after some Google searching for what others found when their (totally unrelated) drivers didn’t print, I found out that I needed to add something to the Linux device tree. It was great seeing all my redundant print statements show up once I recompiled the DPU driver with the device tree update.

There was also a little moment of dependency hell trying to make our Yolo-v3 run. At first I had an error message that the architecture of our DPU wasn’t supported by DNNDK (but why would you include this architecture as an option in the IP??). One painful Vivado recompilation later, I found out that the convolutional neural network IP instantiated an old version of the DPU. Luckily, DNNDK offered a (non-default) compiler for the older version of the DPU. Guess what, that DPU supported the architecture I started with earlier… Another painful Vivado recompilation, and I finally got the DPU to correctly predict a bounding box around a test image.

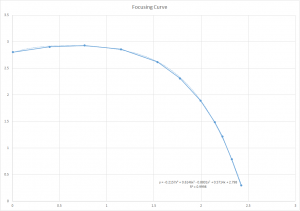

After that we focused on the focus / zoom equipment. I used the training data from Karthik’s calibration to fit a curve, where it turned out that a 4th degree polynomial had a very close fit to our observed points.
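For reference, a least-squares polynomial fit like this can be written in a few lines of plain Python. This is only a sketch of the technique (the actual fit was done in a spreadsheet), the function names are made up, and no calibration numbers are included here. With NumPy available, `numpy.polyfit` does the same job in one call.

```python
from typing import List, Sequence


def polyfit(xs: Sequence[float], ys: Sequence[float], degree: int) -> List[float]:
    """Least-squares coefficients c[0] + c[1]*x + ... + c[degree]*x**degree."""
    n = degree + 1
    # Augmented normal equations (A^T A | A^T y) for the Vandermonde matrix A.
    m = [[sum(x ** (i + j) for x in xs) for j in range(n)]
         + [sum(y * x ** i for x, y in zip(xs, ys))]
         for i in range(n)]
    # Gaussian elimination with partial pivoting.
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for k in range(col, n + 1):
                m[r][k] -= factor * m[col][k]
    # Back substitution.
    coeffs = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * coeffs[j] for j in range(i + 1, n))
        coeffs[i] = (m[i][n] - tail) / m[i][i]
    return coeffs


def polyval(coeffs: Sequence[float], x: float) -> float:
    """Evaluate the polynomial at x using Horner's rule."""
    result = 0.0
    for c in reversed(coeffs):
        result = result * x + c
    return result
```

Calling `polyfit(zoom_positions, focus_steps, 4)` on the calibration points gives the five coefficients, and `polyval` then returns the focus position for any zoom value. Scaling the zoom positions to a small range such as 0 to 1 before fitting keeps the normal equations well conditioned.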

Karthik Natarajan:

Throughout this week, I mostly worked on calibrating the zoom lens and finding the proper value of focus. Firstly, after integrating Jerry’s new stepper library into our featherboard, we were able to move multiple steps at a faster rate than before. We have also been able to decrease the heat coming from the motors that was mentioned before, by releasing the stepper motors once they have finished moving. After integrating Jerry’s modified stepper.py library, I worked on getting the zoom lens to focus properly at different levels of zoom. To reduce both the complexity and the time for each adjustment, I decided to use a function to model the relationship between the focus and the zoom of a lens. This idea was initially prompted by finding an analytical curve for the relationship online. However, because this curve was lens-specific, I manually looked for the best focus step values for a fixed set of 11 zoom values, judging by how sharp a person appeared at a distance proportional to the zoom.

After doing this for a while, I saw that there was a small difference due to hysteresis in the proper focus value when moving the stepper motor backward vs forward. To fix this problem, we introduced a slack variable which changed the number of steps based on which way the stepper motor was moving. After getting all of these points, Jerry graphed the data in Excel and we came up with the curve below.
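One way to picture the slack variable is as a few extra steps added whenever the motor reverses direction, to take up the play in the gears before the lens actually starts moving. The sketch below is an interpretation of that idea rather than our exact code; the `FocusAxis` name and the slack value used with it are assumptions.

```python
class FocusAxis:
    """Tracks a stepper position and adds slack steps on direction reversal."""

    def __init__(self, slack_steps: int):
        self.slack_steps = slack_steps  # assumed to come from calibration
        self.position = 0               # logical lens position in steps
        self.last_direction = 0         # +1, -1, or 0 if we have not moved yet

    def steps_for(self, target: int) -> int:
        """Return the signed number of motor steps to issue to reach target."""
        delta = target - self.position
        if delta == 0:
            return 0
        direction = 1 if delta > 0 else -1
        extra = 0
        if self.last_direction and direction != self.last_direction:
            # Reversing: spend extra steps to take up the mechanical slack.
            extra = self.slack_steps * direction
        self.position = target
        self.last_direction = direction
        return delta + extra
```

Keeping the logical position separate from the number of steps issued means the focus curve can stay a single function of zoom, regardless of which way the motor last moved.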

Nathan:

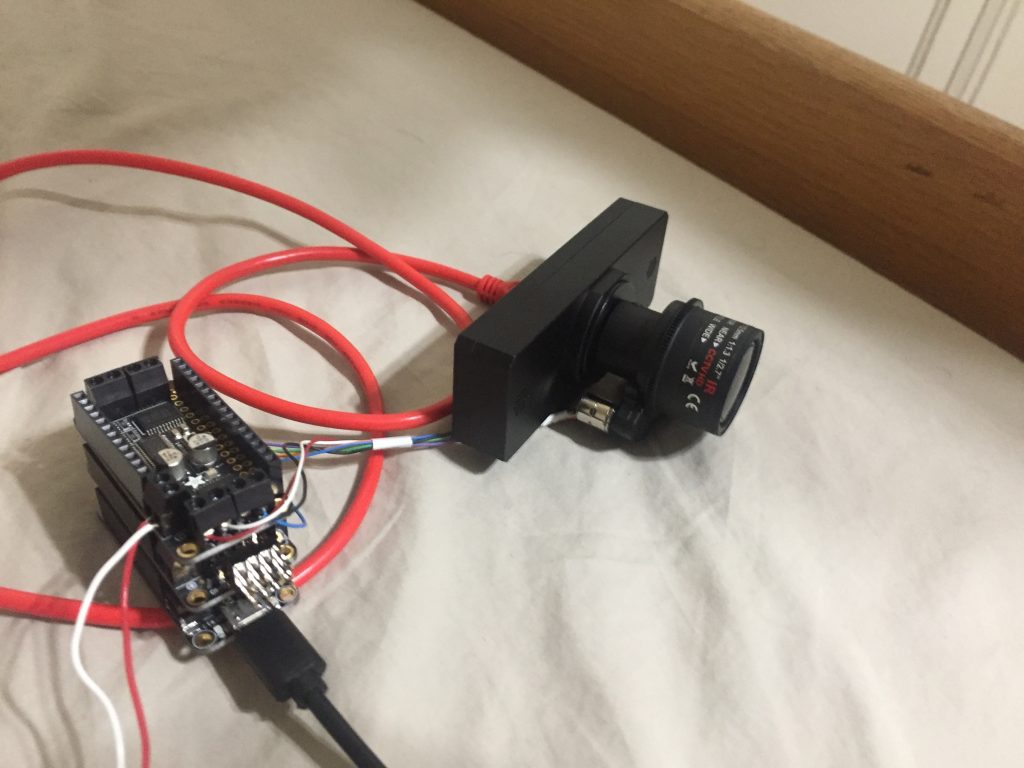

I spent this week primarily on integrating the Logitech C920 with the Kurokesu enclosure we received last week. This was substantially more difficult than expected, and involved a fair amount of, shall we say, “brute force” in the disassembly of the existing C920 enclosure. The screws in particular had an almost malign reluctance to budge, and so needed to be goaded in a process involving some pliers and a vice clamp. The soldering itself was also rather persnickety, involving delicate manipulation of fine wires, and even scraping away a layer of the PCB in a process that has no doubt measurably shortened my lifespan. In any case, after much turmoil, the C920 PCB was successfully transplanted into its new home in the Kurokesu case, and it works! This thankfully removed one of the major risks our project faced, and paves the way for a smooth transition into our Monday demo and final presentation.

This upcoming week, I’ll be working with Karthik and Jerry to prepare for our demo on Monday, and will actually get started on buying/building the case for all the components. This is more an aesthetic matter than anything else, but I consider it important for the final presentation.

Team:

This week was generally a success for our team. It was more work than expected, but the C920 PCB was transferred to the Kurokesu enclosure, and the zoom lens successfully integrated. A picture is included at the bottom of this blog post, though regretfully we forgot to take a picture of the intermediate steps. This removes one of the biggest outstanding risks this project faced, and completes a key milestone for the Monday demo.

As an update from last week, and pending any breakthrough regarding the matter, we’ve decided to go through with the power-on/power-off wake behavior for the Ultra96, with boot times around 5s, acceptable for our use case. To compensate for that longer than anticipated delay, we’re working on introducing an intermediate power state with the DPU deactivated, allowing for primitive low-power inference before the full capabilities of the SoC are engaged. This change allows us to remain in a power-on state more liberally, improving the responsiveness of the system within our power profile.

Other tasks for this project’s completion include the construction of the case/package, final tuning of the zoom/focus, and integration of the power system. However, these constitute more of a “to-do” list than actual threats to the project, so we feel quite confident in the project’s state heading into the final stretch, and at this time have a significant majority of the core systems complete.

As for scheduling, there has been some minor clean-up regarding remaining tasks, including breaking up the optimization and final system integration, but there are no major changes to report.

Weekly Report #9 – 4/13

Karthik Natarajan:

So, after last week I worked more on moving the zoom motors. Specifically, I was able to use the stepper motor library provided by CircuitPython to get the focus motor to move one step at a time. We moved one step at a time because CircuitPython only supported moving single steps. But as time went on, we realized that there were two problems with this. Firstly, the stepper motors tended to get pretty hot from continuously starting and stopping after each step. Secondly, because the stepper motor only moved one step at a time, we weren’t able to move it as fast as we wanted to. Because of this, we decided to re-implement the stepper motor library, as described in Jerry’s status report. After this, I decided to test the library on the zoom lens. As of right now, we are still in the process of testing that, but we have ensured that the new library keeps all the necessary functions from the default CircuitPython stepper motor library. We will have more information about this on Monday.

Jerry Ding:

After spending much effort on this and looking carefully at the board schematics, it looks like it’s hard to further decrease the Ultra96 board’s power consumption below 2W without fully shutting it down. Though I’ve successfully gotten the processing system (CPU, memory, power management unit) and the programmable logic powered down (and confirmed this with debug prints), the remaining peripherals still consumed a non-negligible amount of power.

Instead, we decided to follow a backup plan and fully power down the system every time. The original boot time was over 20 seconds, even with a relatively simple hardware block diagram, but by disabling kernel features, optimizing the u-boot bootloader, compressing the kernel image, and moving the PL programming step to later in the boot sequence, we were able to get this down to 5 seconds.

Though taking time to boot up is suboptimal, we believe it is still not too difficult to meet our originally stated goals of zooming into a person up to 20 feet away subject to a variety of paths and lighting conditions. Our microwave sensor’s range is rated for 53 feet, and we have two sensors to get essentially a 180 degree field of view, so there will be plenty of buffer distance to work with. In addition, I looked at many example videos of package thieves, and they usually are not running or in a particular hurry on their entry path (possibly to avoid looking suspicious). Five seconds after motion is detected, the person should be easily filmed. Though a longer detection range will cause more false positives, the fact that no power is being consumed in the off state at all will make our power budget easier to work with.
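The buffer distance can be checked with some quick arithmetic. The 53 ft range, 5 s boot time, and 20 ft target come from the numbers above; the walking speed of 4.6 ft/s (about 1.4 m/s) is an assumed typical pace, and the calculation takes the worst case of someone detected at the edge of the sensor range walking straight at the camera.

```python
def distance_at_ready(sensor_range_ft: float, boot_time_s: float,
                      walking_speed_ft_s: float) -> float:
    """Distance from the camera when boot finishes (straight-line approach)."""
    return max(0.0, sensor_range_ft - boot_time_s * walking_speed_ft_s)


def max_speed_for_target(sensor_range_ft: float, boot_time_s: float,
                         target_distance_ft: float) -> float:
    """Fastest approach speed that still leaves the person at the target
    distance or farther when the system is ready."""
    return (sensor_range_ft - target_distance_ft) / boot_time_s


SENSOR_RANGE_FT = 53.0   # rated microwave sensor range (from the report)
BOOT_TIME_S = 5.0        # optimized boot time (from the report)
TARGET_FT = 20.0         # distance at which we want to be zooming in
WALKING_FT_S = 4.6       # ASSUMPTION: typical walking pace, ~1.4 m/s

remaining = distance_at_ready(SENSOR_RANGE_FT, BOOT_TIME_S, WALKING_FT_S)
speed_limit = max_speed_for_target(SENSOR_RANGE_FT, BOOT_TIME_S, TARGET_FT)
```

Under these assumptions the person is still about 30 ft away when the board finishes booting, and they would have to approach faster than about 6.6 ft/s (a jog) to get inside 20 ft before the system is ready.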

In addition to this work, I wrote an alternative version of the CircuitPython stepper motor library code to allow multiple steps in one function call. I made sure that the minimum amount of Python code will be executed between each step, and wrote a good number of unit tests to make sure that my version of the library behaves identically to the original. Once the stepper motor work is done, there shouldn’t be much left except polishing the user-space applications for a smooth product.
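The shape of the multi-step change is roughly the following. This is a simplified stand-in, not the library code: `MultiStepper`, `set_coils`, and `delay` are hypothetical names, and the four-entry full-step coil pattern is an assumption that depends on how the motor is wired.

```python
from typing import Callable, Tuple

Coils = Tuple[int, int, int, int]

# ASSUMPTION: a two-coils-on full-step pattern; real wiring may differ.
FULL_STEP_SEQUENCE: Tuple[Coils, ...] = (
    (1, 0, 1, 0),
    (0, 1, 1, 0),
    (0, 1, 0, 1),
    (1, 0, 0, 1),
)


class MultiStepper:
    """Moves a stepper by many steps in one call instead of one per call."""

    def __init__(self, set_coils: Callable[[Coils], None],
                 delay: Callable[[], None] = lambda: None):
        self._set_coils = set_coils  # hypothetical hook that drives the coils
        self._delay = delay          # hypothetical per-step wait
        self._phase = 0

    def step(self, count: int) -> None:
        """Move `count` steps; a negative count moves backward."""
        direction = 1 if count >= 0 else -1
        # Bind everything to locals so the loop body does as little
        # interpreter work as possible between steps.
        seq, n = FULL_STEP_SEQUENCE, len(FULL_STEP_SEQUENCE)
        set_coils, delay = self._set_coils, self._delay
        phase = self._phase
        for _ in range(abs(count)):
            phase = (phase + direction) % n
            set_coils(seq[phase])
            delay()
        self._phase = phase

    def release(self) -> None:
        """De-energize all coils so the motor does not heat up while idle."""
        self._set_coils((0, 0, 0, 0))
```

Passing a list's `append` method as `set_coils` makes it easy to unit test: the recorded coil patterns from one `step(n)` call should match those from `n` single-step calls.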

Nathan:

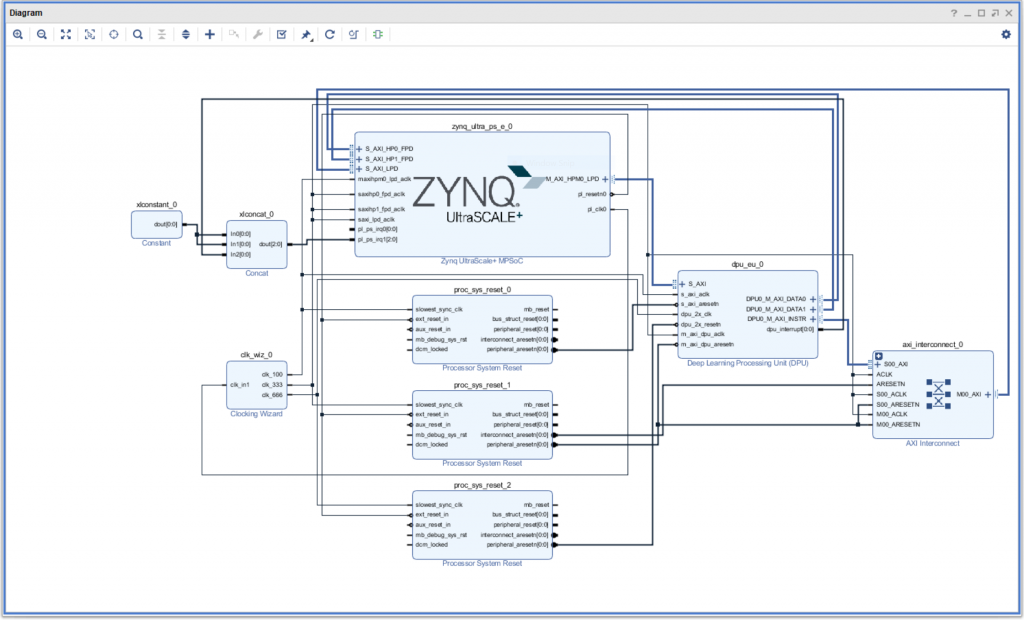

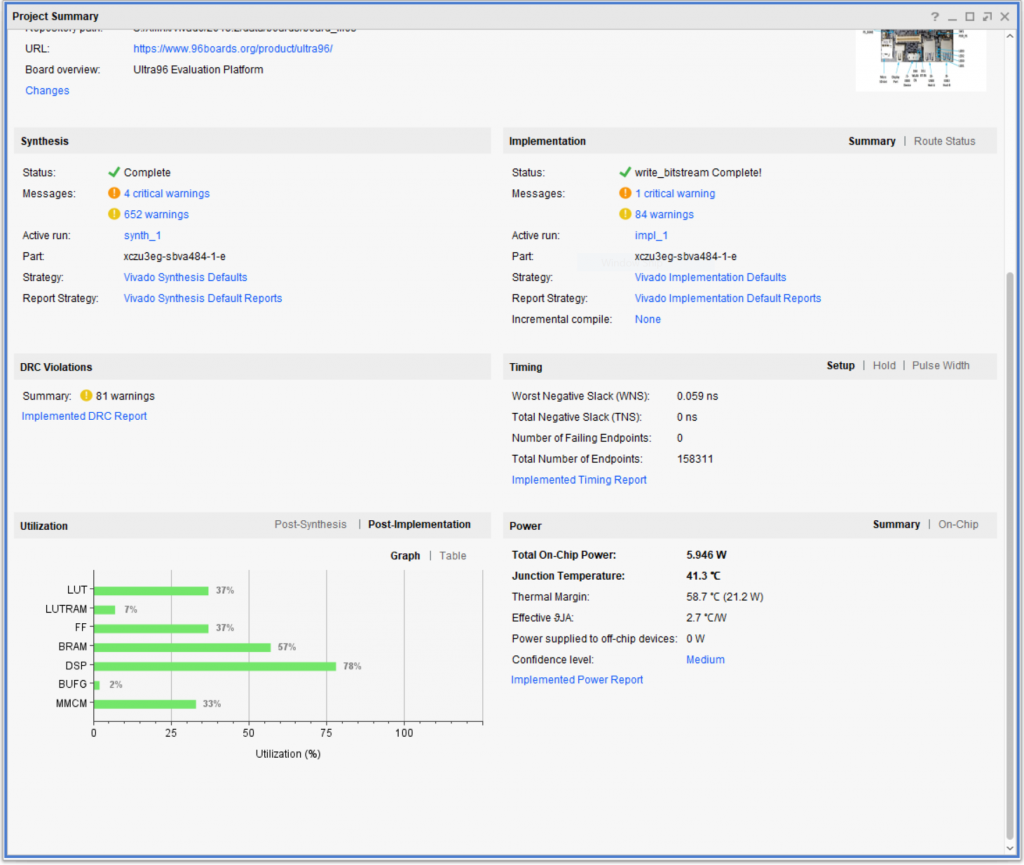

I’ll start off with an update from last week. It took longer than I expected, as Xilinx’s documentation was missing a few steps (connecting some of the clock, reset, AXI signals, and a few minor functional blocks), but I completed the DPU build. You can see my design layout below.

I used the B1600 core as a “middling” size implementation of the IP. You can see some of the parameters in the attached image. The current problem I’m struggling with is some sort of timing difficulty. It has trouble reading a timing file and reports that some part of the design does not meet the timing criteria. I’m convinced these two issues are related, so over the next week, I will be working in Vivado to get this fixed and suitable for implementation on the FPGA. Additionally, I’m working on the enclosure, but in light of the more pressing Vivado work, have pushed it back by another week. I’ll meet with Professor Nace if he’s available and ask about what I have access to in the Makerspace and get started on fabricating the basic components.

Team:

Our biggest ongoing concern as a team is the difficulty getting the board to go into a deep sleep state. While we’ve been able to suspend most activity, for reasons unknown, the board still consumes a substantial amount of power. To address this, we’ve decided to split our efforts in two directions. We will continue to try to fix the sleep behavior, while also working on reducing the boot time. While not exactly ideal, if we can get the boot time low enough, the range from our motion sensors should allow for the system to boot up before someone reaches the package, and thus we will maintain our functionality.

Another thing we’re waiting on is the C920’s enclosure from Kurokesu. It’s in shipment, and should ideally arrive within a week or so, but we do not have a confirmed arrival time as it stands. If it’s late we will need to work quickly to get it implemented in time for the end of month demo, but based on the average shipment times, this should not be necessary.

Our only major schedule change for the week is pushing back the enclosure fabrication, but there is some extension to the tuning of the power system, testing of zoom, and DPU implementation. All schedule changes are maintained in the Gantt chart, with the link provided here again for convenience: https://prod.teamgantt.com/gantt/schedule/?ids=1467220&public_keys=0rh40k2Z0hkS

Weekly Report #8 – 4/6

Karthik Natarajan:

This week I spent my time on two distinct things. First, I converted the code from Arduino C to CircuitPython so it would work on the Adafruit board. The memory problem Nathan mentioned last week turned out not to be a problem: as we looked through the files, we saw that Nathan’s Mac had automatically added unnecessary temp files to the board, and deleting them freed up a considerable amount of space. On top of that, when moving the code over, we realized that the CircuitPython servo library does not support negative degree values, so we could not use a couple of degrees of motion that were available on the Arduino. This was not really a problem either, because it did not cut off many degrees of movement.

After I finished this, I started working with the zoom lens while Nathan and Jerry looked into other tasks related to ensuring low power usage. The main problem was that the wires were all bundled together but the inputs on the board were on opposite sides. To get around this, I tried to put wires into each wire slot in the adapter, but the slots turned out to be way too thin for normal wires. On top of that, when I tried to use thin wire, it was extremely difficult to strip, and even when I did manage to strip it, it was too thin to make a reliable connection with the wire in the adapter. To fix this, I eventually cut off the adapter and stripped the wires on the zoom lens. For the next week, I will continue to work with the zoom lens and figure out how to better design the code for the 2 stepper motors so we can keep track of which direction to move them.

Nathan:

This week, in addition to the team demo work, I spent time working with the newly released DPU IP from Xilinx, which opens up the opportunity to modify the DPU design itself and add our own IP (primarily for power management). We had initially intended to do this from the start of the project, but the lack of access to the IP made us dismiss the opportunity until now. It may be short notice, but I’m really excited to be able to play around with these specifications. Right now our design is a bit overkill. Paring it down can ideally let us save a significant amount of power. I almost have the DPU instantiated on the Ultra96, as in it should be working within an hour from this post. Just need to fiddle with some latencies a bit. I’ll post an update when I succeed.

On the topic of power, I had initially hoped we could reduce active power by cutting the voltage to certain components commensurate with the clock speed. Sadly, while this may technically be possible, it basically involves reprogramming the PMIC on the fly, which is beyond the scope of our project. For now, I’ll stick to reducing clock speed and logic usage.

It’s somewhat behind schedule (with the Gantt chart updated accordingly), but I’ve also been sketching out the details for our enclosure (i.e. the box we will put all the components in). Ideally I want to have it be mostly a sealed system (though likely a hinged lid for convenience), with access to some important ports (USB, power, display) on the back and top. I’m thinking wood will make a fine material, but need to double check that it’ll not interfere too much with the WiFi.

Jerry:

This week I was mostly free of work from other classes, so I dove deep into the Vivado work trying to create a hardware design that has all the hardware components needed for our low power requirements. Basically, the amount of control we have over the default Linux distribution given by Deephi is limited, so we need to create our own design with a superset of its features. Mainly, we needed to include these in our design:

* The ability to run Linux

* The ability to control the fan

* The ability to enter a suspended state

* An instantiated Deephi DPU

Firstly, once I was able to install Vivado it was fairly easy to make a bitstream that simply turned the fan off.

Then I had to install PetaLinux, which was a huge pain. The installer kept failing, saying that it couldn’t install the Yocto SDK. This was frustrating to debug because the installer always took about 30 minutes to initialize and never cached any of its progress, plus there were no useful debug print statements to work off of. Eventually I discovered it was because my path had a symbolic link in it (which I put there because I had drives whose names had spaces in them; not using a symbolic link causes even more problems). Then I realized that the board support package for the Ultra96 only supported 2018.2, which is apparently not compatible with the version I had (just one version higher, at 2018.3)… So I had fun reinstalling Vivado and Petalinux 2018.2 instead.

Using Petalinux was also incredibly painful. The project folder grew to about 30GB, with 300,000 files in some directory. This makes the build very slow (2+ hours), and worse, if you misconfigure something there’s no easy way to undo it, so you need to recreate the project. The saving grace was that the build process had a cache, so I could copy the folder as a backup and revert to that if I broke something. Still, copying took about 30 minutes, and building took at least 30 minutes each time even with the cache.

After a dozen reverts and builds, I had a working Petalinux distribution where I could control the fan. Soon after I incorporated the datapath that lets me do deep sleep. However, it seems like the board is still using a non-negligible amount of power when we try to start deep sleep, so we suspect we misconfigured something. This is where I’m at now.

In the process we found out that the programmable logic takes a significant amount of static power, but I was able to add kernel features to let me turn off the PL power domain. The PL loses its state when turned back on, but I also incorporated and tested a driver that will let me reprogram the PL once it is powered back on. So this is under control.

Once the deep sleep works, we’ll actually be pretty close to a finished product. First we would need to also instantiate the DPU, which Nathan is working on. We would then have to attach the zoom lens and calibrate it. Then it’s a bit of polish and we should be done.

Team:

For the demo, we unfortunately gave a bad impression by spending too long getting the system running. We thought we were thoroughly prepared to set up the system on the day of the demo, since we had tested it over the weekend and had made similar setups in the lab, but we hadn’t accounted for the one variable that differed from all previous setups. We wanted to move the system so that we could step farther away from the camera and give it a clearer view, but that meant connecting it to a different monitor, which we had never used before. Not knowing the ports of that monitor very well, we accidentally connected to its display out port, so the monitor did not respond at all. We also learned that our demo application crashes when there is no available monitor, since it imports OpenCV, whose routines crash when there is no display. We soon moved back to the original monitor, but by then about 10 minutes had passed. We only found out later why the first monitor didn’t respond. Otherwise, however, the functional aspect of the demo ran smoothly, and the system was able to successfully track all who tried it.

We also updated the Gantt chart to push back the design/manufacturing of the enclosure, because of the problems we have had with the low-power parts of the project.

Weekly Report #7 – 3/30/19

Karthik Natarajan:

This week I spent most of my time writing code to interact with the servos and the motion sensor. Specifically, throughout Monday and Wednesday, I worked on getting the servo libraries working so we could move our board. Initially, I thought the problem was with my code, so I kept looking for the error there. However, after looking at the signal on the oscilloscope with both Jerry and Nathan, we realized that the pulse we were sending was not what we assumed. Instead of getting a 1 ms pulse, we were getting a distorted spike. To fix this, we tied together the grounds of the Arduino we were using and the power source. With that fixed, the code swept both servos on the pan-tilt configuration. After that, with a little bit of modification to the code, I was able to make the servos move only when the sensor detected motion. Through this we learned that the motion sensor output is active low. Overall we should be on schedule for both the demo and the final project deadline. Most of what is left is integration. Specifically, I will probably try to write code that works with the neural network.
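Two details from this debugging session are easy to get backwards, so here is a small sketch of both. The 1000 to 2000 microsecond pulse range over 0 to 180 degrees is an assumption based on typical hobby servos (our servos may use different limits), and both function names are made up for the example.

```python
def angle_to_pulse_us(angle_deg: float, min_us: float = 1000.0,
                      max_us: float = 2000.0, max_angle: float = 180.0) -> float:
    """Map a servo angle to a pulse width in microseconds.

    ASSUMPTION: linear mapping with 1000 us at 0 degrees and 2000 us at
    180 degrees, which is common for hobby servos but not universal.
    """
    if not 0.0 <= angle_deg <= max_angle:
        raise ValueError("angle out of range")
    return min_us + (max_us - min_us) * angle_deg / max_angle


def motion_detected(pin_level: int) -> bool:
    """Interpret an active-low sensor pin: a low (0) reading means motion."""
    return pin_level == 0
```

On the oscilloscope, a correct signal for the 0 degree position under these assumptions is a clean rectangular pulse about 1 ms wide, which is what we were expecting to see instead of the distorted spike.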

Nathan Levin:

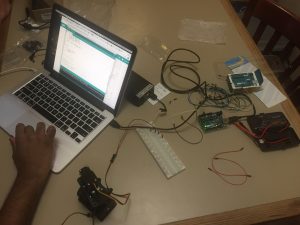

This week I soldered the Adafruit boards together, and have started working with them. I have a basic proof of concept for UART communication working on the Feather’s side of things (see attached image), though it is currently buggy. I think a little more tweaking with the serial settings should be able to fix the bug of not displaying past the first character. I’m currently working on getting communication and control working between the Adafruit board and the Ultra96 for controlling the servos, which is the last major component needed before the demo on Monday.

Relatedly, it looks like our Feather board has too little storage to contain all of the CircuitPython libraries we’d want, so we will use the Arduino ecosystem for now. This is a slight pain, but since we caught it now, it should not prove much of a long-term setback.

Jerry:

I’ve looked into turning off the fan for the board, which involves controlling one of the programmable logic I/O pins. There were some tutorials for how to route the pin to a custom datapath / IP block, but the main problem is that these steps typically occur before getting the boot image. Since we already have the final boot image, this may be more difficult. I have created a forum topic on Xilinx’s webpage, and am waiting for the responses; if controlling the fan isn’t possible on the boot image, we may consider a backup plan where we wire the fan differently so that it can be turned off with a hardware switch.

I have also learned how to create a new UART device on the Ultra96, which we can use to communicate with the FeatherBoard M0. Once the FeatherBoard side is set up, everything should be ready to make a demo of the camera tracking a person in view. The motion sensor also works now, so the next step will be to wake the board with the motion sensor.

Team:

As a team, we worked together on some of the UART debugging and communication. Our immediate goal for today and tomorrow (this weekend) is to get a functioning demo working for lab on Monday. We have individual subsystems working, but the key now is to integrate them and have the camera follow someone under the motion subsystem’s control and the software’s analysis/dictation.

Weekly Report #6 – 3/23

Jerry:

I was able to successfully quantize and compile the trained Yolov3-tiny network, so that it runs on the Ultra96 board as a demo taking in a camera feed and showing bounding boxes. Right now this DNNDK version of the network doesn’t match the behavior of the original (so the bounding boxes are wrong), but I believe I know the reason for the discrepancy. Soon, we should be able to get a working version of this demo.

When I get this done, I will turn back to looking at implementing the lower power modes.

Karthik:

Because we got all of the materials for the project, I started and finished assembling the pan-tilt servo mount. This mount essentially allows us to move the camera, when mounted, both horizontally and vertically as needed to track a person. There were some complications with regards to the screws on the servo motors but we were eventually able to get those off even if our methods were less than conventional, to say the least. Once Jerry finishes getting the neural network loaded, we will have to start working on the control algorithms for moving the servo.
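As a starting point for the servo control algorithm, one simple option is a proportional controller that nudges the pan and tilt angles toward the center of the detected bounding box. This is only a sketch under assumptions: the function name, the gain value, the 0-180 degree limits, and the sign conventions (which depend on how the servos are mounted) are all hypothetical.

```python
def pan_tilt_step(box, frame_size, angles, gain=0.05, limits=(0.0, 180.0)):
    """Compute one proportional-control update of the pan/tilt angles.

    box:        (x_min, y_min, x_max, y_max) of the tracked person, in pixels
    frame_size: (width, height) of the camera frame, in pixels
    angles:     current (pan, tilt) servo angles, in degrees
    """
    x_min, y_min, x_max, y_max = box
    width, height = frame_size

    # Pixel error between the box center and the frame center.
    err_x = (x_min + x_max) / 2 - width / 2
    err_y = (y_min + y_max) / 2 - height / 2

    pan, tilt = angles
    low, high = limits
    # Assumed sign convention: a target to the right lowers the pan angle,
    # and a target lower in the frame raises the tilt angle.
    pan = min(max(pan - gain * err_x, low), high)
    tilt = min(max(tilt + gain * err_y, low), high)
    return pan, tilt
```

Calling this once per processed frame moves the camera a fraction of the remaining error each time; a smaller `gain` gives smoother but slower tracking.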

To help this process go faster, I will start looking into making a hello world that proves that we can interface with the servo motor. Overall, I think we are making good progress, but we still need to pick up the pace if we want to finish on time.

Nathan:

While the latter part of my week was occupied with a Model UN conference I had to dedicate significant time to hosting, earlier in the week I got access to the servo hardware and a chance to try out some of the basic servo functionality. I started with some basic code from the Arduino servo library, as that is one of the most ubiquitous control schemes and serves as a good proof of concept before trying Circuit Python. Unfortunately, I discovered that there seems to be a problem/incompatibility either with our servo or the Arduino ecosystem, as the servo would refuse to do anything other than turn counter-clockwise until it hit the limit of its range of motion. Manual analog pin output control did nothing to address this issue. To illustrate the complication involved, we found that even while not nominally transmitting a PWM signal to the servo (so it should be at rest), it consistently moved by ~10 degrees when a stove-top igniter ~4m away sparked. This is troubling to say the least, and we are investigating the cause of the discrepancy as a group. I am still somewhat behind schedule, though the coming week should be lighter than past ones. I will focus on servo/motor control and finish the soldering needed to get the Feather boards functional.
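For reference while debugging, the usual hobby-servo convention is a 50 Hz signal whose pulse width (roughly 1000 to 2000 µs) encodes the target angle. The sketch below converts an angle to a pulse width and then to a PWM duty-cycle value. The pulse range, the 180 degree travel, and the 16-bit resolution are assumptions that should be checked against the datasheet for the servos in question; the function names are illustrative.

```python
def angle_to_pulse_us(angle_deg, min_us=1000, max_us=2000, max_angle=180):
    """Linearly map a servo angle to a pulse width in microseconds."""
    if not 0 <= angle_deg <= max_angle:
        raise ValueError("angle out of range")
    return min_us + (max_us - min_us) * angle_deg / max_angle


def pulse_to_duty_cycle(pulse_us, frequency_hz=50, resolution_bits=16):
    """Convert a pulse width to a duty-cycle count for a PWM peripheral."""
    period_us = 1_000_000 / frequency_hz  # 20,000 us at 50 Hz
    full_scale = 2 ** resolution_bits - 1
    return round(pulse_us / period_us * full_scale)
```

Under these assumptions, 90 degrees corresponds to a 1500 µs pulse, which is 7.5% of the 20 ms period. Comparing the pulse actually measured on the signal pin against these numbers is one way to check whether the controller or the servo is at fault.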

Team:

In addition to individual contributions specified above, Karthik and Jerry completely assembled the servo mount, which is now ready for a camera attachment to enable full pan and tilt functionality. Additionally, towards the end of the week we worked together to try to diagnose the servo problems, with a picture of our setup shown below. It should be noted that using the battery as our power source serves as a good proof of concept for how the servos will be powered in the final system, control dynamics aside.

Overall, we are pretty much on track but could be slightly behind depending on how long it takes us to get through the issues we saw throughout this week.

Weekly Report #5 – 3/16

Nathan:

I was not able to get as much work done this week as I intended, with a busier than expected spring break schedule and lack of convenient internet access. For now, I’ve continued to study the Adafruit CircuitPython libraries for servo and stepper motor control, and their UART control as well. I’ve downloaded the necessary libraries, and will try out my example code once I get access to the hardware.

Karthik:

I have also not been doing as much work because of spring break, but I have been researching possible control logic that we could use for the servo. I will hopefully be able to implement it once I get back to CMU after the break. Because of that, we are still a bit behind our schedule, but as mentioned in the team report, we have updated the Gantt chart to better illustrate our current situation.

Jerry:

I mostly just researched ways to implement autofocusing for the system. Currently, I’m thinking of measuring a lookup table of reasonable focus parameters given the size of the bounding box to be tracked and the current zoom level, and using a contrast-based algorithm to fine-tune the focus around that point.
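A rough sketch of this two-stage idea follows: a nearest-neighbour lookup gives a coarse focus position, and a hill climb on a contrast score refines it. Everything here is an assumption made for illustration, including the function names, the table layout, the integer focus positions, and the `measure_contrast` callback (which in practice would capture a frame at the given focus position and return a sharpness score).

```python
def coarse_focus(lut, box_height, zoom):
    """Return the focus position of the nearest (box_height, zoom) table entry.

    lut maps (box_height_px, zoom_level) -> focus position. The two axes are
    compared without scaling here; real data would likely need normalizing.
    """
    key = min(lut, key=lambda k: (k[0] - box_height) ** 2 + (k[1] - zoom) ** 2)
    return lut[key]


def fine_focus(start, measure_contrast, step=4, min_step=1):
    """Hill-climb around `start`, halving the step when neither side improves."""
    best, best_score = start, measure_contrast(start)
    while step >= min_step:
        improved = False
        for candidate in (best - step, best + step):
            score = measure_contrast(candidate)
            if score > best_score:
                best, best_score, improved = candidate, score, True
        if not improved:
            step //= 2
    return best
```

Starting from the table estimate keeps the search short, since the hill climb only has to cover the residual error rather than the whole focus range. With a noisy contrast measurement, the loop would also need a cap on the number of iterations.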

Team:

We made a few changes to the Gantt chart. First, the enclosure was pushed back by about a week and a half, since leading up to the April 1st demo the most important thing is getting the mechanical control and vision systems working. Some of the power-related optimization tasks were also relabeled to better reflect their software/firmware-based nature, as opposed to the earlier hardware controls, and the distribution of work was changed so that motor/servo control is now the responsibility of the whole group.

Weekly Report #4 – 3/9

Jerry:

Firstly, I was able to set up the board to be accessible without needing a monitor. This would increase our flexibility for working with the board.

I have also been researching the low-power suspend mode and other options for reducing the Ultra96’s power consumption. We discovered that the Linux built-in suspend probably doesn’t power gate the FPGA fabric, as power consumption only falls by half when suspended. There is a chance that the low-power suspend requires deeper tweaking with Vivado (in particular, enabling the option), though we plan to try out the steps afterward in case the DNNDK image also has it enabled.

Since we as a team were able to run various demo CV algorithms with the board, the logical next step will be to try to load our own network on it.

Karthik:

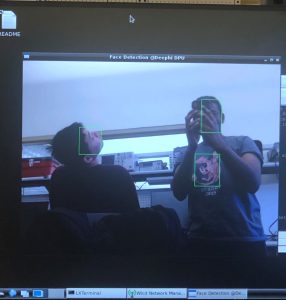

Throughout this week, I helped Jerry and Nathan with trying to set up Deephi on the Ultra96. This took a considerable amount of effort because of some extra time that we needed to debug some issues with transferring the correct files to the Ultra96 board. Specifically, we first tried to use USB, but that didn’t work because the USB was not mounted. Because of this we eventually started to use the wifi connection to the Ultra96 to make an SFTP connection and transfer the Deephi files that way. Eventually, we got it to work and here is a picture with us using one of the face detection demos.

On top of that, we recently acquired the servos that we are going to be using for the project. So, I have started to think about and look into ways to try and interface with the servo motors. Overall, I think I might be slightly behind schedule, but I think a simple demo with a valid control algorithm for the servos should help me get back on schedule.

Nathan:

During the earlier part of this week, I spent the majority of my time working on finalizing the system details for the Design Report. We decided to use an Adafruit Feather board to control the motors and servos, with controls coming from the Ultra96. I investigated the differences between CircuitPython and Arduino C for the control logic. My initial inclination is to use Arduino C for the sake of portability, and I will try to get a demo working by the end of tomorrow.

I also ordered the last of the components for the mechanical control system, including the Feather board and control board accessories. On top of that, I ordered the lens. However, the vendor is apparently shady about the shipping info, so it’ll take about a week longer than intended for it to arrive. In the meantime, I’ll focus on the servo control, which points the camera at the subject.

P.S. I have been investigating machining the enclosure for the camera at the CMU makerspace instead of buying it. Would save ~$100 and 2 weeks shipping time. None of us have such experience, but we can seek advice.

Team Status Report:

Most of our time this week was spent debugging different issues while trying to run the Deephi demos on the Ultra96. The first issues started with the wifi. Specifically, the wifi software that our archlinux OS used had a bug which stopped it from working with wifi networks that used WPA2 security. Our first attempt to get around this involved installing a new wifi GUI that would allow us to connect to networks that used WPA2 security. However, after doing that, we were unable to connect to any wifi networks. Eventually, after trying to fix the issue multiple times, we were able to get it working by hard-resetting, unplugging all of the peripheral devices, and reflashing the archlinux OS on the SD card.

We also tried looking into ways to profile the power usage of the board. We could get a crude figure for system power just by looking at the current draw from the power source, though we’d have to find a way to adapt this approach to work with the dedicated DC adapter. We tried installing powertop to get a more detailed breakdown, but the utility wasn’t able to show any power usage statistics. Next, we plan to try installing some tools from Xilinx to get a better idea of the power usage.