What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

1. We have integrated the speech module and the object recognition (OR) module into the overall system and conducted basic user testing during development. We then returned to integrating the proximity module and found that the timing issues we faced were caused not by the hardware or software we built, but by the Jetson Nano's inability to handle real-time processing. Other users who tried to connect the HC-SR04 ultrasonic sensor to the NVIDIA Jetson Nano report the same problem online, and the usual workaround is to offload the ultrasonic sensor to its own microcontroller. This hardware route would require us to completely separate the proximity module from the rest of the system. The alternative is a software solution that takes a distance estimate from the OR module, using the camera alone to approximate the distance between the user and the objects. This logic is already implemented in our OR module; our original plan was to use ultrasonic sensors instead, both for better accuracy and for lower latency, since the proximity module would then not depend on the OR module. Given this unforeseen problem, we may have to fall back on the software solution. We aim to decide between the two routes this weekend. Meera, our hardware lead, is away due to a family emergency, and we hope to get her input on the hardware/software choice when she is available. For now, we are pursuing the software route and testing whether it is viable for the final demo.

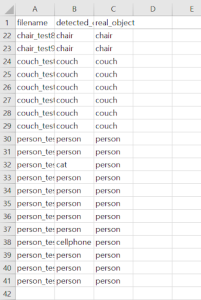

2. After connecting the camera module to the OR model, we found that every frame incurs latency, possibly due to the recognition delay. As a result, when the camera is turned to a different object, the Jetson outputs the correct object about 5 seconds after the change. This seriously jeopardizes the success of the project because our use case requirement is a recognition delay of less than 2.5 seconds. The risk could be mitigated by capturing frames differently: a screen capture could be used instead of the video stream, which may resolve the delay. The drawback is that having the Jetson Nano run a camera capture, pass the result to the model, and then delete the captured frame could take longer than the current delay. This alternative could also delay product delivery, because modifying the program takes additional time.
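Since it is not yet certain where the 5 seconds go, one way to check is to time the capture stage and the recognition stage separately. The following is a minimal sketch under stated assumptions: `capture` and `infer` are hypothetical stand-ins for the camera read and the OR model call, and `profile_pipeline` and `within_budget` are names invented for illustration. Only the 2.5-second budget comes from the requirement above.

```python
import time
from typing import Any, Callable, Dict

BUDGET_S = 2.5  # recognition-delay requirement stated in this report


def profile_pipeline(
    capture: Callable[[], Any],       # hypothetical: returns one camera frame
    infer: Callable[[Any], Any],      # hypothetical: runs the OR model on a frame
    runs: int = 10,
    clock: Callable[[], float] = time.perf_counter,
) -> Dict[str, float]:
    """Return the average seconds spent in each stage over `runs` iterations."""
    capture_total = 0.0
    infer_total = 0.0
    for _ in range(runs):
        t0 = clock()
        frame = capture()
        t1 = clock()
        infer(frame)
        t2 = clock()
        capture_total += t1 - t0
        infer_total += t2 - t1
    capture_avg = capture_total / runs
    infer_avg = infer_total / runs
    return {
        "capture_s": capture_avg,
        "infer_s": infer_avg,
        "total_s": capture_avg + infer_avg,
    }


def within_budget(report: Dict[str, float]) -> bool:
    """True if the average end-to-end time meets the 2.5 s requirement."""
    return report["total_s"] < BUDGET_S
```

If `infer_s` dominates, changing the capture method is unlikely to help; if `capture_s` dominates, the screen-capture alternative is worth testing. Note that this measures per-frame processing time only, so any delay from frames waiting in a buffer would need to be measured separately.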

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

As mentioned above, the HC-SR04 ultrasonic sensor appears to be incompatible with the NVIDIA Jetson Nano because of its inability to handle real-time processing. We have two potential solutions, each of which would change the existing design of the system. We have outlined the two options below but have not yet committed to a concrete design change, both because of unfortunate circumstances in our team and because we have had to focus on the upcoming final presentation.
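For context on why timing matters so much for this sensor: the HC-SR04 reports distance as the width of an echo pulse, so any delay in timestamping the pulse edges turns directly into distance error. The sketch below works through that arithmetic, assuming a speed of sound of 343 m/s (air at roughly 20 °C). The function name and the example values are illustrative and are not measurements from our setup.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumption: dry air at about 20 degrees C


def echo_to_distance_cm(echo_s: float) -> float:
    """Convert an echo pulse width in seconds to a one-way distance in cm.

    The pulse covers the round trip to the object and back, hence the
    division by 2.
    """
    return echo_s * SPEED_OF_SOUND_M_S / 2 * 100


# An object 1 m away produces an echo pulse of about 5.8 ms.
one_metre_echo_s = 2 * 1.0 / SPEED_OF_SOUND_M_S

# The same formula gives the distance error caused by a timing error:
# mis-timing the pulse by 1 ms shifts the reading by about 17 cm.
error_for_1ms_cm = echo_to_distance_cm(0.001)
```

A dedicated microcontroller can time the pulse edges without interference from other running processes, which is the reasoning behind the hardware option below.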

1. Hardware Option: Microcontroller to handle Ultrasonic Sensor

Offload the proximity sensor entirely from the NVIDIA Jetson Nano and have a separate Arduino microcontroller handle the ultrasonic sensor. We have found projects online that integrate an Arduino with the NVIDIA Jetson Nano, and we believe this is a feasible solution, provided our hardware lead Meera agrees.

2. Software Option: Distance estimation from OR module

As described above, our alternative route is to pull the distance data from the existing logic in the OR module that detects the object closest to the user. The downside is that a distance estimate made from an image alone is less accurate than one from an ultrasonic sensor. The upside is a much quicker design and implementation change compared to the hardware route.
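This report does not spell out how the OR module estimates distance. One common camera-only approach is the pinhole camera model, which needs the object's real-world height and a focal length calibrated once from a reference image. The following is a minimal sketch of that technique, not a description of our implementation; the function names and the example numbers are hypothetical.

```python
def calibrate_focal_length_px(
    known_distance_m: float, real_height_m: float, bbox_height_px: float
) -> float:
    """Focal length in pixels, from one reference image of an object of
    known height placed at a known distance from the camera."""
    if real_height_m <= 0:
        raise ValueError("real_height_m must be positive")
    return bbox_height_px * known_distance_m / real_height_m


def estimate_distance_m(
    real_height_m: float, focal_length_px: float, bbox_height_px: float
) -> float:
    """Pinhole-model distance: an object appears smaller in proportion to
    how far away it is."""
    if bbox_height_px <= 0:
        raise ValueError("bbox_height_px must be positive")
    return real_height_m * focal_length_px / bbox_height_px


# Calibration: a 0.5 m tall object at 2 m fills 200 px of image height.
focal_px = calibrate_focal_length_px(2.0, 0.5, 200)

# Later, the same object fills 400 px, so it is about half as far away.
distance_m = estimate_distance_m(0.5, focal_px, 400)
```

The accuracy of this kind of estimate depends on knowing a typical height for each recognized object class and on how tightly the bounding box fits the object, which is one reason the camera-only estimate is less accurate than the ultrasonic reading.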

As the final presentation is coming soon, we may use the software route as a temporary solution and later switch to the microcontroller hardware route to ensure full functionality for the final demo. The hardware route will certainly add development time to our project, and we risk cutting it close to the final demo, but it will give us more robust functionality. As for cost, we believe we can acquire the Arduino from the class inventory, so we do not expect this to add much cost to our project. The software route will be a quick implementation at no cost.

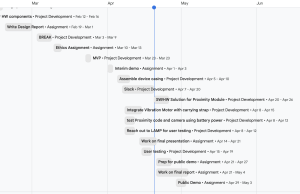

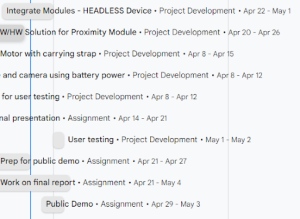

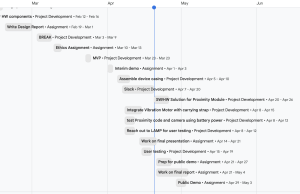

Provide an updated schedule if changes have occurred.

Josh and Shakthi will work on integration and testing of the headless device from 4/20 to 4/25. Josh, Meera, and Shakthi will conduct testing and final demo work.