Accomplishment:

- Enabled the GStreamer option in opencv-python on the Jetson to allow real-time capture. The installed OpenCV 4.8.0 did not have GStreamer enabled, so I had to build and install OpenCV manually with that option turned on. Because the OpenCV folder is large (around 8 GB), I used a memory swap on the Jetson to temporarily increase the available memory. I ran the build and install after the download finished.

- Worked with Shakthi on integrating the OR module, speech module, and proximity module on the NVIDIA Jetson. The speech and proximity modules run inside the loop of the OR model, so for each frame the Jetson checks which button is pressed and which object is detected, then produces the corresponding output.

- Added “cat” and “cellphone” to the indoor object classes supported by the OR model and the DE feature.

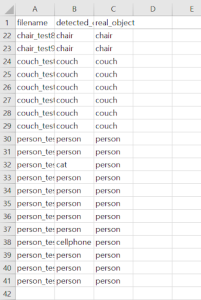
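The per-frame integration described above can be sketched roughly as follows. This is only an illustration, not our actual code: `Detection`, `closest`, and `handle_frame` are hypothetical names, the action strings are placeholders, and it assumes the proximity module is the one that drives the vibration output.

```python
from typing import List, NamedTuple, Optional


class Detection(NamedTuple):
    label: str         # class name from the OR model, e.g. "person"
    distance_m: float  # distance estimate from the DE feature, in meters


def closest(detections: List[Detection]) -> Optional[Detection]:
    """Return the detection with the smallest estimated distance, if any."""
    return min(detections, key=lambda d: d.distance_m, default=None)


def handle_frame(detections: List[Detection],
                 button_a: bool, button_b: bool) -> List[str]:
    """Decide which outputs to trigger for a single frame.

    Assumption: buttonA triggers the vibration (proximity) output and
    buttonB triggers the speech output.
    """
    actions: List[str] = []
    target = closest(detections)
    if target is None:
        return actions  # nothing detected in this frame
    if button_a:
        actions.append("vibrate:{:.2f}".format(target.distance_m))
    if button_b:
        actions.append("speak:{} at {:.1f} meters".format(
            target.label, target.distance_m))
    return actions
```

Keeping the decision logic in a pure function like this makes it possible to test the button and detection combinations without the camera or the hardware attached.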

- Tested the OR model with a test script I wrote. It stores the detected results alongside the actual objects and compares them to produce accuracy data. I tested 40 images: 6 cat, 6 cellphone, 10 chair, 6 couch, and 12 person images. The model correctly detected the closest object in 38 of them, which gives an accuracy of 95%. The two failures were caused by several objects overlapping in one image. For example, the model misidentified the closest object when an image contained a cat right beneath a person.

- Conducted unit testing on the buttons and the speech module integrated with the OR model. I pressed buttonA for the vibration module and then buttonB for the speech module, one after the other, to test the functionality. Both modules had 100% accuracy.

- Performed distance estimation testing on detecting a person under four conditions: standing about 1.8 m from the camera, about 1.2 m, up close at about 0.2 m, and about 2.2 m.

- The results were: at an actual distance of 1.8 m the estimate was 1.82 m, at 1.2 m it was 0.89 m, at 0.2 m it was 0.38 m, and at 2.2 m it was 1.94 m. On average, there is an uncertainty of 21.5%. Since the DE feature relies on reference images, some calibration is required. We will conduct more tests to find the most accurate calibration for the distance result.
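To make the distance results easier to compare across future calibration runs, the per-condition errors can be computed with a small script such as the sketch below. The function names are hypothetical, and the metrics shown (mean absolute error and relative error against the actual distance) are one common choice; they are not necessarily the method behind the average figure quoted above, so the numbers may differ.

```python
# (actual_m, estimated_m) pairs from the four test conditions above
measurements = [
    (1.8, 1.82),
    (1.2, 0.89),
    (0.2, 0.38),
    (2.2, 1.94),
]


def absolute_errors(pairs):
    """Absolute error in meters for each (actual, estimated) pair."""
    return [abs(est - actual) for actual, est in pairs]


def relative_errors(pairs):
    """Error as a fraction of the actual distance for each pair."""
    return [abs(est - actual) / actual for actual, est in pairs]


def mean(values):
    """Arithmetic mean of a non-empty list."""
    return sum(values) / len(values)


if __name__ == "__main__":
    for (actual, est), rel in zip(measurements, relative_errors(measurements)):
        print("actual {:.2f} m, estimated {:.2f} m, relative error {:.1%}".format(
            actual, est, rel))
    print("mean absolute error: {:.3f} m".format(
        mean(absolute_errors(measurements))))
```

Reporting the error per condition, rather than only an average, shows that the close-range case (0.2 m) behaves very differently from the 1.8 m case, which may matter when choosing reference images for calibration.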

Progress:

- I successfully deployed the OR model on the Jetson Nano and got the camera sending real-time data to the model for object detection.

- We need to make the device headless so that it can run without a monitor or Wi-Fi.

- While moving the device to headless operation, the speech module broke, so we will need to work on that module again.
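Since real-time capture depends on OpenCV having been built with GStreamer, a quick check like the sketch below could be run on the Jetson to confirm the build, which may be handy once the device has no monitor attached. `cv2.getBuildInformation()` is a real OpenCV call; the helper names and the regular expression used to parse its output are my own assumptions about the text layout of that report.

```python
import re


def gstreamer_enabled(build_info: str) -> bool:
    """Return True if an OpenCV build report lists GStreamer as YES.

    Assumption: the report contains a line such as
    "GStreamer:   YES (1.16.3)" or "GStreamer:   NO".
    """
    match = re.search(r"GStreamer:\s*(YES|NO)", build_info)
    return match is not None and match.group(1) == "YES"


def report_current_build() -> bool:
    """Check the OpenCV build installed on this machine."""
    import cv2  # imported here so the parser above works without OpenCV

    enabled = gstreamer_enabled(cv2.getBuildInformation())
    print("GStreamer enabled:", enabled)
    return enabled
```

Calling `report_current_build()` at startup and logging the result would make a misconfigured OpenCV install visible before the capture loop starts.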

Projected Deliverables:

- By next week, we will finish deploying the Jetson headless so that we can test the OR model by walking around an indoor environment.

- By next week, we will conduct more testing on the Jetson OR to find the most accurate calibration for the distance of the closest object.

- By next week, we will integrate the speech module again.

As you’ve designed, implemented and debugged your project, what new tools or new knowledge did you find it necessary to learn to be able to accomplish these tasks? What learning strategies did you use to acquire this new knowledge?

I learned a lot about machine learning frameworks and techniques by integrating an OR model and developing a distance estimation feature. I learned how to train an OR model on my own dataset with PyTorch, modify training parameters such as the number of epochs to produce different training weights, and display and compare detection results with TensorBoard. To acquire this knowledge, I spent a lot of time reading research papers, navigating GitHub communities, and scanning many tutorials. It was very challenging to find online resources describing the same issue I had, because each user's system is generally different. I also realized how important it is to check how recent a post is: the technology changes rapidly, and in many cases my issue was caused by following outdated sources.

Furthermore, I gained some experience deploying modules on the Jetson Nano. I learned a new skill, “memory swap”, which allowed me to temporarily increase the memory of the Jetson when I needed to install a huge module such as OpenCV. I also realized how difficult it is to work with hardware modules and learned why we need to leave sufficient slack time toward the end of the project. For example, the OR model ran detection much more slowly on the Jetson than it did on the computer. If I had not spent the slack time modifying the weights of the model, I would not have been able to deploy the module and produce detection results with less latency. I acquired this learning strategy through the multiple occasions on which the deployed model did not behave as I expected.