Accomplishment:

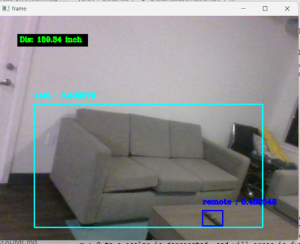

- Implemented a Python program that tests the OR model + DE feature against test images. It retrieves the closest object detected in each image and checks it against the label encoded in the image filename. For example, if the filename is “person_test5.jpg”, the actual closest object in the image is a person. The program extracts “person” from the filename and compares it with the detected closest object.

- The program was run against 8 chair, 6 couch, and 5 person images (19 in total). The detected closest object matched the filename label in every case, for 100% accuracy.

- Started deploying the OR module to the Jetson. I transferred the Python files and reference images from my computer to the Jetson.

Progress:

I am behind schedule because of system configuration issues on the Nvidia Jetson. Importing the torch module on the Jetson is taking longer than expected due to unexpected errors, so the schedule is pushed back by a few days. Installing the appropriate modules on the Jetson is critical to the project, so I will make it my highest priority and try to resolve the issue as quickly as possible.

Projected Deliverables:

By next week, I will finish deploying the OR model to the Jetson so that we can start testing the interaction between the subsystems.

Now that you have some portions of your project built, and entering into the verification and validation phase of your project, provide a comprehensive update on what tests you have run or are planning to run. In particular, how will you analyze the anticipated measured results to verify your contribution to the project meets the engineering design requirements or the use case requirements?

I have implemented a Python program that tests the OR model + DE feature against test images. It retrieves the closest object detected in each image and checks it against the label encoded in the image filename. For example, if the filename is “person_test5.jpg”, the actual closest object in the image is a person. The program extracts “person” from the filename and compares it with the detected closest object. The program was run against 8 chair, 6 couch, and 5 person images (19 in total). The detected closest object matched the filename label in every case, for 100% accuracy, which is well above the use case requirement of 70% accuracy. If time permits, I plan to include more indoor objects so that the model covers a wider range of objects while maintaining high accuracy.

After the OR model is deployed to the Jetson, I plan to reuse the same test program on images taken from the Jetson camera and produce an accuracy report. In this case, because the images are sent to the model from the Jetson in real time, we cannot rename each file to encode the actual object. Instead, I will take the live output of the detected closest object from the Jetson and manually check whether each detection is correct.
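One possible way to turn the manual checks into an accuracy report is to record each live detection next to what the observer actually saw, then summarize. The sketch below is only an assumption about how this could be organized: `LiveCheck` and `summarize` are hypothetical names, and the only figure taken from this report is the 70% requirement.

```python
from dataclasses import dataclass
from typing import Dict, List, Union

REQUIRED_ACCURACY = 0.70  # use case requirement stated in this report


@dataclass
class LiveCheck:
    detected: str  # closest object reported by the model on the Jetson
    actual: str    # closest object noted by the human observer


def summarize(checks: List[LiveCheck]) -> Dict[str, Union[int, float, bool]]:
    """Summarize manually verified live detections (hypothetical helper)."""
    if not checks:
        raise ValueError("no checks recorded")
    correct = sum(
        c.detected.strip().lower() == c.actual.strip().lower() for c in checks
    )
    accuracy = correct / len(checks)
    return {
        "total": len(checks),
        "correct": correct,
        "accuracy": accuracy,
        "meets_requirement": accuracy >= REQUIRED_ACCURACY,
    }
```

Each manual observation would be appended as a `LiveCheck`, and `summarize` would be called once at the end of a test session.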