Significant Risks and Contingency Plans

The most significant risk to our project right now is getting everything to work. Over the last two weeks, we quickly readjusted our project to accommodate the fact that we are now using a rover instead of a drone. This meant developing new plans for our implementation and overall system so that our project could keep the same functionality as before. The key risk now is making sure that these new implementation strategies will work. To reduce this risk, we tried to change as little as possible from our previous design and then analyzed what differed now that we have a rover. This was easier to handle because we had focused on modularity from the start, so the CV servers and the website were not heavily affected. To extend this modular approach, we added an information hub that manages the flow of information through the project and provides a clear boundary marking where one module starts and ends.

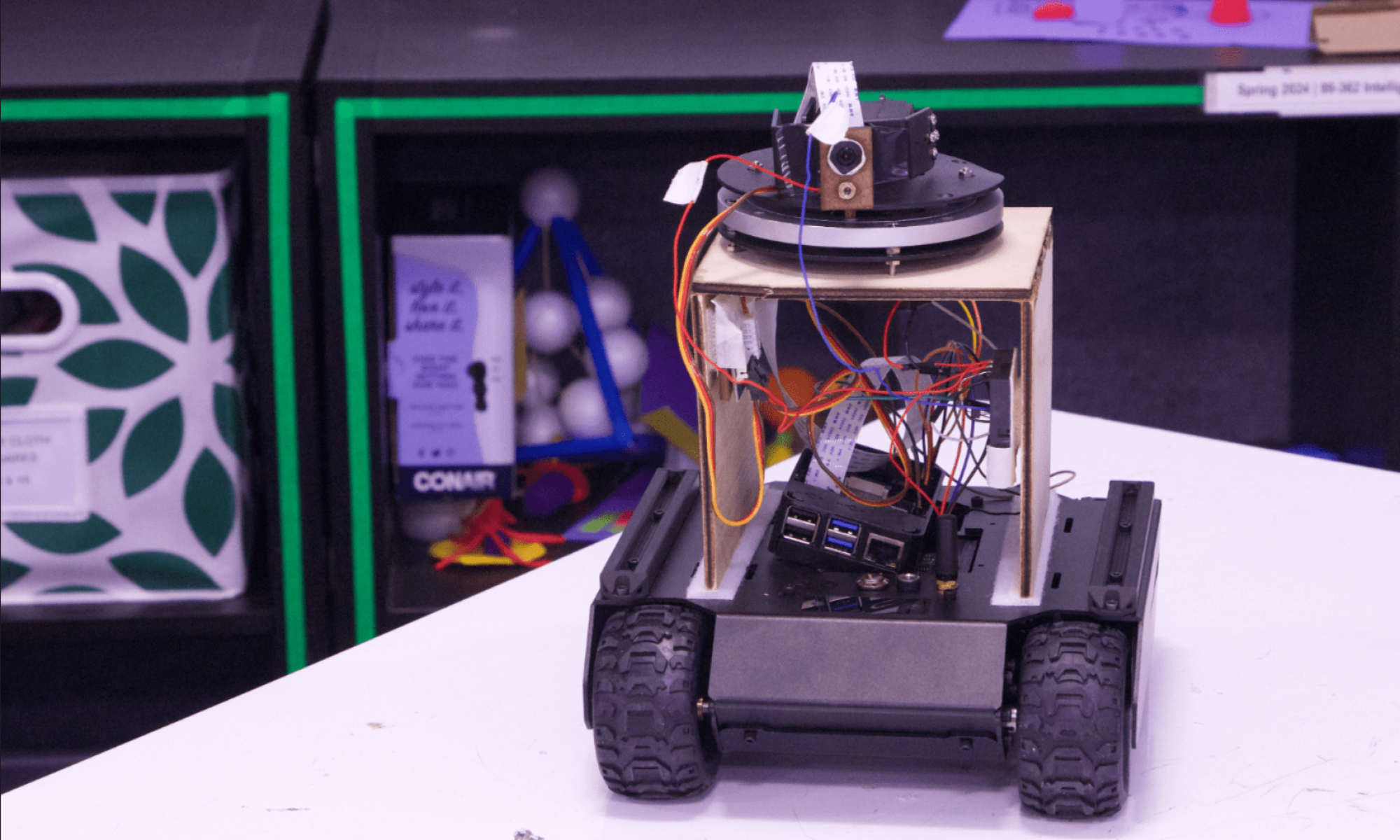
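To illustrate the information hub's role, here is a minimal sketch of a publish/subscribe hub in Python. The class name `InfoHub`, the topic names such as `"cv/detections"`, and the callback-based interface are hypothetical and chosen only for illustration. They are not our actual implementation. The point is that each module talks only to the hub, so the hub marks where one module ends and the next begins.

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List

# Hypothetical sketch: a minimal in-process publish/subscribe hub.
# Topic names and the callback interface are illustrative assumptions,
# not the project's actual information hub.
Handler = Callable[[Dict[str, Any]], None]


class InfoHub:
    """Routes messages between modules so modules never call each other directly."""

    def __init__(self) -> None:
        # Maps each topic name to the handlers subscribed to it.
        self._subscribers: Dict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        """Register a module's handler for messages on a topic."""
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: Dict[str, Any]) -> int:
        """Deliver a message to every handler on the topic; return how many received it."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(message)
        return len(handlers)


if __name__ == "__main__":
    hub = InfoHub()
    # The web server (hypothetically) listens for CV detections...
    hub.subscribe("cv/detections", lambda msg: print("web server got:", msg))
    # ...and the CV server publishes them without knowing who consumes them.
    delivered = hub.publish("cv/detections", {"person_found": True, "x": 120, "y": 45})
    print("delivered to", delivered, "subscriber(s)")
```

With this design, swapping the drone for the rover only changes which module publishes on the rover-related topics. The CV server and web server subscriptions stay the same.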

Regarding the rover, our main concern is establishing controlled communication with it. We are studying the rover's pre-made web app to learn its existing methods of communication, which we can then adapt for our own interface. Should this approach not work, we also plan to have a Raspberry Pi aboard the rover (for the camera video data), so we can send JSON commands to the Raspberry Pi instead. The Raspberry Pi can then forward these commands to the rover over UART.
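The sketch below shows one possible fallback path. The Raspberry Pi serializes a drive command as compact JSON and writes it to the rover's UART as a single newline-terminated line. The command fields (`T`, `L`, `R`), the speed range of -1.0 to 1.0, and the newline framing are all assumptions for illustration. The real message format must come from the rover's documentation. The function accepts any object with a `write(bytes)` method. On the real hardware, that could be a pyserial `serial.Serial` port; for testing, an in-memory buffer works.

```python
import io
import json
from typing import BinaryIO, Dict, Union

# Hypothetical command format: {"T": <command type>, "L": <left speed>, "R": <right speed>}.
# The field names, the command type value, and the speed range are assumptions
# and must be checked against the rover's actual protocol.
DRIVE_COMMAND_TYPE = 1
MAX_SPEED = 1.0


def clamp(value: float, limit: float = MAX_SPEED) -> float:
    """Keep a speed within [-limit, limit] so a bad input cannot over-drive the motors."""
    return max(-limit, min(limit, value))


def encode_drive_command(left: float, right: float) -> bytes:
    """Serialize a drive command as one newline-terminated line of compact JSON."""
    command: Dict[str, Union[int, float]] = {
        "T": DRIVE_COMMAND_TYPE,
        "L": clamp(left),
        "R": clamp(right),
    }
    # separators=(",", ":") removes spaces to keep UART messages short.
    return (json.dumps(command, separators=(",", ":")) + "\n").encode("utf-8")


def send_drive_command(port: BinaryIO, left: float, right: float) -> int:
    """Write the encoded command to a UART-like port and return the number of bytes sent."""
    frame = encode_drive_command(left, right)
    port.write(frame)
    return len(frame)


if __name__ == "__main__":
    # On the Pi this could be serial.Serial("/dev/serial0", 115200) from pyserial;
    # that device path and baud rate are assumptions. BytesIO stands in here.
    fake_uart = io.BytesIO()
    send_drive_command(fake_uart, 0.5, 2.0)
    print(fake_uart.getvalue())
```

Newline framing lets the receiving side read one complete command at a time. Clamping on the Pi keeps out-of-range speeds from ever reaching the motor controller.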

System Changes

We have conducted a significant amount of research to figure out how communication with the rover and its functionality would work. We decided that the easiest and most practical way to communicate with the rover is through its onboard ESP32 module. Additionally, a Raspberry Pi module on the rover would allow us to control the laser pointer and gather video data from the camera. Apart from these additions, there have been no major system changes, and it appears that all of the components can be integrated through WiFi communication. There may be some changes in how the distributed CV server and the Django web server receive and send out information. We will know the extent of these changes once we figure out the communication protocol of the Waveshare rover via the Raspberry Pi.
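The sketch below assumes the ESP32 accepts JSON commands over HTTP, which we still need to confirm by studying the rover's pre-made web app. Under that assumption, a command could be sent over WiFi as a JSON string in a URL query parameter. The IP address, the `/js` path, and the `json` query parameter are hypothetical placeholders until the real protocol is known. The request itself uses Python's standard `urllib.request.urlopen`.

```python
import json
import urllib.parse
import urllib.request
from typing import Any, Dict

# Hypothetical values: the rover's IP address, the "/js" path, and the "json"
# query parameter are placeholders until the real protocol is confirmed.
ROVER_HOST = "192.168.4.1"
COMMAND_PATH = "/js"


def build_command_url(command: Dict[str, Any], host: str = ROVER_HOST) -> str:
    """Encode a JSON command as a URL query parameter for the ESP32's web server."""
    payload = json.dumps(command, separators=(",", ":"))
    query = urllib.parse.urlencode({"json": payload})
    return f"http://{host}{COMMAND_PATH}?{query}"


def send_command(command: Dict[str, Any], host: str = ROVER_HOST, timeout: float = 2.0) -> str:
    """Send the command over WiFi and return the response body (needs a reachable rover)."""
    with urllib.request.urlopen(build_command_url(command, host), timeout=timeout) as response:
        return response.read().decode("utf-8")


if __name__ == "__main__":
    # Only builds the URL; calling send_command() requires the rover on the network.
    print(build_command_url({"T": 1, "L": 0.5, "R": 0.5}))
```

Keeping URL construction separate from the network call lets the command format be tested without the rover. Only `send_command` would need to change if the protocol turns out to use a different transport.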

Other Updates

There have been no schedule changes or other updates. The schedule may change once the rover is delivered and we begin working on it next week, particularly as we work toward our goal (requested by Prof. Kim) of having an end-to-end solution by our Wednesday meeting. If we do not accomplish this, we plan to have something close to it by the end of the week.

Specified Needs Fulfilled Through Product Solution

We also address further considerations that are important to keep in mind while we develop our product. We break these considerations down into three groups: global factors (A), cultural factors (B), and environmental factors (C). A was written by Ronit, B was written by Nina, and C was written by David.

Consideration of global factors (A)

Our product, a search and rescue rover, is heavily shaped by global factors. The need for efficient and cost-effective search and rescue operations is inherently a global concern, as disasters and wars can affect any country in the world. In general, our rover could have a positive impact on the global economy. By reducing the need for manual intervention in search and rescue operations, it lowers the risk to human life. Furthermore, our rover is designed to handle different terrains, making it usable in a variety of geographical locations and scenarios.

The technologies our rover uses also account for global factors. A web application that can be accessed from anywhere in the world could allow real-time collaboration between international rescue agencies. Because our components communicate over WiFi, the system could later be extended to use satellite internet, allowing the distributed server to communicate with a rover anywhere in the world. Using a distributed system also improves the scalability of the product. Multiple rovers on different rescue missions could send their video data for analysis at the same time, improving the efficiency of rescue operations happening concurrently in different locations.

Cultural considerations (B)

During the development of our Search and Point rover, it is important to be mindful of how it will be used across different communities and cultures. One feature to consider is adapting the rover’s interface to support different languages, communication styles, and symbols. In addition, the ability to customize and personalize the interface would let users tailor it to their cultural preferences. It is also vital to ensure that the rover does not intrude on sacred cultural areas, to be mindful of gender sensitivities, and to respect privacy. Finally, incorporating feedback from these cultural communities into the rover’s design helps produce technology that respects the cultural and ethical values of diverse populations. By integrating these cultural considerations, we can create a Search and Point rover that is not only technologically advanced but also respectful across various cultural backgrounds.

Environmental considerations (C)

We also developed our product with consideration of environmental factors, which concern the environment as it relates to living organisms and natural resources.

Search and Point would have minimal environmental side effects. As the rover searches and points the laser pointer, it does not leave behind any residual presence. The rover is battery-powered, so it produces no exhaust or other harmful byproducts during operation (unlike gasoline-powered motors). The batteries are also rechargeable, providing a reusable and safe way of keeping the rover running. Because the system is distributed around a centralized information hub, the part of the project actually deployed into the environment is quite small; all the main computing power is offloaded to servers elsewhere. The only imprint our project leaves on the environment is the rover driving over the landscape, which is arguably negligible. The laser we chose is also relatively low-power, which limits any harmful side effects from its use.