Significant Risks and Contingency Plans

This week, we met with Andrew Jong to discuss which types of drones would be useful for our project and which capabilities would be feasible to implement. Following this meeting and further discussions with Prof. Kim and Tamal, we decided to pivot from a drone to a land-based autonomous vehicle (a rover), since it would be less risky and less expensive to implement.

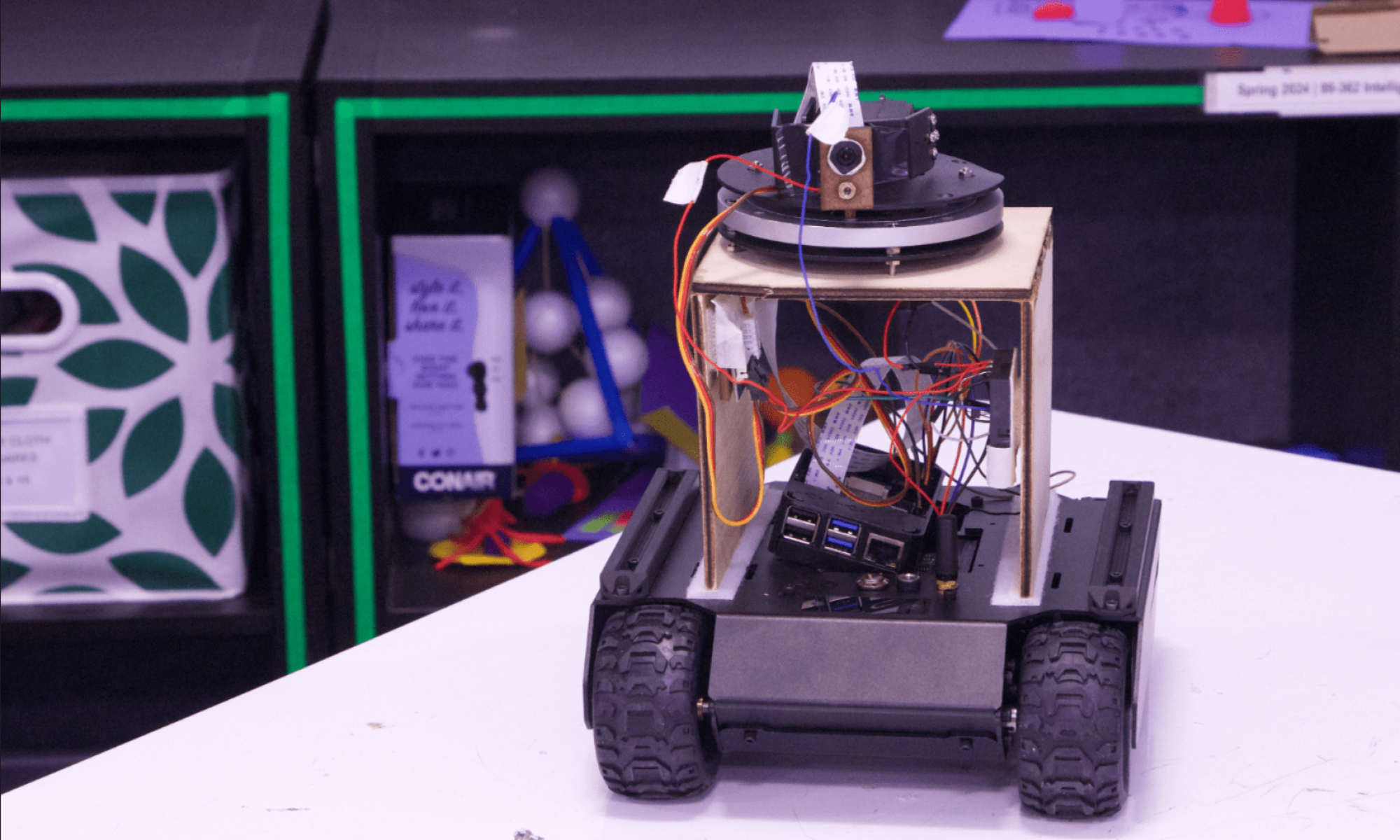

The most significant risks right now all involve the capabilities of the rover. We must find a suitable rover with a software API that lets us navigate it autonomously. The rover must also be able to traverse uneven terrain effectively and accurately. There are further risks in working out how to conduct our scenario testing for demo day and how exactly a camera and laser mount would function. We are actively investigating several types of rovers and their capabilities. Our main prospect is the Waveshare WAVE ROVER, which is inexpensive, has a software API and GPIO pins, and offers a versatile body. Accordingly, most of our risks and backup plans center on learning how to use this rover and adapting our implementation plans around it. While our general idea may not change, our implementation plans certainly will.

In the improbable event that we cannot find a suitable rover, our contingency plan is to buy a more expensive drone that has all of the capabilities our project requires. However, we do not expect to fall back on a drone, since a rover is easier to test. A rover is also more durable, whereas an accidental collision could break a drone. We will also look into potential issues with testing a rover on campus, including whether any licenses or certifications are required.

System Changes

Depending on which rover we purchase, several parts of the system will change: the API used to control the rover, how the rover communicates with the Django and CV servers, and the path the rover takes. Our use case also changes, since a rover gives us the flexibility to search uneven terrain and potentially avoid obstacles (we have not yet decided whether to implement obstacle avoidance). However, most of the implementation of the web application and the distributed CV server should remain the same. All aspects of the rover's design and system integration are a work in progress, and we are working to make sure this pivot goes smoothly and does not hinder our progress. As mentioned above, interfacing with the chosen rover (such as the WAVE ROVER) will require implementation changes across every component, and these changes will be the focus of our work moving forward.

Other Updates

We expect to update our schedule to accommodate the delay in obtaining our mobile platform, though these changes are not yet finalized. We will try to mitigate this delay by ordering the parts before spring break and studying their documentation while we wait for delivery. Also, now that we plan to use a WAVE ROVER instead of a drone, each of our individual components is expected to change in both integration and implementation.