Accomplished Tasks

This week was the week of the final presentation. I was this week's presenter, and the presentation went well, largely thanks to the preparation we did beforehand. I put the slides together and made sure to rehearse my part for the big finale!

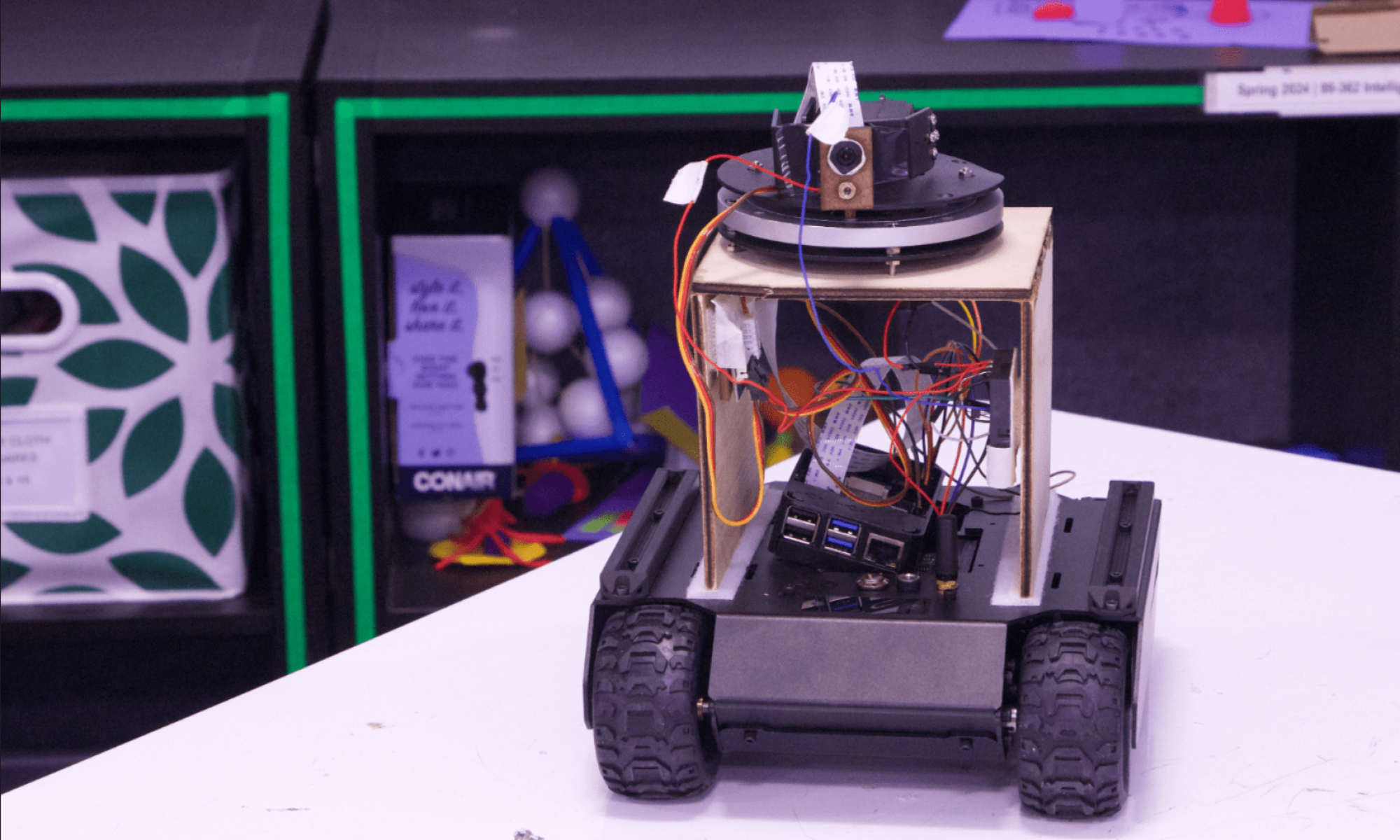

On the technical side, I worked hard on making sure the rover would work once everything was put together. This meant tuning the rover so that it could aim the laser accurately. At first, I ran into an issue where, due to the latency of the CV (computer vision) pipeline, the rover was unable to converge on the correct point to aim the laser at: each correction was based on an outdated measurement, so it kept overshooting. Resolving this meant making the rover adjust very slowly so that it would converge and fire with great accuracy! The downside is that it may now be overly slow, which is still under investigation.
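To illustrate why latency causes overshooting and why slowing the adjustment helps, here is a minimal Python sketch. It assumes a simple proportional controller on the aim angle and models CV latency as the measurement arriving a fixed number of control steps late. The function name `simulate_pan`, the target angle, and the gain values are hypothetical, not the rover's actual code or parameters.

```python
from collections import deque


def simulate_pan(gain, latency_steps, target=10.0, start=0.0, steps=200):
    """Simulate a proportional (P) controller whose measurement is delayed.

    Hypothetical model: each control tick, the CV pipeline reports the aim
    angle from `latency_steps` ticks ago, and the controller nudges the
    angle by `gain` times that stale error. Returns the angle at every tick.
    """
    angle = start
    # history[0] is the oldest angle, i.e. what the CV currently "sees";
    # maxlen makes the deque drop anything older than the latency window.
    history = deque([angle] * (latency_steps + 1), maxlen=latency_steps + 1)
    trace = [angle]
    for _ in range(steps):
        stale_error = target - history[0]  # error computed from an old frame
        angle += gain * stale_error        # proportional correction
        history.append(angle)
        trace.append(angle)
    return trace


if __name__ == "__main__":
    fast = simulate_pan(gain=0.8, latency_steps=3)  # aggressive correction
    slow = simulate_pan(gain=0.1, latency_steps=3)  # slow, cautious correction
    print("fast: peak", max(fast), "final", fast[-1])
    print("slow: peak", max(slow), "final", slow[-1])
```

In this model, a gain that converges fine with no delay keeps correcting against stale readings once latency is added, so the angle overshoots the target and oscillates. The much smaller gain settles on the target, at the cost of needing many more steps, which mirrors the "accurate but perhaps overly slow" trade-off described above. The same loop would apply to tilting the camera for vertical accuracy.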

For the presentation, we only showed “x-axis” (horizontal) accuracy, but I have also been working on “y-axis” (vertical) accuracy. This works much like the x-axis case, except the adjustment comes from tilting the camera up and down instead. The tuning for this still needs to be done.

Progress

My progress is on track: the full end-to-end rover is put together and very accurate! What remains is tuning the rover to be even more accurate by working on vertical accuracy. This will involve many tests and small adjustments, but with the main infrastructure in place, it should be doable. I am also reconsidering the rover's “search” behavior, since the rover cannot reproduce its movements precisely, which is making an exact creeping line search impossible.
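For reference, an idealized creeping line search is a series of parallel sweep legs that advance one track spacing at a time, with each leg reversing direction. The minimal Python sketch below only generates those waypoints for a rectangular area. The function name and the `width`, `length`, and `spacing` parameters are hypothetical, and the sketch assumes the rover can drive exactly from waypoint to waypoint, which is precisely the assumption that is breaking down in practice.

```python
import math


def creeping_line_waypoints(width, length, spacing):
    """Return (x, y) waypoints for an idealized creeping line search.

    Hypothetical layout: legs run across the area along x (0 to `width`),
    and the pattern creeps forward along y by `spacing` until it has
    covered `length`. Direction alternates so each leg starts where the
    previous one ended.
    """
    if spacing <= 0:
        raise ValueError("spacing must be positive")
    # Small epsilon guards against float error (e.g. 0.3 / 0.1 lands just below 3).
    n_legs = math.floor(length / spacing + 1e-9) + 1
    waypoints = []
    for leg in range(n_legs):
        y = leg * spacing
        if leg % 2 == 0:
            waypoints += [(0.0, y), (width, y)]  # sweep in the +x direction
        else:
            waypoints += [(width, y), (0.0, y)]  # sweep back in the -x direction
    return waypoints


if __name__ == "__main__":
    for point in creeping_line_waypoints(width=4.0, length=2.0, spacing=1.0):
        print(point)
```

Because the pattern relies on the rover arriving at each waypoint precisely, any drift between legs would leave gaps or overlaps in coverage, which is why the search method is still being reconsidered.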

Next Week’s Deliverables

Next week, I plan to have the rover fully accurate, just in time for the final demo! I will also settle on a final search method. Lastly, I will put together all of the final documentation.