Tasks accomplished this week

This week, I worked primarily on making sure everything was functional for our interim demo. This involved writing logic to show the professor and TA that the CV server can actually detect humans, mainly by implementing code that draws bounding boxes around the regions of the image detected as a person (4+ hours). This opens up the possibility of displaying this information on the website, which I will discuss thoroughly with Nina.

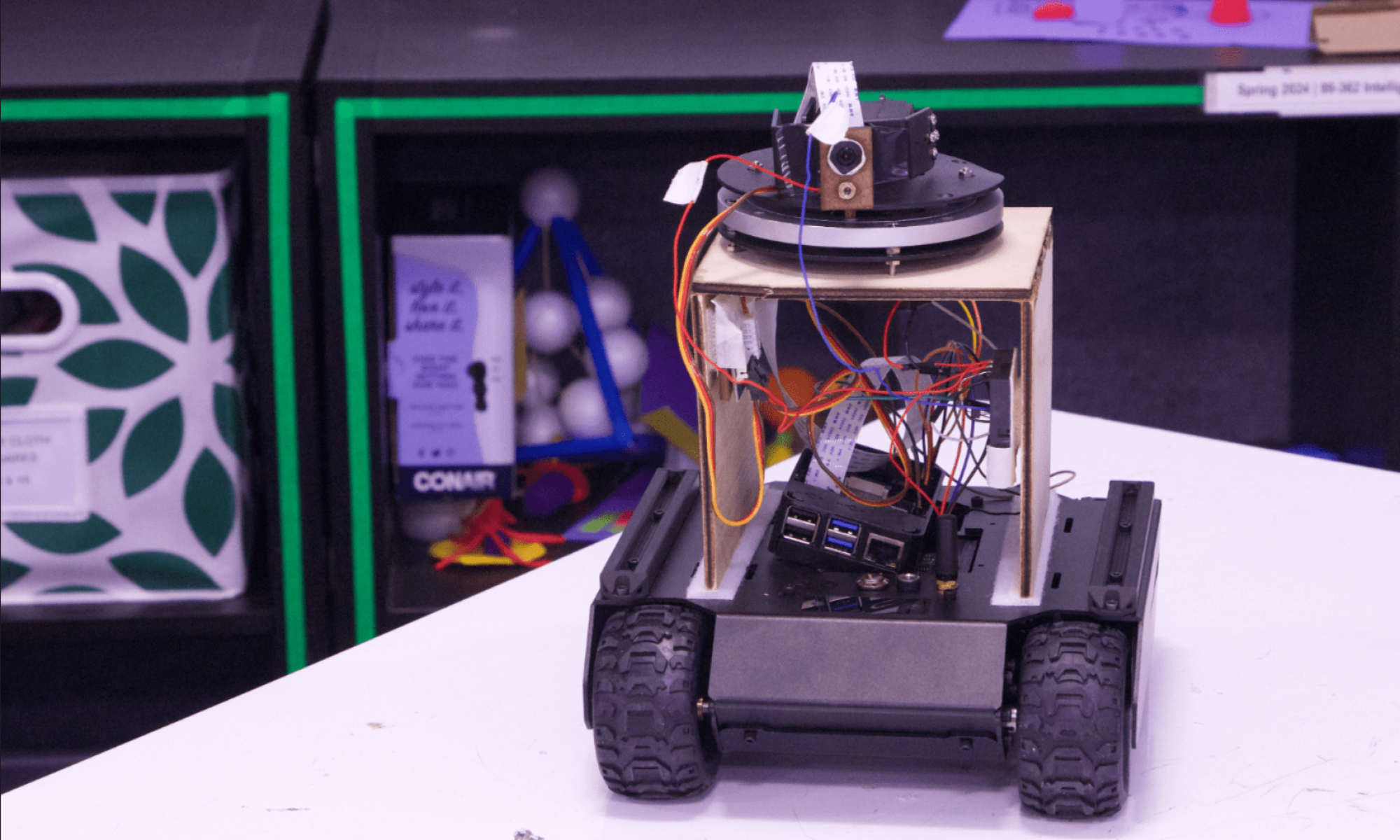

I also implemented some control logic for the rover (9+ hours). This runs on the CV server and uses trigonometric calculations to determine how much the rover should turn. It requires estimating the depth of the human (how far the person is from the camera), which I did by measuring the relative size of the person in the camera image. This allows us to turn the rover towards the person accurately. However, I still need to account for latency between the CV server and the rover, which I will implement next week.
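As a rough illustration of the kind of calculation involved (a minimal sketch under a pinhole camera assumption, not the exact code running on the CV server), the depth and turn angle can be estimated from a detected bounding box. The function names, the 1.7 m reference person height, and the camera parameters below are all hypothetical placeholders.

```python
import math


def focal_length_px(image_width_px, horizontal_fov_deg):
    """Focal length in pixels, derived from the horizontal field of view (pinhole model)."""
    return (image_width_px / 2) / math.tan(math.radians(horizontal_fov_deg) / 2)


def estimate_depth_m(bbox_height_px, focal_px, person_height_m=1.7):
    """Estimate distance to the person from the height of their bounding box.

    Assumes square pixels and an average person height (hypothetical default),
    so a smaller box means the person is further away.
    """
    return person_height_m * focal_px / bbox_height_px


def turn_angle_deg(bbox_center_x_px, image_width_px, focal_px):
    """Angle the rover should turn: positive means right of the image centre."""
    offset_px = bbox_center_x_px - image_width_px / 2
    return math.degrees(math.atan2(offset_px, focal_px))


# Example with assumed values: a 640 px wide frame and a 90 degree horizontal FOV.
f = focal_length_px(640, 90)
depth = estimate_depth_m(bbox_height_px=320, focal_px=f)
angle = turn_angle_deg(bbox_center_x_px=480, image_width_px=640, focal_px=f)
print(f"depth ~ {depth:.2f} m, turn ~ {angle:.1f} degrees")
```

Latency compensation is not modelled here; it would have to be layered on top of the computed angle once the communication delay is measured.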

Progress

I believe I am still on track. Every component of the distributed CV server is implemented. Some design decisions still need to be finalised, which should take a couple of hours. This will allow me to focus more on scenario testing in the future.

Deliverables for next week

For next week, I hope to decide how many worker nodes to spawn by performing speedup analysis. In doing so, I also hope to get a sense of the communication latency between the CV server and the rover and to account for it in my control logic.

Verification and Validation

Throughout my progress in developing the CV server, the laser pointer, and communication protocols, I have been running unit tests to make sure the modules being implemented function as desired and meet our design requirements. I have thoroughly tested the laser pointer activation through visual inspection and execution of the server-to-rover communication protocol. I have also thoroughly tested the accuracy of the object detection algorithm by running it on stock images and have attained a top-1 accuracy of 98%, which is significantly greater than our design requirement of 90%.

I am also going to analyse the speedup achieved by the distributed CV server by unit testing object detection on the video stream, which will allow me to determine the number of CV nodes to spawn. The speedup must be greater than 5x as per our design requirements. I will also thoroughly test the accuracy of the laser's point control through scenario testing and tune this logic to achieve our goal offsets. This will be done by manually measuring the distance between the laser and the human when the entire system is running.
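The speedup analysis could be organised along these lines. This is only a sketch: the function names are hypothetical, and the timings in the example are made-up placeholders rather than measurements. Speedup is taken as the single-node processing time divided by the time with N worker nodes, and the smallest node count that exceeds the 5x requirement is selected.

```python
def speedup(baseline_s, parallel_s):
    """Speedup of a parallel run relative to the single-node baseline."""
    return baseline_s / parallel_s


def min_workers_for_target(timings, target=5.0):
    """Return the smallest worker count whose speedup exceeds the target.

    `timings` maps worker count -> seconds to process the same video segment,
    and must include an entry for 1 worker (the baseline).
    Returns None if no configuration meets the target.
    """
    baseline = timings[1]
    for workers in sorted(timings):
        if speedup(baseline, timings[workers]) > target:
            return workers
    return None


# Placeholder timings in seconds (illustrative only, not measured results).
example_timings = {1: 10.0, 2: 5.2, 4: 2.7, 8: 1.5}
print(min_workers_for_target(example_timings))
```

In practice, each timing would be averaged over several runs on the same video segment so that noise in one measurement does not decide the node count.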