This week, our team finalized the switch of our project idea from pool refereeing to car tracking and stream generation. Because of this, I was able to order the parts we need most right now: the racetrack, the Arduino, and the motors. We will also need cameras soon, but for now we plan to use the camera I bought earlier to test pool double hits. I personally researched the hardware side: why we chose the Arduino Uno, why servo motors over stepper motors, and how the hardware components interface with each other. I have not yet worked out how to mount the cameras, but I plan to use some sort of stationary object as the stand, attach a motor to it, and then attach the camera to the motor's rotor. Our group met or talked almost daily to get each part of the design report at least partly thought out. I focused on the system implementation and design studies for the hardware, as well as testing, verification, and validation. We plan to meet tomorrow to combine our work so that we have something to present to the TA and faculty. We have not been able to make a new schedule yet, so we will do that tomorrow as well.

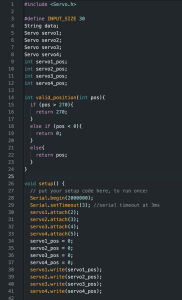

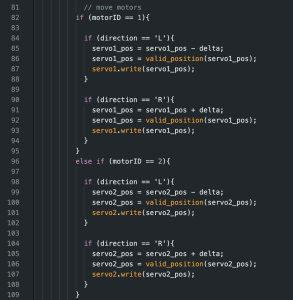

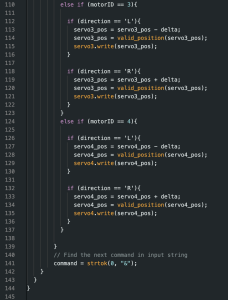

We do not have a schedule at the moment, but we know we are quite behind. We hope that finalizing the idea will give us the boost we need to actually start working on the project. We will also use spring break and some of our slack time to catch up. To catch up personally, I will start on Arduino code that controls the motors in fine increments once the motors and Arduino arrive. After that, I will start designing the camera stands.

Next week, I hope to have some form of code ready that lets the Arduino control the camera motor movements given input from the car detection/tracking module. I also hope to start designing the camera stand, or at least have an idea of what it will look like and how I will build it. Lastly, we will work on the design report throughout next week.
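As a rough starting point for the motor-control code described above, the core logic could look something like the sketch below. It is plain C++ with no Arduino dependencies so it can be tried on a laptop before the parts arrive. Everything specific in it is an assumption rather than a decision we have made: the function names, the 544 to 2400 µs pulse range (the Arduino Servo library defaults, which may not match our servos), the 180° travel, the `degPerPixel` gain, and the idea that the tracking module reports the car's horizontal pixel position.

```cpp
#include <algorithm>

// Assumed servo limits. These match the Arduino Servo library defaults,
// but the actual servo's datasheet should be checked.
constexpr int kMinPulseUs = 544;
constexpr int kMaxPulseUs = 2400;
constexpr double kMaxAngleDeg = 180.0;

// Convert a pan angle in degrees to a pulse width in microseconds.
// Working in microseconds allows finer steps than whole degrees.
int angleToPulseUs(double angleDeg) {
    double clamped = std::max(0.0, std::min(angleDeg, kMaxAngleDeg));
    double span = static_cast<double>(kMaxPulseUs - kMinPulseUs);
    return kMinPulseUs + static_cast<int>(clamped / kMaxAngleDeg * span + 0.5);
}

// One proportional update: nudge the pan angle toward the car based on how
// far the car is from the center of the frame (hypothetical tracker output).
// Whether a positive error should increase or decrease the angle depends on
// how the servo ends up being mounted.
double nextPanAngle(double currentDeg, int carX, int frameWidth,
                    double degPerPixel) {
    int errorPx = carX - frameWidth / 2;
    double target = currentDeg + errorPx * degPerPixel;
    return std::max(0.0, std::min(target, kMaxAngleDeg));
}
```

On the Arduino itself, the value returned by `angleToPulseUs` would be passed to the Servo library's `writeMicroseconds`, with the car position arriving from the tracking module over some link such as serial.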