This week we gave our final presentation to our Capstone group. We wanted a solid working demo ready beforehand, and ideally a video to show off our progress. We were able to achieve this!

Since the report focused mainly on our testing, verification, and validation, we pushed hard to finalize our testing strategies and collect data.

Here is a brief outline of our major tests:

- Measuring how many frames we get per camera at different power levels. This helps us assess how good the feed would look from any single camera.

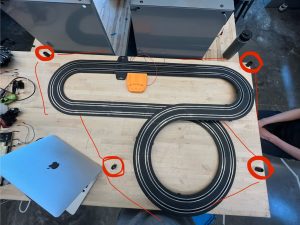

- Testing the camera switching. This included proximity tests in which the car was moved back and forth between the cameras to check whether the switch took place as predicted.

- Detection tests with the car positioned in different orientations and under a few different lighting conditions. These tests were not conclusive, since the number of possible orientations and lighting conditions is large. Ideally we would have tested at the extremes of the design requirements, but reproducing lighting conditions proved challenging.
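As a rough illustration of the first test in the list above, here is a minimal sketch of how a per-camera frame rate could be computed from frame arrival times. The function name `frames_per_second` and the idea of logging one timestamp per received frame are assumptions made for this example, not a description of our actual test harness.

```python
def frames_per_second(timestamps):
    """Average frame rate from frame arrival times given in seconds.

    `timestamps` is assumed to be sorted in ascending order, with one
    entry per frame received from a single camera.
    """
    if len(timestamps) < 2:
        return 0.0  # not enough frames to measure an interval
    elapsed = timestamps[-1] - timestamps[0]
    if elapsed <= 0:
        return 0.0  # guard against identical or out-of-order timestamps
    # N frames span N - 1 intervals
    return (len(timestamps) - 1) / elapsed
```

Running this once per camera and per power level would give one number per cell of a results table like the one pictured above.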

While we could not fully account for lighting changes, we did make one change to accommodate the slower switching process: the switching logic now takes the order of the cameras into account. This order is recorded after the initial lap and updated so that any camera removals or additions are seamless (for example, when a camera has to be shut down or started up mid-race).
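The camera-order idea can be sketched roughly as follows. This is only an illustration under stated assumptions: the class name `CameraOrder`, its method names, and the choice to build the order from first detections during the initial lap are all hypothetical and are not taken from our codebase.

```python
class CameraOrder:
    """Tracks the order in which cameras see the car around the track."""

    def __init__(self):
        self.order = []      # camera ids in track order
        self.locked = False  # True once the initial lap is complete

    def record_detection(self, camera_id):
        # During the initial lap, append each camera the first time it sees the car.
        if not self.locked and camera_id not in self.order:
            self.order.append(camera_id)

    def finish_initial_lap(self):
        self.locked = True

    def remove_camera(self, camera_id):
        # Camera shut down mid-race: drop it so its neighbours become adjacent.
        if camera_id in self.order:
            self.order.remove(camera_id)

    def add_camera(self, camera_id, after=None):
        # Camera started mid-race: insert after a known camera, else append at the end.
        if camera_id in self.order:
            return
        if after in self.order:
            self.order.insert(self.order.index(after) + 1, camera_id)
        else:
            self.order.append(camera_id)

    def next_camera(self, current_id):
        # The camera expected to see the car next, wrapping around the lap.
        if current_id not in self.order:
            return None
        i = self.order.index(current_id)
        return self.order[(i + 1) % len(self.order)]
```

With an order like this, the switcher only needs to watch the expected next camera, which is one way to compensate for a slower switch.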

To fully accommodate this change, we will need more testing to make sure the ordering works. One key edge case is rapid switching between cameras, caused either by noisy detections or by true detections of the car in quick succession. In both cases, the rapid switching makes the stream unwatchable. Since stream quality is an important design requirement, we will need to add provisions to the switching algorithm to handle this edge case.
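One possible provision, sketched here as an assumption and not as what we have implemented, is to debounce the switch: require a minimum dwell time on the active camera and several consecutive detections on the new camera before switching. The class name `SwitchDebouncer` and the parameters `min_dwell_s` and `confirm_frames` are hypothetical, and their default values are placeholders, not tuned numbers.

```python
class SwitchDebouncer:
    """Suppresses rapid camera switches caused by noise or quick re-detections."""

    def __init__(self, min_dwell_s=2.0, confirm_frames=3):
        self.min_dwell_s = min_dwell_s        # minimum time to stay on a camera
        self.confirm_frames = confirm_frames  # consecutive detections needed to switch
        self.active = None
        self.last_switch_t = None
        self.candidate = None
        self.candidate_count = 0

    def update(self, detected_camera, now):
        """Feed one detection result; return the camera that should be on stream.

        `detected_camera` is the id of the camera that currently sees the car,
        or None if no camera does. `now` is a timestamp in seconds.
        """
        if self.active is None:
            # First detection: nothing to debounce against yet.
            self.active = detected_camera
            self.last_switch_t = now
            return self.active
        if detected_camera is None or detected_camera == self.active:
            # No competing camera: discard any pending candidate.
            self.candidate = None
            self.candidate_count = 0
            return self.active
        if detected_camera == self.candidate:
            self.candidate_count += 1
        else:
            self.candidate = detected_camera
            self.candidate_count = 1
        dwell_ok = now - self.last_switch_t >= self.min_dwell_s
        if dwell_ok and self.candidate_count >= self.confirm_frames:
            self.active = self.candidate
            self.last_switch_t = now
            self.candidate = None
            self.candidate_count = 0
        return self.active
```

The trade-off is latency: a larger `min_dwell_s` or `confirm_frames` gives a steadier stream but delays legitimate switches, so both values would need to be checked against the design requirements.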