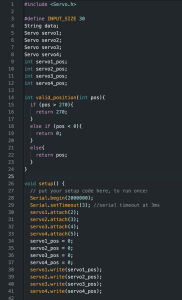

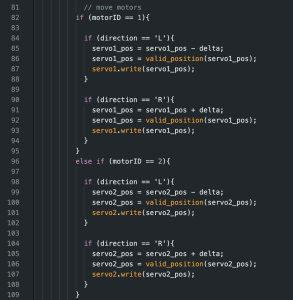

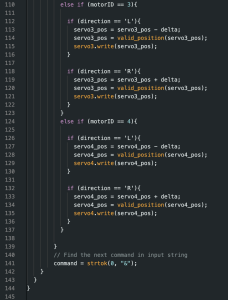

This week, I mainly worked on interfacing with the object detection module. Since my motor control module runs on the Arduino side, I needed a way to take the bounding box of the detected object from the detection module and turn it into a command telling the motors to pan left or right by a given number of degrees. For now, I am using a simple algorithm: I place an imaginary box in the middle of the frame, and if the detected object's center point falls to the left or right of that box, the function sends a serial message to the Arduino telling it to pan left or right by x degrees.

The tricky part is making the panning smooth. The two factors that matter most are the width of the imaginary box and the number of degrees the motor turns per command. Currently, I am using a box width of 480 pixels out of the 640-pixel frame width and a 7-degree pan step, since we expect the car to be moving while the camera is capturing it. I will do more testing to finalize these values.
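The box check described above can be sketched roughly as follows. This is only an illustration under several assumptions, not the code running in the project: the bounding box is assumed to arrive as `(x_min, y_min, x_max, y_max)` in pixels, the names `pan_step` and `encode_command` are made up for this example, and the `L7`/`R7` message format is a hypothetical stand-in for whatever the Arduino sketch actually parses. Only the 640, 480, and 7 values come from the description above.

```python
FRAME_WIDTH = 640  # frame width in pixels (value from this post)
BOX_WIDTH = 480    # width of the centered imaginary box (value from this post)
STEP_DEG = 7       # degrees to pan per command (value from this post)


def pan_step(bbox, frame_width=FRAME_WIDTH, box_width=BOX_WIDTH, step_deg=STEP_DEG):
    """Return signed pan degrees: negative = left, positive = right, 0 = hold.

    bbox is assumed to be (x_min, y_min, x_max, y_max) in pixels.
    """
    x_min, _, x_max, _ = bbox
    center_x = (x_min + x_max) / 2

    # Horizontal edges of the imaginary box centered in the frame.
    left_edge = (frame_width - box_width) / 2
    right_edge = left_edge + box_width

    if center_x < left_edge:
        return -step_deg
    if center_x > right_edge:
        return step_deg
    return 0  # object is inside the box, so no command is needed


def encode_command(degrees):
    """Hypothetical wire format: b'L7\\n' or b'R7\\n'; empty bytes mean no move."""
    if degrees == 0:
        return b""
    direction = "L" if degrees < 0 else "R"
    return f"{direction}{abs(degrees)}\n".encode("ascii")
```

With the current values, the box spans x = 80 to x = 560, so a command is only sent once the object's center enters the outer 80 pixels on either side. The resulting bytes could then be written to the Arduino over a serial port, for example with pyserial's `Serial.write`. Whether "left" in the image corresponds to a left pan of the motor depends on how the camera is mounted.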

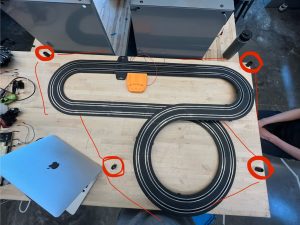

I also worked on finding a way to slow down the car this week, as it was moving too fast for testing purposes. On Professor Kim's advice, we tried controlling the track's supply voltage manually instead of plugging in directly through the 9V adapter. I removed the batteries and adapter and took apart the powered section of the track. I then soldered two wires to the ground and power terminals and clipped them onto a DC power supply, and it worked perfectly. The supply is set to 4V for testing, which makes the car significantly slower than before.

Camera assembly was also wrapped up this week, as the screws finally arrived. Although the assembly functions, I will try to make it more stable over the next few weeks when I get the chance.

My progress is now on schedule.

Next week, I hope to have an integrated, demo-able system. I also want to keep fine-tuning the motor controls and to stabilize the camera stands.