What did you personally accomplish this week on the project?

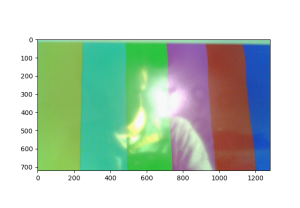

This week, I got the camera driver working on the ESP32. The current code works at the 240p resolution that we are targeting. I also tested it at 360p, 480p, and 1080p to make sure it can accommodate future extensions if need be.

In addition to the camera code, I wrote some code that saves the image data from the camera to a microSD card. While this is not needed for our project, it serves as a useful debugging and development tool. Since the Wi-Fi communication has not yet been written, the microSD card is how we are currently testing the camera driver and pulling images from it.

Finally, I made a couple of small modifications to the JPEG encoder and decoder programs so that their pixel format matches that of the OV2640. The OV2640 outputs images with 5-bit red and blue channels and a 6-bit green channel. The three channels are packed together into 2 bytes before being stored. This configuration is commonly referred to as RGB565. However, the proof-of-concept JPEG encoder and decoder use 8 bits for all three channels, also known as RGB888. To eliminate the need to convert from RGB565 to RGB888, I decided it was easier to modify the proof of concept to handle the RGB565 format directly.
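To make the RGB565 layout concrete, here is a minimal sketch of how a pixel is packed into and unpacked from a 16-bit word. This is not the project's actual encoder code. The function names are hypothetical, and the high-byte-first order in `rgb565_load_be` is an assumption about how the frame buffer is stored that should be checked against the camera driver configuration.

```c
#include <stdint.h>

/* Pack 8-bit R, G, B into one RGB565 word laid out as RRRRRGGG GGGBBBBB.
 * The low 3 bits of red and blue and the low 2 bits of green are dropped. */
static inline uint16_t rgb888_to_rgb565(uint8_t r, uint8_t g, uint8_t b)
{
    return (uint16_t)(((r >> 3) << 11) | ((g >> 2) << 5) | (b >> 3));
}

/* Expand an RGB565 word back to 8 bits per channel. The top bits of each
 * channel are replicated into the low bits so full scale maps to 255. */
static inline void rgb565_to_rgb888(uint16_t px, uint8_t *r, uint8_t *g, uint8_t *b)
{
    uint8_t r5 = (uint8_t)((px >> 11) & 0x1F);
    uint8_t g6 = (uint8_t)((px >> 5) & 0x3F);
    uint8_t b5 = (uint8_t)(px & 0x1F);

    *r = (uint8_t)((r5 << 3) | (r5 >> 2));
    *g = (uint8_t)((g6 << 2) | (g6 >> 4));
    *b = (uint8_t)((b5 << 3) | (b5 >> 2));
}

/* Read one pixel from a raw byte buffer.
 * ASSUMPTION: the high byte is stored first; swap the indices if the
 * buffer turns out to be little-endian. */
static inline uint16_t rgb565_load_be(const uint8_t *buf)
{
    return (uint16_t)((buf[0] << 8) | buf[1]);
}
```

For example, pure red (255, 0, 0) packs to `0xF800` and pure green (0, 255, 0) packs to `0x07E0`. An encoder that reads RGB565 directly only needs the unpacking step at the point where it would otherwise read three separate bytes per pixel.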

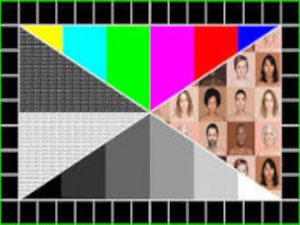

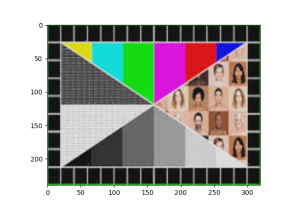

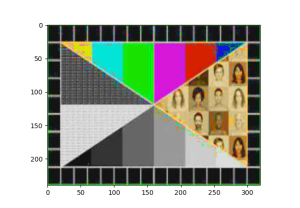

With the camera driver and JPEG pipeline modified, we are now able to take a picture, compress it, and then decompress it. The following image shows the end result after going through all of those steps. The reflections in the image are from the screen that was displaying the color test bar images.

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

I am currently ahead of schedule by approximately two weeks.

What deliverables do you hope to complete in the next week?

Next week, I hope to finish porting the encoder over to the ESP32. After this, we should only have to run the decoder on the laptop and test the encoder's performance when running on the ESP32.