What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

As described in the project proposal, the main technical risks we have identified in the project are as follows:

1. We need to be able to compute frame compression fast enough:

One of our primary requirements is that the entire system be inexpensive and run on low power. In light of this, we decided to use ESP32 microcontrollers to compress and transmit the image frames from the camera. The ESP32s have limited compute, which could cause the frame compression algorithm to run slower than expected. If need be, we plan to switch to a different compression algorithm, such as delta compression.
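As a rough illustration of the delta compression fallback, the sketch below sends only the pixels that changed since the previous frame and rebuilds the frame on the receiving side. It is a minimal sketch, not our implementation: the function names `delta_encode` and `delta_decode`, the flat grayscale byte layout, and the (index, value) pair format are all assumptions made for the example, and it is written in Python for readability rather than as ESP32 firmware.

```python
def delta_encode(prev_frame, curr_frame):
    """Return (index, new_value) pairs for every pixel that changed.

    Both frames are assumed to be flat bytes objects of equal length
    (one byte per grayscale pixel); this layout is hypothetical.
    """
    if len(prev_frame) != len(curr_frame):
        raise ValueError("frames must have the same length")
    return [
        (i, new)
        for i, (old, new) in enumerate(zip(prev_frame, curr_frame))
        if old != new
    ]


def delta_decode(prev_frame, delta):
    """Rebuild the current frame from the previous frame and a delta."""
    frame = bytearray(prev_frame)
    for index, value in delta:
        frame[index] = value
    return bytes(frame)


if __name__ == "__main__":
    previous = bytes([10, 10, 10, 10])
    current = bytes([10, 12, 10, 9])
    delta = delta_encode(previous, current)
    # Only the changed pixels are transmitted.
    print(len(delta), "of", len(current), "pixels changed")
    assert delta_decode(previous, delta) == current
```

A scheme like this only pays off when consecutive frames are mostly identical, which we would expect for a static surveillance scene but would still need to measure.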

2. Stream enough data over the wireless connection:

Our prototype MVP uses 6 cameras and a single receiver node. The receiver node’s ESP32 will receive data frames from all 6 camera nodes simultaneously, so a large amount of data will be transmitted over the wireless connection. We need to ensure that we drop no more than 10% of the data frames. Staying at or under 10% dropped frames means that only about 100ms of video will be lost when a frame is dropped, and no animal will be able to cross the surveillance area in less than 100ms. If we exceed the 10% threshold, we plan to increase the number of data access points on the receiver node. The frame drop percentage will be computed by comparing the number of frames transmitted with the number of frames received, and we will verify that the loss does not go above 10%.
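The frame drop check described above can be sketched as follows. This is a minimal sketch assuming each camera node counts the frames it sends and the receiver counts the frames it gets; the function names and the example counts are hypothetical, and only the 10% threshold comes from our requirement.

```python
def drop_percentage(frames_sent, frames_received):
    """Percentage of transmitted frames that never reached the receiver."""
    if frames_sent <= 0:
        raise ValueError("frames_sent must be positive")
    if not 0 <= frames_received <= frames_sent:
        raise ValueError("frames_received must be between 0 and frames_sent")
    return 100.0 * (frames_sent - frames_received) / frames_sent


def within_drop_budget(frames_sent, frames_received, max_drop_pct=10.0):
    """True if the measured loss stays at or below the allowed threshold."""
    return drop_percentage(frames_sent, frames_received) <= max_drop_pct


if __name__ == "__main__":
    # Hypothetical counts for one camera node over a test run.
    sent, received = 1000, 925
    print(f"drop: {drop_percentage(sent, received):.1f}%")
    print("within budget:", within_drop_budget(sent, received))
```

Running the check per camera node, rather than only on the aggregate, would show whether one node is responsible for most of the loss.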

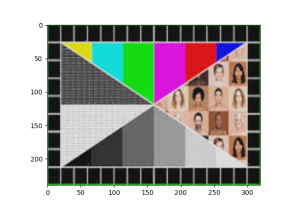

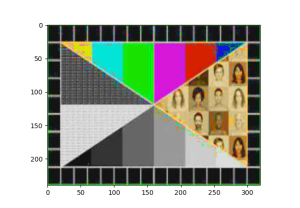

3. Decompress all the incoming frames fast enough:

As mentioned above, the receiver node will collect data from the 6 camera nodes, decompress it, and then drive the display. The decompression algorithm has to be fast enough to support concurrent streaming from all 6 camera nodes without high latency or computation errors. We will use an FPGA on the receiver node to perform the decompression. If an issue arises with the FPGA, we plan to move to a larger FPGA with higher compute capability and to use parallel processing techniques.

4. Optimize performance to minimize power consumption:

One of the major requirements for any portable security system is that it does not need to be charged often. Keeping this in mind, we envision our system running for at least 24 hours on a single charge or battery. The entire pipeline, including the cameras’ feed capture, compression, transmission, decompression, and streaming to the portable monitor, has to be optimized so that the setup works for at least 24 hours on a single charge or battery. Our contingency plan is to increase the battery size if the system still draws too much power after final optimizations.
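To decide whether a larger battery is needed, the 24-hour target can be turned into a capacity estimate. The sketch below is only a back-of-the-envelope calculation: the function name, the usable-capacity fraction, and the example current draw are assumptions for illustration, not measurements from our system.

```python
def required_capacity_mah(avg_current_ma, runtime_hours=24.0, usable_fraction=0.8):
    """Battery capacity (mAh) needed to sustain an average current draw.

    usable_fraction is an assumed derating for the share of the rated
    capacity that can actually be used; it is not a measured value.
    """
    if avg_current_ma < 0 or runtime_hours <= 0:
        raise ValueError("current must be non-negative and runtime positive")
    if not 0 < usable_fraction <= 1:
        raise ValueError("usable_fraction must be in (0, 1]")
    return avg_current_ma * runtime_hours / usable_fraction


if __name__ == "__main__":
    # Hypothetical average draw for one camera node, in milliamps.
    node_draw_ma = 150.0
    capacity = required_capacity_mah(node_draw_ma)
    print(f"needs roughly {capacity:.0f} mAh for 24 hours")
```

Once we have measured the actual average current of each node, the same calculation tells us how much headroom the chosen battery leaves.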

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

No major changes have been made yet. However, we still need to decide on the final specs for the camera (240p or not) and on other technical specs for the microcontroller and monitor, based on our use case.

Provide an updated schedule if changes have occurred.

No schedule changes have occurred.