Final Report Can be Downloaded Below…

Carnegie Mellon ECE Capstone, Spring 2023. By Aditya Agarwal, Kumar Darsh, Alejandro Ruiz

This week I gave our final presentation, and I spent most of my time preparing for it.

We also worked on our poster, and I took the lead on it. We continued user testing: I looked for additional sheet music to test on, such as Happy Birthday and Hot Cross Buns, and I reached out to a couple of musicians I know to see if they were available to test our application.

Finally, I looked into how to deploy our application to the cloud on an EC2 instance with an Apache server.

No major design changes were required for our system.

One of the major risks is that songs played at varying tempos are sometimes transcribed incorrectly; for example, our transcription might skip over some notes when a passage is played too fast.

We spent most of the week testing our system. The tests we performed are described below.

SNR Tests

We added white noise to the audio signal to see how it would affect the transcription. We kept increasing the amount of noise, which lowered the SNR, to find the SNR value below which audio should be rejected.

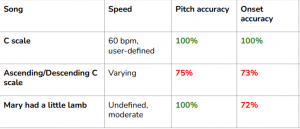
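The noise-injection step can be sketched in Python as follows. This is an illustrative sketch, not the team's code: it assumes the audio is already available as a list of float samples and that the noise is Gaussian. The helper names (`add_white_noise`, `snr_db`) are hypothetical. The random noise is rescaled so the output hits the requested SNR exactly, using SNR_dB = 10 log10(P_signal / P_noise).

```python
import math
import random


def signal_power(samples):
    """Mean squared amplitude of a sequence of samples."""
    return sum(s * s for s in samples) / len(samples)


def snr_db(clean, noisy):
    """SNR in dB, treating (noisy - clean) as the noise component."""
    noise = [n - c for c, n in zip(clean, noisy)]
    return 10 * math.log10(signal_power(clean) / signal_power(noise))


def add_white_noise(samples, target_snr_db, seed=None):
    """Return samples plus Gaussian white noise scaled to hit target_snr_db exactly."""
    rng = random.Random(seed)
    noise = [rng.gauss(0.0, 1.0) for _ in samples]
    # From SNR_dB = 10 * log10(P_signal / P_noise):
    target_noise_power = signal_power(samples) / 10 ** (target_snr_db / 10)
    # Rescale the random draw so its power equals the target power
    scale = math.sqrt(target_noise_power / signal_power(noise))
    return [s + scale * n for s, n in zip(samples, noise)]


# Example: a 440 Hz tone sampled at 44.1 kHz, degraded at several SNR levels
rate = 44_100
tone = [math.sin(2 * math.pi * 440 * t / rate) for t in range(rate // 10)]
for target in (80, 70, 60, 50, 40):
    noisy = add_white_noise(tone, target, seed=0)
    print(target, round(snr_db(tone, noisy), 2))
```

In a sweep like this, each noisy signal would be fed into the transcription pipeline and its pitch accuracy recorded, so the lowest SNR that still meets the requirement can be read off directly.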

Pitch Tests

By looking at the output of our pitch processor, we can determine whether any incorrect pitches were detected. The pitch accuracy is the number of correct notes divided by the total number of notes. If it is greater than or equal to 95%, the test passes; otherwise, it fails.
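A minimal sketch of this pass/fail check, assuming the expected and detected pitches are available as aligned lists of note names. The function names and the note-name format are hypothetical, and the note lists below are illustrative, not test results.

```python
def pitch_accuracy(expected, detected):
    """Fraction of expected notes whose detected pitch matches, compared position by position.

    Assumes both lists are aligned note-for-note; a missing detection counts as wrong
    and extra detections beyond len(expected) are ignored.
    """
    if not expected:
        raise ValueError("expected must contain at least one note")
    correct = sum(1 for e, d in zip(expected, detected) if e == d)
    return correct / len(expected)


def pitch_test_passes(expected, detected, threshold=0.95):
    """A test passes when pitch accuracy is at least the threshold (95% by default)."""
    return pitch_accuracy(expected, detected) >= threshold


# Hypothetical example: opening of Twinkle Twinkle Little Star with one wrong pitch
expected = ["C4", "C4", "G4", "G4", "A4", "A4", "G4"]
detected = ["C4", "C4", "G4", "G4", "A4", "B4", "G4"]
print(pitch_accuracy(expected, detected))     # 6/7, about 0.857
print(pitch_test_passes(expected, detected))  # False
```

Position-by-position comparison is the simplest reading of "correct notes over total notes"; if the processor can insert or drop notes, the two sequences would need to be aligned first, otherwise one skipped note shifts every later comparison.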

Rhythm Tests

By looking at the output of the rhythm processor, we can determine the number of notes and rests detected. The output is an array of 1s and 0s: a 0 means a rest has occurred, and a 1 means a note is being played. We count the number of notes and the number of rests detected. For a test to pass, we wanted the accuracy to be greater than or equal to 90%; otherwise, it fails.
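One way to count notes and rests from the 0/1 array is to treat each run of consecutive 1s as one note and each run of 0s as one rest. The sketch below assumes that interpretation and scores accuracy as one minus the total count error divided by the expected number of events. The report does not give its exact formula, so both the counting rule and the scoring are assumptions, and the function names are hypothetical.

```python
from itertools import groupby


def count_notes_and_rests(frames):
    """Count runs in a 0/1 rhythm array: a run of 1s is one note, a run of 0s one rest."""
    notes = rests = 0
    for value, _run in groupby(frames):
        if value:
            notes += 1
        else:
            rests += 1
    return notes, rests


def rhythm_accuracy(frames, expected_notes, expected_rests):
    """Assumed scoring: 1 - (total count error / expected number of events), floored at 0."""
    notes, rests = count_notes_and_rests(frames)
    error = abs(notes - expected_notes) + abs(rests - expected_rests)
    return max(0.0, 1 - error / (expected_notes + expected_rests))


frames = [1, 1, 0, 1, 1, 1, 0, 0, 1]
print(count_notes_and_rests(frames))          # (3, 2)
print(rhythm_accuracy(frames, 3, 2) >= 0.90)  # True
```

With run-based counting, two notes played back to back with no 0 between them merge into a single run, and silence at the start of a recording counts as a rest. Both effects are worth checking when interpreting a failed test.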

Some results for 3 songs can be seen in the table below:

This week I have been focusing on testing, which included testing SNR threshold values. For example, I added white noise to audio signals and observed how the transcription output changed as I increased the amount of noise. Setting the SNR threshold to 60 dB seems to be a good choice, because below that the pitch accuracy of the transcribed sheet falls below our 95% requirement. I also tested rhythm and pitch accuracy on simple songs, and they seem to meet our requirements. However, on more complex songs with varying tempos, the transcription might not be as accurate.

We should be on track. Next week will mainly involve working on the papers and the poster and getting everything ready for demo day.

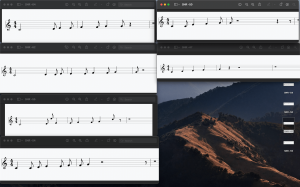

This week was mainly spent on testing, which meant playing audio files and checking how they were transcribed. For example, because notes were overflowing their staves, I created a function to make sure that each stave receives the right number of notes, so that the total duration of its notes is not longer than the duration of the stave. Another issue was that the time signature always displayed as 3/8; I fixed it so that it now displays the time signature selected by the user.

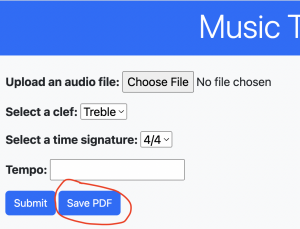

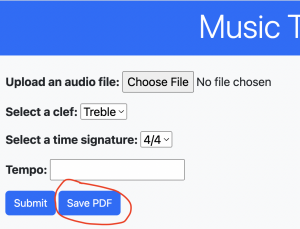
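The stave-filling function described above could look like the following greedy sketch. It assumes note durations are given in beats and that a note which does not fit in the current stave moves whole to the next one (no ties across bar lines). `pack_into_staves` is a hypothetical name. Fractions avoid floating-point error when summing durations such as 1/3 of a beat.

```python
from fractions import Fraction


def pack_into_staves(durations, beats_per_stave):
    """Greedily place note durations (in beats) into staves so that no stave's
    total exceeds beats_per_stave; a note that does not fit starts a new stave."""
    capacity = Fraction(beats_per_stave)
    staves = [[]]
    used = Fraction(0)
    for duration in map(Fraction, durations):
        if duration > capacity:
            raise ValueError(f"a {duration}-beat note is longer than one stave")
        if used + duration > capacity:
            staves.append([])
            used = Fraction(0)
        staves[-1].append(duration)
        used += duration
    return staves


# 4/4 time: four beats per stave; groups the beats as [1, 1, 2] and [1, 3]
print(pack_into_staves([1, 1, 2, 1, 3], 4))
```

Moving an overflowing note whole keeps the sketch simple but can leave a stave short of a full measure; splitting the note and tying the two halves across the bar line would be the musically stricter alternative.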

I have also been trying to fix the PDF-generation issue, since Kumar was not able to implement it, and I have also found it challenging. Normally, you could just use jsPDF to render a given div element into a PDF. I tested this with a simple div containing a sentence, and it generated a PDF containing that text. However, when we add the div containing the SVG element created by VexFlow, the PDF comes out completely blank. We will have to look into different libraries.

We might be slightly behind because of this PDF issue, but if it does not work, I do not think it is worth stressing over, since in my opinion generating a PDF is not a very important feature for our project.

Next week will mostly involve testing, working on the PDF issue, and finishing the final papers.

Our primary design change is the note-formatting step. To make the project more robust, we decided that all the code that parses the durations of the Note structures into a form VexFlow can read would run in the back-end, after the notes have been integrated but before they are sent to the front-end. This step accesses the user-input data, specifically the time signature, to mathematically determine how each note should be represented in the desired output. For example, a note with a duration of 1 beat would be represented as a quarter note if the time signature is 4/4, but as an eighth note if the time signature is 3/8.
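The mapping in this example reduces to one division: a beat is 1/d of a whole note in an n/d time signature, so a note lasting b beats spans b/d of a whole note. The sketch below assumes only undotted, untied notes and maps the result to VexFlow's standard duration strings ("w", "h", "q", "8", "16"). The function name is hypothetical, not the project's code.

```python
from fractions import Fraction

# VexFlow duration strings for undotted notes, keyed by length as a fraction of a whole note
VEXFLOW_DURATIONS = {
    Fraction(1, 1): "w",
    Fraction(1, 2): "h",
    Fraction(1, 4): "q",
    Fraction(1, 8): "8",
    Fraction(1, 16): "16",
}


def beats_to_vexflow(beats, time_signature):
    """Convert a duration in beats to a VexFlow duration string.

    time_signature is (beats_per_measure, beat_unit); one beat lasts 1/beat_unit
    of a whole note, so b beats last b/beat_unit of a whole note.
    """
    numerator, denominator = time_signature
    whole_fraction = Fraction(beats) / denominator
    if whole_fraction not in VEXFLOW_DURATIONS:
        raise ValueError(
            f"{beats} beat(s) in {numerator}/{denominator} needs a dotted or tied note"
        )
    return VEXFLOW_DURATIONS[whole_fraction]


print(beats_to_vexflow(1, (4, 4)))  # q  (quarter note)
print(beats_to_vexflow(1, (3, 8)))  # 8  (eighth note)
```

Durations such as 3 beats in 4/4 (a dotted half) raise an error here; handling them would need a dotted note or a tie, which this sketch deliberately leaves out.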

We’ve encountered a recurring issue in testing: audio recorded on an iPhone is considered unreadable by scipy’s wavfile.read. We’re considering using the librosa library to read audio files instead. We are also having trouble figuring out how to download the transcript as a PDF; jsPDF is giving us too many issues, and we’re looking at alternatives.
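One hypothesis worth ruling out (the report does not confirm it) is that some failing recordings are not RIFF/WAVE files at all, for example an M4A or CAF file saved or renamed with a .wav extension. A file's first bytes identify its container, which can be checked with only the standard library. The helper names are hypothetical, and this check will not catch a genuine WAV file whose encoding scipy cannot decode.

```python
def sniff_audio_container(header: bytes) -> str:
    """Guess an audio file's container from its first 12 bytes."""
    if header[:4] == b"RIFF" and header[8:12] == b"WAVE":
        return "wav"  # RIFF/WAVE, the format scipy.io.wavfile.read expects
    if header[:4] == b"caff":
        return "caf"  # Apple Core Audio Format
    if header[4:8] == b"ftyp":
        return "mp4/m4a"  # ISO base media file (MP4, M4A)
    return "unknown"


def sniff_file(path: str) -> str:
    """Read just the header of the file at `path` and classify it."""
    with open(path, "rb") as f:
        return sniff_audio_container(f.read(12))
```

Running `sniff_file` on each recording that fails would quickly show whether the failures share a container type, which helps decide whether switching readers (such as librosa) or converting files up front is the right fix.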

For the next 2 weeks, we will be focusing exclusively on testing. This involves quantitative testing of note and rhythm accuracy. This week we will also start asking peer musicians to rate the transcriptions with a score out of 10.

Not much to report this week: Alejandro and I tried to make the PDF download feature work with jsPDF and another library, but it fails with various import and JavaScript errors.

For now, we’ve moved on to testing, since we are able to display the music notes on the webpage. I downloaded some sheet music and played it through an audio app. We’re now running these recordings through our app to measure our accuracy percentages. There are some file issues that we are trying to resolve.

Given that we’re just testing and refining for the next couple of weeks, I would say I’m on track with the rest of the group.

This week I continued working on the backend for the PDF button. One issue is that the documentation is not very clear about using the jsPDF library to write HTML into a PDF file. I thought I had implemented it, but the method I used (fromHTML) has been deprecated, so now I’m trying to use doc.html instead. Another issue is the program flow: all the information in our HTML div is lost after the program finishes running, yet the PDF button should still be clickable after the sheet music is generated. I might need Alejandro’s help in resolving this.

The other issue we faced was our code not accepting certain .wav files. I spent a while debugging the method we use to read in and clean the .wav files. We weren’t able to resolve this, but we seemed to get lucky: Alejandro recorded a file of “Twinkle Twinkle Little Star” that the code accepted.

So far, we have tested our app on short musical audio clips. We plan to run it on Twinkle Twinkle Little Star, as well as to collect feedback from musicians who know what the sheet music for an audio clip should look and sound like. I am designing this feedback form and will send it out by next week. In my view, the front-end already meets all of our requirements, and, as described above, I am working on PDF generation and possibly a user login page.

Progress

I realized that the time-signature form options were being processed in Python as decimal numbers. Because 6/8 and 3/4 both evaluate to 0.75, I had to add a switch statement that gives 6/8 a different value from 3/4 when selected; otherwise, the two would be indistinguishable.
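An alternative to special-casing values in a switch is to keep the form value as a string and parse it into a (beats per measure, beat unit) pair, which keeps every time signature distinct. This is a hypothetical helper that assumes the form submits strings like "6/8", not the project's code:

```python
def parse_time_signature(value: str) -> tuple[int, int]:
    """Parse a form value such as "6/8" into (beats_per_measure, beat_unit)."""
    numerator, denominator = value.split("/")
    return int(numerator), int(denominator)


# As decimals the two signatures collide ...
assert 6 / 8 == 3 / 4 == 0.75
# ... but as parsed pairs they stay distinct
print(parse_time_signature("6/8"), parse_time_signature("3/4"))  # (6, 8) (3, 4)
```

Keeping the pair also preserves the beat unit, which downstream steps such as the note-duration mapping need anyway.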

I also implemented a backend function that calculates the number of staves we need to draw based on the duration of the audio file.
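A sketch of the stave-count calculation, assuming the tempo in beats per minute is known and each stave holds a fixed number of measures (one by default). Neither assumption is stated in the report, and `count_staves` is a hypothetical name. The total number of beats is duration × BPM / 60; dividing by beats per stave and rounding up gives the stave count.

```python
import math


def count_staves(duration_s, bpm, beats_per_measure, measures_per_stave=1):
    """Number of staves needed to notate duration_s seconds of audio.

    Assumes a constant tempo of `bpm` beats per minute and a fixed number of
    measures per stave.
    """
    total_beats = duration_s * bpm / 60
    beats_per_stave = beats_per_measure * measures_per_stave
    # Round before ceil so float noise (e.g. 5.0000000001) does not add a stave
    return max(1, math.ceil(round(total_beats / beats_per_stave, 9)))


print(count_staves(10, 120, 4))  # 20 beats / 4 per stave -> 5
print(count_staves(11, 120, 4))  # 22 beats / 4 per stave -> 6
```

This also gives a simple hand-checkable reference when testing the real function against audio files of known length.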

I developed the front-end code for the new save-PDF button, and I helped Kumar with ideas for implementing the backend function that turns the VexFlow output into a PDF when the user clicks the “save-pdf” button.

As we discussed in the team status report, we are having issues with some audio files. I therefore recorded Twinkle Twinkle Little Star being played with my phone and sent that audio file to our system to process. Some audio files are handled correctly, but others give us errors. We are still not sure why, so part of next week’s focus will be figuring this out.

I also cleaned up code across the project by removing dead code and adding documentation, so that we are all on the same page and can more easily understand each other’s code.

Finally, I edited our code that draws the notes using VexFlow. Previously, for every stave we looped through all of the notes and drew the ones assigned to that stave. This was inefficient, because we iterated over every note on every pass even though we only needed the notes belonging to the stave being drawn in that iteration. I changed how the notes are processed by building a dictionary that maps each staveIndex to its notes. Now we simply look up the notes for a given stave index, which reduces the complexity, and the system did indeed get faster.
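The dictionary change is language-independent; here it is sketched in Python rather than the project’s JavaScript. Notes are assumed to be dicts with a `staveIndex` field, and the `key` field is illustrative. Building the map costs one pass over the N notes, so drawing S staves drops from O(S·N) to O(N + S).

```python
from collections import defaultdict


def group_notes_by_stave(notes):
    """Build {staveIndex: [notes]} in a single pass over all notes."""
    by_stave = defaultdict(list)
    for note in notes:
        by_stave[note["staveIndex"]].append(note)
    return dict(by_stave)


notes = [
    {"staveIndex": 0, "key": "c/4"},
    {"staveIndex": 1, "key": "d/4"},
    {"staveIndex": 0, "key": "e/4"},
]
by_stave = group_notes_by_stave(notes)
for stave_index in range(2):
    # Each stave now looks up only its own notes instead of scanning the full list
    keys = [n["key"] for n in by_stave.get(stave_index, [])]
    print(stave_index, keys)
```

Using `.get(stave_index, [])` keeps staves with no notes (for example, a full-measure rest) from raising an error during drawing.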

We should be on track in terms of progress.

Next week, I will focus on figuring out the issues with the audio files, as well as helping my teammates with their current tasks, since I know Kumar, at least, has been having trouble implementing the backend of the save-PDF button.

Testing

We will need to test the output of our integrator system. We have already started by recording simple piano audio and feeding it in. First, we need to test that the number of notes in each stave is correct. We can do this by checking, for the given time signature, that the total length of the notes in a stave does not exceed the length of one measure, and that the first note of the next stave could not have fit in the previous one. We also need to test that the total number of notes is correct, by listening to the audio, counting the notes we hear, and comparing that count with the number of untied notes our integrator outputs. We should also test the accuracy of the notes’ pitches by recording the pitch of each note played and comparing it with the pitch our integrator outputs. Since our requirement is at least 90% pitch accuracy, the test passes as long as it meets this threshold. Finally, we should test smaller functions, like the function I created to compute the number of staves, by working out by hand how many staves an audio file needs and comparing that with the function’s output.
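The two stave checks described above can be automated. This sketch assumes each stave is given as a list of note durations in beats and that a full stave holds exactly one measure. `check_stave_fill` is a hypothetical helper that returns a list of problems, empty when the layout passes.

```python
from fractions import Fraction


def check_stave_fill(staves, beats_per_stave):
    """Return a list of problems in a stave layout; an empty list means the test passes.

    staves is a list of staves, each a list of note durations in beats.
    """
    capacity = Fraction(beats_per_stave)
    problems = []
    for i, stave in enumerate(staves):
        total = sum((Fraction(d) for d in stave), Fraction(0))
        if total > capacity:
            problems.append(f"stave {i} is overfull: {total} > {capacity} beats")
        # The first note of the next stave must not have fit into this one
        if i + 1 < len(staves) and staves[i + 1]:
            if total + Fraction(staves[i + 1][0]) <= capacity:
                problems.append(f"stave {i} had room for the next stave's first note")
    return problems


print(check_stave_fill([[1, 1, 2], [1, 3]], 4))  # []
print(check_stave_fill([[2, 1], [1, 3]], 4))
```

The last stave is allowed to be partially filled, since a recording can end mid-measure.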

Risks

While attempting to test some home-made audio files, we found that the app wouldn’t accept files of the type we were inputting. This was confusing as they seemed to be the same file types as the original tests we were using. It seems like the method of recording, such as the microphone used or the placement of the mic, can affect whether or not a recording is suitable for the app. We will need to determine what the key factors are, and whether or not we can modify our code to allow for a wider range of recording types.

Design Changes

There are currently no major design changes to our project.

Progress
