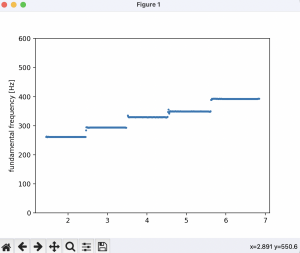

This week I worked on determining how to use the short-time Fourier transform (STFT) to depict an audio signal in the time and frequency domains simultaneously. I recorded several basic audio samples of myself playing the piano on my iPhone: one was a C note held for a couple of seconds, and another was the first five notes of an ascending C scale. I started in MATLAB, since it is well suited to this kind of signal processing and plotting. My goal was to isolate only the relevant frequencies in a signal and determine at what points in time they reach a significant magnitude.

I used the stft function from SciPy's scipy.signal module to generate the STFT:

f, t, Zxx = stft(time_domain_sig, fs=sample_rate, window='hann', nperseg=sixteenth, noverlap=sixteenth // 8)

The key difficulty here was determining the parameters of the STFT. The function asks us to pick a window shape, as well as the size of the window and how much consecutive windows should overlap. I first tried a rectangular window sized to one second of the audio clip, but I found that a smaller Hann window did a better job of accounting for the signal's constantly changing magnitude. I also set the window size to half the sample rate (0.5 seconds of audio), because I played each note of the scale for about one second, so roughly two windows apply the Fourier transform to each note. I translated this code to a Python script and wrote a method that takes a file name as input and generates the STFT.
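As a rough illustration of that kind of method, here is a minimal sketch rather than my exact script. The function names stft_of_signal and stft_of_wav are hypothetical, the 0.5-second window and 1/8 overlap mirror the values described above, and it assumes the iPhone recording has already been converted to a WAV file.

```python
import numpy as np
from scipy.io import wavfile
from scipy.signal import stft


def stft_of_signal(samples, sample_rate, window_seconds=0.5, overlap_fraction=0.125):
    """Return (freqs, times, magnitudes) of a 1-D signal using a Hann window.

    window_seconds and overlap_fraction are illustrative defaults,
    not tuned values.
    """
    nperseg = int(sample_rate * window_seconds)   # window length in samples
    noverlap = int(nperseg * overlap_fraction)    # samples shared by adjacent windows
    freqs, times, Zxx = stft(samples, fs=sample_rate, window="hann",
                             nperseg=nperseg, noverlap=noverlap)
    return freqs, times, np.abs(Zxx)              # keep magnitude, drop phase


def stft_of_wav(path, **kwargs):
    """Load a WAV file (assumed format) and compute its STFT magnitudes."""
    sample_rate, samples = wavfile.read(path)
    samples = samples.astype(np.float64)
    if samples.ndim == 2:                         # stereo: average the channels
        samples = samples.mean(axis=1)
    return stft_of_signal(samples, sample_rate, **kwargs)


if __name__ == "__main__":
    # Synthetic 440 Hz tone standing in for a recorded note.
    sr = 8000
    t = np.arange(2 * sr) / sr
    freqs, times, mags = stft_of_signal(np.sin(2 * np.pi * 440 * t), sr)
    print("strongest frequency (Hz):", freqs[np.argmax(mags.mean(axis=1))])
```

Each column of the returned magnitude array is the spectrum of one window, so the row with the largest magnitude in a given column gives the dominant frequency at that time.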

This code resulted in the following graph:

In the graph, the earlier pulses line up more closely with the actual start of each note than the later ones do, which suggests that a smaller window is needed to get DFTs that are accurate in time. The cost of this accuracy is a slower and more redundant process of obtaining the frequency-domain representation of the signal.
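To make the window-size tradeoff concrete, the sketch below computes how far apart the frequency bins are and how far each window advances for a given choice of parameters. The helper name stft_resolution is hypothetical, and the 44100 Hz sample rate in the usage lines is an assumption, not a value taken from my recordings.

```python
def stft_resolution(sample_rate, nperseg, noverlap):
    """Summarize the time and frequency resolution of an STFT configuration."""
    hop = nperseg - noverlap  # samples between the starts of adjacent windows
    return {
        "bin_spacing_hz": sample_rate / nperseg,   # distance between frequency bins
        "window_seconds": nperseg / sample_rate,   # audio covered by one window
        "hop_seconds": hop / sample_rate,          # time step between windows
    }


if __name__ == "__main__":
    fs = 44100  # assumed sample rate
    for nperseg in (fs // 2, fs // 10):
        print(nperseg, stft_resolution(fs, nperseg, nperseg // 8))
```

A shorter window pins down note onsets more precisely, but the frequency bins get wider, so the window cannot shrink indefinitely before adjacent notes start to blur together.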

I am on schedule for researching the frequency-domain representations of the audio signals, because my goal was to have a proper back-end representation of the signal's magnitude at relevant frequencies. My plan for next week is to fine-tune this data to be more accurate and to write code that detects which frequencies correspond to which musical note. That code will generate a dictionary-like representation of each note, its magnitude, and its length. My deliverable will be Python code that outputs an easily comprehensible list of notes in text form.
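One possible starting point for the note-detection step is sketched below. This is not finished code: it assumes equal temperament with A4 tuned to 440 Hz, and the names frequency_to_note and describe_note, as well as the dictionary keys, are placeholders.

```python
import math

NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def frequency_to_note(freq_hz, a4_hz=440.0):
    """Map a frequency to the nearest note name, e.g. 'C4'.

    Assumes equal temperament; a4_hz is the reference pitch for A4.
    """
    # MIDI note numbers place A4 at 69, with 12 semitones per octave.
    midi = round(69 + 12 * math.log2(freq_hz / a4_hz))
    return f"{NOTE_NAMES[midi % 12]}{midi // 12 - 1}"


def describe_note(freq_hz, magnitude, start_s, end_s):
    """Build a dictionary-like record for one detected note."""
    return {
        "note": frequency_to_note(freq_hz),
        "magnitude": magnitude,
        "length_s": end_s - start_s,
    }


if __name__ == "__main__":
    # Hypothetical values standing in for one detected STFT peak.
    print(describe_note(262.0, 0.8, 0.0, 1.0))
```

Rounding to the nearest semitone means a peak that lands on a nearby STFT bin, such as 262 Hz instead of 261.63 Hz, still maps to the intended note.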

