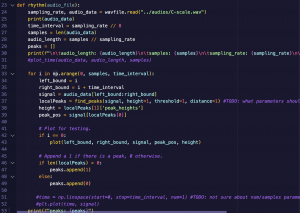

My work this week focused primarily on the software side of our project. Within the Django app, I designed a method that outputs a list of the notes detected in a time signal.

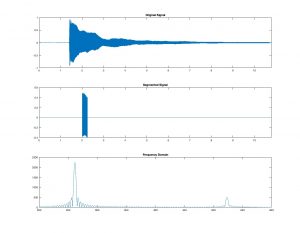

The initial method examined the signal one segment at a time. Each segment was N samples long, where N is one quarter of the sample rate, so each segment covered 0.25 s of audio. I copied the samples in each segment into their own array and then ran SciPy's fft() on it. However, this posed a problem. Because the segment is much shorter than the full signal, its spectrum has far fewer frequency bins, spaced sample_rate / N = 4 Hz apart, so the frequency-domain output was less accurate.
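A minimal sketch of this first approach follows. It assumes the recording is a mono NumPy array sampled at an integer `sample_rate` in Hz. The function name `segment_peaks` is hypothetical. The sketch uses SciPy's `rfft()`, the real-input variant of `fft()`, so that only non-negative frequencies are returned. Because each FFT sees only N samples, the peak can only land on a grid spaced `sample_rate / N` = 4 Hz apart.

```python
import numpy as np
from scipy.fft import rfft, rfftfreq


def segment_peaks(signal, sample_rate):
    """Return the peak frequency (Hz) of each quarter-second segment.

    Each segment is copied into its own short array before the FFT, so the
    spectrum has only N // 2 + 1 bins spaced sample_rate / N Hz apart.
    """
    n = sample_rate // 4  # N = one quarter of the sample rate
    freqs = rfftfreq(n, d=1 / sample_rate)  # bin centres, spaced sample_rate / n
    peaks = []
    for start in range(0, len(signal) - n + 1, n):
        segment = np.array(signal[start:start + n])  # copy of one segment
        spectrum = np.abs(rfft(segment))
        peaks.append(freqs[np.argmax(spectrum)])  # frequency of the tallest bin
    return peaks
```

For one second of middle C (261.63 Hz) at 44.1 kHz, each of the four segments reports 260 Hz, which is the nearest 4 Hz bin.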

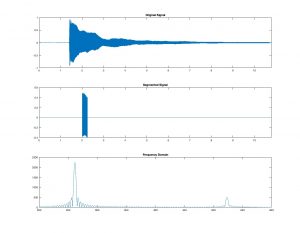

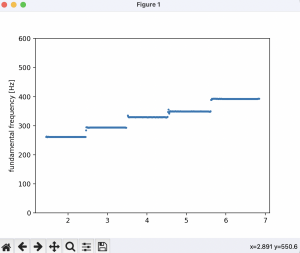

I then changed my process. Instead of creating an array the size of a single segment, I copied the entire time signal and multiplied it by a box (rectangular) window, so that every sample outside the desired time range is 0. Because the FFT still runs over the full length of the signal, this gives the same frequency-bin spacing as analyzing the entire signal at once. The code below is the output for

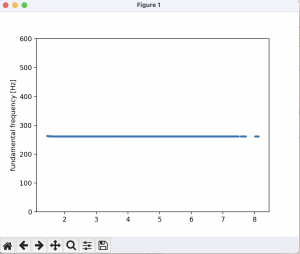
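The box-window approach can be sketched as follows, again assuming a mono NumPy array. `windowed_peak` and its `start`/`stop` sample indices are hypothetical names. Since the masked copy keeps the full length, `rfftfreq(len(signal), ...)` gives bins spaced `sample_rate / len(signal)` Hz apart. Strictly speaking, zeroing everything outside the window interpolates the segment's spectrum onto that finer grid rather than adding new information. It does, however, let the peak be read to a fraction of a hertz.

```python
import numpy as np
from scipy.fft import rfft, rfftfreq


def windowed_peak(signal, sample_rate, start, stop):
    """Peak frequency (Hz) of signal[start:stop], using a full-length FFT.

    The whole signal is copied and multiplied by a box (rectangular) window
    that is 1 inside [start, stop) and 0 elsewhere, so the FFT length is
    len(signal) and the bins are spaced sample_rate / len(signal) Hz apart.
    """
    box = np.zeros(len(signal))
    box[start:stop] = 1.0
    masked = np.asarray(signal, dtype=float) * box  # zero outside the range
    spectrum = np.abs(rfft(masked))
    freqs = rfftfreq(len(signal), d=1 / sample_rate)
    return freqs[np.argmax(spectrum)]
```

In the console output, each bracketed integer appears to be the index of the peak bin. Every printed frequency is its index times about 0.12878 Hz (for example, 2030 × 0.12878 ≈ 261.42 Hz), which would correspond to a signal roughly 7.8 s long.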

One major error that has put me behind schedule is that a single window can capture portions of the signal with different frequencies. As a result, the calculated fundamental frequency can correspond to a note between the two notes actually played. For example, an F# is detected at the border between the F and G notes of the C scale. I plan to address this by building on Alejandro's progress with the rhythm processor. I will try to determine how its pulse-detection algorithm can be applied to the frequency processor, so that the FFT is not calculated at the points in the signal where a new pulse is detected. Integrating the processors was already part of the plan for next week, so this won't be a serious deviation from the schedule.
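The following sketch shows one way the pulse information could be used. It assumes the rhythm processor supplies the sample indices where each new pulse starts. The `onsets` list here is a hypothetical interface, not Alejandro's actual output format, and `peaks_between_onsets` is a hypothetical name. Given those indices, the frequency processor can place one box window per inter-onset region, so no FFT spans a note change.

```python
import numpy as np
from scipy.fft import rfft, rfftfreq


def peaks_between_onsets(signal, sample_rate, onsets):
    """Peak frequency (Hz) for each region between consecutive onsets.

    `onsets` is a sorted list of sample indices where a new pulse starts
    (hypothetically supplied by the rhythm processor); any audio before the
    first onset is ignored. Each box window spans exactly one inter-onset
    region, so no FFT mixes samples from two different notes.
    """
    bounds = list(onsets) + [len(signal)]
    freqs = rfftfreq(len(signal), d=1 / sample_rate)
    x = np.asarray(signal, dtype=float)
    peaks = []
    for start, stop in zip(bounds[:-1], bounds[1:]):
        masked = np.zeros_like(x)
        masked[start:stop] = x[start:stop]  # box window over one note
        peaks.append(freqs[np.argmax(np.abs(rfft(masked)))])
    return peaks
```

Consider a synthetic signal of one second of F4 followed by one second of G4, with onsets at samples 0 and 44100. The two regions report peaks within 1 Hz of 349.23 Hz and 392.00 Hz.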

```
>>> from audio_to_freq import *
>>> getNoteList('')
[2030]
Note detected at [261.41826923]
C
[2030]
Note detected at [261.41826923]
C
[2030]
Note detected at [261.41826923]
C
[2030]
Note detected at [261.41826923]
C
[2030]
Note detected at [261.41826923]
C
[2278]
Note detected at [293.35508242]
D
[2278]
Note detected at [293.35508242]
D
[2278]
Note detected at [293.35508242]
D
[2567]
Note detected at [330.57177198]
F
[2544]
Note detected at [327.60989011]
E
[2566]
Note detected at [330.44299451]
F
[2562]
Note detected at [329.92788462]
F
[2713]
Note detected at [349.37328297]
F#
[2713]
Note detected at [349.37328297]
F#
[2710]
Note detected at [348.98695055]
F
[2716]
Note detected at [349.75961538]
F#
[3048]
Note detected at [392.51373626]
G#
[3040]
Note detected at [391.48351648]
G
[3049]
Note detected at [392.64251374]
G#
[3039]
Note detected at [391.35473901]
G
[3045]
Note detected at [392.12740385]
G#
['C', 'C', 'C', 'C', 'C', 'D', 'D', 'D', 'F', 'E', 'F', 'F', 'F#', 'F#', 'F', 'F#', 'G#', 'G', 'G#', 'G', 'G#']
```
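The letter names in the listing above come from mapping each peak frequency to a pitch. Below is a minimal sketch of such a mapping. It assumes twelve-tone equal temperament tuned to A4 = 440 Hz. `note_name` and `NOTE_NAMES` are hypothetical, and this is not necessarily how `getNoteList` names notes. The sketch converts the frequency to a MIDI note number and rounds to the nearest semitone.

```python
import math

# Pitch classes in MIDI order: MIDI note 60 (middle C) % 12 == 0 -> "C".
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def note_name(freq_hz, a4=440.0):
    """Name of the equal-tempered pitch class nearest to freq_hz.

    MIDI note number m satisfies f = a4 * 2 ** ((m - 69) / 12); rounding m
    to the nearest integer picks the closest semitone.
    """
    midi = round(69 + 12 * math.log2(freq_hz / a4))
    return NOTE_NAMES[midi % 12]
```

With nearest-semitone rounding, the readings above at about 330.6 Hz, 349.4 Hz and 392.5 Hz map to E, F and G, since E4, F4 and G4 are 329.63, 349.23 and 392.00 Hz. The listing labels those readings F, F# and G#, so the rounding in the note lookup may be worth checking alongside the window-boundary issue.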

This is an example of how the signal is modified to detect the frequency at a given point. The note detected is a middle C.

s of the layouts and user interfaces that we roughly hope to replicate with our final app d
