What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

- Hardware (Sun A): my biggest concern is designing an actuator system that is both safe and functional. Because I am using 14 solenoids (though not all 14 at once), the system will draw close to 5A, so the 18100 power supply may not be suitable. That current also rules out a breadboard, which means I will have to solder the actuator circuit onto a PCB. A little intimidating… but I am hoping that my experience as a 220 TA and in 474 will help 🙂 If this design does not work (fingers crossed that it does), I will have to send out an SOS (hopefully Prof. Budnik is available to help). If that doesn’t work out either, we’ll build a smaller system with fewer solenoids.

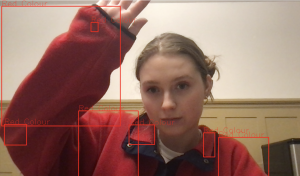
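The roughly 5A estimate in the hardware concern comes from multiplying the current through one solenoid by the number of solenoids energized at the same time. A minimal sketch of that budget follows, assuming each solenoid behaves like a resistive coil at steady state (I = V / R). The 12 V supply, 24 Ω coil resistance, limit of 10 simultaneously active solenoids, 3 A supply rating, and 80% safety margin are all hypothetical placeholders, not measured values from our parts.

```python
# Hypothetical parameters throughout: the real coil resistance, supply voltage,
# and maximum number of simultaneously active solenoids must come from the
# solenoid datasheet and the final design.


def solenoid_current(supply_v: float, coil_ohms: float) -> float:
    """Steady-state current through one solenoid coil (Ohm's law, I = V / R)."""
    return supply_v / coil_ohms


def peak_current(supply_v: float, coil_ohms: float, max_active: int) -> float:
    """Worst-case supply current when `max_active` solenoids are on at once."""
    return max_active * solenoid_current(supply_v, coil_ohms)


def supply_ok(peak_a: float, supply_limit_a: float, margin: float = 0.8) -> bool:
    """True if the peak draw stays within `margin` of the supply's current rating."""
    return peak_a <= margin * supply_limit_a


if __name__ == "__main__":
    # Hypothetical example: 12 V supply, 24-ohm coils, at most 10 of the 14 on at once.
    peak = peak_current(supply_v=12.0, coil_ohms=24.0, max_active=10)
    print(f"peak draw: {peak:.1f} A")  # peak draw: 5.0 A
    # Hypothetical 3 A supply rating, for comparison only.
    print("supply OK:", supply_ok(peak, supply_limit_a=3.0))  # supply OK: False
```

Capping how many solenoids can be on at once (`max_active`) is the main lever here: halving it halves the worst-case draw, which is also why building a smaller system with fewer solenoids works as a fallback.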

- Software (Katherine): my concern is gesture recognition. Recognizing specific gestures with computer vision may be difficult, but I am confident that we can at least locate a hand wearing a colored glove in the camera frame, which would be a perfectly good contingency plan.

- Music Software (Lance): Although I am slightly worried about accidentally letting the music generation algorithm grow out of scope, I am also excited to start building it and making music. Personally, I’d love to implement all of the ideas discussed in my own post (and more!), but I will focus on building the algorithm gradually, from the ground up.
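The colored-glove contingency for the CV component reduces to color thresholding: keep only the pixels whose hue, saturation, and brightness match the glove, then take their centroid as the hand position. The sketch below illustrates that idea in pure Python on a tiny frame of RGB tuples. The function names, the green glove color, and the threshold values are hypothetical. On real camera frames, a library such as OpenCV could do the same with `cv2.cvtColor` (to HSV), `cv2.inRange`, and `cv2.moments`, though note that OpenCV's 8-bit HSV uses hue values from 0 to 179 rather than the 0 to 1 scale used here.

```python
import colorsys
from typing import Optional, Sequence, Tuple

RGB = Tuple[int, int, int]


def hue_in_range(h: float, lo: float, hi: float) -> bool:
    """Hue test on colorsys's 0-1 hue circle; lo > hi means the range wraps past 1.0 (e.g. red)."""
    return lo <= h <= hi if lo <= hi else (h >= lo or h <= hi)


def locate_glove(
    frame: Sequence[Sequence[RGB]],
    hue_lo: float,
    hue_hi: float,
    min_sat: float = 0.5,
    min_val: float = 0.3,
) -> Optional[Tuple[float, float]]:
    """Return the (x, y) centroid of glove-colored pixels, or None if none match."""
    sum_x = sum_y = 0.0
    count = 0
    for y, row in enumerate(frame):
        for x, (r, g, b) in enumerate(row):
            # colorsys expects channels in [0, 1] and returns h, s, v in [0, 1].
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            # Saturation/brightness floors reject gray, white, and dark pixels.
            if hue_in_range(h, hue_lo, hue_hi) and s >= min_sat and v >= min_val:
                sum_x += x
                sum_y += y
                count += 1
    if count == 0:
        return None
    return (sum_x / count, sum_y / count)


if __name__ == "__main__":
    BLACK, GREEN = (0, 0, 0), (0, 200, 0)
    # Tiny 4x4 test frame with a 2x2 green "glove" patch on the right side.
    frame = [[BLACK] * 4 for _ in range(4)]
    for y in (1, 2):
        for x in (2, 3):
            frame[y][x] = GREEN
    # Pure green sits at hue 1/3 on colorsys's 0-1 scale.
    print(locate_glove(frame, hue_lo=0.25, hue_hi=0.42))  # (2.5, 1.5)
```

Tracking only a centroid is deliberately simple: it gives the hand's position in the frame, which is all the contingency plan needs, and it can later be mapped to screen regions even if full gesture recognition does not pan out.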

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc.)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

- Hardware: we secured a keyboard from ECE Receiving, which frees up $50 in our budget to buy spare MOSFETs in case I burn some out. Other than that, no changes have been made so far.

- CV: Not as of now.

- Music Component: N/A

Provide an updated schedule if changes have occurred.

- N/A

This is also the place to put some photos of your progress or to brag about a component you got working.

Recognizing color!

Our project takes public welfare and societal impact into account: we hope our product will allow people who are otherwise unable to play music to do so.