We decided to slightly simplify our control method in favor of usability. Instead of controlling single notes with one hand and chords with the other, we have opted to control note pitch with one hand and various other parameters (volume, subdivision, rests, etc.) with the other. This should give the user far greater control over what they play. To compensate, the system will need to choose which chords to play while accompanying the user; Lance’s post discusses this in more detail. There is a slight risk of selecting chords that clash with what the user wants to play, but in all honesty, because the key will always be C major (or another mode that uses only the white keys), any unexpected combinations can be handwaved as interesting harmonic intervals. We don’t plan on placing any chord notes above the melody note, so the melody should always remain the highest voice.
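As a rough illustration of the chord-choosing constraint, here is a minimal Python sketch. It assumes notes are MIDI note numbers, that the melody is always on a white key in C major, and that the accompaniment prefers the primary chords (I, IV, V). `choose_accompaniment`, `PREFERRED_DEGREES`, and the preference order are hypothetical and are not the approach described in Lance’s post.

```python
# Pitch classes of the C major scale (the white keys): C D E F G A B
C_MAJOR = [0, 2, 4, 5, 7, 9, 11]

# Hypothetical preference order of scale degrees: I, IV, V first, then the rest
PREFERRED_DEGREES = [0, 3, 4, 5, 1, 2, 6]


def diatonic_triad(degree: int) -> list[int]:
    """Pitch classes of the C-major triad built on `degree` (0 = C, 1 = D, ...)."""
    return [C_MAJOR[(degree + step) % 7] for step in (0, 2, 4)]


def choose_accompaniment(melody: int) -> list[int]:
    """Return MIDI notes for a triad that contains the melody's pitch class,
    with every chord tone placed strictly below the melody note."""
    pc = melody % 12
    if pc not in C_MAJOR:
        raise ValueError(f"MIDI note {melody} is not a white key")
    for degree in PREFERRED_DEGREES:
        triad = diatonic_triad(degree)
        if pc in triad:
            # Each tone goes to its nearest position strictly below the melody;
            # the melody's own pitch class is doubled one octave down (0 -> 12).
            return sorted(melody - ((pc - tone) % 12 or 12) for tone in triad)
    raise AssertionError("every white key belongs to some diatonic triad")
```

For example, `choose_accompaniment(64)` (E4) picks the C major triad and voices it as `[52, 55, 60]`. Because every white key belongs to C, F, or G major, the primary-chord preference always finds a match, and no chord tone ever lands above the melody.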

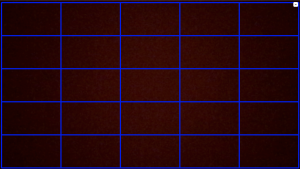

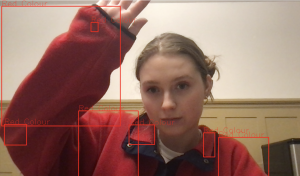

We also created the grids needed for CV recognition in both the note-playing and generative modes, and implemented the actual note generation for the simple note-playing mode. We defined the generative mode to use a grid and a set of stored patterns, and added a block that the user can place their hand in to switch modes (more information in Katherine’s post).
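The grid geometry isn’t spelled out here, so the following is only a minimal Python sketch under assumed values. It assumes the CV step reports each hand’s position as normalized (x, y) coordinates in the 0 to 1 range, that the note-playing grid is 14 columns of white keys starting at C4, and that the mode-switch block is a rectangle in the top-left corner. `Rect`, `MODE_SWITCH_BLOCK`, `STORED_PATTERNS`, `ModeSwitcher`, and the other names are hypothetical.

```python
from dataclasses import dataclass

# Pitch classes of the C major scale (the white keys)
C_MAJOR = [0, 2, 4, 5, 7, 9, 11]


@dataclass(frozen=True)
class Rect:
    """Axis-aligned region in normalized frame coordinates (0..1)."""
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, x: float, y: float) -> bool:
        return self.x0 <= x < self.x1 and self.y0 <= y < self.y1


# Hypothetical layout: the mode-switch block sits in the top-left corner
MODE_SWITCH_BLOCK = Rect(0.0, 0.0, 0.15, 0.15)

# Hypothetical stored patterns for generative mode (scale-degree offsets per step)
STORED_PATTERNS = [
    [0, 2, 4, 2],  # arpeggio up and back
    [0, 1, 2, 3],  # stepwise run
    [4, 2, 0, 2],  # arpeggio down and back
    [0, 0, 4, 4],  # repeated root and fifth
]


def grid_cell(x: float, cols: int) -> int:
    """Map a normalized x coordinate to a column index, clamped to [0, cols)."""
    return max(0, min(int(x * cols), cols - 1))


def note_for_position(x: float, base_midi: int = 60, cols: int = 14) -> int:
    """Note-playing mode: each column is one white key above base_midi (must be a C)."""
    octave, step = divmod(grid_cell(x, cols), 7)
    return base_midi + 12 * octave + C_MAJOR[step]


def pattern_for_position(x: float) -> list[int]:
    """Generative mode: each column selects one of the stored patterns."""
    return STORED_PATTERNS[grid_cell(x, len(STORED_PATTERNS))]


class ModeSwitcher:
    """Toggles modes when a hand *enters* the switch block (edge-triggered),
    so holding the hand inside the block does not toggle on every frame."""

    def __init__(self) -> None:
        self.mode = "note"
        self._was_inside = False

    def update(self, x: float, y: float) -> str:
        inside = MODE_SWITCH_BLOCK.contains(x, y)
        if inside and not self._was_inside:
            self.mode = "generative" if self.mode == "note" else "note"
        self._was_inside = inside
        return self.mode
```

Making the switch edge-triggered is one way to keep a resting hand from flickering between modes; a dwell timer would be a reasonable alternative.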

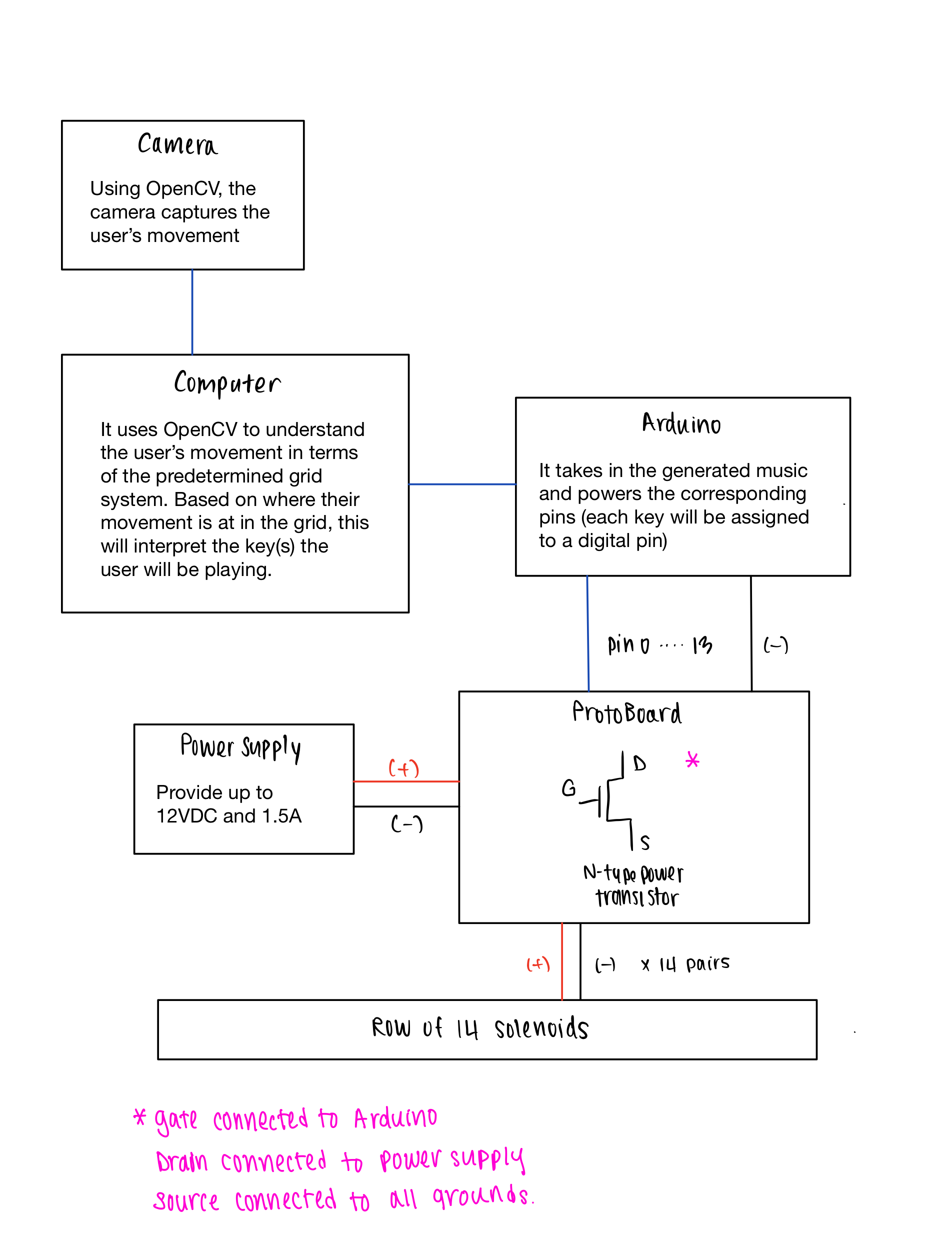

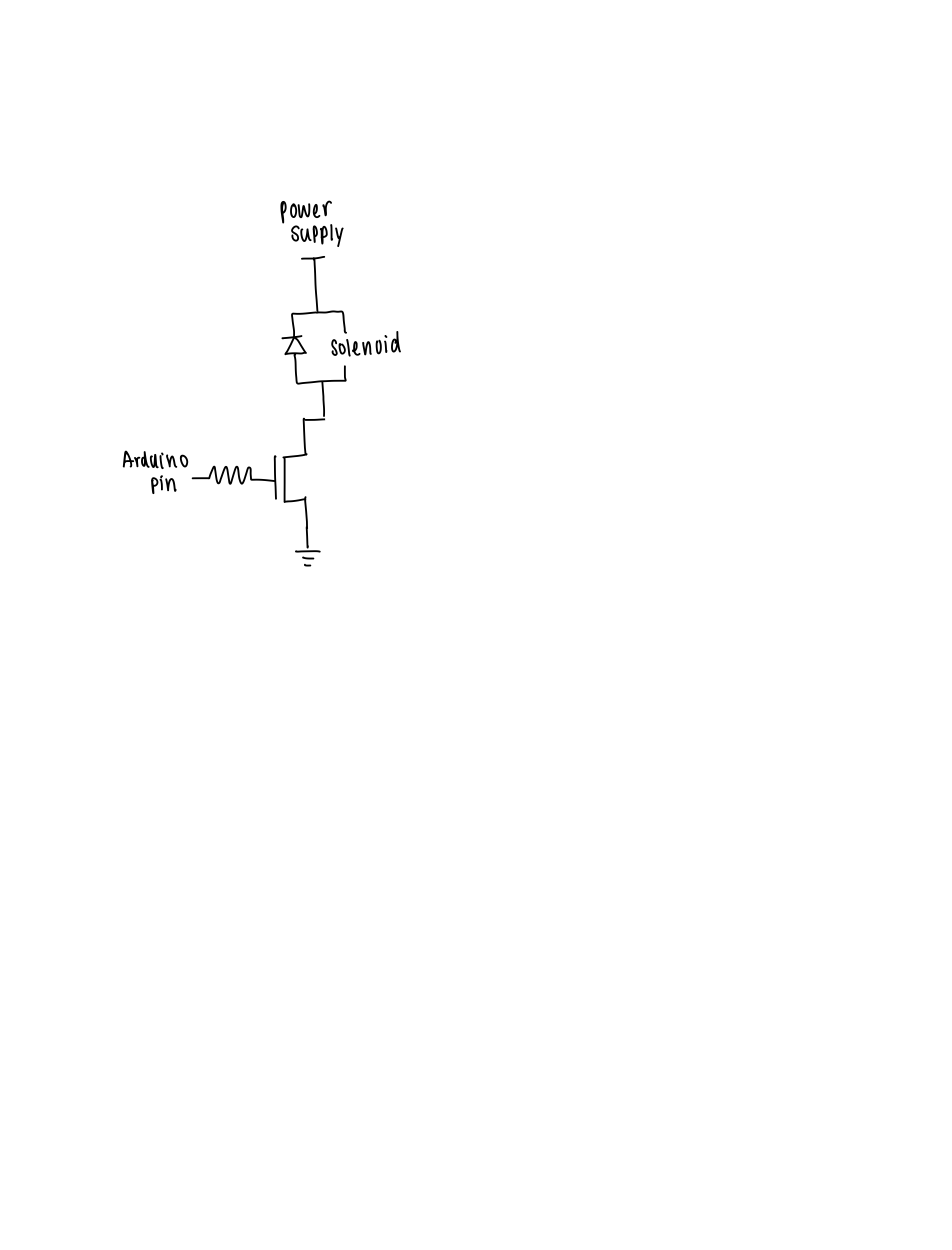

As for the hardware side of the project, I finished ordering the parts to build a prototype of the actuator system. I ordered only one of the small Adafruit solenoids to test whether it is good enough before we commit: it costs only $8 per piece, and since we need 14 solenoids in total, that comes to $112, compared with at least $280 if we have to resort to a $20+ solenoid. I drew up the circuit diagram for the prototype, and I also created a block diagram for the presentation and got it approved by the team. I will be presenting next week, so I have been practicing for that as well!

No update was needed for the schedule because we are all on track 🙂