What did you personally accomplish this week on the project?

I got symbol detection working!!! This was actually such a difficult journey, but I think it will make our project better. Its accuracy is not great yet, but I think I can make it a lot better with more negative images and more training. I also had the idea to combine the color detection and the symbol detection to make it really accurate! This would hopefully mitigate issues like the color detection picking up lips as red, or other things like that. It would also help make up for the symbol detection not being 100% accurate. I would do this by lining the symbol with red and then testing whether the color detection and the symbol detection overlap, which would mean this is most likely the correct symbol. I also fixed an issue in the fabrication design: when I did the new design with the 6mm, I made a mistake on the front plate that makes it tilt upwards. This is a pretty simple fix, so I just corrected the file and cut a new one with Suna.
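To make the overlap idea concrete, here is a minimal sketch of how the check could work. It assumes both detectors report boxes as `(x, y, w, h)`, which is the format OpenCV uses. The function names, the 0.5 threshold, and the sample boxes are all made up for illustration and are not from our actual code.

```python
# Boxes are (x, y, w, h) tuples, the format OpenCV uses for rectangles.
# All names and numbers here are hypothetical, for illustration only.

def overlap_fraction(symbol_box, color_box):
    """Fraction of the symbol box's area that lies inside the color box."""
    sx, sy, sw, sh = symbol_box
    cx, cy, cw, ch = color_box
    # Width and height of the intersection rectangle (0 if the boxes are disjoint).
    ix = max(0, min(sx + sw, cx + cw) - max(sx, cx))
    iy = max(0, min(sy + sh, cy + ch) - max(sy, cy))
    area = sw * sh
    return (ix * iy) / area if area > 0 else 0.0


def confirmed_symbols(symbol_boxes, color_boxes, min_overlap=0.5):
    """Keep only symbol detections that overlap some red region enough."""
    return [
        s for s in symbol_boxes
        if any(overlap_fraction(s, c) >= min_overlap for c in color_boxes)
    ]


symbol_boxes = [(100, 100, 50, 50), (300, 40, 60, 60)]  # made-up cascade hits
color_boxes = [(90, 90, 80, 80)]                        # made-up red regions
print(confirmed_symbols(symbol_boxes, color_boxes))
```

Only a symbol detection that sits mostly inside a red region survives, so a stray cascade hit with no red around it (or a red region with no symbol in it) gets thrown out. The threshold would need tuning on real frames.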

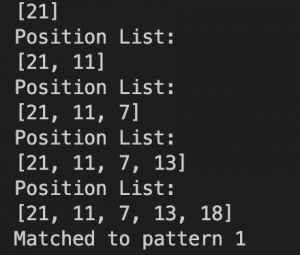

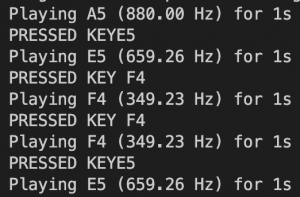

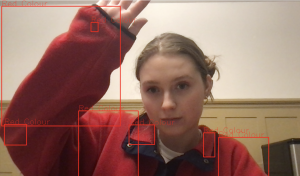

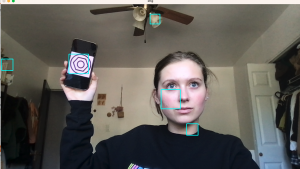

Back to the symbol detection: I had so much trouble getting this to work because recent versions of OpenCV no longer include the commands that I needed, since the developers have been prioritizing other features. Therefore, I had to try to revert to an older version of OpenCV (below 3.4), but this led to so many issues. I tried using a virtual environment on my computer, as well as downloading Anaconda to try to get the older version that way. There were a lot of issues with different packages not being the right version. Finally, I found a video talking about this issue, but they were working on Windows. I tried to convert what they were doing to Mac commands, but they were using resources that weren’t compatible, so in the end I got onto Virtual Andrew and sent all of my data over. There, I was finally able to get the version of OpenCV that I needed and run the training and create-samples commands. I started with a smaller number of photos than I probably should have, just to see if I could get the cascade file, so there is definitely room for improvement. The first image is from when I first got it working, and you can see it is detecting a lot of things beyond just the correct symbol.

I began adjusting the settings for what size it should be detecting, to get rid of some of the unreasonable sizes it was picking up, and got this:

This is a little better. Combining it with color detection and adding more samples should make it much better.
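For reference, the size settings can be passed straight to the detector. This is a rough sketch, assuming `cascade` is a `cv2.CascadeClassifier` loaded from the trained cascade file and `gray_image` is a grayscale frame. The helper name and the pixel limits are placeholders, not the values I actually used.

```python
def detect_in_size_range(cascade, gray_image, min_side=40, max_side=200):
    """Run the cascade, ignoring detections smaller or larger than the limits.

    `cascade` is expected to behave like cv2.CascadeClassifier.
    min_side and max_side are hypothetical pixel limits to tune by hand.
    """
    boxes = cascade.detectMultiScale(
        gray_image,
        scaleFactor=1.1,                # how much the image shrinks per scale step
        minNeighbors=5,                 # higher values reject more weak detections
        minSize=(min_side, min_side),   # skip anything smaller than this
        maxSize=(max_side, max_side),   # skip anything larger than this
    )
    # Convert each (x, y, w, h) row to a plain tuple of ints.
    return [tuple(int(v) for v in box) for box in boxes]
```

In use, this would be called with something like `cv2.CascadeClassifier("cascade.xml")` (the file name is a placeholder) and a frame converted with `cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)`. Raising `minNeighbors` is another knob that usually cuts down false positives, at the cost of missing some real detections.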

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

On schedule.

What deliverables do you hope to complete in the next week?

Fine-tuning the cascade file and combining it with color detection, which shouldn’t be very hard, to get better detection of the symbol. Integration with Lance’s part still needs to be done this week as well.