What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

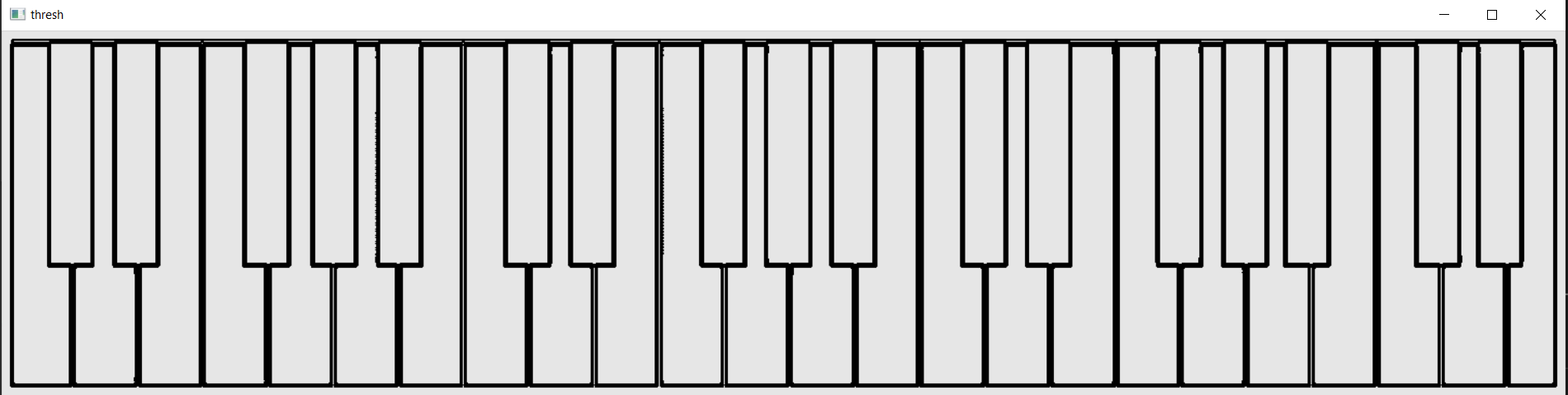

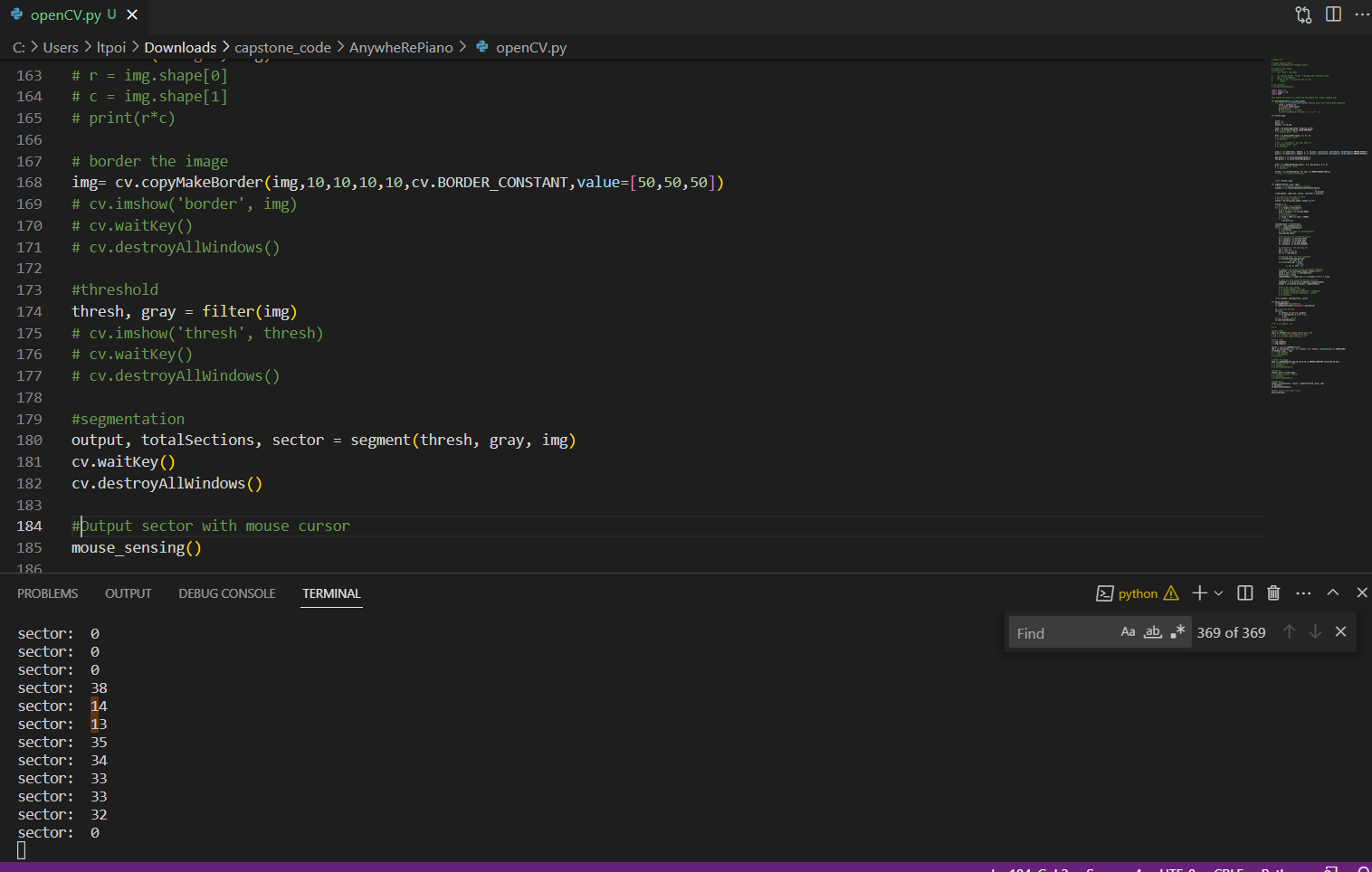

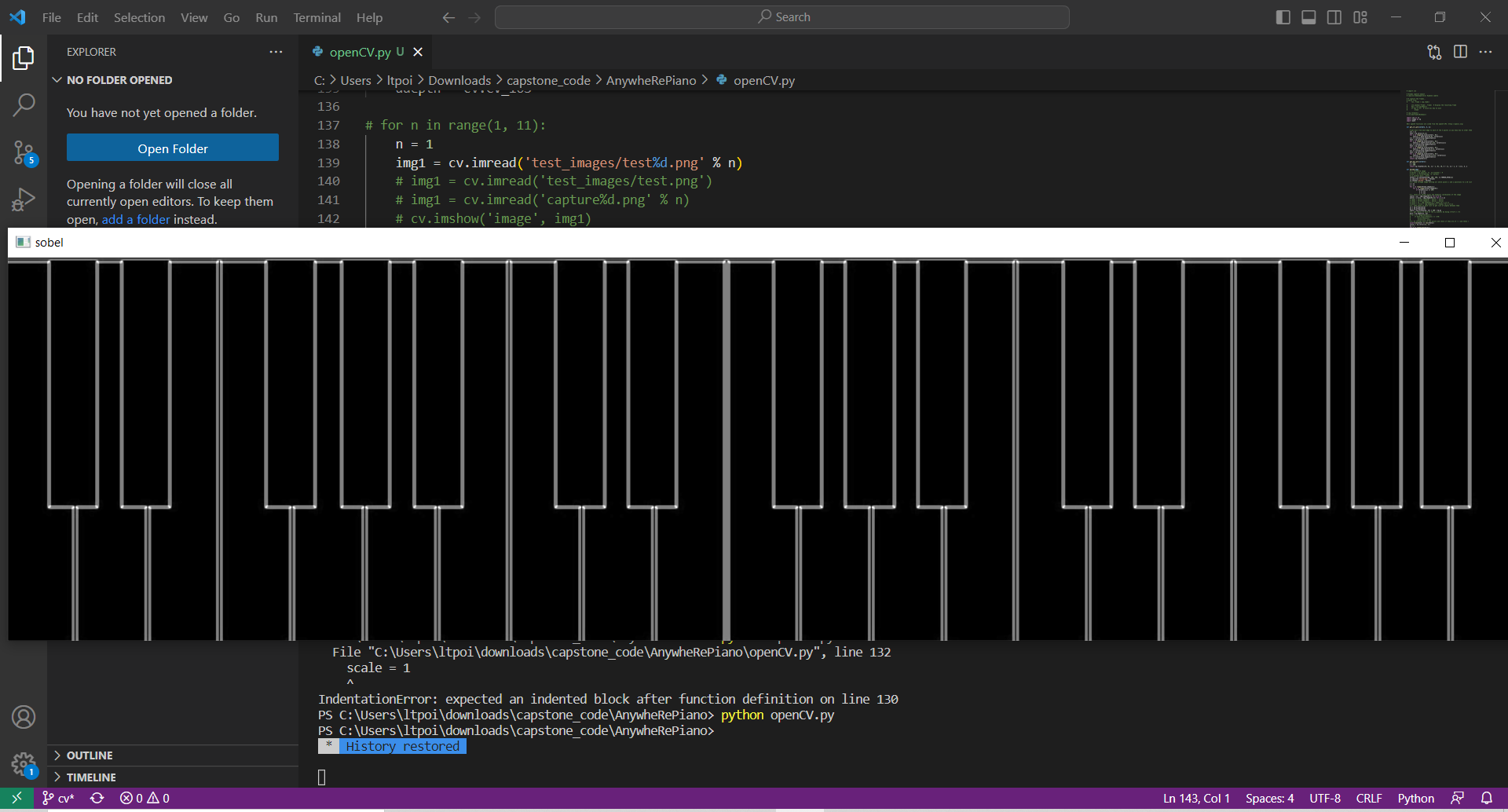

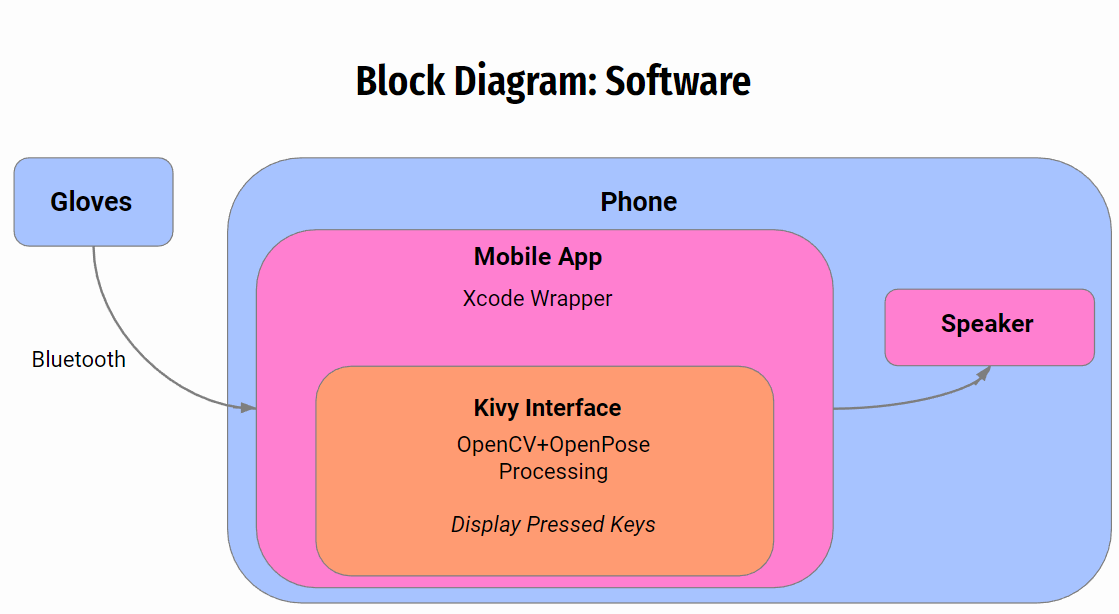

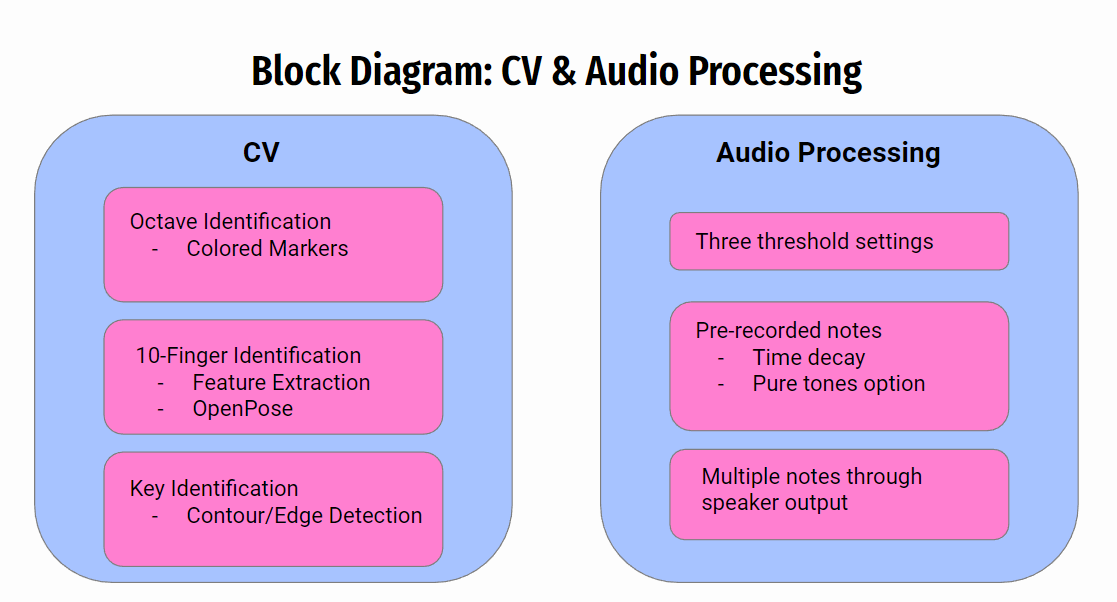

This past week, I researched OpenCV's capabilities to determine which functions to use to identify piano keys and fingers and to differentiate them from one another. This is still a work in progress and needs to be fleshed out further. I also brainstormed with my team about details of our project that were not mentioned during the design review presentation but are integral to it. One of these was researching a battery to power the gloves and microcontroller.

Another, for the CV component, was coloring the octaves on the piano printout to help the software identify which octave is being played. Since the full piano layout cannot fit within the camera's field of view, it is paramount to distinguish the octaves from one another: without seeing the whole keyboard, the camera has no other way to tell which octave it is looking at.
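As a rough illustration of how the colored octaves could be used, the sketch below maps a sampled pixel color to an octave by its hue. The hue-to-octave assignments, the saturation cutoff, and the names `OCTAVE_HUES` and `octave_from_rgb` are all assumptions made for illustration, not choices we have settled on.

```python
import colorsys

# Hypothetical assignment: one printed hue (in degrees) per octave number.
OCTAVE_HUES = {2: 0, 3: 60, 4: 120, 5: 240, 6: 300}


def circular_distance(a, b):
    """Distance between two hue angles on the 0-360 degree color wheel."""
    diff = abs(a - b) % 360
    return min(diff, 360 - diff)


def octave_from_rgb(r, g, b, min_saturation=0.3):
    """Return the octave whose assigned hue is closest to the given 8-bit RGB color.

    Returns None when the color is too unsaturated (white paper, gray shadow)
    to be one of the printed octave colors.
    """
    h, s, _ = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    if s < min_saturation:
        return None
    hue_deg = h * 360
    return min(OCTAVE_HUES, key=lambda o: circular_distance(hue_deg, OCTAVE_HUES[o]))
```

Comparing hues rather than raw RGB values should make the lookup less sensitive to lighting changes, although the cutoff would need tuning against real camera images.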

Continuing the idea of color cues for the CV component, we also discussed adding a colored fiducial marker to each finger of the glove, facing up toward the camera during play. The color would help the CV algorithm recognize that a finger is in the image, and the fiducial would identify which finger is pressing a key. Each of the 10 fiducials would be distinct so that all 10 fingers can be told apart.

I also set up a camera stream from my iPhone to my laptop for testing. This is not strictly necessary yet, since it is preferable to start by testing the algorithm on still images and video frames, but our end goal requires extracting frames from a live video feed for processing, so this step is still important. It relies on software called Iriun, which is installed both as an app on the phone (from the App Store) and as a program on my laptop. The phone then connects to the laptop as a webcam over a shared Wi-Fi network. CMU's network blocks this, however, since it is a public connection, so it currently only works at my house, which should still suffice for testing. If not, I can always take images with my phone, send them to my laptop, and process them there. The images in this post show what this looks like.
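For reference, pulling frames from the stream could look roughly like the sketch below. It assumes the Iriun feed shows up as an ordinary webcam that OpenCV can open by index; the correct index depends on the machine, so `camera_index=0` is only a placeholder. The helper names `webcam_frames` and `every_nth` are hypothetical.

```python
def webcam_frames(camera_index=0):
    """Yield frames from a webcam-like device until it stops delivering them."""
    # Imported here so the sampling helper below can be used without OpenCV installed.
    import cv2

    capture = cv2.VideoCapture(camera_index)
    try:
        while True:
            ok, frame = capture.read()
            if not ok:  # device missing, disconnected, or stream ended
                break
            yield frame
    finally:
        capture.release()


def every_nth(frames, n):
    """Yield every n-th item of an iterable, starting with the first."""
    for i, frame in enumerate(frames):
        if i % n == 0:
            yield frame
```

Something like `every_nth(webcam_frames(1), 5)` would then hand every fifth frame to the processing step, which may help if the algorithm cannot keep up with the full frame rate.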

Lastly, I worked alongside my team on the design review report document. I worked on the introduction, use-case requirements, and design requirements. It was tricky to differentiate between use-case and design requirements, but thanks to the design template and its directions, as well as Prof. Gary’s clarification on the matter, I was able to come up with both qualitative and quantitative requirements that accurately describe the details of the project.

Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

My progress is currently on schedule. Nish and I will also be working on some Kivy-related items in conjunction with OpenCV, as the schedule calls for. Spring Break also takes a chunk of a week away from us, but our schedule already accounts for this.

What deliverables do you hope to complete in the next week?

Next week is Spring Break, so for the week after we come back, I would like my computer vision algorithm to be able to detect the locations of the white keys and draw bounding boxes over them. I would also like to create and print out the colored fiducials for the fingers. Fiducials are a common technique for identification in computer vision, so there is likely a website that makes creating them easy, since identifying what are essentially the numbers 1-10 with a camera has been done before. There is very likely documentation on this available.
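To make the white-key goal more concrete, here is a dependency-free sketch of one possible approach: threshold a grayscale image, find runs of mostly bright columns, and report each run as an `(x, y, w, h)` box. It assumes the keys appear as bright vertical bands separated by darker lines in a roughly top-down view. The function name `white_key_boxes` and the default threshold are illustrative only, and the real version would more likely use OpenCV's `cv2.threshold`, `cv2.findContours`, and `cv2.boundingRect`.

```python
def white_key_boxes(gray, thresh=200, min_width=2):
    """Return (x, y, w, h) boxes for bright vertical bands in a grayscale grid.

    `gray` is a list of rows, each a list of 0-255 intensity values.
    """
    height, width = len(gray), len(gray[0])
    # A column counts as "white" when more than half its pixels exceed thresh.
    bright_cols = [
        sum(gray[r][c] > thresh for r in range(height)) > height // 2
        for c in range(width)
    ]
    boxes, start = [], None
    # The trailing False is a sentinel that closes a run touching the right edge.
    for c, bright in enumerate(bright_cols + [False]):
        if bright and start is None:
            start = c
        elif not bright and start is not None:
            if c - start >= min_width:
                # Vertical extent: rows with at least one bright pixel in the run.
                rows = [r for r in range(height)
                        if any(gray[r][x] > thresh for x in range(start, c))]
                boxes.append((start, rows[0], c - start, rows[-1] - rows[0] + 1))
            start = None
    return boxes
```

On a synthetic image with three bright bands separated by single dark columns, this returns three boxes, one per band. Real photos would also need handling for perspective, shadows, and the black keys overlapping the upper part of the white keys.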