Great news for us during the last week before spring break: the model could finally run through training! On Friday, Ting and Vasudha met with Tamal to set up the environment on the HH 1305 machines so that we can run YOLO on a GPU, which makes training much faster. When we return from spring break, we will train for 150 epochs (or more) and start fine-tuning with the additional datasets we have collected.

We have also decided to switch from the Jetson Nano to the Jetson Xavier NX after comparing their capabilities. We determined that the Xavier's GPU performance, measured in FPS (frames per second), is better suited to running a CV model as complex as YOLO.
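To make the FPS comparison concrete, here is a minimal Python sketch of how inference throughput can be measured: run a few warm-up frames, then time the remaining frames and divide the frame count by the elapsed seconds. The `measure_fps` helper and the `fake_infer` stand-in are hypothetical, not part of our code or of any Jetson API. On a real GPU, the inference callable must block until the result is ready (for example by calling `torch.cuda.synchronize()` in PyTorch); otherwise the timing captures only kernel launch time.

```python
import time
from typing import Callable, Sequence


def measure_fps(infer: Callable[[object], object],
                frames: Sequence[object],
                warmup: int = 5) -> float:
    """Return average throughput in frames per second for `infer` over `frames`.

    The first `warmup` calls are excluded so one-time costs (e.g. CUDA
    context creation) do not skew the result. `infer` is assumed to block
    until its output is ready.
    """
    if len(frames) <= warmup:
        raise ValueError("need more frames than warmup iterations")
    # Warm-up calls: executed but not timed.
    for frame in frames[:warmup]:
        infer(frame)
    timed = frames[warmup:]
    start = time.perf_counter()
    for frame in timed:
        infer(frame)
    elapsed = time.perf_counter() - start
    return len(timed) / elapsed


if __name__ == "__main__":
    # Hypothetical stand-in for a YOLO forward pass: sleeps ~10 ms per frame.
    def fake_infer(frame):
        time.sleep(0.01)
        return frame

    fps = measure_fps(fake_infer, list(range(55)))
    print(f"{fps:.1f} FPS")  # at most 100 FPS, since each frame takes >= 10 ms
```

Running the same harness with the same model and input size on both boards gives directly comparable FPS numbers.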

We have also finalized our mechanical design (mainly the material and structure of the lid and the swinging door) after discussing it in the staff meeting. Beyond that, most of our work went into finishing the design report. We pinned down the details of the core components mentioned in the design presentation and did more research to justify our design decisions. Overall, the report is a comprehensive overview of our design and experimentation process over the first half of this semester.

Our biggest risk is, once again, the mechanical construction of the lid, due to our lack of knowledge in the area. To mitigate this risk, we have spent 3 hours a week researching and redesigning, and this past week, after talking to the TA and Professor, we updated our design again: a wooden lid frame supported by four legs, so that the bin underneath can be easily removed for emptying. Here is a picture of our latest design: https://drive.google.com/file/d/1kVl_is8CKyrQoq8ftapcW93fXAPjJfYD/view?usp=drivesdk.

As a contingency plan, we have kept several backup designs that we can adapt if our main bin design fails. For the construction itself, we found that the TechSpark staff may be able to help us cut the materials we need, which further lowers the risk. Now that the model runs through training, we are on track for software, but still a little behind mechanically because the materials have not yet arrived and we recently changed the design.

Another risk is not being able to get the code running on the HH 1305 machines. We mitigate this by setting up GCP credits as a fallback.

Next week, our goal is to train for as many epochs as we can on the HH 1305 machines. We also plan to start constructing our structure.

Q: As you’ve now established a set of subsystems necessary to implement your project, what new tools have your team determined will be necessary for you to learn to be able to accomplish these tasks?

- Right after the break, we will start setting up the Jetson and deploying our detection and classification code to it, so working with the Jetson and its attached CIS camera will be a major task.

- We will also migrate the ML model from Google Colab to the HH 1305 ECE machines, which we will access through FastX, a new tool for us.

- Although not immediately, once integration with the Jetson is on track, we will also start mechanical work such as laser cutting to build the mechanical section of Dr. Green.

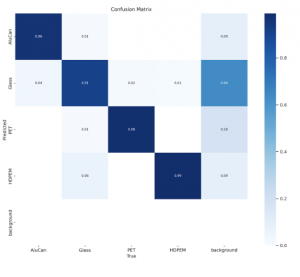

Our accuracy is above 90% for all 4 types of drinking waste after training for 20 epochs.
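The per-class figure above can be read as follows, assuming "accuracy" means the fraction of each type's test samples that are classified correctly (per-class recall). This is a minimal sketch with placeholder labels `type_a` through `type_d`, since the four waste types are not named here; for a detector like YOLO, the training logs may instead report per-class precision, recall, or mAP.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence


def per_class_accuracy(y_true: Sequence[Hashable],
                       y_pred: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Fraction of samples of each true class that were predicted correctly."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    totals = Counter(y_true)  # number of samples per true class
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {cls: correct[cls] / n for cls, n in totals.items()}


if __name__ == "__main__":
    # Hypothetical placeholder labels for the four drink-waste types.
    y_true = ["type_a", "type_a", "type_b", "type_b",
              "type_c", "type_c", "type_d", "type_d"]
    y_pred = ["type_a", "type_a", "type_b", "type_d",
              "type_c", "type_c", "type_d", "type_d"]
    acc = per_class_accuracy(y_true, y_pred)
    print(acc)  # {'type_a': 1.0, 'type_b': 0.5, 'type_c': 1.0, 'type_d': 1.0}
    print(all(a > 0.9 for a in acc.values()))  # False: type_b is below 90%
```

Reporting the minimum over classes, rather than overall accuracy, is what supports a claim like "above 90% for all 4 types."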