After some cross-debugging (setting up the backup Jetson and trying different MIPI/USB cameras), we have finally sorted out the camera issue. On Sunday, I got the USB camera working and capturing videos and images. On Monday, the whole team double-checked the setup and we decided to switch to USB cameras. During lab time, we took measurements to determine the height and angle of the camera. Ting and I also worked on integrating the camera code with the YOLO inference code (detect.py): the script that makes the camera capture images can now trigger detect.py. Our initial rounds of testing produced results that make sense. We are now working on writing the detection results to text files so that we can parse them in code and pipe them to the Arduino; we can already extract the classifications and confidence values and direct them to text files. As I write this on Friday, we are still working on the logic for outputting a single result when multiple items are identified. Overall, progress was much smoother this week than last. We have also taken initial mechanical measurements to better prepare for the interim demo.
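One possible way to handle the single-result logic, sketched under assumptions: detect.py is run with YOLOv5's --save-txt and --save-conf flags, so each label file holds one detection per line as "class x_center y_center width height confidence" with normalized coordinates. The function below keeps only the most confident detection at or above the 0.85 threshold. The class names are hypothetical placeholders (the real order comes from the dataset YAML used in training), and picking the maximum-confidence box is just one policy, not necessarily the one the team settles on.

```python
from typing import List, Optional, Tuple

# Hypothetical placeholder names; the real list and order come from the training data YAML.
CLASS_NAMES: List[str] = ["class_0", "class_1", "class_2", "class_3"]
CONF_THRESHOLD = 0.85  # agreed confidence threshold


def pick_single_result(
    label_text: str, threshold: float = CONF_THRESHOLD
) -> Optional[Tuple[str, float]]:
    """Return (class_name, confidence) for the most confident detection, or None.

    Expects the contents of a YOLOv5 label file written with --save-txt --save-conf:
    one detection per line, "cls x y w h conf".
    """
    best: Optional[Tuple[str, float]] = None
    for line in label_text.splitlines():
        fields = line.split()
        if len(fields) != 6:
            continue  # skip blank lines or lines saved without a confidence column
        class_id = int(fields[0])
        confidence = float(fields[5])
        if confidence < threshold:
            continue  # ignore detections below the agreed threshold
        if best is None or confidence > best[1]:
            best = (CLASS_NAMES[class_id], confidence)
    return best
```

The returned pair (or None when nothing clears the threshold) is a single value that can then be turned into whatever the Arduino expects over serial.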

Ting’s Status Report for 3/25/23

This week, we successfully migrated the scripts that had been running on Colab to the Jetson. On some random water bottle images, the results were okay but not great, though they should still serve our classification purposes since our threshold is 0.85. After this, we spent a lot of time getting the camera set up. It did not go as well as expected: we kept getting a black screen whenever we started the camera stream. We tried multiple commands, but even though the Jetson recognized the camera, none of them could get the stream to show up. I also tried running a script using OpenCV (cv2), but that did not work either. I met with Samuel to debug, and he thought we would be better off switching tracks and using a USB camera instead, which we will try next week. We are on schedule with the hardware: all the servos, the lights, and the speaker are coded up and linked to the Arduino. We drew out specific dimensions for the mechanical part, and Prof. Fedder will cut the wood for us. If we get the camera stream working next week and integrate it with the YOLOv5 detect script, we will be on track with the software.
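For reference, OpenCV on a Jetson usually reaches a CSI camera through a GStreamer pipeline built around nvarguscamerasrc, since a plain device index often does not work for CSI cameras. The sketch below assumes OpenCV was built with GStreamer support; the resolution, frame rate, and helper names are illustrative, and a pipeline like this would not fix a hardware fault such as the one suspected here.

```python
def csi_pipeline(
    sensor_id: int = 0,
    width: int = 1280,
    height: int = 720,
    framerate: int = 30,
    flip_method: int = 0,
) -> str:
    """Build a GStreamer pipeline string that delivers BGR frames from a Jetson CSI camera."""
    return (
        f"nvarguscamerasrc sensor-id={sensor_id} ! "
        f"video/x-raw(memory:NVMM), width=(int){width}, height=(int){height}, "
        f"framerate=(fraction){framerate}/1 ! "
        f"nvvidconv flip-method={flip_method} ! "
        "video/x-raw, format=(string)BGRx ! "
        "videoconvert ! "
        "video/x-raw, format=(string)BGR ! appsink"
    )


def grab_frame(path: str = "snapshot.jpg") -> bool:
    """Try to read one frame from the CSI camera and save it; return True on success."""
    import cv2  # imported here so csi_pipeline() is usable without OpenCV installed

    cap = cv2.VideoCapture(csi_pipeline(), cv2.CAP_GSTREAMER)
    try:
        ok, frame = cap.read()
        if not ok or frame is None:
            return False  # the pipeline opened but produced no frame
        return cv2.imwrite(path, frame)
    finally:
        cap.release()
```

If grab_frame() returns False with this pipeline as well, that points toward the camera or the port rather than the capture code.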

Team Status Report for 3/25/23

This week our team met with other teams to discuss the ethics of our project. The biggest ethical issue our team identified was that if someone were to hack the Jetson, they could alter the built-in rules, which could both misinform children and contaminate recycling. During the discussion, the only concern raised was that our idea might simply not be effective, giving children a false sense of hope when the outlook for recycling is so bleak. Beyond ethics, we worked on the hardware and on integrating the camera with the Jetson.

On the hardware side, we got the physical circuit built, working, and able to accept serial input from a USB-connected computer (a setup that we will migrate to the Jetson).

In terms of mechanical design, a major change we made after talking to the TA and professor was to build the entire frame and door out of wood, avoiding the structural-integrity risk that came with acrylic. We also decided to use a flexible shaft coupling between the servo and the axle for smoother movement.

Aichen and Ting struggled to get the camera working with the Jetson. The Jetson recognized that the camera was present, but when the streaming window popped up, it stayed black and errors were thrown. Ting worked with Samuel to debug, and they concluded it would be better to try a USB camera instead. Samuel lent us the camera his team used for their capstone, and Aichen is testing it with the Jetson to see whether this is the right path to go down.

Our biggest risk now lies in the camera subsystem. Without a working camera that can capture images, we cannot test our detection and YOLO classification algorithm on real-time pictures. Because we are still not sure what the root of the problem is (a broken Jetson or an unsuitable camera type), debugging and setting up the camera may take some time. Another risk lies in the sizing of the inner door. However, this should not be too concerning, as we can always start with extra space and make adjustments after experimentation.

On the positive side, the hardware work has caught up: the whole hardware circuit and the (serial) communication between the Jetson and the Arduino are both done. We are a little behind on mechanics, as there are still more parts to be ordered and it is hard to finalize measurements without physical experimentation. The ML system on its own is on track, since training returned great results and the inference code runs on the Jetson as expected. Soon, we will start fine-tuning. As mentioned under risks, the camera is taking much longer than expected, and it is hard to do live testing of detection and classification without it set up properly.

Looking ahead, our goal for this next week is to catch up on our schedule as much as we can so that we are well prepared for the interim demo. For the demo itself, we hope at minimum to have Jetson classification and hardware operation working; however, our goal by then is to have everything besides the mechanical portion somewhat integrated and working together. This is essentially to buy time for our mechanical parts to be fully ordered and to arrive. After the demo, we can spend the final three weeks fine-tuning and connecting our hardware to the mechanical parts to the best of our abilities.

Vasudha’s Status Report for 03/25/23

This week, in addition to the ethics assignment and lecture, I focused on setting up the hardware circuit and helping debug the camera setup. For the hardware circuit, I initially set up the circuit according to my simulated MVP design. However, I soon found that some of the parts were not exactly the same as the ones in the simulation, and therefore could not be connected the same way or use the same libraries. A big example of this was the neopixel strip, which was actually the “DotStar” version that uses SPI and therefore needs separate clock and data lines. I therefore had to move the strip to pins 11 and 13, which carry the MOSI and SCK (clock) SPI signals on the Arduino Uno. Other than that, the speaker and the servos could be connected as in the simulation. After some debugging, I was able to successfully connect and operate the neopixel strip, servos, and speaker. I then added code to have the Arduino accept and act on serial input, connected the Arduino to my computer over USB, and ran a Python script to check whether the Arduino would accept the input and correctly control the components; after some debugging, this worked as well. The next step will be running the input script on the Jetson itself to make sure the connection between it and the Arduino works the same way.
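A component test like the one described above might look roughly like this sketch using pySerial. The port name, baud rate, and one-byte command codes are all hypothetical and must match whatever the Arduino sketch actually reads; the two-second wait is there because opening the serial port resets an Arduino Uno.

```python
import time
from typing import Dict, Iterable

# Hypothetical one-byte commands; they must match what the Arduino sketch expects.
COMMANDS: Dict[str, bytes] = {
    "open_door": b"O",
    "close_door": b"C",
    "lights_on": b"L",
    "play_sound": b"S",
}


def encode_commands(names: Iterable[str]) -> bytes:
    """Concatenate the byte codes for a sequence of named commands."""
    return b"".join(COMMANDS[name] for name in names)


def run_component_test(port: str = "/dev/ttyACM0", baud: int = 9600, pause_s: float = 1.0) -> None:
    """Send each command in turn, pausing so each servo, light, or sound can be observed."""
    import serial  # pySerial; imported here so encode_commands() works without it

    with serial.Serial(port, baud, timeout=1) as ser:
        time.sleep(2)  # opening the port resets an Uno; wait for the bootloader to finish
        for name in COMMANDS:
            ser.write(encode_commands([name]))
            ser.flush()  # wait until the byte has actually been written
            time.sleep(pause_s)
```

Because only the port name changes, the same script should run unmodified when it is moved from a laptop to the Jetson.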

After setting up the hardware subsystem, I helped Ting and Aichen debug the CSI camera connection. Although the device recognized that a camera was connected, none of the multiple streaming commands we installed and tried seemed to work. The errors these commands produced suggested that something might be wrong with the camera+Jetson connection itself, so we will try the camera on the Nano to see whether the issue lies with the camera or the Jetson port, and temporarily use a USB camera in case the CSI one does not end up working.

I also worked on further fine-tuning our mechanical design and specified measurements for the different parts of the frame and door.

Schedule-wise, we have now caught up on hardware. We are behind on the mechanical side due to constant revisions of the plan and not having all the parts we need for the new design. We are also a little behind on the software side due to unexpected issues with the camera. Therefore, our plan is to focus on fixing and fine-tuning the software and the camera+Jetson subsystem so that it works with the hardware in time for the interim demo, and then to spend more time on the mechanical portion once those parts arrive.

This next week, I plan on testing the Jetson+Arduino integration and helping out with the camera/software debugging so that we get back on track and have everything ready for the demo the following week.

Aichen’s Status Report for 3/25/2023

This week, nearly all of my effort went into setting up and debugging the camera. After getting inference to run on the Jetson, we started setting up the camera (MIPI, connected to the Jetson), which could be “found” but could not capture using various commands or the cv2 module. After the first camera burned out, we switched to the backup camera, which ran into the same trouble. After a few hours of debugging, we decided to try different routes. First, I am currently setting up the backup Jetson Nano to see whether it works with a USB camera. Second, if that works, we can also try the Nano with the MIPI camera. If that also works, the problem likely lies with the Jetson Xavier, and we would have to set up the YOLO environment on the Jetson Nano.

Schedule-wise, the camera issues are a blocker for me, but we will do our best to get the camera subsystem functioning.

There are some code updates in the GitHub repo (same link as posted before).

Besides that, I worked with Vasudha to get the “communication” between the Jetson and the Arduino working. I used a Python script (which will later run on the Jetson) to send serial data to the Arduino. After the whole team’s work, our hardware circuit now behaves according to the serial input it receives.

Vasudha’s Status Report for 03/18/23

Last week, I focused more on helping out with the software side and worked on debugging our GPU setup and code on the 1305 machines. Since many libraries were missing and our code was in Jupyter notebook format rather than a Python file, I went through installing what was missing, fixing dependencies, and trying to get the code to run. This setup is a backup plan to the Google Colab setup that Aichen and Ting are working on, and since the hardware components have arrived, I will be focusing more on the hardware setup to catch up on our schedule. However, I am still working on the software debugging, and hopefully we will get one of these setups working before the end of next week so that we can then migrate the code to the Jetson.

On the hardware side, some of our parts have finally arrived. I started setting up the Arduino and its components, and hope to have them fully set up and operating the basic design before integrating the mechanical aspect. After attempting to connect and operate the neopixels, I found that the type we had purchased did not match the simulated component (it uses SPI communication and therefore requires a different library and an extra pin connection for the clock). As a result, the code written for the simulation did not work as intended and will need to be modified.

In terms of schedule, while we are quite behind in hardware due to the delay in the parts arriving, we caught up a little on the software side, and plan to devote more time to the hardware this week to catch up.

As mentioned, next week I plan on finishing the hardware component assembly and the implementation of the basic design, and on finishing ordering parts for our final mechanical design (which we modified once again after discussion with the professor).

Ting’s Status Report for 3/18/23

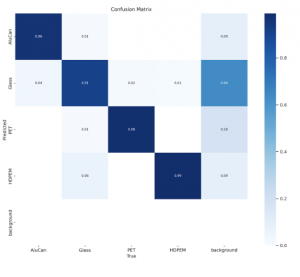

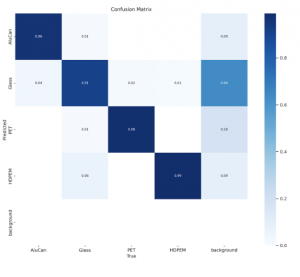

This week I got the training to run on GCP. I created a non-CMU account and got $300 of free GCP credit, which I used to train for 10 and then 20 epochs. The code runs much faster there, with 10 epochs taking only 5 minutes. After 20 epochs, the training accuracy is over 90% for all four types of drinking waste.

I also started setting up the Jetson. I set up an instance so that it could be used with Colab, and was able to connect Colab to the local GPU. However, after some roadblocks when trying to run inference on a random picture of a bottle, and a conversation with Prof. Tamal, we realized that we can simply run inference from the terminal, which we will try next week. We also connected the camera to the Jetson, and will work on the code so that snapshots from the live camera stream become the source for ML inference.
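Running inference from the terminal on camera snapshots could be wired up roughly as below: grab a frame with OpenCV, save it, and call YOLOv5's detect.py on it as a subprocess. This is a sketch, assuming a USB camera at index 0, that it runs from the YOLOv5 repository root, and that the weights sit at YOLOv5's default training output path; for a CSI camera, cv2.VideoCapture would instead need a GStreamer pipeline string.

```python
import subprocess
import sys
from typing import List


def build_detect_command(
    image_path: str,
    weights: str = "runs/train/exp/weights/best.pt",  # assumed default training output
    conf_thres: float = 0.85,
) -> List[str]:
    """Assemble the YOLOv5 detect.py invocation for a single captured image."""
    return [
        sys.executable, "detect.py",
        "--weights", weights,
        "--source", image_path,
        "--conf-thres", str(conf_thres),
        "--save-txt",   # write one label file per image
        "--save-conf",  # include the confidence in each label line
    ]


def capture_and_detect(image_path: str = "capture.jpg", camera_index: int = 0) -> int:
    """Grab one frame from a USB camera, save it, and run detect.py on it."""
    import cv2  # imported here so build_detect_command() works without OpenCV

    cap = cv2.VideoCapture(camera_index)
    ok, frame = cap.read()
    cap.release()
    if not ok:
        raise RuntimeError("camera returned no frame")
    cv2.imwrite(image_path, frame)
    # Must be run from the YOLOv5 repository root so that detect.py resolves.
    return subprocess.run(build_detect_command(image_path)).returncode
```

Launching detect.py per snapshot reloads the model each time, which is simple but slow; loading the model once in a long-running process would be the natural next step if latency matters.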

I believe we are on track in terms of software and hardware coding. We are slightly behind on the Jetson and camera integration; having it working this week would have been better. We are definitely behind on the mechanical portion, as our materials have not arrived yet. We plan to digitize a detailed sketch with measurements to send to Prof. Fedder.

Our accuracy is >90% for all 4 types of drinking waste after training for 20 epochs

Example of YOLOv5 labeling items and giving confidence values. Most are around 90%, which is past our agreed threshold.

Aichen’s Status Report for 3/18/2023

This week, after finishing the ethics assignment, I started setting up the Jetson. There were a few hiccups, such as not having a microSD card reader and the very long download of the “image” (basically the OS for the Jetson). However, my team and the staff have all been very helpful: Ting and I fully set up the Jetson by Thursday and were able to run inference on it on Friday.

I also helped debug as we migrated code to GPUs on both Google Colab and the HH machines. Both now work, and the training results have exceeded expectations.

On Friday, we connected the CSI camera to the Jetson, and I am currently working on capturing images with it and integrating them with the detection code I wrote earlier. On Monday, we will test this part on the Jetson directly. If everything stays on track, the Jetson will be fully integrated with the software subsystem by the interim demo, which is about two weeks away.

For working with the Jetson’s camera, I am planning to use nanocamera, a camera interface package on PyPI implemented in Python 3. I believe this is the best choice, since our detection and classification code is all written in Python and the Jetson naturally supports Python. Here’s a link to the module: https://pypi.org/project/nanocamera/

Team Status Report for 3/18/2023

This week, we started by setting up YOLO to run on GPUs with both Google Colab and the HH machines. In parallel, we also started setting up the Jetson. Once the YOLO code ran on Google Colab, we trained for 20 epochs; the results are shown in the images at the end. In short, the model exceeded 90% accuracy for all 4 types without fine-tuning. Using the weights from training, we did test runs of inference on the Jetson, which also ran to completion. We pursued both tracks because GCP is quite expensive: 10 epochs cost $40. Fortunately, we have reached a decent accuracy for now with 20 epochs.
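As a rough check on why GCP alone was hard to rely on, the figures reported this week ($40 per 10 epochs, and the $300 of free credit from Ting's report) give the following estimate, assuming cost scales linearly with the number of epochs:

$$\frac{\$40}{10\ \text{epochs}} = \$4\ \text{per epoch}, \qquad \frac{\$300}{\$4\ \text{per epoch}} = 75\ \text{epochs}$$

Under the same assumption, the 10-epoch and 20-epoch runs together (30 epochs) would have used about $120 of that credit, leaving room for only a few more runs of similar length.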

For the images shown at the end of this report, the first one shows a sample result where an item is detected and classified. The text shows its classification result, and the number is the confidence level of that classification. Our code can also run on the HH machines now, but because it is already working on the Jetson, we decided to pause that route for now.

Our biggest risk still lies in our limited experience with mechanical design. However, we have communicated this concern to the staff in meetings, and we have professors and TAs who can help us along the way, especially with the woodwork. For now, we are working on design drawings with specific measurements and will share them with the staff soon. Other than that, we have made no design changes.

Due to delayed shipping of parts, we are slightly behind on building the hardware. Otherwise, the software system and its integration with the Jetson are going fine. We plan to finish implementing detection and classification on the Jetson in two weeks and to make sure basic communication between the Jetson and the Arduino works by the interim demo. For communication, the Arduino needs to receive, in real time, a boolean value that the Jetson sends. We will use the Serial library on the Arduino side and the pySerial module (imported in Python as serial) on the Jetson side.
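A minimal sketch of the Jetson side of that boolean link with pySerial, assuming the boolean is sent as a single ASCII '1' or '0' byte (the encoding, port name, and baud rate are hypothetical). The port is opened once and kept open, because reopening it for every message would reset the Uno each time and add a multi-second delay.

```python
import time


def to_serial_byte(value: bool) -> bytes:
    """Encode the boolean as one ASCII byte; the Arduino can compare it against '1'."""
    return b"1" if value else b"0"


class ArduinoLink:
    """Keeps a single serial connection open for low-latency, repeated sends."""

    def __init__(self, port: str = "/dev/ttyACM0", baud: int = 9600) -> None:
        import serial  # pySerial; imported lazily so to_serial_byte() works without it

        self._ser = serial.Serial(port, baud, timeout=1)
        time.sleep(2)  # opening the port resets the Uno; wait for it to boot

    def send(self, value: bool) -> None:
        self._ser.write(to_serial_byte(value))
        self._ser.flush()  # block until the byte has been written out

    def close(self) -> None:
        self._ser.close()
```

On the Arduino side, the matching loop would check Serial.available() and compare the byte returned by Serial.read() against '1'.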

Vasudha’s Status Report for 03/11/23

This past week, I mainly worked on finishing up the report and once again tuning the mechanical design based on feedback from the TA and professor. For the report, I modified and consolidated our diagrams according to feedback to show both our use case requirements and design requirements, and created tables to organize some of the information in the report. The updated design can be found here: https://drive.google.com/file/d/15j3AFNqRnFr1QK4KOhniSD_vvZ2-SMlD/view?usp=sharing

I also worked on defining the abstract, design architecture, and information related to materials, hardware, and mechanical aspects of the project, and helped out with formatting to make sure all parts of the report flowed with each other.

Earlier in the week, Ting and I met with Professor Mukherjee to set up the environment we needed to work on our project on the 1305 GPU machines. Using XQuartz, we set up PyTorch with Miniconda and created a shared AFS folder so that all our members can access the project files. We had to learn this setup process in order to use the GPUs to train our model.

The mechanical design and building aspect is something we will need to keep learning about in order to properly build the bin itself. During class, I worked with Ting to finalize the mechanical measurements for the bin given the newer setup with an axle. After feedback during Wednesday’s meeting, we found that the design we had come up with (all acrylic, with a clamp holding the dowel on the lid frame and the other end glued or drilled into the door) was mechanically weak due to the thinness of the material and the limited space we were attempting to drill into (i.e., height and depth). We then decided to redesign the bin with as little change as possible to avoid switching materials, this time using a wooden lid frame with support legs while keeping the bin and the acrylic door. This way, we can drill into the frame more securely and avoid having the weight of the entire frame rest on the plastic bin below. I then looked into the new materials we needed for this implementation.

In terms of progress, we are behind in regards to the mechanical and hardware portions since our ordered parts have yet to arrive. However, we have simulated the hardware parts and hope that by end of spring break, they will arrive so that we can begin building.

The week after spring break, I hope to have started the mechanical and hardware building and finish ordering any remaining parts from the new design so that we can catch up to our original schedule.