Team status report for 04/30/2022

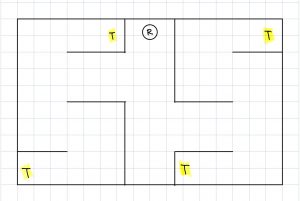

This week, our team focused on integrating the physical components of our system with the software stack. We successfully installed the opencv-contrib library, which is needed for ArUco markers, and integrated the DWA (Dynamic Window Approach) path planning algorithm into the rest of our system. In addition, we set up the environment we will use for both testing and the demo. A picture of the updated diagram and a picture of the real-life setup are shown below. The markers have not been added yet, but there will be five of them throughout this ‘room’. Overall, we are close to finishing; integration and testing are the only ‘real’ tasks left.
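For readers unfamiliar with DWA: each control cycle it samples linear/angular velocity pairs that are reachable within one period given the acceleration limits (the "dynamic window"), forward-simulates each pair for a short horizon, discards pairs whose trajectory hits an obstacle, and scores the rest on heading toward the goal, clearance, and speed. The sketch below is a minimal illustration, assuming point obstacles given in the same frame as the robot pose; the `DWAConfig` limits and the scoring weights are hypothetical, and this is not the DWA implementation we integrated. It also simplifies the original formulation's admissibility test (being able to stop before an obstacle) to a plain collision check over the horizon.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Pose = Tuple[float, float, float]  # x (m), y (m), heading (rad)


@dataclass
class DWAConfig:
    # Hypothetical limits for illustration, not tuned for our robot.
    max_v: float = 0.5         # m/s
    max_w: float = 1.0         # rad/s
    max_acc: float = 0.5       # m/s^2
    max_dw: float = 2.0        # rad/s^2
    dt: float = 0.1            # control period and simulation step (s)
    horizon_steps: int = 15    # look 1.5 s ahead
    v_samples: int = 6
    w_samples: int = 11
    robot_radius: float = 0.2  # m


def wrap(angle: float) -> float:
    """Wrap an angle to [-pi, pi)."""
    return (angle + math.pi) % (2 * math.pi) - math.pi


def simulate(pose: Pose, v: float, w: float, cfg: DWAConfig):
    """Forward-simulate a constant (v, w) command with simple Euler steps."""
    x, y, th = pose
    traj = []
    for _ in range(cfg.horizon_steps):
        th += w * cfg.dt
        x += v * math.cos(th) * cfg.dt
        y += v * math.sin(th) * cfg.dt
        traj.append((x, y, th))
    return traj


def dwa_step(pose: Pose, v0: float, w0: float, goal: Tuple[float, float],
             obstacles: Sequence[Tuple[float, float]],
             cfg: Optional[DWAConfig] = None) -> Tuple[float, float]:
    """Return the best-scoring (v, w) inside the dynamic window, or (0, 0) to stop."""
    cfg = cfg or DWAConfig()
    # Dynamic window: velocities reachable from (v0, w0) within one control period.
    v_lo = max(0.0, v0 - cfg.max_acc * cfg.dt)
    v_hi = min(cfg.max_v, v0 + cfg.max_acc * cfg.dt)
    w_lo = max(-cfg.max_w, w0 - cfg.max_dw * cfg.dt)
    w_hi = min(cfg.max_w, w0 + cfg.max_dw * cfg.dt)

    best_cmd, best_score = (0.0, 0.0), -math.inf  # fallback: stop if nothing is safe
    for i in range(cfg.v_samples):
        v = v_lo + (v_hi - v_lo) * i / (cfg.v_samples - 1)
        for j in range(cfg.w_samples):
            w = w_lo + (w_hi - w_lo) * j / (cfg.w_samples - 1)
            traj = simulate(pose, v, w, cfg)
            clearance = min(
                (math.hypot(px - ox, py - oy) for px, py, _ in traj for ox, oy in obstacles),
                default=math.inf,
            )
            if clearance <= cfg.robot_radius:
                continue  # trajectory collides, so the command is not admissible
            ex, ey, eth = traj[-1]
            heading_err = abs(wrap(math.atan2(goal[1] - ey, goal[0] - ex) - eth))
            # Hypothetical weights: face the goal, stay clear of obstacles, move fast.
            score = -1.0 * heading_err + 0.2 * min(clearance, 2.0) + 0.1 * v
            if score > best_score:
                best_cmd, best_score = (v, w), score
    return best_cmd
```

With a clear path the sketch drives straight at the top of the window; with an obstacle just left of the path it picks a right turn (negative `w`); if every sampled trajectory collides it returns `(0.0, 0.0)`.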

Raymond Xiao’s status report for 04/30/2022

This week, I worked with Jai on setting up the testing and verification environment. Our current environment is a bit different from the one shown in the presentation. Originally, it was 16×16 feet, but due to space constraints we changed it to 10×16 feet. In addition, we modified the layout of the ‘rooms’ and added hallways to make it more challenging to navigate. We also increased the number of ArUco markers in the environment from 3 to 5. Pictures of the setup and the updated diagram will be included in the team status report.

I also worked on integrating the ArUco marker detection code into our development environment. There were a lot of dependency issues that needed to be resolved for the modules to work. Overall, I think we are a bit behind, but we are close to reaching the final solution. This upcoming week, before the demo, I plan to help my teammates with integrating the rest of the system, testing, and debugging.

Raymond Xiao’s status report for 04/23/2022

This week, I worked on CV. I have some preliminary code that correctly identifies whether ArUco markers are in the frame, including frames with no markers or with multiple markers. I am currently working on integrating this into our current system. The plan is to publish this data to a ROS topic so that the Jetson can use it for path planning/SLAM.

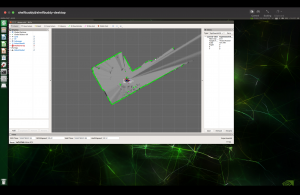

I also helped with setting up and integrating more of the system this week. The SLAM node has been installed and seems to be working correctly. The obstacle mapping appeared accurate when we tested it, but the localization is not tuned well yet.

For this upcoming week, I plan to finish the CV ROS integration and help the rest of my team with setting up our testing environment.

Below is an image of the SLAM visualization along with the robot's current location. As you can see, the data is pretty accurate; the walls and hallway are clearly defined.

EDIT: I forgot to upload a picture of the SLAM visualization. It is below:

Raymond Xiao’s status report for 04/16/2022

I am currently working on setting up the CV portion of our system. The main sensor I will be interfacing with is the camera. This week, we estimated the parameters of our camera using MATLAB (https://www.mathworks.com/help/vision/camera-calibration.html). These parameters are necessary to correct lens distortion and accurately detect our location. The camera will be used to detect ArUco markers, which are robust to errors. I will be using the cv2 module named aruco. For now, I have decided to use the ArUco dictionary DICT_7X7_50. This means that each marker encodes 7×7 bits and there are 50 unique markers that can be generated. I went with the larger marker grid (versus 5×5) since it will make the markers easier for the camera to detect. At a high level, the CV algorithm will detect markers in a given image and identify each one by matching it against the dictionary. Detecting these markers is fast and robust.
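The "matching against the dictionary" step is what makes identification rotation invariant and tolerant of a few misread cells: the bit grid read from a marker is compared with every dictionary code in all four orientations, and the closest code by Hamming distance wins if it is within an error budget. The sketch below illustrates only that lookup step in plain Python, as a simplified illustration rather than cv2.aruco's implementation; the 4×4 `TOY_DICT` is hypothetical (DICT_7X7_50 codes are 7×7), and `identify`, `rotate90`, and `hamming` are illustrative helper names.

```python
from typing import Dict, List, Optional, Tuple

Grid = List[List[int]]  # square grid of marker bits (1 and 0 are the two cell colors)


def rotate90(grid: Grid) -> Grid:
    """Rotate a square bit grid 90 degrees clockwise."""
    n = len(grid)
    return [[grid[n - 1 - c][r] for c in range(n)] for r in range(n)]


def hamming(a: Grid, b: Grid) -> int:
    """Count the cells where two equally sized grids disagree."""
    return sum(x != y for row_a, row_b in zip(a, b) for x, y in zip(row_a, row_b))


def identify(observed: Grid, dictionary: Dict[int, Grid],
             max_errors: int = 1) -> Optional[Tuple[int, int]]:
    """Match an observed bit grid against a dictionary in all four orientations.

    Returns (marker_id, quarter_turns), where quarter_turns is how many clockwise
    90-degree rotations of the observation align it with the stored code, or None
    if no code is within max_errors flipped bits.
    """
    best: Optional[Tuple[int, int, int]] = None  # (distance, marker_id, quarter_turns)
    for marker_id, code in dictionary.items():
        candidate = observed
        for quarter_turns in range(4):
            d = hamming(candidate, code)
            if d <= max_errors and (best is None or d < best[0]):
                best = (d, marker_id, quarter_turns)
            candidate = rotate90(candidate)
    return None if best is None else (best[1], best[2])


# Hypothetical 4x4 dictionary for illustration only (DICT_7X7_50 uses 7x7 codes).
TOY_DICT: Dict[int, Grid] = {
    0: [[1, 0, 0, 0], [1, 1, 0, 0], [0, 0, 1, 0], [0, 1, 1, 1]],
    1: [[0, 1, 1, 0], [1, 0, 0, 1], [1, 1, 1, 0], [0, 0, 0, 1]],
}
```

For example, `identify(rotate90(TOY_DICT[1]), TOY_DICT)` returns `(1, 3)`: the marker is recognized even though it was seen rotated. A larger grid leaves more room for codes that stay far apart in Hamming distance, which is what lets a lookup like this tolerate misread cells.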

We also ordered connectors to connect our battery pack to the Jetson, but it turned out we ordered the wrong size. We have reordered the correct size, and we have also added standoffs and laser-cut new platforms to hold the sensors more stably. In terms of pacing, I think we are a bit behind schedule, but by focusing this week, I think we will be able to get back on track. My goal by the end of this week is to have a working CV implementation that publishes its data to a ROS topic, which can then be used by other nodes in the system.

Raymond Xiao’s status report for 04/10/2022

This week, I am helping to set up the webcam, specifically by calibrating the camera (estimating its parameters). We are modeling it as a standard pinhole camera. We are currently planning to calibrate the camera on our local machine and then use the results on the Jetson. We plan to use this page as a reference for calibration: https://www.mathworks.com/help/vision/camera-calibration.html.
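To make "estimating parameters" concrete: under the pinhole model, calibration recovers the focal lengths and principal point (in pixels) plus lens distortion coefficients, and those are exactly the numbers needed to map a 3D point in the camera frame to a pixel. The sketch below applies them in the forward direction, assuming only the first two radial distortion terms and no skew; the intrinsic values are hypothetical placeholders, not our calibration results, and `project_point` is an illustrative name.

```python
from typing import Tuple


def project_point(
    point_cam: Tuple[float, float, float],
    fx: float, fy: float, cx: float, cy: float,
    k1: float = 0.0, k2: float = 0.0,
) -> Tuple[float, float]:
    """Project a 3D point (camera frame, metres) to pixel coordinates (u, v)."""
    X, Y, Z = point_cam
    if Z <= 0:
        raise ValueError("point must be in front of the camera (Z > 0)")
    # Ideal pinhole projection onto the normalized image plane (Z = 1).
    x, y = X / Z, Y / Z
    # Radial lens distortion: scale by 1 + k1*r^2 + k2*r^4.
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    x_d, y_d = x * scale, y * scale
    # Intrinsics: focal lengths and principal point in pixels; skew ignored.
    return fx * x_d + cx, fy * y_d + cy


# Hypothetical intrinsics for a 640x480 webcam, NOT our calibration results.
FX, FY, CX, CY = 600.0, 600.0, 320.0, 240.0
```

A point on the optical axis lands on the principal point `(320, 240)`, and a positive `k1` pushes off-axis points slightly outward. Correcting distortion (for example with OpenCV's `cv2.undistort`) amounts to inverting the distortion step using the calibrated coefficients.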

We are also setting up our initial testing environment right now. For now, we are using cardboard boxes since they are inexpensive and easy to set up. Depending on how much time is left, we may switch to sturdier walls.

Team status report for 04/02/2022

This week, our team worked on setting up the acrylic stand that the sensors and the Jetson will be mounted on. The supports are currently wooden, but we plan to use standoffs in the future. We have most of our initial system components set up for the demo, which is great. As a team, we are all pretty much on track with our work. Next week, we will continue working on the iRobot programming and on implementing a module for cv2 ArUco tag recognition.

Raymond Xiao’s status report for 04/02/2022

This week, I helped set up for the demo this upcoming week with my team. Specifically, I worked with Jai on setting up the iRobot sensor mounting system. We used two levels of acrylic along with wooden supports for now (we have ordered standoffs for further support). Currently, the first level will house the Xavier and webcam, while the upper level will house the LIDAR sensor. I have also been reading the iRobot specification document in more detail, since some of its components are not supported in the pycreate2 module. This week, I plan on helping set up the rest of the system for the demo as well as writing a wrapper module for the ArUco code that we will be using for human detection.

Raymond Xiao’s status report for 03/27/22

This week, I helped set up more packages on the NVIDIA Jetson. We are currently using ROS Melodic and are working on installing OpenCV and Python so that we can use the pycreate2 module (https://github.com/MomsFriendlyRobotCompany/pycreate2) on the Jetson. We have been testing Python code on our local machines and it has been working well, but for the final system, the code will need to run on the Jetson, which has a different OS and dependencies. We ran into some issues installing everything (we specifically need OpenCV 4.2.0) and are currently working on fixing them. OpenCV 4.2.0 is required since it contains the ArUco package, whose markers will stand in for humans. We are using this form of identification since it is fast, rotation invariant, and provides camera pose estimation as well. I think I am on track with my work. Next week, I plan to help finish installing everything we need on the Jetson so that we have a stable environment and can start software development in earnest.

Raymond Xiao’s status report for 03/19/2022

This week, I focused on reading the iRobot Create 2 specification manual, which gives more information on how to program the robot. I also looked more into pycreate2, a Python package specifically for interfacing with the Create 2 over USB (link: https://pypi.org/project/pycreate2/). I wrote some test code using this library on my local machine and managed to get the robot to move in a simple sequence. For example, to move the robot forward, the Drive opcode (137) is sent followed by four data bytes. The first two bytes specify the velocity, while the last two bytes specify the turning radius. To drive forward at 100 mm/s, send 100 (0x0064) as the two velocity bytes and then 0x7FFF, a special value meaning "drive straight", as the radius bytes. In terms of scheduling, I think I am on track. This upcoming week, I plan to have code that can also interact with the Roomba’s sensors, since that will be necessary for other parts of the design (such as path planning).
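pycreate2 takes care of the serial framing for us, but it helps to see the byte layout from the paragraph spelled out. The sketch below encodes a Drive command as signed 16-bit values sent high byte first, and builds a single-packet sensor request for the bump/wheel-drop packet that the sensor work will need. The opcode values, packet ID, and bit assignments are as we read them in the Create 2 Open Interface specification and should be double-checked against the manual; the function names are illustrative, not part of pycreate2.

```python
import struct

# Values as we read them in the Create 2 Open Interface spec (verify against the manual).
DRIVE = 137                 # Drive: [137][vel hi][vel lo][radius hi][radius lo]
SENSORS = 142               # Sensors: [142][packet id]
BUMPS_AND_WHEEL_DROPS = 7   # 1-byte sensor packet
STRAIGHT = 0x7FFF           # special radius: drive straight (0x8000, i.e. -32768, also accepted)


def drive_command(velocity_mm_s: int, radius_mm: int) -> bytes:
    """Encode a Drive command; both values are signed 16-bit, high byte first."""
    if not -500 <= velocity_mm_s <= 500:
        raise ValueError("velocity must be within [-500, 500] mm/s")
    if radius_mm not in (STRAIGHT, -0x8000) and not -2000 <= radius_mm <= 2000:
        raise ValueError("radius must be within [-2000, 2000] mm or a special value")
    return struct.pack(">Bhh", DRIVE, velocity_mm_s, radius_mm)


def sensor_request(packet_id: int) -> bytes:
    """Ask the robot to send back a single sensor packet."""
    return bytes([SENSORS, packet_id])


def parse_bumps_and_wheel_drops(value: int) -> dict:
    """Decode the bit flags of sensor packet 7."""
    return {
        "bump_right": bool(value & 0x01),
        "bump_left": bool(value & 0x02),
        "wheel_drop_right": bool(value & 0x04),
        "wheel_drop_left": bool(value & 0x08),
    }
```

`drive_command(100, STRAIGHT)` produces the bytes `137, 0x00, 0x64, 0x7F, 0xFF`, matching the forward-at-100 mm/s example above. In practice the robot also has to be put into Safe or Full mode (Start, opcode 128, followed by Safe, opcode 131) before it will accept Drive commands.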