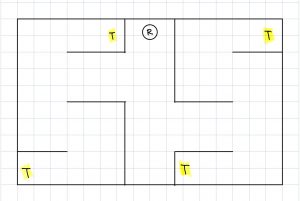

This week, our team focused on integrating the physical components of our system with the software stack. We successfully installed the opencv-contrib library (which is needed for ArUco markers) and integrated the DWA path planning algorithm into the rest of our system. In addition, we set up the environment we will be using for both testing and the demo. A picture of the updated diagram and a picture of the real-life setup are included with this report. The markers have not yet been added, but there will be 5 of them throughout this ‘room’. Overall, we are close to finishing; integration and testing are the only ‘real’ tasks left.

Raymond Xiao’s status report for 04/30/2022

This week, I worked with Jai on setting up the testing and verification environment. Our current environment is a bit different from the one shown in the presentation. Originally, the size was 16×16 feet but due to size constraints, we changed it to 10×16 feet. In addition, we modified the layout of the ‘rooms’ and added hallways to make it more challenging to navigate. We also increased the number of ArUco markers in the environment from 3 to 5. Pictures of the setup as well as the updated diagram will be included in the team status report.

I also worked on integrating the ArUco marker detection code into our development environment. There were a lot of dependency issues that needed to be resolved for the modules to work. Overall, I think we are a bit behind, but we are close to reaching the final solution. For this upcoming week before the demo, I plan to help my teammates with integrating the rest of the system, testing, and debugging.

Jai Madisetty’s Status Report for 4/23

This week, I worked mainly on getting the path planning software coded up. However, due to a problem with getting a SLAM cartographer integrated with ROS, I was not able to take into consideration inputs from the SLAM subsystem. Thus, I also helped Keshav with the SLAM integration. We did eventually get the cartographer working; however, the localization of the robot within the created map is very inaccurate. We do plan on using sensor readings from the robot (odometer and possibly infrared sensors) to improve the localization. After we improve the localization, I plan on utilizing SLAM inputs (frontier of open areas in map) in the path planning for robustness.
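As a rough illustration of the "frontier of open areas" idea mentioned above, here is a minimal sketch that finds frontier cells in an occupancy grid. It assumes a frontier cell is a free cell with at least one unknown 4-connected neighbor, and it assumes the common ROS `OccupancyGrid` value convention (-1 unknown, 0 free, 100 occupied). The function name `find_frontier_cells` and the grid layout are hypothetical, not taken from our code.

```python
# Cell values follow the common ROS OccupancyGrid convention (assumption).
UNKNOWN, FREE, OCCUPIED = -1, 0, 100


def find_frontier_cells(grid):
    """Return (row, col) of every free cell that touches an unknown cell."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            # Check the four direct neighbors for unexplored space.
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break
    return frontiers


# Hypothetical 4x4 map: walls on three sides, unexplored space on the right.
example_grid = [
    [OCCUPIED, OCCUPIED, OCCUPIED, OCCUPIED],
    [OCCUPIED, FREE, FREE, UNKNOWN],
    [OCCUPIED, FREE, FREE, UNKNOWN],
    [OCCUPIED, OCCUPIED, OCCUPIED, OCCUPIED],
]
frontier = find_frontier_cells(example_grid)
```

A planner could then treat the nearest frontier cell as an intermediate goal when no marker is in view, though how the frontier is actually used would depend on the final design.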

Team Status Report for 4/23

The bulk of this week was devoted to integration, mainly with SLAM. We faced many linker errors when trying to integrate the Google Cartographer system with our system in ROS. Fortunately, we got this working, but it ate into a lot of our time this week. Overall, we have individual sub-systems working (detecting ArUco markers and path planning); however, we have not yet integrated these into our system due to the bottleneck of integrating the Google Cartographer system with ROS. Thus, it is difficult to say when we can start overall system tests. There may very well be a lot of tweaking to do with the software after the integration of the many parts. We are planning to come into lab tomorrow to work on integration.

Raymond Xiao’s status report for 04/23/2022

This week, I worked on CV. I have some preliminary code that correctly identifies whether an ArUco marker is in the frame, including the cases where the frame contains no markers or multiple markers. I am currently working on integrating this into our system. The plan is to publish this data to a ROS topic so that the Jetson can use it for path planning/SLAM.

I also helped with setting up and integrating more of the system this week. The SLAM node has been installed and seems to be working correctly. The obstacle mapping seemed accurate when we tested it, but the localization is not tuned well.

For this upcoming week, I plan to finish the CV ROS integration and help the rest of my team with setting up our testing environment.

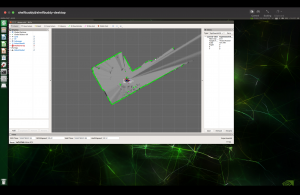

Below is an image of the SLAM visualization as well as the current location the robot is in. As you can see, the data is pretty accurate; the walls and hallway are clearly defined.

EDIT: I forgot to upload a picture of the SLAM visualization. It is below:

Keshav Sangam’s Status Report for 4/23

This week, we focused on integration. As of right now, SLAM is being integrated with path planning, but there are some critical problems we are facing. While the map that our SLAM algorithm builds is accurate, the localization accuracy is extremely poor. This makes it impossible to actually path plan. The poor localization accuracy stems from the fact that when we simulate our lidar/odometry data to test our SLAM algorithm, there is too little noise in the data to show problems with bad performance; thus, it is difficult to tune the algorithm without directly running it on the robot. Manually introducing noise in the simulated data did not help improve the tuning parameters. To solve this problem, we are going to record a ROS bag (a collection of all messages being published across the ROS topics) so that we don’t have to generate simulated data, instead taking sensor readings directly from the robot. By recording this data into a bag, we can replay the data and tune the SLAM algorithm from this. I believe we are still on track since we have a lot of time to optimize these parameters. We also worked on the final presentation slides, and started writing the final report.

Raymond Xiao’s status report for 04/16/2022

I am currently working on setting up the CV portion of our system. The main sensor that I will be interfacing with is the camera. This week, we used MATLAB to estimate the parameters of the camera we will be using (https://www.mathworks.com/help/vision/camera-calibration.html). These parameters are necessary to correct any distortions and accurately detect our location. The camera will be used to detect ArUco markers, which are fast to detect and robust to errors. There is a cv2 module named aruco which I will be using. As of right now, I have decided on using the ArUco dictionary DICT_7X7_50. This means that each marker will be 7×7 bits and there are 50 unique markers that can be generated. I decided to go with a larger marker (versus 5×5) since it will be easier for the camera to detect. At a high level, the CV algorithm will detect markers in a given image using the dictionary as a baseline.
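A minimal sketch of the detection step described above, assuming the `cv2.aruco` module from opencv-contrib is installed. The function names `marker_ids_from_result` and `detect_marker_ids` are hypothetical. Because the exact OpenCV version is not stated here, the sketch covers both the older module-level `detectMarkers` function and the newer `ArucoDetector` class. Camera calibration and distortion correction are left out.

```python
def marker_ids_from_result(ids):
    """Flatten the `ids` value returned by ArUco detection into a sorted list.

    OpenCV returns None when no marker is found, otherwise an N x 1 array.
    """
    if ids is None:
        return []
    return sorted(int(marker_id) for row in ids for marker_id in row)


def detect_marker_ids(frame_bgr):
    """Return the IDs of all DICT_7X7_50 markers visible in a BGR image."""
    import cv2  # imported here so the helper above works without OpenCV

    gray = cv2.cvtColor(frame_bgr, cv2.COLOR_BGR2GRAY)
    dictionary = cv2.aruco.getPredefinedDictionary(cv2.aruco.DICT_7X7_50)
    if hasattr(cv2.aruco, "ArucoDetector"):
        # Newer OpenCV releases expose a detector object.
        detector = cv2.aruco.ArucoDetector(
            dictionary, cv2.aruco.DetectorParameters()
        )
        _corners, ids, _rejected = detector.detectMarkers(gray)
    else:
        # Older opencv-contrib releases use a module-level function.
        _corners, ids, _rejected = cv2.aruco.detectMarkers(gray, dictionary)
    return marker_ids_from_result(ids)
```

With a frame grabbed from the webcam, `detect_marker_ids(frame)` would return an empty list when no marker is visible and a list of one or more IDs otherwise.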

We also ordered some connectors to attach our battery pack to the Jetson, but it turns out we ordered the wrong size. We have reordered the correct size. We have also used standoffs and laser cut new platforms to house the sensors for more stability. In terms of pacing, I think we are a bit behind schedule, but by focusing this week, I think we will be able to get back on track. My goal by the end of this week is to have a working CV implementation that publishes its data to a ROS topic, which can then be used by other nodes in the system.

Keshav Sangam’s Status Report for 4/16

This week, I focused on getting the robot to run headlessly, with all the sensors, the battery, and the Jetson housed entirely on top of the Roomba. Jai started by CADing and laser cutting acrylic panels with holes for the standoffs, the webcam, and the LIDAR. After getting the battery, we realized that the DC power jack on the Jetson had a different inner diameter than the plug on the cable that came with the battery, which we fixed with an adapter. Once we confirmed the Jetson could be powered by our battery, I worked on setting up the Jetson VNC server for headless control. Finally, I modified the Jetson’s udev rules to assign each USB device a static device ID. Since ROS nodes depend on knowing which physical USB device is assigned to which ttyUSB device, I created symlinks to ensure that the LIDAR is always a ttyLIDAR device, the webcam a ttyWC device, and the iRobot itself a ttyROOMBA device. Here’s a video of the Roomba moving around:

https://drive.google.com/file/d/1U68VRGrZiqcw5ZbmErxF-N3jlftXx110/view?usp=sharing

As you can see, the Roomba has a problem with “jerking” when it initially starts to accelerate in a direction. This can be fixed by adding a weight to the front of the Roomba to ensure equal distribution (for example, moving the Jetson battery mount location could do this), since the center of mass is currently at the back end of the Roomba.

We are making good progress. Ideally, we will be able to construct a map of the environment from the Roomba within the next few days. We are also working in parallel on the OpenCV + path planning.
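For reference, the static device naming described earlier in this report relies on udev rules that match a USB device by its vendor and product IDs and attach a symlink. The sketch below builds such rule lines in Python. The helper name `udev_symlink_rule` and the IDs in the example are hypothetical placeholders, not the values from our Jetson, and the default `tty` subsystem is an assumption that may not fit every device (a webcam, for example, usually appears under a different subsystem).

```python
def udev_symlink_rule(vendor_id, product_id, symlink, subsystem="tty"):
    """Build one udev rule line that adds a stable /dev symlink for a USB device."""
    return (
        f'SUBSYSTEM=="{subsystem}", '
        f'ATTRS{{idVendor}}=="{vendor_id}", '
        f'ATTRS{{idProduct}}=="{product_id}", '
        f'SYMLINK+="{symlink}"'
    )


# Placeholder IDs; the real ones can be read with a tool such as `lsusb`.
lidar_rule = udev_symlink_rule("1234", "abcd", "ttyLIDAR")
roomba_rule = udev_symlink_rule("5678", "ef01", "ttyROOMBA")
```

Lines like these would typically be saved in a `.rules` file under `/etc/udev/rules.d/`, after which the ROS launch files can refer to `/dev/ttyLIDAR` and `/dev/ttyROOMBA` regardless of the order in which the devices were plugged in.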

Team Status Report for 4/16

This week, our team mainly worked to have all of our components housed on the iRobot to run headlessly. Now that we have the robot ready with all of its sensors fixed, we can start to test the Hector SLAM with the robot as a medium and even try to provide odometry data as an input to the Hector SLAM so we can avoid the pose estimation errors (the loop closure problem). After that, we can integrate the path planning algorithm, and after some potential debugging, from there we can start testing with a cardboard box “room” to map. As a team, we are on track with our work.

Jai Madisetty’s Status Report for 4/16

The bulk of the work this past week was dedicated to housing the devices, since getting the right dimensions and parts took some trial and error. I am currently working on the path planning portion of this project. The task is a lot more time-consuming than I imagined, since there are a multitude of inputs to consider when coding the path planning algorithm. Since our robot deals solely with unknown indoor environments, I am implementing the DWA (dynamic window approach) algorithm for path planning. If time permits, I would consider looking more into a D* algorithm, which is similar to A* but is “dynamic” in that the parameters of the heuristic can change mid-process.
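To make the DWA idea concrete, here is a minimal sketch of one planning step, not our actual implementation. It samples velocities reachable within one control period (the dynamic window), rolls each one forward with a simple unicycle model, discards trajectories that come too close to an obstacle, and keeps the best-scoring pair. All limits, weights, and the robot radius are hypothetical values chosen for illustration, as are the names `simulate` and `dwa_step`.

```python
import math


def simulate(x, y, theta, v, w, dt=0.1, steps=20):
    """Roll a unicycle model forward with constant (v, w); return visited poses."""
    poses = []
    for _ in range(steps):
        x += v * math.cos(theta) * dt
        y += v * math.sin(theta) * dt
        theta += w * dt
        poses.append((x, y, theta))
    return poses


def dwa_step(pose, vel, goal, obstacles, *, v_max=0.5, w_max=1.5,
             a_max=0.5, alpha_max=3.0, dt=0.1, robot_radius=0.17, samples=11):
    """Pick the (v, w) command for the next control period.

    pose is (x, y, theta), vel is the current (v, w), goal is (x, y), and
    obstacles is a list of (x, y) points. All default limits are assumptions.
    """
    x, y, theta = pose
    v0, w0 = vel
    # Dynamic window: velocities reachable given the acceleration limits.
    v_lo, v_hi = max(0.0, v0 - a_max * dt), min(v_max, v0 + a_max * dt)
    w_lo, w_hi = max(-w_max, w0 - alpha_max * dt), min(w_max, w0 + alpha_max * dt)

    best, best_score = (0.0, 0.0), -math.inf  # stop if nothing is admissible
    for i in range(samples):
        v = v_lo + (v_hi - v_lo) * i / (samples - 1)
        for j in range(samples):
            w = w_lo + (w_hi - w_lo) * j / (samples - 1)
            traj = simulate(x, y, theta, v, w, dt)
            clearance = min(
                (math.hypot(px - ox, py - oy)
                 for px, py, _ in traj for ox, oy in obstacles),
                default=math.inf,
            )
            if clearance <= robot_radius:
                continue  # this trajectory would hit an obstacle
            end_x, end_y, _ = traj[-1]
            goal_dist = math.hypot(goal[0] - end_x, goal[1] - end_y)
            # Reward progress to the goal, clearance, and forward speed.
            score = -goal_dist + 0.1 * min(clearance, 1.0) + 0.1 * v
            if score > best_score:
                best, best_score = (v, w), score
    return best


# Goal straight ahead, nothing in the way: expect full allowed speed, no turn.
v_cmd, w_cmd = dwa_step((0.0, 0.0, 0.0), (0.0, 0.0), (2.0, 0.0), [])
# Obstacle directly in front of the robot: every candidate is rejected.
blocked_cmd = dwa_step((0.0, 0.0, 0.0), (0.0, 0.0), (2.0, 0.0), [(0.1, 0.0)])
```

In a real system the obstacle points would come from the LIDAR scan or the SLAM map, and the scoring weights would need tuning on the robot.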

Given we only have a week left before final presentations, we are planning on getting path planning fully integrated by Wednesday, and then running tests up until the weekend.