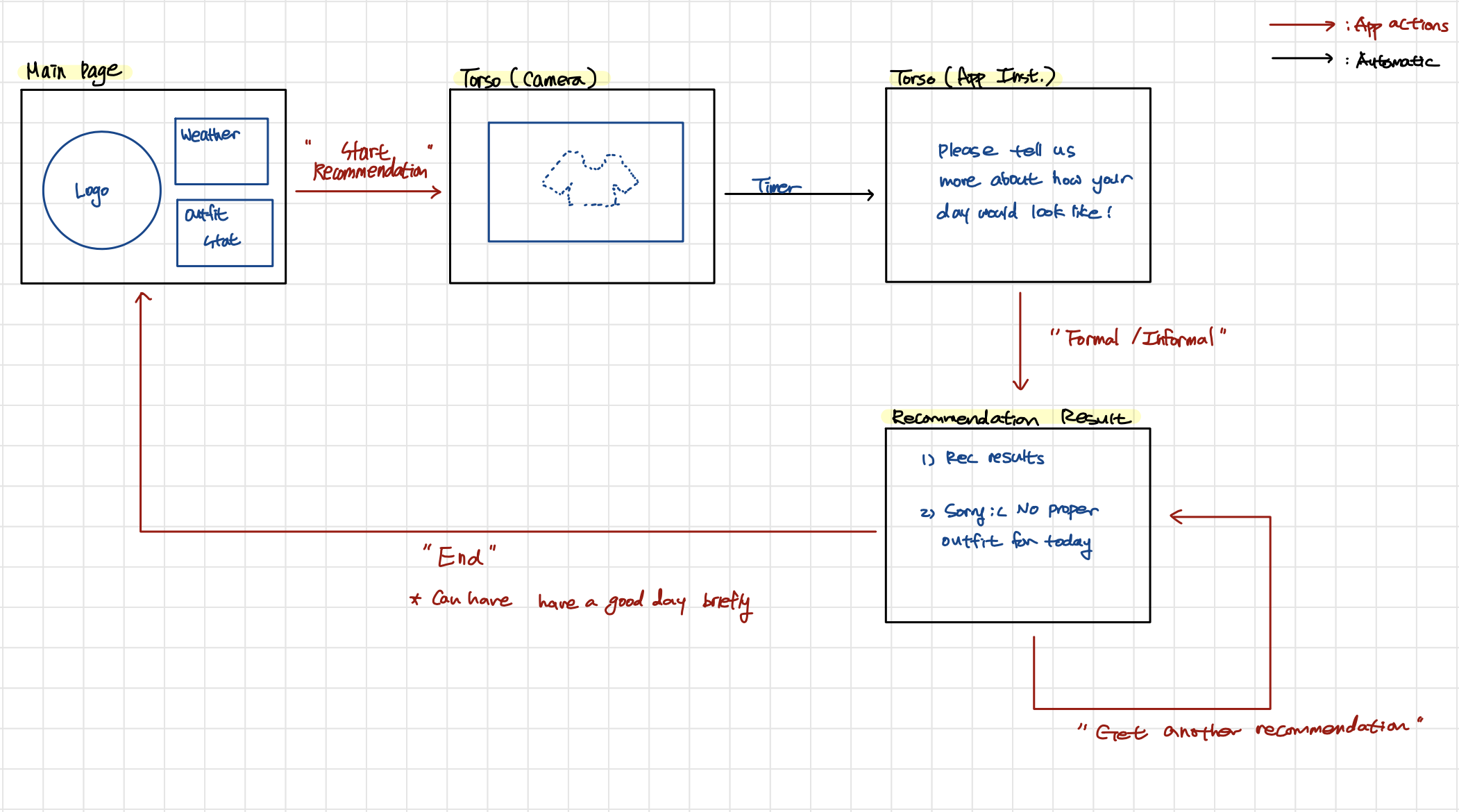

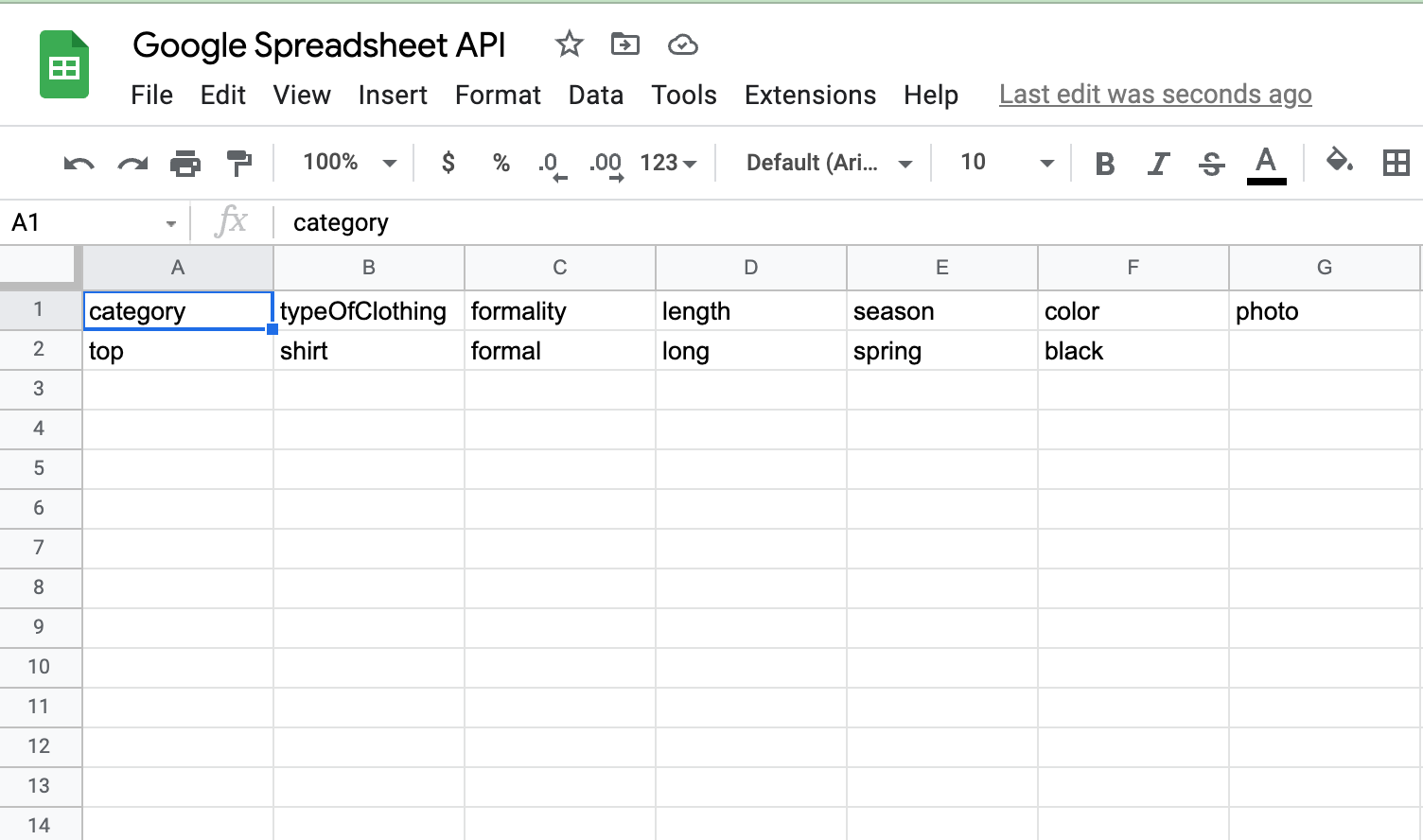

This week I worked on implementing the mirror UI and integrating it with the rest of the project. Last week, the mirror UI did not work on the Jetson, so I set up a virtual machine on my own laptop with exactly the same environment as the Jetson and worked there instead. This let me build a distributable Linux version of the mirror UI Electron app. However, although the app ran without issues on the virtual machine, it still did not run on the Jetson, because some of the app's requirements conflicted with those of the rest of the project.

Given this, and given that the mirror UI needs to communicate with the mobile app, I decided to turn the mirror UI into a web app. We originally chose a desktop app over a web app because OpenPose and color recognition already placed a heavy load on the Jetson, which slowed down connectivity. However, in our tests with a web browser running, the OpenPose and color recognition pipelines we have now appeared to be compatible with a web app. A web app also makes it easier to connect the UI to our mobile app. I have built the basic structure of a web app that is compatible with the mobile app, and I will test it with Jeremy's mobile app shortly.

Although we are behind our original schedule, we are now wrapping up and are close to the end of the project. Considering the deadlines for the poster and the final demo, I think we are on a good pace.

For the upcoming week, I will finish the integration and prepare for the final demo.