Prior to spring break, I received the micro SD card from our order and configured it to run Ubuntu on our Jetson Xavier NX. I wrote the setup image for the Jetson Xavier, downloaded from the Nvidia website, onto the card. Once we got the camera set up and connected to the Xavier, I spent about a week getting the live camera feed working. At first I could only get it to take pictures, but then I realized the display connection cable was the problem. After switching to an HDMI cable, we were able to get the live video feed showing.

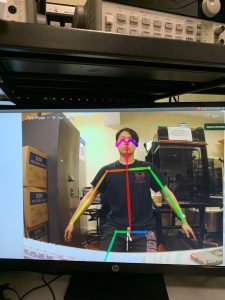

Once we got the live video feed working, the next step was to get OpenPose working. We downloaded the correct files and tried to follow the instructions for setting up OpenPose. I spent time after class on both Monday and Wednesday this week trying to set it up, but it seemed like the micro SD card we bought did not have enough storage. So I brought a 64GB micro SD card (what we had originally was 16GB) and configured it to work on our Xavier, but this still didn't fix the problem of compiling OpenPose. Jeremy and I spent 3 hours on Thursday trying to install the correct prerequisites and drivers, but the system still could not find or recognize the correct library. We hope to try again on Monday, but in case OpenPose does not work, we have a secondary plan in place. We found a program similar to OpenPose called trt-pose, which can detect limbs and gestures in real time just like OpenPose, but only for one person. This should be sufficient for what we need, since only one person will be standing in front of the mirror.

Jeremy and I were also able to find scrap wood in the TechSpark woodshop that we can use for our project. The pieces are suitable for the frame we will be building for the mirror.

In terms of progress, I would say we are on track. The next couple of weeks will really be the time when we need to grind out both the software and hardware components of our project, but I'm confident we can get it done.

Live video feed from Arducam