This week I’ve been brainstorming the ethical concerns our project raises and how we might counter them. Since our project involves a camera, my main concern is that it could contribute to a surveillance state. There is also the risk of someone with malicious intent hacking our system and rerouting traffic, or specific vehicles, into dangerous situations or otherwise causing unnecessary harm.

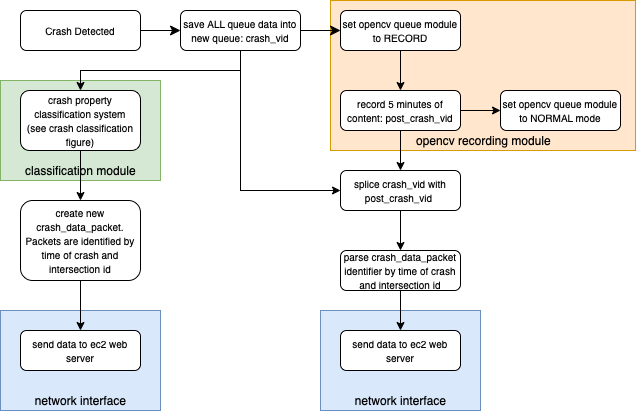

I think there are two simple fixes. For the surveillance concern, we make sure that the only parts of a camera feed ever stored or used for further processing are the segments involved in a car crash. Everything else in the live feed is discarded immediately and never stored, which gives us a basic safeguard against the video being used for anything other than crash detection.

For the malicious-intent concern, one easy safeguard is that every time a rerouting suggestion is pushed out, we recheck that it actually improved traffic flow according to our traffic rerouting algorithm. If a suggestion is making the situation worse, or simply not helping, we can shut the system down immediately.

Of course, these procedures are far from fail-safe. Making our system truly secure could be a semester-long task of its own. Still, this week’s focus on ethics pushed us to think these issues through, and we now have some rudimentary first steps for countering them in our system.
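To make the two safeguards concrete, here is a minimal sketch of how they might look in code. It is not our actual implementation. The class names, the `context_frames` parameter (keeping a few pre-crash frames in memory so a stored clip has some lead-up), and the use of average travel time as the traffic-flow measure are all assumptions made for illustration.

```python
from collections import deque


class CrashClipBuffer:
    """Keeps recent frames in memory only; stores a clip only when a crash is flagged.

    Hypothetical helper: `context_frames` (how many pre-crash frames to keep
    in memory) is an assumption, not something our design has fixed yet.
    """

    def __init__(self, context_frames: int = 30):
        # Old frames fall off the left end automatically and are never stored.
        self._recent = deque(maxlen=context_frames)
        self._open_clip = None
        self.stored_clips = []

    def add_frame(self, frame, crash_detected: bool) -> None:
        if crash_detected:
            if self._open_clip is None:
                # Start a new clip with the short in-memory lead-up.
                self._open_clip = list(self._recent)
                self._recent.clear()
                self.stored_clips.append(self._open_clip)
            self._open_clip.append(frame)
        else:
            # Not part of a crash: keep only in the rolling buffer.
            self._open_clip = None
            self._recent.append(frame)


class ReroutingGuard:
    """Shuts rerouting down if a suggestion did not improve traffic flow.

    Assumption: flow is measured as average travel time, where lower is better.
    """

    def __init__(self):
        self.active = True

    def check(self, travel_time_before: float, travel_time_after: float) -> bool:
        # No improvement (or a worse result) disables the system.
        if travel_time_after >= travel_time_before:
            self.active = False
        return self.active
```

In this sketch the guard stays off once it trips, so a person would have to review what happened before rerouting resumes. That is a design choice for the example, not a settled decision.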